
SolarNode Managed Deploy Guide

The SolarNode Deploy Guide describes how to deploy the SolarNode platform using a base platform and additional components. This guide describes how to use the S3 Setup and S3 Backup plugins to make managing a set of similar nodes easier by storing their settings on Amazon S3.

The goal of this S3-based deployment strategy is to create a customized SolarNode OS image that will:

- download and install the latest setup package available at first boot

- perform backup and restore operations to and from S3

- respond to a SolarNetwork instruction to update to, or roll back to, a specific setup package version

To accomplish those goals, this guide shows how to set up an S3 bucket with the necessary setup metadata and resources, and how to customize a standard SolarNode OS image so that it uses that S3 bucket from the start.

The S3 Setup plugin looks for versioned package metadata files using a `solarnode-backups/setup-meta/` prefix by default. This prefix can be customized, and doing so is recommended unless you have very basic needs. The package metadata lists the S3 objects that are included in the package. The objects are simply tar archives whose file contents are stored with paths relative to the SolarNode home directory (typically `/home/solar`).
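To make "paths relative to the SolarNode home directory" concrete, here is a minimal Python sketch of how such a package archive could be built. The function name `build_setup_archive` and the example paths are hypothetical; the only thing it illustrates is that each archive member name is relative to the home directory (for example `conf/services/...`), not an absolute `/home/solar/...` path.

```python
import tarfile
from pathlib import Path


def build_setup_archive(home, rel_paths, out_file):
    """Create a gzipped tar archive of `rel_paths` (relative to `home`).

    Member names are stored relative to `home`, so extracting the archive in
    the SolarNode home directory puts every file back in the same place.
    Directories are added recursively. Returns the archive's member names.
    """
    home = Path(home)
    with tarfile.open(out_file, "w:gz") as tar:
        for rel in rel_paths:
            # arcname drops the home-directory prefix from the stored name
            tar.add(home / rel, arcname=rel)
    with tarfile.open(out_file, "r:gz") as tar:
        return tar.getnames()
```

From a shell, `tar -czf common-config-ebox.tgz -C /home/solar conf/services` produces an archive with the same kind of relative member names.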

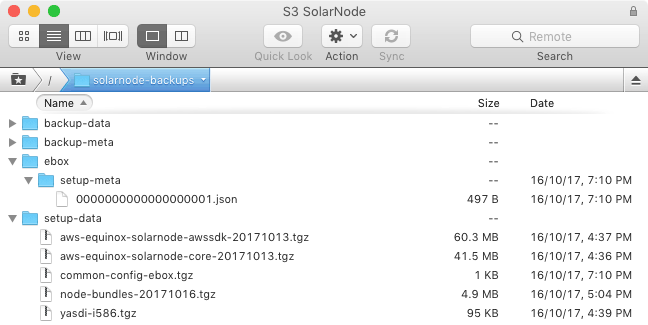

Here's a screenshot of an example S3 bucket with the described setup:

Note that the screenshot uses a custom setup package prefix, `solarnode-backups/ebox/`. This configuration is specific to the eBox SolarNode image; another configuration group, such as `solarnode-backups/pi/`, could be created for a Raspberry Pi SolarNode image.

The setup package metadata files are named with a version that sorts alphabetically. In the screenshot above, the only package version available is `0000000000000000001.json`, so the next package versions could be named `0000000000000000002.json`, `0000000000000000003.json`, and so on. The versions themselves don't matter, as long as you keep in mind that when SolarNode installs the "latest version", it picks the last version when the names are sorted alphabetically in ascending order.
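The "latest version" rule can be sketched in Python as below. This is an illustration of the sorting rule described above, not the plugin's code; `latest_version_key` and `next_version_name` are hypothetical helpers, and keeping the number's width fixed is this sketch's own safeguard.

```python
import re

_VERSION_NAME = re.compile(r"^(\d+)\.json$")


def latest_version_key(keys, meta_prefix):
    """Return the metadata key that sorts last (ascending, alphabetical)
    under `meta_prefix`, or None if there are no metadata files."""
    candidates = sorted(
        k for k in keys if k.startswith(meta_prefix) and k.endswith(".json")
    )
    return candidates[-1] if candidates else None


def next_version_name(current_name):
    """Increment a zero-padded version file name, keeping its width.

    Raises ValueError if the number would need more digits, because a longer
    name (e.g. "1000.json") sorts *before* "999.json" alphabetically.
    """
    match = _VERSION_NAME.match(current_name)
    if match is None:
        raise ValueError(f"not a numeric version file name: {current_name!r}")
    digits = match.group(1)
    bumped = f"{int(digits) + 1:0{len(digits)}d}"
    if len(bumped) > len(digits):
        raise ValueError("version width exhausted; names would no longer sort correctly")
    return bumped + ".json"
```

The zero padding is what makes alphabetical order match numeric order: without it, `10.json` would sort before `9.json`, and version 9 would be treated as the latest.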

The metadata JSON structure is described in detail elsewhere, but here's an example to provide some context:

```json
{
  "syncPaths": [
    "{sn.home}/app"
  ],
  "cleanPaths": [
    "{osgi.configuration.area}/config.ini"
  ],
  "objects": [
    "solarnode-backups/setup-data/aws-equinox-solarnode-core-20171013.tgz",
    "solarnode-backups/setup-data/aws-equinox-solarnode-awssdk-20171013.tgz",
    "solarnode-backups/setup-data/node-bundles-20171016.tgz",
    "solarnode-backups/setup-data/yasdi-i586.tgz",
    "solarnode-backups/setup-data/common-config-ebox.tgz"
  ],
  "restartRequired": true
}
```

The `objects` array contains the list of S3 files that are included in the package. The S3 files are simply tar archives, which are expanded in the SolarNode home directory. The objects listed in this example are:

1. `aws-equinox-solarnode-core-20171013.tgz`: SolarNode base framework, i.e. the OSGi runtime and supporting JARs in `app/boot` and `app/core`
2. `aws-equinox-solarnode-awssdk-20171013.tgz`: AWS SDK for the base framework, separated from #1 to keep the size of the tar archives reasonable
3. `node-bundles-20171016.tgz`: SolarNode additional components, i.e. all the plugins needed to integrate with the desired hardware components, stored in `app/main`
4. `yasdi-i586.tgz`: compiled YASDI libraries for integrating with SMA power inverters
5. `common-config-ebox.tgz`: configuration files for `conf/services` that are common to all nodes in this setup configuration group
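Before uploading a metadata file, it can help to check that it parses and has the shape shown in the example above. The following Python sketch checks only the field types used in this guide's example; it is not the S3 Setup plugin's own schema, and treating `syncPaths`, `cleanPaths`, and `restartRequired` as optional is an assumption.

```python
import json


def load_setup_metadata(text):
    """Parse setup package metadata JSON and check the field types used in
    this guide's example. Not the S3 Setup plugin's own schema."""
    meta = json.loads(text)
    if not isinstance(meta, dict):
        raise ValueError("metadata must be a JSON object")
    for field in ("syncPaths", "cleanPaths", "objects"):
        value = meta.get(field, [])  # assumption: missing lists are allowed
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"{field!r} must be a list of strings")
    if not meta.get("objects"):
        raise ValueError("'objects' must list at least one S3 object key")
    if not isinstance(meta.get("restartRequired", False), bool):
        raise ValueError("'restartRequired' must be true or false")
    return meta


def archive_names(meta):
    """Return just the file names of the tar archives listed in `objects`."""
    return [key.rsplit("/", 1)[-1] for key in meta["objects"]]
```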

This guide assumes you're starting from a SolarNode OS image, such as the Raspberry Pi SolarNode image. You'll then modify that image so you can deploy as many nodes as you like using your specific configuration. This includes adding S3 credentials to the image so SolarNode can access S3.

For Linux-based images, the easiest way to customize the image file is to attach a loop device to the file, and then mount the `SOLARNODE` partition from that device. In Debian derivatives, the `mount` package provides the `losetup` tool for managing loop devices.

For example, if you are using the aforementioned Raspberry Pi image, you'd have an image file named `solarnode-deb8-pi-1GB.img`. You can attach a loop device like this:

```shell
sudo losetup -P -f --show solarnode-deb8-pi-1GB.img
```

That prints the name of the loop device that was attached, for example `/dev/loop0`. Because of the `-P` option, it also creates devices like `/dev/loop0p1` for any partitions found in the image file. In the case of the Raspberry Pi, there are two partitions: a boot partition and a root (`SOLARNODE`) partition. The root partition is the one you'll want to mount, like this (adjust `loop0` to match the device printed by `losetup`):

```shell
sudo mkdir -p /mnt/SOLARNODE
sudo mount /dev/loop0p2 /mnt/SOLARNODE
```

Those commands mount the SolarNode image at `/mnt/SOLARNODE`, and from there you can start to modify the image. Once you've finished your changes, you must both unmount the filesystem and detach the loop device:

```shell
sudo umount /mnt/SOLARNODE
sudo losetup -d /dev/loop0
```
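If you customize images often, the loop-device steps above can be scripted. The Python sketch below runs the same commands, but uses the device name that `losetup --show` prints instead of assuming `/dev/loop0`. The function names are hypothetical, `root_partition=2` matches the Raspberry Pi image described above, and the `run` parameter exists so the command sequence can be exercised without root access.

```python
import subprocess


def run_cmd(args):
    """Run a command, raise CalledProcessError on failure, return its stdout."""
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout.strip()


def attach_and_mount(image, mount_point="/mnt/SOLARNODE", root_partition=2, run=run_cmd):
    """Attach `image` to a free loop device and mount its root partition.

    Returns the loop device that losetup printed (e.g. /dev/loop0).
    root_partition=2 matches the Raspberry Pi image; other images may differ.
    """
    loop = run(["sudo", "losetup", "-P", "-f", "--show", image])
    run(["sudo", "mkdir", "-p", mount_point])
    # -P made the kernel create partition devices such as /dev/loop0p2
    run(["sudo", "mount", f"{loop}p{root_partition}", mount_point])
    return loop


def unmount_and_detach(loop, mount_point="/mnt/SOLARNODE", run=run_cmd):
    """Undo attach_and_mount once the image changes are finished."""
    run(["sudo", "umount", mount_point])
    run(["sudo", "losetup", "-d", loop])
```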

At this point, your image file can be copied to the boot media (e.g. an SD card). For example, with a USB-based SD card reader you can copy the image using `dd` along the lines of:

```shell
sudo dd of=/dev/sdd conv=sync,noerror,notrunc bs=2M if=solarnode-deb8-pi-1GB.img
```

In that example, `/dev/sdd` is the device assigned to the SD card when it was inserted into the USB card reader. You would need to adjust that to match whatever device is assigned on your system.

Another way to customize the node image is to use the SolarNode Image Maker app. That tool lets you automate the image customization process by taking a base node image, running scripts to customize it, and then publishing the customized image to a location like an S3 bucket.

Whichever way you customize the SolarNode OS image file, the customization must include the S3 credentials and other settings needed for the S3 Backup and S3 Setup plugins to work immediately on boot. You can accomplish this by adding a few configuration files to your customized image.

First, the `conf/services/net.solarnetwork.node.backup.s3.S3BackupService.cfg` file is where the S3 credentials, bucket name, and region settings are configured. A typical file looks like this:

```
regionName = us-west-1
bucketName = mybucket
accessToken = ABABABABABABABABABAB
accessSecret = asdfjkl/asdfjk/asdfjkl/asdfjkl/asdfjkl/a
objectKeyPrefix = solarnode-backups/
```
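A quick way to catch a typo in this file before baking it into an image is to parse the `key = value` lines and check for the expected keys. This Python sketch handles only the simple format shown above (blank lines and `#` comments skipped, no escapes or line continuations), so it is not a full Java properties parser; requiring the four keys below and treating `objectKeyPrefix` as optional are assumptions.

```python
# Assumption: these are the keys a working S3BackupService.cfg needs;
# objectKeyPrefix is treated as optional here.
REQUIRED_S3_BACKUP_KEYS = {"regionName", "bucketName", "accessToken", "accessSecret"}


def parse_cfg(text):
    """Parse simple `key = value` lines into a dict."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first "=" only, so values may themselves contain "="
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key = value line: {line!r}")
        props[key.strip()] = value.strip()
    return props


def missing_keys(props, required=REQUIRED_S3_BACKUP_KEYS):
    """Return the required keys absent from `props`, sorted by name."""
    return sorted(required - props.keys())
```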

Next, the `conf/services/net.solarnetwork.node.setup.s3.S3SetupManager.cfg` file is where you can customize the S3 path where SolarNode looks for setup package versions. By default, it looks in `solarnode-backups/setup-meta/`, but if you need to manage different sets of nodes with different configuration needs, you can customize that in this file. For example, you might need to manage sets of nodes deployed on different types of hardware. In that scenario you could create a configuration group for each type by adding a hardware name to the S3 path. A typical file looks like this:

```
objectKeyPrefix = solarnode-backups/raspberry-pi/
```

Note that `setup-meta/` is automatically appended to this value, so you shouldn't add it here.
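The effective lookup path is just the configured prefix with `setup-meta/` appended. This hypothetical Python helper mirrors that rule so you can double-check which S3 path a node will search; it also rejects a prefix that already ends in `setup-meta/`, the mistake warned about above. Whether the plugin adds a missing trailing `/` is not covered in this guide, so the sketch simply concatenates.

```python
SETUP_META_SUFFIX = "setup-meta/"


def setup_meta_prefix(object_key_prefix="solarnode-backups/"):
    """Return the S3 prefix searched for setup package metadata, given the
    objectKeyPrefix value from S3SetupManager.cfg."""
    if object_key_prefix.rstrip("/").endswith("setup-meta"):
        raise ValueError(
            "objectKeyPrefix should not include setup-meta/; it is appended automatically"
        )
    return object_key_prefix + SETUP_META_SUFFIX
```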

Finally, the `conf/services/net.solarnetwork.node.backup.DefaultBackupManager.cfg` file is where you can configure SolarNode to use the S3 Backup service by default, rather than the local file-based service. A typical file looks like this:

```
preferredBackupServiceKey = net.solarnetwork.node.backup.s3.S3BackupService
```
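When customizing many images, the three configuration files described above can be generated by a script. This Python sketch writes them into a `conf/services` directory; it assumes you pass the mounted image's path, for example `/mnt/SOLARNODE/home/solar/conf/services` when the image is mounted as shown earlier and the SolarNode home is `/home/solar`. The function name is hypothetical and the S3 values you pass in are your own.

```python
from pathlib import Path

S3_BACKUP_KEY = "net.solarnetwork.node.backup.s3.S3BackupService"


def write_service_configs(services_dir, s3_settings, setup_prefix):
    """Write the S3 Backup, S3 Setup, and backup manager cfg files.

    `s3_settings` holds the S3BackupService values (regionName, bucketName,
    accessToken, accessSecret, objectKeyPrefix). Returns the written paths.
    """
    services_dir = Path(services_dir)
    services_dir.mkdir(parents=True, exist_ok=True)
    files = {
        f"{S3_BACKUP_KEY}.cfg": s3_settings,
        "net.solarnetwork.node.setup.s3.S3SetupManager.cfg": {"objectKeyPrefix": setup_prefix},
        "net.solarnetwork.node.backup.DefaultBackupManager.cfg": {
            "preferredBackupServiceKey": S3_BACKUP_KEY
        },
    }
    written = []
    for name, props in files.items():
        path = services_dir / name
        # One "key = value" line per setting, matching the examples above
        path.write_text("".join(f"{k} = {v}\n" for k, v in props.items()))
        written.append(path)
    return written
```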

After a time, imagine you'd like to update the managed nodes to a new setup package version. You'd first copy the new package metadata to S3, followed by any new data files that package references. Then you could use the SolarUser queue instruction API to pass an `UpdatePlatform` instruction to your nodes. When the nodes execute that instruction, they download the new setup package version and install it.

For example, let's assume an updated set of additional components is available. You could create a version `0000000000000000002.json` metadata file with this content:

```json
{
  "syncPaths": [
    "{sn.home}/app"
  ],
  "cleanPaths": [
    "{osgi.configuration.area}/config.ini"
  ],
  "objects": [
    "solarnode-backups/setup-data/aws-equinox-solarnode-core-20171013.tgz",
    "solarnode-backups/setup-data/aws-equinox-solarnode-awssdk-20171013.tgz",
    "solarnode-backups/setup-data/node-bundles-20180101.tgz",
    "solarnode-backups/setup-data/yasdi-i586.tgz",
    "solarnode-backups/setup-data/common-config-ebox.tgz"
  ],
  "restartRequired": true
}
```

Note that the only difference between this version and the previous one is that `node-bundles-20171016.tgz` has been replaced by `node-bundles-20180101.tgz`.
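Comparing the `objects` lists of two versions tells you which archives are new and must be uploaded before the nodes are told to update. A minimal Python sketch, assuming both metadata files have already been loaded as dicts (the function name is hypothetical):

```python
def changed_objects(old_meta, new_meta):
    """Compare the `objects` lists of two setup package versions.

    Returns (added, removed): keys only in the new version, which need to be
    uploaded to S3, and keys only in the old version.
    """
    old, new = set(old_meta["objects"]), set(new_meta["objects"])
    return sorted(new - old), sorted(old - new)
```

For the two versions above, `added` is the `node-bundles-20180101.tgz` key and `removed` is the `node-bundles-20171016.tgz` key.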

After uploading the metadata to `solarnode-backups/ebox/setup-meta/0000000000000000002.json` and the new tar archive to `solarnode-backups/setup-data/node-bundles-20180101.tgz`, you'd send the `UpdatePlatform` instruction to the node using the SolarUser API, like this:

```
/solaruser/api/v1/sec/instr/add?topic=UpdatePlatform&nodeId=123
```

Replace `123` in `nodeId=123` with the actual ID of your node.
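When updating many nodes, you might build the instruction URL per node ID in a script. This Python sketch only builds the path and query string shown above; the `base_url` parameter (scheme and host of your SolarNetwork deployment) is an assumption left to the caller, and the HTTP method and request authentication (the `/sec/` segment indicates a secured API) are not handled here.

```python
from urllib.parse import urlencode

INSTR_ADD_PATH = "/solaruser/api/v1/sec/instr/add"


def update_platform_url(node_id, base_url=""):
    """Build the queue-instruction URL for an UpdatePlatform instruction."""
    query = urlencode({"topic": "UpdatePlatform", "nodeId": int(node_id)})
    return f"{base_url}{INSTR_ADD_PATH}?{query}"
```

For example, `update_platform_url(123)` returns `/solaruser/api/v1/sec/instr/add?topic=UpdatePlatform&nodeId=123`.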