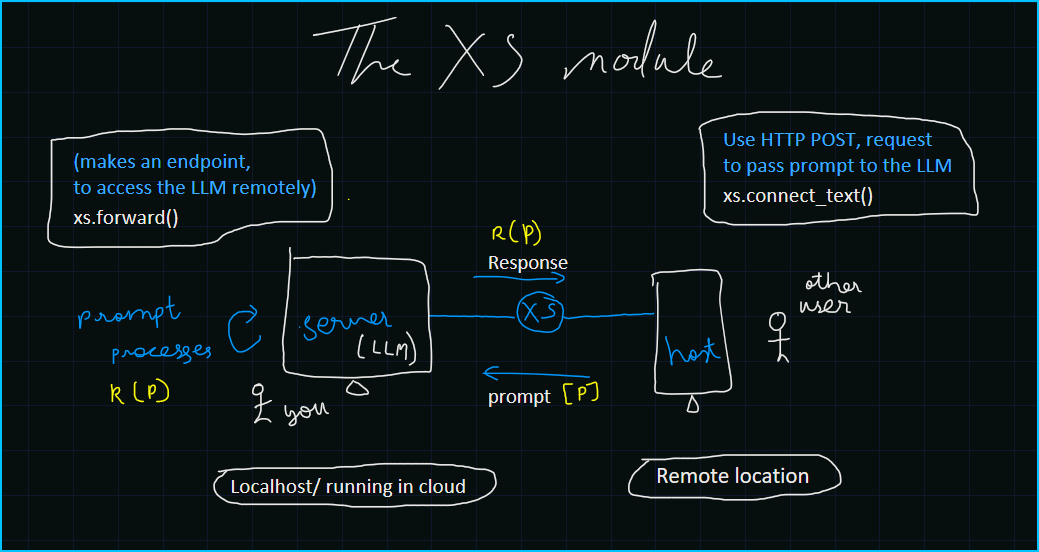

The workflow of the XS module (tagline: "xs makes you access"). Install it with:

`pip install llm-xs`

This module uses Flask, Pyngrok, and Waitress to create a simple API endpoint for text generation with open-source LLMs.
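Flask apps are WSGI applications, which is what lets Waitress serve them. As a minimal sketch of the kind of endpoint described above, the example below uses a bare WSGI callable instead of Flask so that it runs with only the standard library. The route `/generate`, the JSON keys `prompt` and `generated_text`, and the `generate_text` placeholder are all hypothetical and are not taken from xs.

```python
import json
from typing import Callable, Iterable


def generate_text(prompt: str) -> str:
    """Hypothetical placeholder: swap in a real open-source LLM call here."""
    return f"Echo: {prompt}"


def _json_response(start_response: Callable, status: str, obj: dict) -> Iterable[bytes]:
    """Encode `obj` as JSON and send it with the given HTTP status line."""
    payload = json.dumps(obj).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Minimal WSGI app exposing POST /generate (route name is an assumption)."""
    if environ.get("PATH_INFO") != "/generate":
        return _json_response(start_response, "404 Not Found", {"error": "not found"})
    if environ.get("REQUEST_METHOD") != "POST":
        return _json_response(start_response, "405 Method Not Allowed",
                              {"error": "use POST"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
    except ValueError:
        length = 0
    body = environ["wsgi.input"].read(length) if length else b""
    try:
        data = json.loads(body or b"{}")
    except ValueError:  # covers json.JSONDecodeError and bad UTF-8
        return _json_response(start_response, "400 Bad Request",
                              {"error": "invalid JSON"})
    prompt = data.get("prompt", "") if isinstance(data, dict) else ""
    return _json_response(start_response, "200 OK",
                          {"generated_text": generate_text(prompt)})
```

With Waitress installed, the same callable could be served with `from waitress import serve` followed by `serve(app, host="127.0.0.1", port=8080)`; a Flask `app` object can be passed to `serve` in exactly the same way.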

- 🌐 API Endpoint Creation: Easily create an API endpoint to access the text generation function.

- 🔀 Dynamic Port Allocation: Automatically find an available port to avoid conflicts.

- 🚧 Ngrok Tunneling: Expose your local server to the internet using Ngrok.

- 🔄 Flushing Tunnels: Clear previous Ngrok tunnels to avoid the max tunnel limit.

You can also install the module directly from GitHub with the following command:

`pip install 'git+https://github.com/xprabhudayal/xs.git'`


The module dynamically finds an available port, so the server does not collide with ports that are already in use.
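One common way to find a free port is to bind a socket to port 0 and let the operating system choose. This is a sketch under the assumption that xs does something similar (its actual helper is not shown in this README); the function name `find_available_port` is hypothetical.

```python
import socket


def find_available_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for a free TCP port by binding to port 0 (hypothetical helper name)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))          # port 0 means "let the kernel pick an unused port"
        return sock.getsockname()[1]  # the port number the kernel actually assigned
```

The socket is closed before the server starts, so another process could in principle take the port in between; for a single local server this race is rarely a problem.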

The `xs.forward()` function sets up the Ngrok tunnel and starts the server with Waitress. `NGROK_API` must be provided for this step.
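The sequence described for `xs.forward()` can be sketched with pyngrok and Waitress as follows. This is not the xs source: the signature is hypothetical, `ngrok_token` stands in for `NGROK_API` (how xs actually receives it is not documented here), and the `ngrok` and `serve` parameters exist only so the function can be exercised without network access.

```python
from typing import Any, Callable, Optional


def forward(
    app: Callable,
    port: int,
    ngrok_token: str,
    ngrok: Optional[Any] = None,
    serve: Optional[Callable] = None,
) -> str:
    """Open an Ngrok HTTP tunnel to `port`, then serve `app` there with Waitress.

    Hypothetical sketch: `ngrok` and `serve` default to pyngrok and waitress and
    can be replaced with stand-in objects for testing.
    """
    if ngrok is None:
        from pyngrok import ngrok  # third-party: pip install pyngrok
    if serve is None:
        from waitress import serve  # third-party: pip install waitress
    ngrok.set_auth_token(ngrok_token)  # authenticate the local ngrok agent
    tunnel = ngrok.connect(str(port))  # HTTP tunnel forwarding to localhost:<port>
    print(f"Public URL: {tunnel.public_url}")
    serve(app, host="127.0.0.1", port=port)  # blocks until the server stops
    return tunnel.public_url
```

In real use you would call `forward(app, port, ngrok_token=...)` with both third-party packages installed and any WSGI app (a Flask app included). Because `serve` blocks, the public URL is printed before serving begins.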

The `connect_text` function lets remote devices access the API and use its text generation. It sends a POST request with the provided data to the API endpoint and prints the generated text from the response.
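A client along the lines of `connect_text` can be written with only the standard library. This sketch assumes the endpoint accepts a JSON body with a `prompt` key and replies with a `generated_text` key; both names, the signature, and the timeout default are illustrative assumptions, not the xs API.

```python
import json
import urllib.request


def connect_text(url: str, prompt: str, timeout: float = 60.0) -> str:
    """POST {"prompt": ...} as JSON to `url`, then print and return the generated text.

    The payload key "prompt" and the response key "generated_text" are assumptions.
    """
    data = json.dumps({"prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    text = body["generated_text"]
    print(text)
    return text
```

From a remote device, `url` would be the public Ngrok URL plus the endpoint path, for example `connect_text("https://<your-tunnel>/generate", "Hello")`, where the route is again an assumption.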

The `flush` function clears all previous Ngrok tunnels. The free tier allows only 3 tunnels, so this is useful if Ngrok reports that you have reached the maximum tunnel limit.
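A flush step can be sketched with pyngrok's `ngrok.get_tunnels()` and `ngrok.disconnect()`. This is an assumption about how such a function might work, not the xs implementation, and it only sees tunnels belonging to the local pyngrok-managed agent. The optional `ngrok` argument is a hypothetical hook that lets the function be tested with a stand-in object.

```python
from typing import Any, Optional


def flush(ngrok: Optional[Any] = None) -> int:
    """Disconnect every tunnel known to the local pyngrok agent; return how many were closed."""
    if ngrok is None:
        from pyngrok import ngrok  # third-party: pip install pyngrok
    tunnels = ngrok.get_tunnels()  # snapshot of currently open tunnels
    for tunnel in tunnels:
        ngrok.disconnect(tunnel.public_url)  # close each tunnel by its public URL
    return len(tunnels)
```

Tunnels held by a different agent session (for example, an earlier notebook kernel that is still running) are not listed by `get_tunnels()`, so they must be closed from that session.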

- Port Killing: Automatically kill processes using specific ports before starting the server.

- Enhanced Error Handling: Improve the robustness of error handling mechanisms.

- Ngrok Tunnel Error: If you hit the maximum tunnel limit, use the `flush` function to clear previous tunnels.

- JSON Decode Error: Ensure the API response is correctly formatted JSON.
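For the JSON decode error, one defensive pattern is to parse the response body inside a `try` block and report what actually came back, for example an HTML error page from the tunnel instead of the API's JSON. The helper name `parse_generation` and the `generated_text` key are hypothetical.

```python
import json
from typing import Optional


def parse_generation(raw: bytes, key: str = "generated_text") -> Optional[str]:
    """Return the generated text from a raw API response, or None if it is unusable."""
    try:
        data = json.loads(raw)
    except ValueError as exc:  # json.JSONDecodeError and UnicodeDecodeError both subclass ValueError
        print(f"Response was not valid JSON: {exc}; first bytes: {raw[:80]!r}")
        return None
    if not isinstance(data, dict) or key not in data:
        print(f"Unexpected JSON shape: {data!r}")
        return None
    return data[key]
```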

This project is licensed under the MIT License.

For any inquiries, please reach me at: MAIL

Enjoy using the xs.py module! 🚀