sdcat* Sliced Detection and Clustering Analysis Toolkit*

This tool processes images using a sliced detection and clustering workflow. This is a two-step process:

- Detection: Detects objects in images using a fine-grained saliency-based detection model, and/or one of the object detection models run with the SAHI algorithm. These two algorithms can be combined through NMS (Non-Maximum Suppression) to produce the final detections.

- The detection models include YOLOv8s, YOLOS, and various MBARI-specific models for midwater and UAV images.

- The SAHI algorithm slices images into smaller windows and runs a detection model on the windows to improve detection accuracy.

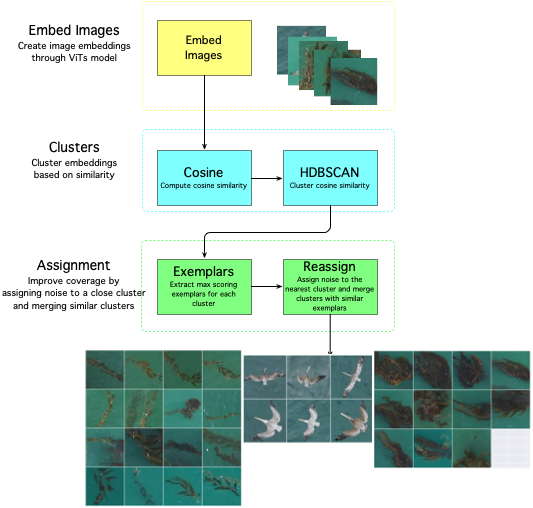

- Clustering: Clusters the detections using a Vision Transformer (ViT) model and the HDBSCAN algorithm.

- Visualization: (optional) Visualizes the detections and clusters in a user-friendly way with grid plots and images with bounding boxes.

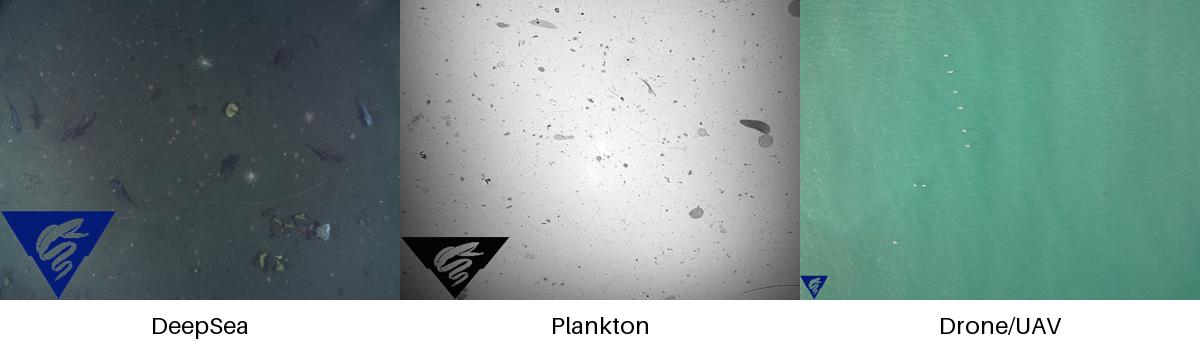

If your images look something like the image below, and you want to detect objects in the images, and optionally cluster the detections, then this repository may be useful to you, particularly for discovery to quickly gather training data. The repository is designed to be run from the command line, and can be run in a Docker container, without or with a GPU (recommended).

To use with a multiple gpus, use the --device cuda option To use with a single gpu, use the --device cuda:0,1 option

Author: Danelle, [email protected] . Reach out if you have questions, comments, or suggestions.

Detection can be done with a fine-grained saliency-based detection model, and/or one the following models run with the SAHI algorithm. Both detections algorithms (saliency and object detection) are run by default and combined to produce the final detections. SAHI is short for Slicing Aided Hyper Inference, and is a method to slice images into smaller windows and run a detection model on the windows.

| Object Detection Model | Description |

|---|---|

| yolov8s | YOLOv8s model from Ultralytics |

| hustvl/yolos-small | YOLOS model a Vision Transformer (ViT) |

| hustvl/yolos-tiny | YOLOS model a Vision Transformer (ViT) |

| MBARI-org/megamidwater (default) | MBARI midwater YOLOv5x for general detection in midwater images |

| MBARI-org/uav-yolov5 | MBARI UAV YOLOv5x for general detection in UAV images |

| MBARI-org/yolov5x6-uavs-oneclass | MBARI UAV YOLOv5x for general detection in UAV images single class |

| FathomNet/MBARI-315k-yolov5 | MBARI YOLOv5x for general detection in benthic images |

To skip saliency detection, use the --skip-saliency option.

sdcat detect --skip-saliency --image-dir <image-dir> --save-dir <save-dir> --model <model> --slice-size-width 900 --slice-size-height 900To skip using the SAHI algorithm, use --skip-sahi.

sdcat detect --skip-sahi --image-dir <image-dir> --save-dir <save-dir> --model <model> --slice-size-width 900 --slice-size-height 900Once the detections are generated, the detections can be clustered. Alternatively, detections can be clustered from a collection of images, sometimes referred to as region of interests (ROIs) by providing the detections in a folder with the roi option.

sdcat cluster roi --roi <roi> --save-dir <save-dir> --model <model>The clustering is done with a Vision Transformer (ViT) model, and a cosine similarity metric with the HDBSCAN algorithm. The ViT model is used to generate embeddings for the detections, and the HDBSCAN algorithm is used to cluster the detections. What is an embedding? An embedding is a vector representation of an object in an image.

The defaults are set to produce fine-grained clusters, but the parameters can be adjusted to produce coarser clusters. The algorithm workflow looks like this:

| Vision Transformer (ViT) Models | Description |

|---|---|

| google/vit-base-patch16-224(default) | 16 block size trained on ImageNet21k with 21k classes |

| facebook/dino-vits8 | trained on ImageNet which contains 1.3 M images with labels from 1000 classes |

| facebook/dino-vits16 | trained on ImageNet which contains 1.3 M images with labels from 1000 classes |

| MBARI-org/mbari-uav-vit-b-16 | MBARI UAV vits16 model trained on 10425 UAV images with labels from 21 classes |

Smaller block_size means more patches and more accurate fine-grained clustering on smaller objects, so ViTS models with 8 block size are recommended for fine-grained clustering on small objects, and 16 is recommended for coarser clustering on larger objects. We recommend running with multiple models to see which model works best for your data, and to experiment with the --min-samples and --min-cluster-size options to get good clustering results.

Pip install the sdcat package with:

pip install sdcatAlternatively, Docker can be used to run the code. A pre-built docker image is available at Docker Hub with the latest version of the code.

Detection

```shell

docker run -it -v $(pwd):/data mbari/sdcat detect --image-dir /data/images --save-dir /data/detections --model MBARI-org/uav-yolov5Followed by clustering

docker run -it -v $(pwd):/data mbari/sdcat cluster detections --det-dir /data/detections/ --save-dir /data/detections --model MBARI-org/uav-yolov5A GPU is recommended for clustering and detection. If you don't have a GPU, you can still run the code, but it will be slower. If running on a CPU, multiple cores are recommended to speed up processing. Once your clustering is complete, subsequent runs will be faster as the necessary information is cached to support fast iteration.

docker run -it --gpus all -v $(pwd):/data mbari/sdcat:cuda124 detect --image-dir /data/images --save-dir /data/detections --model MBARI-org/uav-yolov5To get all options available, use the --help option. For example:

sdcat --helpwhich will print out the following:

Usage: sdcat [OPTIONS] COMMAND [ARGS]...

Process images from a command line.

Options:

-V, --version Show the version and exit.

-h, --help Show this message and exit.

Commands:

cluster Cluster detections.

detect Detect objects in images

To get details on a particular command, use the --help option with the command. For example, with the cluster command:

sdcat cluster --helpwhich will print out the following:

Usage: sdcat cluster [OPTIONS] COMMAND [ARGS]...

Commands related to clustering images

Options:

-h, --help Show this message and exit.

Commands:

detections Cluster detections.

roi Cluster roi.The sdcat toolkit generates data in the following folders.

For detections, the output is organized in a folder with the following structure:

/data/20230504-MBARI/

└── detections

└── hustvl

└── yolos-small # The model used to generate the detections

├── det_raw # The raw detections from the model

│ └── csv

│ ├── DSC01833.csv

│ ├── DSC01859.csv

│ ├── DSC01861.csv

│ └── DSC01922.csv

├── det_filtered # The filtered detections from the model

├── crops # Crops of the detections

├── dino_vits8...date # The clustering results - one folder per each run of the clustering algorithm

├── dino_vits8..detections.csv # The detections with the cluster id

├── stats.txt # Statistics of the detections

└── vizresults # Visualizations of the detections (boxes overlaid on images)

├── DSC01833.jpg

├── DSC01859.jpg

├── DSC01861.jpg

└── DSC01922.jpg

For clustering, the output is organized in a folder with the following structure:

/data/20230504-MBARI/

└── clusters

└── crops # The detection crops/rois, embeddings and predictions

└── dino_vit8..._cluster_detections.parquet # The detections with the cluster id and predictions in parquet format

└── dino_vit8..._cluster_detections.csv # The detections with the cluster id and predictions

└── dino_vit8..._cluster_config.ini # Copy of the config file used to run the clustering

└── dino_vit8..._cluster_summary.json # Summary of the clustering results

└── dino_vit8..._cluster_summary.png # 2D plot of the clustering results

└── dino_vit8...

├── dino_vits8.._cluster_1_p0.png # Cluster 1 page 1 grid plot

├── dino_vits8.._cluster_1_p1.png # Cluster 1 page 2 grid plot

├── dino_vits8.._cluster_2_p0.png # Cluster 2 page 0 grid plot

├── dino_vits8.._cluster_noise_p0.png # Noise (unclustered) page 0 grid plot

Example grid plot of the clustering results:

The YOLOv8s model is not as accurate as other models, but is fast and good for detecting larger objects in images, and good for experiments and quick results. Slice size is the size of the detection window. The default is to allow the SAHI algorithm to determine the slice size; a smaller slice size will take longer to process.

sdcat detect --image-dir <image-dir> --save-dir <save-dir> --model yolov8s --slice-size-width 900 --slice-size-height 900Cluster the detections from the YOLOv8s model. The detections are clustered using cosine similarity and embedding

features from the default Vision Transformer (ViT) model google/vit-base-patch16-224

sdcat cluster --det-dir <det-dir>/yolov8s/det_filtered --save-dir <save-dir> --use-vits🚀 The RAPIDS package is supported for speed-up with CUDA. No detailed documentation just yet. Enable by using the --cuhdbscan option and installing RAPIDS

Large collections of images the HDBSCAN is inherently non-parallel, so to support processing large colletions of detections/ROIs, e.g. 500000, you need to experiment with the batch sizes. To enable batching, use the --vits-batch-size option to set the batch size for your ViTS model. The default is 32. This means that the ViTS model will process 32 images at a time. To enable batching for HDBSCAN, use the --hdbscan-batch-size option to set the batch size for HDBSCAN. The default is 50000. This means that HDBSCAN will process 50000 detections at a time. You want to maximize both of these batch sizes to speed up processing.

Temporary Directory Sometimes it is useful to set an alternative temporary directory on systems with limited disk space, or if you want to use a faster disk for temporary files.

To set a temporary directory, you can set the TMPDIR environment variable to the path of the directory you want to use.

This directory is used to store temporary files created by the sdcat toolkit during processing.

Most, but not all data is stored to what is specified with the --save-dir option, but some temporary files

are stored in the system's default temporary directory.

export TMPDIR=/path/to/your/tmpdir- https://github.com/obss/sahi SAHI

- https://arxiv.org/abs/2010.11929 An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

- https://github.com/facebookresearch/dinov2 DINOv2

- https://arxiv.org/pdf/1911.02282.pdf HDBSCAN

- https://github.com/muratkrty/specularity-removal Specularity Removal