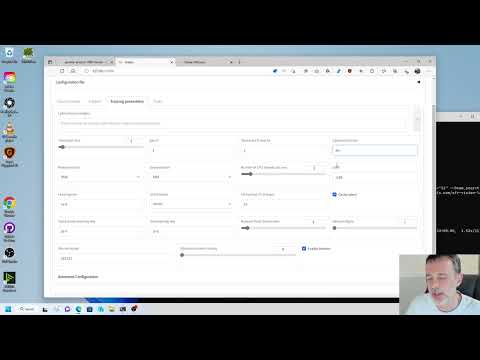

This repository provides a Windows-focused Gradio GUI for Kohya's Stable Diffusion trainers. The GUI allows you to set the training parameters and generate and run the required CLI commands to train the model.

If you run on Linux and would like to use the GUI, a port is now available as a Docker container. You can find the project here.

- Tutorials

- Required Dependencies

- Installation

- Upgrading

- Launching the GUI

- Dreambooth

- Finetune

- Train Network

- LoRA

- Troubleshooting

- Change History

How to Create a LoRA Part 1: Dataset Preparation:

How to Create a LoRA Part 2: Training the Model:

- Install Python 3.10

  - Make sure to tick the box to add Python to the 'PATH' environment variable

- Install Git

- Install Visual Studio 2015, 2017, 2019, and 2022 redistributable
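As a quick sanity check before installing, the first two prerequisites above can be verified from Python. This is a minimal sketch and not part of the repository: the function name `check_prerequisites` is hypothetical, and it does not check for the Visual Studio redistributable.

```python
import shutil
import sys


def check_prerequisites() -> dict:
    """Report whether the interpreter is Python 3.10 and whether git is on PATH."""
    return {
        # Compare only the major and minor version numbers.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # shutil.which returns None when the executable cannot be found.
        "git_on_path": shutil.which("git") is not None,
    }


for name, ok in check_prerequisites().items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```

If either entry reports `missing`, revisit the corresponding step in the list above before continuing.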

Follow the instructions found in this discussion: bmaltais#379

In the terminal, run

git clone https://github.com/bmaltais/kohya_ss.git

cd kohya_ss

bash macos_setup.sh

On the accelerate config screen that appears after running the script, answer "This machine" and "None", then "No" for the remaining questions.

In the terminal, run

git clone https://github.com/bmaltais/kohya_ss.git

cd kohya_ss

bash ubuntu_setup.sh

Then, when prompted, configure accelerate with the same answers as in the Windows instructions.

Give unrestricted script access to PowerShell so venv can work:

- Run PowerShell as an administrator

- Run `Set-ExecutionPolicy Unrestricted` and answer 'A'

- Close PowerShell

Open a regular user PowerShell terminal and run the following commands:

git clone https://github.com/bmaltais/kohya_ss.git

cd kohya_ss

python -m venv venv

.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

pip install --use-pep517 --upgrade -r requirements.txt

pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\

cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py

cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config

This step is optional but can improve the learning speed for NVIDIA 30X0/40X0 owners. It allows for a larger training batch size and faster training speed.

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise you to download them for a speed boost in sample generation (almost 50% on a 4090 GPU). You can download them here.

To install, simply unzip the directory and place the cudnn_windows folder in the root of this repo.

Run the following commands to install:

.\venv\Scripts\activate

python .\tools\cudann_1.8_install.py

When a new release comes out, you can upgrade your repo with the following commands in the root directory:

upgrade_macos.sh

Once the commands have completed successfully you should be ready to use the new version. macOS support is not tested and has been mostly taken from https://gist.github.com/jstayco/9f5733f05b9dc29de95c4056a023d645

When a new release comes out, you can upgrade your repo with the following commands in the root directory:

git pull

.\venv\Scripts\activate

pip install --use-pep517 --upgrade -r requirements.txt

Once the commands have completed successfully you should be ready to use the new version.

The script can be run with several optional command line arguments:

- `--listen`: the IP address to listen on for connections to Gradio.

- `--username`: a username for authentication.

- `--password`: a password for authentication.

- `--server_port`: the port to run the server listener on.

- `--inbrowser`: opens the Gradio UI in a web browser.

- `--share`: shares the Gradio UI.

These command line arguments can be passed to the UI function as keyword arguments. To launch the Gradio UI, run the script in a terminal with the desired command line arguments, for example:

gui.ps1 --listen 127.0.0.1 --server_port 7860 --inbrowser --share

or

gui.bat --listen 127.0.0.1 --server_port 7860 --inbrowser --share

To run the GUI, simply use this command:

.\venv\Scripts\activate

python.exe .\kohya_gui.py
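The launch options described above are passed to the UI function as keyword arguments. The following is a minimal sketch of that pattern using `argparse`; it is not the repository's actual code. The `launch_ui` function is a hypothetical stand-in, and the default values shown are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the launch options listed above (illustrative, not the repo's parser)."""
    parser = argparse.ArgumentParser(description="Launch options sketch")
    # The defaults below are assumptions made for this example.
    parser.add_argument("--listen", type=str, default="127.0.0.1",
                        help="IP address to listen on for connections to Gradio")
    parser.add_argument("--username", type=str, default="",
                        help="username for authentication")
    parser.add_argument("--password", type=str, default="",
                        help="password for authentication")
    parser.add_argument("--server_port", type=int, default=0,
                        help="port to run the server listener on")
    parser.add_argument("--inbrowser", action="store_true",
                        help="open the Gradio UI in a web browser")
    parser.add_argument("--share", action="store_true",
                        help="share the Gradio UI")
    return parser


def launch_ui(**kwargs):
    """Hypothetical stand-in for the UI function; it only returns what it receives."""
    return kwargs


def main(argv):
    args = build_parser().parse_args(argv)
    # Forward every parsed option to the UI function as a keyword argument.
    return launch_ui(**vars(args))


options = main(["--listen", "127.0.0.1", "--server_port", "7860", "--inbrowser", "--share"])
print(options)
```

Passing an explicit argument list to `main` keeps the sketch easy to try out without touching the real launch scripts.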

You can find the Dreambooth-specific documentation here: Dreambooth README

You can find the finetune-specific documentation here: Finetune README

You can find the train network-specific documentation here: Train network README

Training a LoRA currently uses the train_network.py code. You can create a LoRA network by using the all-in-one gui.cmd or by running the dedicated LoRA training GUI with:

.\venv\Scripts\activate

python lora_gui.py

Once you have created the LoRA network, you can generate images via auto1111 by installing this extension.

- X error relating to page file: Increase the page file size limit in Windows.

- Re-install Python 3.10 on your system.

This is usually related to an installation issue. Make sure you do not have any python modules installed locally that could conflict with the ones installed in the venv:

- Open a new PowerShell terminal and make sure no venv is active.

- Run the following commands:

pip freeze > uninstall.txt

pip uninstall -r uninstall.txt

This will store a backup file listing your currently installed local pip packages and then uninstall them. Then, redo the installation instructions within the kohya_ss venv.

- 2023/03/30 (v21.3.8)

  - Fix issue with LyCORIS version not being found: bmaltais#481

- 2023/03/29 (v21.3.7)

  - Allow for 0.1 increment in Network and Conv alpha values: bmaltais#471 Thanks to @srndpty

  - Updated LyCORIS module version

- 2023/03/28 (v21.3.6)

  - Fix issues when `--persistent_data_loader_workers` is specified.

    - The batch members of the bucket are not shuffled.

    - `--caption_dropout_every_n_epochs` does not work.

    - These issues occurred because the epoch transition was not recognized correctly. Thanks to u-haru for reporting the issue.

  - Fix an issue where images are loaded twice in a Windows environment.

  - Add Min-SNR Weighting strategy. Details are in #308. Thank you to AI-Casanova for this great work!

    - Add `--min_snr_gamma` option to training scripts; 5 is recommended by the paper.

    - The Min SNR gamma field can be found under the advanced training tab in all trainers.

  - Fixed the error that occurred when image file names ended with upper-case image extensions. Thanks to @kvzn. bmaltais#454
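The `--min_snr_gamma` entry above can be illustrated with a small sketch. It assumes the weighting commonly described for noise-prediction training, where each timestep's loss is scaled by min(SNR, gamma) / SNR. The function name and the sample SNR values are hypothetical, and the training scripts may compute this differently.

```python
def min_snr_weight(snr: float, gamma: float = 5.0) -> float:
    """Return the loss weight min(snr, gamma) / snr for one timestep.

    Assumes noise-prediction training; gamma defaults to the recommended 5.
    """
    if snr <= 0:
        raise ValueError("snr must be positive")
    return min(snr, gamma) / snr


# Low-SNR (noisy) timesteps keep full weight; high-SNR timesteps are down-weighted.
for snr in (0.5, 5.0, 50.0):
    print(snr, min_snr_weight(snr))
```

With this form, timesteps whose SNR is below gamma are left untouched, which is why a moderate gamma only affects the least noisy steps.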

- 2023/03/26 (v21.3.5)

  - Fix for bmaltais#230

  - Added detection for Google Colab to not bring up the GUI file/folder window on the platform. Instead it will only use the file/folder path provided in the input field.

- 2023/03/25 (v21.3.4)

  - Added untested support for macOS based on this gist: https://gist.github.com/jstayco/9f5733f05b9dc29de95c4056a023d645

    Let me know how this works. From the look of it, it appears to be well thought out. I modified a few things to make it fit better with the rest of the code in the repo.

  - Fix for issue bmaltais#433 by implementing default of 0.

  - Removed non-applicable save_model_as choices for LoRA and TI.

- 2023/03/24 (v21.3.3)

  - Add support for custom user GUI files. They will be created at installation time, or when upgrading if they are missing. You will see two files in the root of the folder, one named `gui-user.bat` and the other `gui-user.ps1`. Edit the file that matches your preferred terminal. Simply add the parameters you want to pass to the GUI in there and execute it to start the GUI with them. Enjoy!

- 2023/03/23 (v21.3.2)

  - Fix issue reported: bmaltais#439

- 2023/03/23 (v21.3.1)

  - Merge PR to fix refactor naming issue for basic captions. Thanks @zrma

- 2023/03/22 (v21.3.0)

  - Add a function to load training config with `.toml` to each training script. Thanks to Linaqruf for this great contribution!

    - Specify `.toml` file with `--config_file`. `.toml` file has `key=value` entries. Keys are same as command line options. See #241 for details.

    - All sub-sections are combined to a single dictionary (the section names are ignored.)

    - Omitted arguments are the default values for command line arguments.

    - Command line args override the arguments in `.toml`.

    - With `--output_config` option, you can output current command line options to the `.toml` specified with `--config_file`. Please use as a template.

  - Add `--lr_scheduler_type` and `--lr_scheduler_args` arguments for custom LR scheduler to each training script. Thanks to Isotr0py! #271

    - Same as the optimizer.

  - Add sample image generation with weight and no length limit. Thanks to mio2333! #288

    - `( )`, `(xxxx:1.2)` and `[ ]` can be used.

  - Fix exception on training model in diffusers format with `train_network.py`. Thanks to orenwang! #290

  - Add warning if you are about to overwrite an existing model: bmaltais#404

  - Add `--vae_batch_size` for faster latents caching to each training script. This batches VAE calls.

    - Please start with `2` or `4` depending on the size of VRAM.

  - Fix a number of training steps with `--gradient_accumulation_steps` and `--max_train_epochs`. Thanks to tsukimiya!

  - Extract parser setup to external scripts. Thanks to robertsmieja!

  - Fix an issue without `.npz` and with `--full_path` in training.

  - Support extensions with upper cases for images for not Windows environment.

  - Fix `resize_lora.py` to work with LoRA with dynamic rank (including `conv_dim != network_dim`). Thanks to toshiaki!

  - Fix issue: bmaltais#406

  - Add device support to LoRA extract.
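The `.toml` configuration rules in the v21.3.0 entry (sub-sections merged into one dictionary, omitted keys falling back to defaults, command line arguments taking priority) can be sketched as follows. This is an illustration under stated assumptions, not the training scripts' implementation: the helper names, the section names, and the default values are hypothetical, and on Python 3.10 it assumes the third-party `toml` package is installed.

```python
try:
    import tomllib as toml_parser  # standard library on Python 3.11+
except ModuleNotFoundError:
    import toml as toml_parser  # third-party package, assumed installed on Python 3.10


def flatten_sections(parsed: dict) -> dict:
    """Merge every sub-section into one dictionary, ignoring section names."""
    flat = {}
    for key, value in parsed.items():
        if isinstance(value, dict):
            flat.update(flatten_sections(value))
        else:
            flat[key] = value
    return flat


def resolve_options(defaults: dict, toml_text: str, cli_overrides: dict) -> dict:
    """Apply precedence: defaults < .toml entries < explicit command line args."""
    from_file = flatten_sections(toml_parser.loads(toml_text))
    return {**defaults, **from_file, **cli_overrides}


# Section names are arbitrary here, since they are ignored when merging.
EXAMPLE_TOML = """
[model]
pretrained_model_name_or_path = "model.safetensors"

[training]
learning_rate = 1e-4
max_train_epochs = 10
"""

# Hypothetical defaults, used only to show the fallback behaviour.
defaults = {"learning_rate": 1e-6, "max_train_epochs": 1, "vae_batch_size": 1}
resolved = resolve_options(defaults, EXAMPLE_TOML, {"max_train_epochs": 20})
print(resolved)
```

In this example `max_train_epochs` comes from the command line override, `learning_rate` from the file, and `vae_batch_size` from the defaults.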