Getting started page tweaks #324

Merged

7 commits

18f01d1

Getting started page tweaks

ffe7c5b

More wording/flow fixes, less emphasis on API Token creation

3847d1c

Switch to grabbing frames from youtube

26dc9c2

make sure getting-started code works

9d4aefc

more rewritign

2901981

More tweaks

1836524

More fixups

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,157 +1,166 @@ | ||

| # Getting Started with Groundlight | ||

|

|

||

| # Getting Started | ||

|

|

||

| ## How to Build a Computer Vision Application with Groundlight's Python SDK | ||

|

|

||

| If you're new to Groundlight AI, this is a good place to start. This is the equivalent of building a "Hello, world!" application. | ||

|

|

||

| Don't code? [Reach out to Groundlight AI](mailto:[email protected]) so we can build a custom computer vision application for you. | ||

|

|

||

|

|

||

| ### What's below? | ||

|

|

||

| - [Prerequisites](#prerequisites) | ||

| - [Environment Setup](#environment-setup) | ||

| - [Authentication](#authentication) | ||

| - [Writing the code](#writing-the-code) | ||

| - [Using your application](#using-your-computer-vision-application) | ||

| ## Build Powerful Computer Vision Applications in Minutes | ||

|

|

||

| Welcome to Groundlight AI! This guide will walk you through creating powerful computer vision applications in minutes using our Python SDK. | ||

| No machine learning expertise required! Groundlight empowers businesses across industries - | ||

| from [revolutionizing industrial quality control](https://www.groundlight.ai/blog/lkq-corporation-uses-groundlight-ai-to-revolutionize-quality-control-and-inspection) | ||

| and [monitoring workplace safety compliance](https://www.groundlight.ai/use-cases/ppe-detection-in-the-workplace) | ||

| to [optimizing inventory management](https://www.groundlight.ai/use-cases/inventory-monitoring-using-vision-ai). | ||

| Our human-in-the-loop technology delivers accurate results while continuously improving over time, making | ||

| sophisticated computer vision accessible to everyone. | ||

|

|

||

| Don't code? No problem! [Contact our team](mailto:[email protected]) and we'll build a custom solution tailored to your needs. | ||

|

|

||

| ### Prerequisites | ||

|

|

||

| Before getting started: | ||

|

|

||

| - Make sure you have python installed | ||

| - Install VSCode | ||

| - Make sure your device has a C compiler. On Mac, this is provided through Xcode; on Windows, you can use the Microsoft Visual Studio Build Tools | ||

|

|

||

| ### Environment Setup | ||

|

|

||

| Before you get started, you need to make sure you have python installed. Additionally, it’s good practice to set up a dedicated environment for your project. | ||

|

|

||

| You can download python from https://www.python.org/downloads/. Once installed, you should be able to run the following in the command line to create a new environment | ||

|

|

||

| ```bash | ||

| python3 -m venv gl_env | ||

| ``` | ||

| Once your environment is created, you can activate it with | ||

| ```bash | ||

| source gl_env/bin/activate | ||

| ``` | ||

| on Linux and Mac, or, if you’re on Windows, with | ||

| ```bash | ||

| gl_env\Scripts\activate | ||

| ``` | ||

| The last step to setting up your python environment is to run | ||

| ```bash | ||

| pip install groundlight | ||

| pip install framegrab | ||

| ``` | ||

| in order to download Groundlight’s SDK and image capture libraries. | ||

|

|

||

|

|

||

| Before diving in, you'll need: | ||

| 1. A [Groundlight account](https://dashboard.groundlight.ai/) (sign up is quick and easy!) | ||

| 2. An API token from your [Groundlight dashboard](https://dashboard.groundlight.ai/reef/my-account/api-tokens). Check out our [API Tokens guide](/docs/getting-started/api-tokens) for details. | ||

| 3. Python 3.9 or newer installed on your system. | ||
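The third prerequisite can be checked from Python itself. The following is a minimal sketch, not part of the PR; the helper name `python_is_supported` is made up for illustration, and the `(3, 9)` minimum is taken from the list above.

```python
import sys

MIN_PYTHON = (3, 9)  # minimum version stated in the prerequisites above


def python_is_supported(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True when the (major, minor) version meets the stated minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    status = "OK" if python_is_supported() else "too old for this guide"
    print(f"Python {found}: {status}")
```

Running this with the interpreter you plan to use for the project, before creating the virtual environment, catches a version mismatch early.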

|

|

||

| ### Setting Up Your Environment | ||

|

|

||

| Let's set up a clean Python environment for your Groundlight project! The Groundlight SDK is available on PyPI and can be installed with [pip](https://packaging.python.org/en/latest/tutorials/installing-packages/#use-pip-for-installing). | ||

|

|

||

| First, let's create a virtual environment to keep your Groundlight dependencies isolated from other Python projects: | ||

| ```bash | ||

| python3 -m venv groundlight-env | ||

| ``` | ||

| Now, activate your virtual environment: | ||

| ```bash | ||

| # MacOS / Linux | ||

| source groundlight-env/bin/activate | ||

| ``` | ||

| ``` | ||

| # Windows | ||

| .\groundlight-env\Scripts\activate | ||

| ``` | ||
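To confirm that activation worked, one option is to compare `sys.prefix` with `sys.base_prefix`: inside an environment created by `venv` the two differ. This is a small sketch under that assumption; the function name `in_virtualenv` is illustrative and does not come from the Groundlight SDK.

```python
import sys


def in_virtualenv(prefix=None, base_prefix=None):
    """Return True when the interpreter runs inside a venv-created environment.

    The arguments default to the running interpreter's values; they are
    parameters only so the check can be exercised with sample paths.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```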

|

|

||

| With your environment ready, install the Groundlight SDK with a simple pip command: | ||

| ```bash | ||

| pip install groundlight | ||

| ``` | ||

|

|

||

| Let's also install [framegrab](https://github.com/groundlight/framegrab) with YouTube support - | ||

| this useful library will let us capture frames from YouTube livestreams, webcams, and other video | ||

| sources, making it easy to get started! | ||

| ```bash | ||

| pip install framegrab[youtube] | ||

| ``` | ||

| :::tip Camera Support | ||

| Framegrab is versatile! It works with: | ||

| - Webcams and USB cameras | ||

| - RTSP streams (security cameras) | ||

| - Professional cameras (Basler USB/GigE) | ||

| - Depth cameras (Intel RealSense) | ||

| - Video files and streams (mp4, mov, mjpeg, avi) | ||

| - YouTube livestreams | ||

|

|

||

| This makes it perfect for quickly prototyping your computer vision applications! | ||

| ::: | ||

|

|

||

| Need more options? Check out our detailed [installation guide](/docs/installation/) for advanced setup instructions. | ||

|

|

||

| ### Authentication | ||

|

|

||

| In order to verify your identity while connecting to your custom ML models through our SDK, you’ll need to create an API token. | ||

|

|

||

| 1. Head over to [https://dashboard.groundlight.ai/](https://dashboard.groundlight.ai/) and create or log into your account | ||

|

|

||

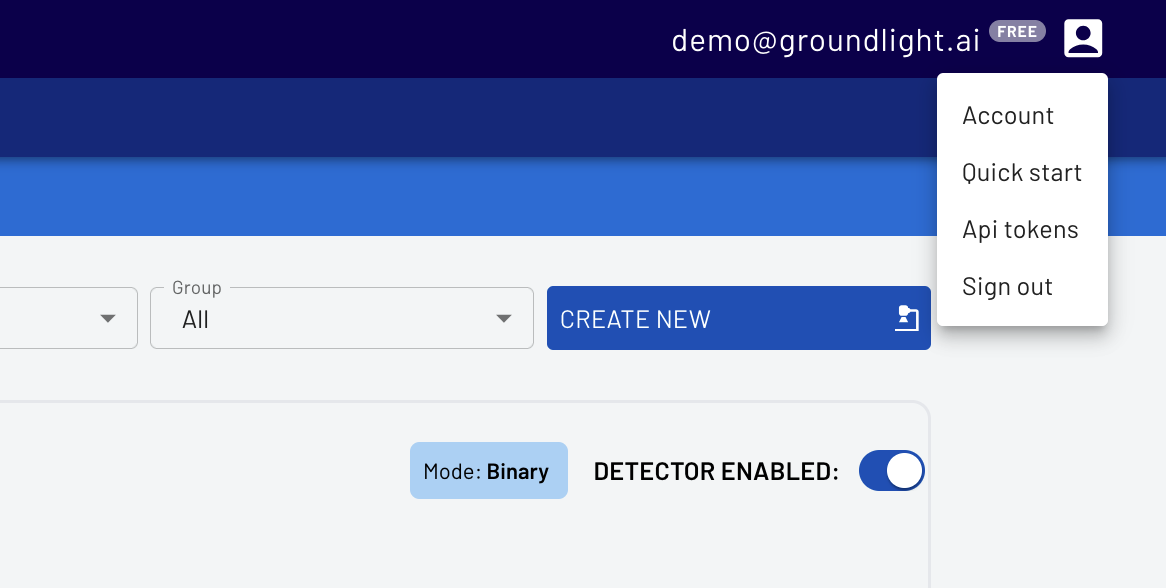

| 2. Once in, click on your username in the upper right hand corner of your dashboard: | ||

|

|

||

|  | ||

|

|

||

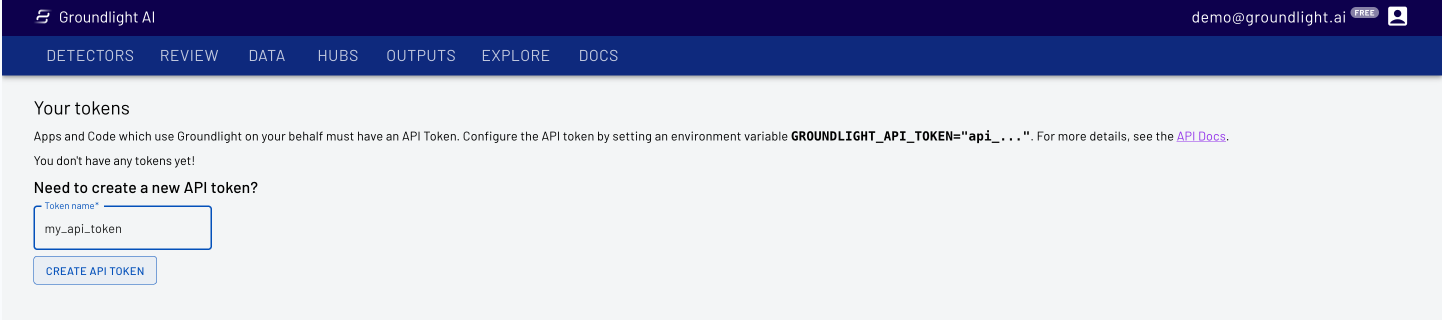

| 3. Select API Tokens, then enter a name, like ‘personal-laptop-token’ for your api token. | ||

|

|

||

|  | ||

|

|

||

| 4. Copy the API Token for use in your code | ||

|

|

||

| IMPORTANT: Keep your API token secure! Anyone who has access to it can impersonate you and will have access to your Groundlight data | ||

|

|

||

|

|

||

| ```bash | ||

| $env:GROUNDLIGHT_API_TOKEN="YOUR_API_TOKEN_HERE" | ||

| ``` | ||

| Or on Mac | ||

| ```bash | ||

| export GROUNDLIGHT_API_TOKEN="YOUR_API_TOKEN_HERE" | ||

| ``` | ||

|

|

||

|

|

||

| ### Writing the code | ||

|

|

||

| For your first, simple application you can build a binary detector, which is a computer vision model whose answer is either 'Yes' or 'No'. In the code below, Groundlight AI answers whether a trash can is overflowing ("Is the trash can overflowing"). | ||

|

|

||

| You can start using Groundlight using just your laptop camera, but you can also use a USB camera if you have one. | ||

|

|

||

| ```python | ||

| import groundlight | ||

| import cv2 | ||

| from framegrab import FrameGrabber | ||

| import time | ||

|

|

||

| gl = groundlight.Groundlight() | ||

|

|

||

| detector_name = "trash_detector" | ||

| detector_query = "Is the trash can overflowing" | ||

|

|

||

| detector = gl.get_or_create_detector(detector_name, detector_query) | ||

|

|

||

| grabber = list(FrameGrabber.autodiscover().values())[0] | ||

|

|

||

| WAIT_TIME = 5 | ||

| last_capture_time = time.time() - WAIT_TIME | ||

|

|

||

| while True: | ||

| frame = grabber.grab() | ||

|

|

||

| cv2.imshow('Video Feed', frame) | ||

| key = cv2.waitKey(30) | ||

|

|

||

| if key == ord('q'): | ||

| break | ||

| # # Press enter to submit an image query | ||

| # elif key in (ord('\r'), ord('\n')): | ||

| # print(f'Asking question: {detector_query}') | ||

| # image_query = gl.submit_image_query(detector, frame) | ||

| # print(f'The answer is {image_query.result.label.value}') | ||

|

|

||

| # # Press 'y' or 'n' to submit a label | ||

| # elif key in (ord('y'), ord('n')): | ||

| # if key == ord('y'): | ||

| # label = 'YES' | ||

| # else: | ||

| # label = 'NO' | ||

| # image_query = gl.ask_async(detector, frame, human_review="NEVER") | ||

| # gl.add_label(image_query, label) | ||

| # print(f'Adding label {label} for image query {image_query.id}') | ||

|

|

||

| # Submit image queries in a timed loop | ||

| now = time.time() | ||

| if last_capture_time + WAIT_TIME < now: | ||

| last_capture_time = now | ||

|

|

||

| print(f'Asking question: {detector_query}') | ||

| image_query = gl.submit_image_query(detector, frame) | ||

| print(f'The answer is {image_query.result.label.value}') | ||

|

|

||

| grabber.release() | ||

| cv2.destroyAllWindows() | ||

| ``` | ||

| This code will take an image from your connected camera every 5 seconds (the `WAIT_TIME` value), ask Groundlight a question in natural language, and print out the answer. | ||
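The timing gate in the listing above can be isolated into a small function. The sketch below is an illustration rather than code from the PR: `is_due` is a hypothetical helper that mirrors the listing's `last_capture_time + WAIT_TIME < now` comparison, and the timestamps in the demo are made-up values standing in for clock readings.

```python
WAIT_TIME = 5  # seconds between submissions, as in the listing above


def is_due(last_capture_time, now, wait_time=WAIT_TIME):
    """Mirror the listing's check: True once more than wait_time has elapsed."""
    return last_capture_time + wait_time < now


if __name__ == "__main__":
    last = 0.0
    # Simulated clock readings; a real loop would read time.time() or time.monotonic().
    for now in (1.0, 4.9, 5.1, 9.0, 10.2):
        if is_due(last, now):
            last = now
            print(f"t={now}: submit image query")
        else:
            print(f"t={now}: skip")
```

In a long-running loop, `time.monotonic()` may be a safer clock than `time.time()` because it is not affected by system clock adjustments.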

|

|

||

|

|

||

|

|

||

| ### Using your computer vision application | ||

|

|

||

| Just like that, you have a complete computer vision application. You can change the code and configure a detector for your specific use case. Also, you can monitor and improve the performance of your detector at [https://dashboard.groundlight.ai/](https://dashboard.groundlight.ai/). Groundlight’s human-in-the-loop technology will monitor your image feed for unexpected changes and anomalies, and by verifying answers returned by Groundlight you can improve the process. At app.groundlight.ai, you can also set up text and email notifications, so you can be alerted when something of interest happens in your video stream. | ||

|

|

||

|

|

||

|

|

||

| ### If You're Looking for More: | ||

|

|

||

| Now that you've built your first application, learn how to [write queries](https://code.groundlight.ai/python-sdk/docs/getting-started/writing-queries). | ||

|

|

||

| Want to play around with sample applications built by Groundlight AI? Visit [Guides](https://www.groundlight.ai/guides) to build example applications, from detecting birds outside of your window to running Groundlight AI on a Raspberry Pi. | ||

| Now let's set up your credentials so you can start making API calls. Groundlight uses API tokens to securely authenticate your requests. | ||

|

|

||

| If you don't have an API token yet, refer to our [API Tokens guide](/docs/getting-started/api-tokens) to create one. | ||

|

|

||

| The SDK will automatically look for your token in the `GROUNDLIGHT_API_TOKEN` environment variable. Set it up with: | ||

| ```bash | ||

| # MacOS / Linux | ||

| export GROUNDLIGHT_API_TOKEN='your-api-token' | ||

| ``` | ||

| ```powershell | ||

| # Windows | ||

| setx GROUNDLIGHT_API_TOKEN "your-api-token" | ||

| ``` | ||

| :::important API Tokens | ||

| Keep your API token secure! Anyone who has access to it can impersonate you and access your Groundlight data. | ||

| ::: | ||
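Before constructing a client, a script can fail early with a clear message when the token is missing. This is a minimal sketch using only the standard library; `read_api_token` is a hypothetical helper, not an SDK function, and the only name taken from the text above is the `GROUNDLIGHT_API_TOKEN` variable.

```python
import os

TOKEN_VAR = "GROUNDLIGHT_API_TOKEN"  # variable name from the text above


def read_api_token(environ=os.environ):
    """Return the token from the environment, or raise if it is missing or blank."""
    token = environ.get(TOKEN_VAR, "").strip()
    if not token:
        raise RuntimeError(
            f"{TOKEN_VAR} is not set; export it in this shell before running the script"
        )
    return token


if __name__ == "__main__":
    try:
        read_api_token()
        print(f"{TOKEN_VAR} is set")  # never print the token itself
    except RuntimeError as err:
        print(err)
```

Note that on Windows `setx` stores the variable for future sessions only, so open a new terminal before running the check.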

|

|

||

| ### Call the Groundlight API | ||

|

|

||

| Call the Groundlight API by creating a `Detector` and submitting an `ImageQuery`. A `Detector` represents a specific | ||

| visual question you want to answer, while an `ImageQuery` is a request to analyze an image with that question. | ||

|

|

||

| The Groundlight system is designed to provide consistent, highly confident answers for similar images | ||

| (such as frames from the same camera) when asked the same question repeatedly. This makes it ideal for | ||

| scenarios where you need reliable visual detection. | ||

|

|

||

| Let's see how to use Groundlight to analyze an image: | ||

| ```python title="ask.py" | ||

| from framegrab import FrameGrabber | ||

| from groundlight import Groundlight, Detector, ImageQuery | ||

|

|

||

| gl = Groundlight() | ||

| detector: Detector = gl.get_or_create_detector( | ||

| name="eagle-detector", | ||

| query="Is there an eagle visible?", | ||

| ) | ||

|

|

||

| # Big Bear Bald Eagle Nest livestream | ||

| youtube_live_url = 'https://www.youtube.com/watch?v=B4-L2nfGcuE' | ||

|

|

||

| framegrab_config = { | ||

| 'input_type': 'youtube_live', | ||

| 'id': {'youtube_url': youtube_live_url}, | ||

| } | ||

|

|

||

| with FrameGrabber.create_grabber(framegrab_config) as grabber: | ||

| frame = grabber.grab() | ||

| if frame is None: | ||

| raise RuntimeError("No frame captured") | ||

|

|

||

| iq: ImageQuery = gl.submit_image_query(detector=detector, image=frame) | ||

|

|

||

| print(f"{detector.query} -- Answer: {iq.result.label} with confidence={iq.result.confidence:.3f}\n") | ||

| print(iq) | ||

| ``` | ||

|

|

||

| Run the code using `python ask.py`. The code will submit an image from the live-stream to the Groundlight API and print the result: | ||

| ``` | ||

| Is there an eagle visible? -- Answer: YES with confidence=0.988 | ||

|

|

||

| ImageQuery( | ||

| id='iq_2pL5wwlefaOnFNQx1X6awTOd119', | ||

| query="Is there an eagle visible?", | ||

| detector_id='det_2owcsT7XCsfFlu7diAKgPKR4BXY', | ||

| result=BinaryClassificationResult( | ||

| confidence=0.9884857543478209, | ||

| label=<Label.YES: 'YES'> | ||

| ), | ||

| created_at=datetime.datetime(2025, 2, 25, 11, 5, 57, 38627, tzinfo=tzutc()), | ||

| patience_time=30.0, | ||

| confidence_threshold=0.9, | ||

| type=<ImageQueryTypeEnum.image_query: 'image_query'>, | ||

| result_type=<ResultTypeEnum.binary_classification: 'binary_classification'>, | ||

| metadata=None | ||

| ) | ||

| ``` | ||
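The printed result carries both a `confidence` and a `confidence_threshold`, and an application will usually want to compare them before acting on the answer. The sketch below uses a stand-in dataclass rather than the SDK's `ImageQuery` type, so it runs without an API token; `AnswerSummary` and `describe` are hypothetical names, and treating "at or above the threshold" as confident is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass
class AnswerSummary:
    """Stand-in for the fields of interest in the printed ImageQuery."""

    label: str
    confidence: float
    confidence_threshold: float

    @property
    def is_confident(self):
        # Assumption: a confidence equal to the threshold counts as confident.
        return self.confidence >= self.confidence_threshold


def describe(summary):
    """Format a one-line verdict for logs or alerts."""
    verdict = "confident" if summary.is_confident else "below threshold"
    return f"{summary.label} ({summary.confidence:.3f}, {verdict})"


if __name__ == "__main__":
    # Values copied from the sample output above.
    print(describe(AnswerSummary("YES", 0.9884857543478209, 0.9)))
```

With a real query, the same three values would be read from `iq.result.label`, `iq.result.confidence`, and `iq.confidence_threshold`, which are the field names shown in the printed output.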

| ## What's Next? | ||

|

|

||

| **Amazing job!** You've just built your first computer vision application with Groundlight. | ||

| In just a few lines of code, you've created an eagle detector that can analyze live video streams! | ||

|

|

||

| ### Supercharge Your Application | ||

|

|

||

| Take your application to the next level: | ||

|

|

||

| - **Monitor in real-time** through the [Groundlight Dashboard](https://dashboard.groundlight.ai/) - see your detections, review results, and track performance | ||

| - **Get instant alerts** when important events happen - [set up text and email notifications](/docs/guide/alerts) for critical detections | ||

| - **Improve continuously** with Groundlight's human-in-the-loop technology that learns from your feedback | ||

|

|

||

| ### Next Steps | ||

|

|

||

| | What You Want To Do | Resource | | ||

| |---|---| | ||

| | 📝 Create better detectors | [Writing effective queries](/docs/getting-started/writing-queries) | | ||

| | 📷 Connect to cameras, RTSP, or other sources | [Grabbing images from various sources](/docs/guide/grabbing-images) | | ||

| | 🎯 Fine-tune detection accuracy | [Managing confidence thresholds](/docs/guide/managing-confidence) | | ||

| | 📚 Explore the full API | [SDK Reference](/docs/api-reference/) | | ||

|

|

||

| Ready to explore more possibilities? Visit our [Guides](https://www.groundlight.ai/guides) to discover sample | ||

| applications built with Groundlight AI — from [industrial inspection workflows](https://www.groundlight.ai/blog/lkq-corporation-uses-groundlight-ai-to-revolutionize-quality-control-and-inspection) | ||

| to [hummingbird detection systems](https://www.groundlight.ai/guides/detecting-hummingbirds-with-groundlight-ai). | ||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -4,10 +4,14 @@ | |

| } | ||

|

|

||

| a { | ||

| color: inherit; | ||

| color: #3498db; | ||

|

Review comment: Correctly color clickable links

||

| display: inline-block; | ||

| } | ||

|

|

||

| a:hover { | ||

| color: #2980b9; | ||

|

Review comment: slightly lighter blue (plus underlining) when hovering on a link

||

| } | ||

|

|

||

| h1, | ||

| h2, | ||

| h3 { | ||

|

|

||
