Background:

(a) https://open5gs.org/

(b) https://github.com/open5gs/open5gs

(c) https://github.com/aligungr/UERANSIM

Prerequisites:

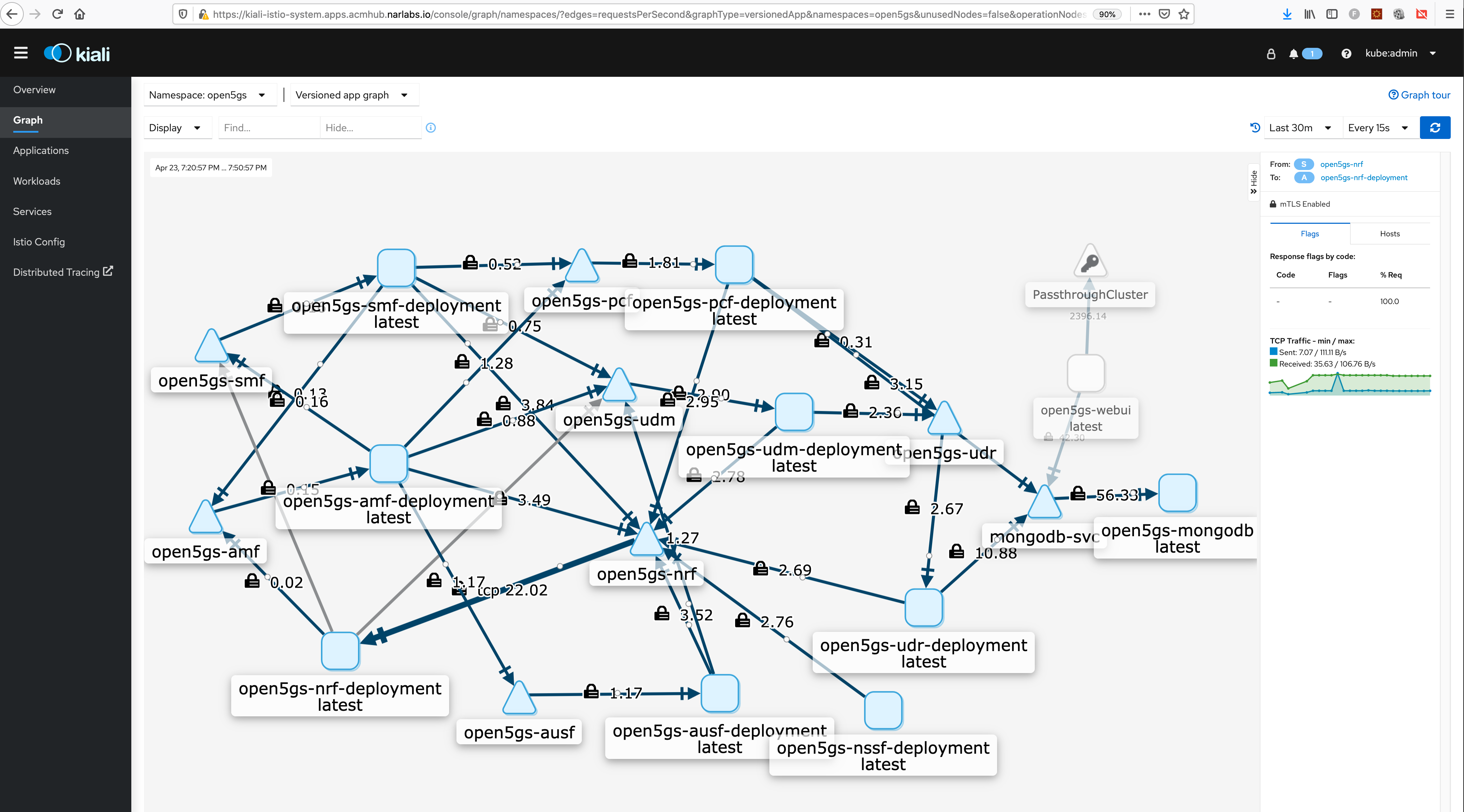

(i) OpenShift Container Platform (OCP) with OpenShift Service Mesh (OSM) installed and configured

(ii) Enable SCTP on OCP (Reference):

oc create -f enablesctp.yaml
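The repository's own `enablesctp.yaml` is not reproduced here. As an assumption, a MachineConfig that follows the OpenShift documentation's pattern for loading the `sctp` kernel module on worker nodes looks roughly like the sketch below. The output file name `sctp-machineconfig.yaml` and the object name `load-sctp-module` are hypothetical (chosen so the repo's file is not overwritten), and the Ignition version `3.2.0` should be matched to your OCP release.

```shell
#!/usr/bin/env bash
# Sketch only: writes a MachineConfig similar to the OpenShift docs example
# for loading the SCTP kernel module on every node in the "worker" pool.
# File name and object name are hypothetical; Ignition 3.2.0 is assumed.
cat > sctp-machineconfig.yaml <<'EOF'
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  name: load-sctp-module
  labels:
    machineconfiguration.openshift.io/role: worker
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
        # Overwrite the sctp blacklist file with empty content so the module may load
        - path: /etc/modprobe.d/sctp-blacklist.conf
          mode: 0644
          overwrite: true
          contents:
            source: data:,
        # Ask systemd-modules-load to load sctp at boot
        - path: /etc/modules-load.d/sctp-load.conf
          mode: 0644
          overwrite: true
          contents:
            source: data:,sctp
EOF
```

It would be applied the same way, with `oc create -f sctp-machineconfig.yaml`; the Machine Config Operator then rolls it out and reboots the worker nodes one by one.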

Wait for the MachineConfig to be applied to all worker nodes and for every worker node to return to the Ready state. Check with:

oc get nodes
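The readiness check above can be scripted instead of re-running `oc get nodes` by hand. This is a minimal sketch, assuming the worker nodes carry the standard `node-role.kubernetes.io/worker` label. The function names `all_workers_ready` and `wait_for_workers` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll until every worker node reports STATUS exactly "Ready".
# During a MachineConfig rollout nodes are cordoned and show
# "Ready,SchedulingDisabled" (or "NotReady" while rebooting), so an exact
# match avoids declaring success while a node is still being updated.

# Returns 0 if all worker nodes are Ready, non-zero otherwise.
all_workers_ready() {
  local statuses
  # Second column of `oc get nodes` is STATUS
  statuses=$(oc get nodes -l node-role.kubernetes.io/worker --no-headers | awk '{print $2}')
  # No worker nodes listed at all counts as "not ready"
  [ -n "$statuses" ] || return 1
  # Succeed only if no line differs from exactly "Ready"
  ! printf '%s\n' "$statuses" | grep -qvx 'Ready'
}

# Usage: wait_for_workers [timeout_seconds] [interval_seconds]
wait_for_workers() {
  local timeout=${1:-1800} interval=${2:-30} waited=0
  until all_workers_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for worker nodes" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "all worker nodes are Ready"
}
```

One caveat: immediately after `oc create`, the rollout may not have started yet, so all nodes can still look Ready. Watching `oc get mcp worker` until the pool shows UPDATED as True is a more reliable signal that the new MachineConfig has landed.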

(1) Run 0-deploy5gcore.sh. It creates the project, adds the project to the Service Mesh member roll, provisions the necessary role bindings, deploys the Helm charts, and creates an Istio virtual service ingress for the WebUI.

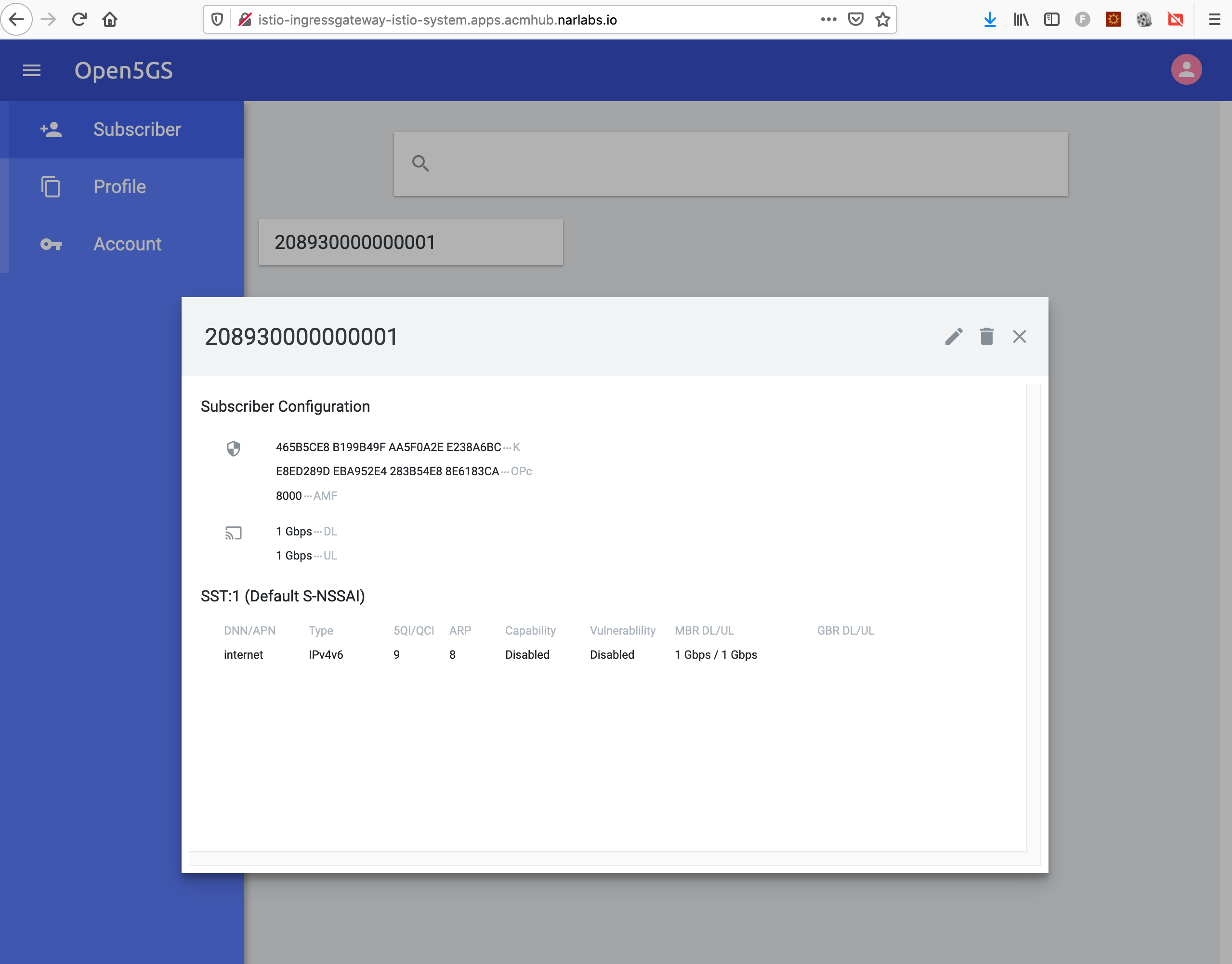
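For orientation, an Istio ingress for a web UI like the one step (1) sets up is typically a Gateway bound to the mesh ingress gateway plus a VirtualService that routes to the WebUI Service. The sketch below is not taken from 0-deploy5gcore.sh. It assumes the default ingress gateway pods are labelled `istio: ingressgateway`, and the namespace, Service name and port are arguments you must fill in for your deployment. The function `webui_ingress` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print a Gateway + VirtualService that route HTTP traffic from the
# mesh ingress gateway to the WebUI Service.
# Usage: webui_ingress NAMESPACE SERVICE PORT
# All three arguments are deployment-specific assumptions.
webui_ingress() {
  local ns=$1 svc=$2 port=$3
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: webui-gateway
  namespace: ${ns}
spec:
  # Bind to the default ingress gateway pods (label assumed)
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: webui
  namespace: ${ns}
spec:
  hosts:
    - "*"
  gateways:
    - webui-gateway
  http:
    - route:
        - destination:
            host: ${svc}
            port:
              number: ${port}
EOF
}
```

Usage would look like `webui_ingress <project> <webui-service> <webui-port> | oc apply -f -`, with the Service name and port read from what the Helm chart actually created (`oc get svc -n <project>`).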

(2) Provision the user equipment (UE) IMSI in the 5G core (see ueransim/ueransim-ue-configmap.yaml; the default IMSI is 208930000000001) so that UE registration (i.e. running UERANSIM in UE mode) is allowed.

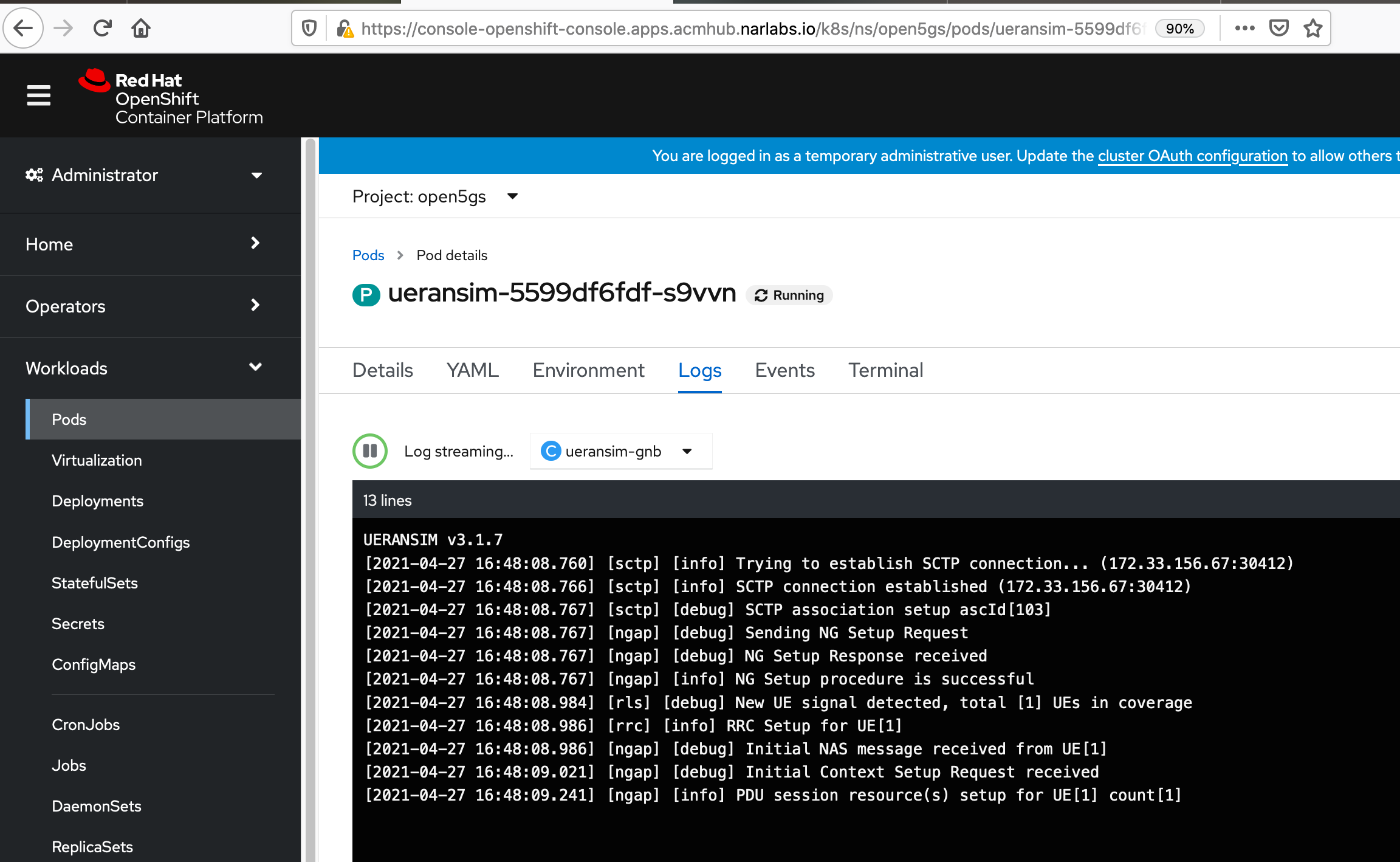

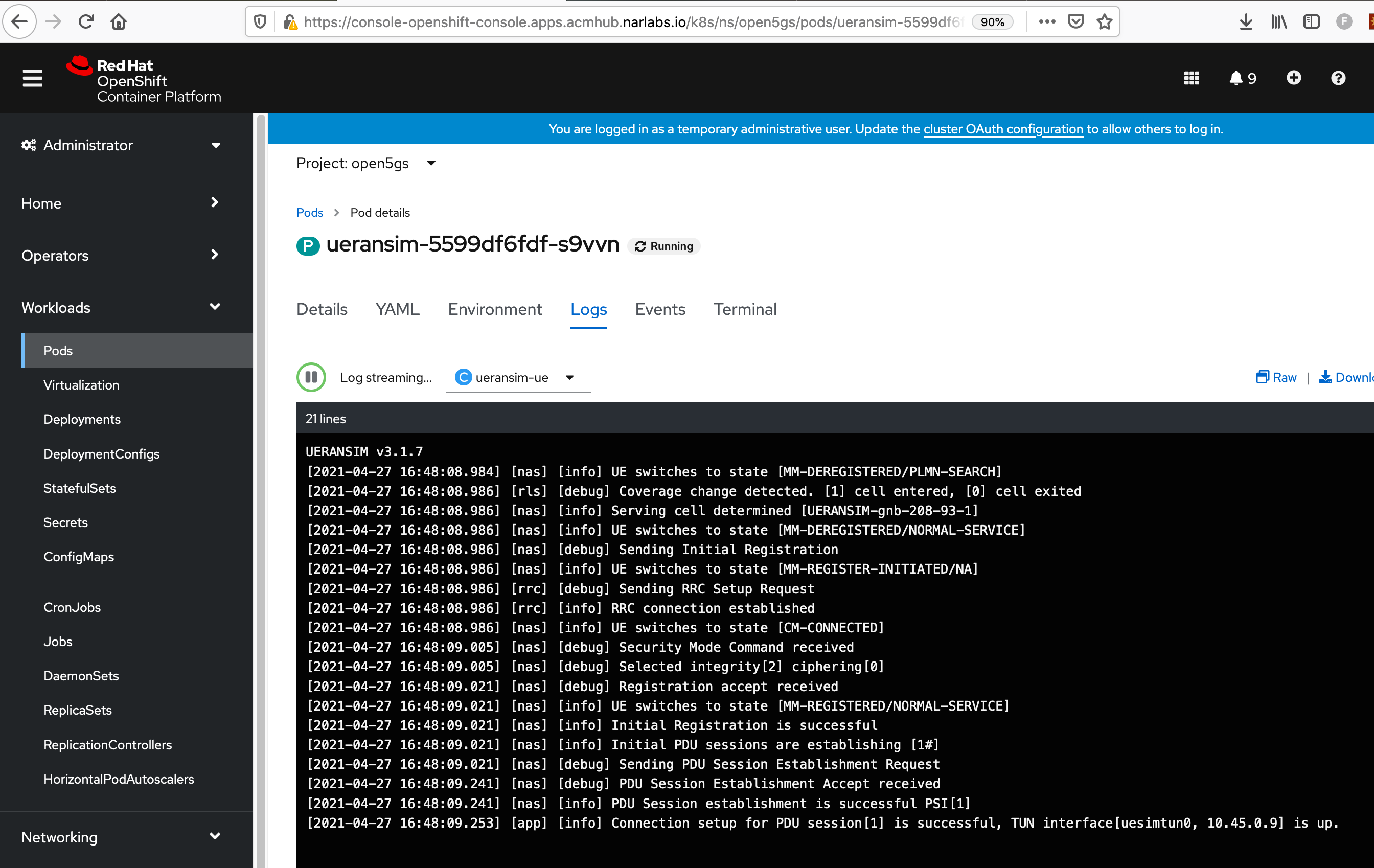

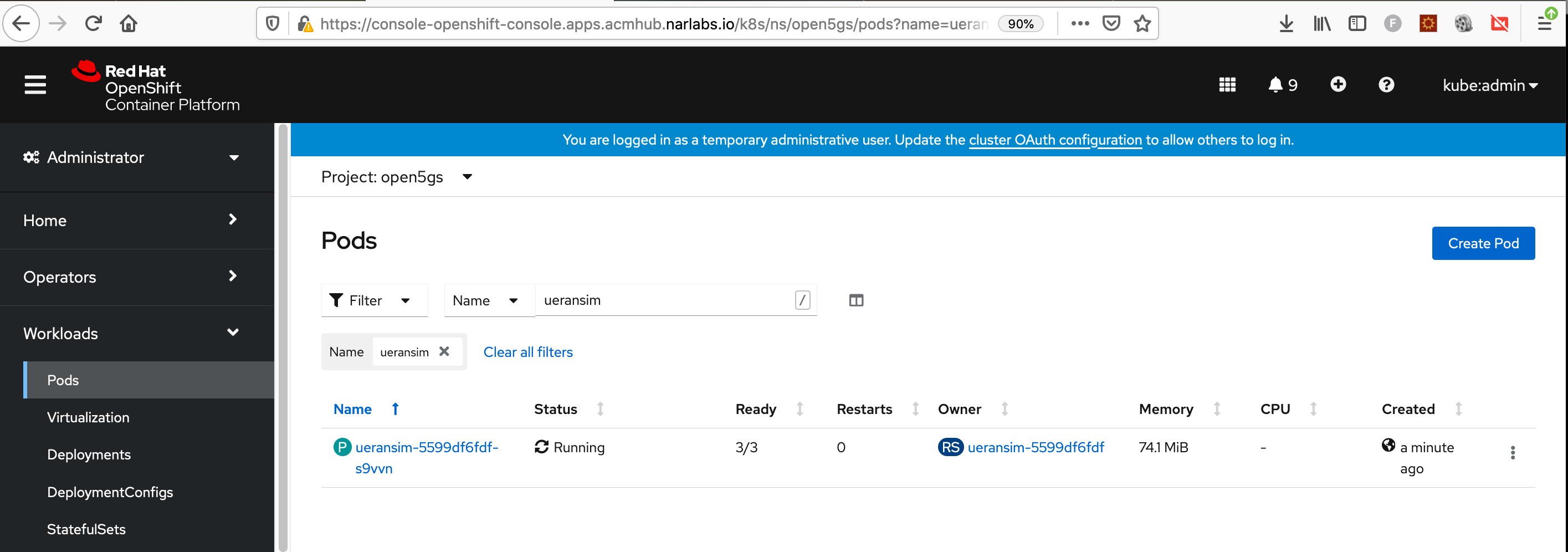
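To make sure the subscriber added in step (2) matches the UE, the IMSI can be read straight from the UE config instead of being retyped. This is a minimal sketch, assuming the configmap embeds UERANSIM's usual `supi: 'imsi-<digits>'` line. The helper name `ue_imsi` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the IMSI (digits only, up to 15) from a UERANSIM UE config or
# a configmap that embeds one. Assumes a line of the form
#   supi: 'imsi-208930000000001'
# with single, double or no quotes; only the first match is printed.
ue_imsi() {
  sed -n "s/^[[:space:]]*supi:[[:space:]]*[\"']\{0,1\}imsi-\([0-9]\{1,15\}\)[\"']\{0,1\}.*/\1/p" "$1" | head -n 1
}
```

For example, `ue_imsi ueransim/ueransim-ue-configmap.yaml` should print the IMSI to enter in the WebUI, provided the file uses that layout. The default 208930000000001 breaks down as MCC 208, MNC 93, and the subscriber's key and OPc in the WebUI must also match the `key` and `op` values (with its `opType`) in the same UE config.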

(3) Run 1-deploy5gran.sh, which creates the config maps and the UERANSIM deployment: a single pod in which the gNB and the UE run as separate containers.

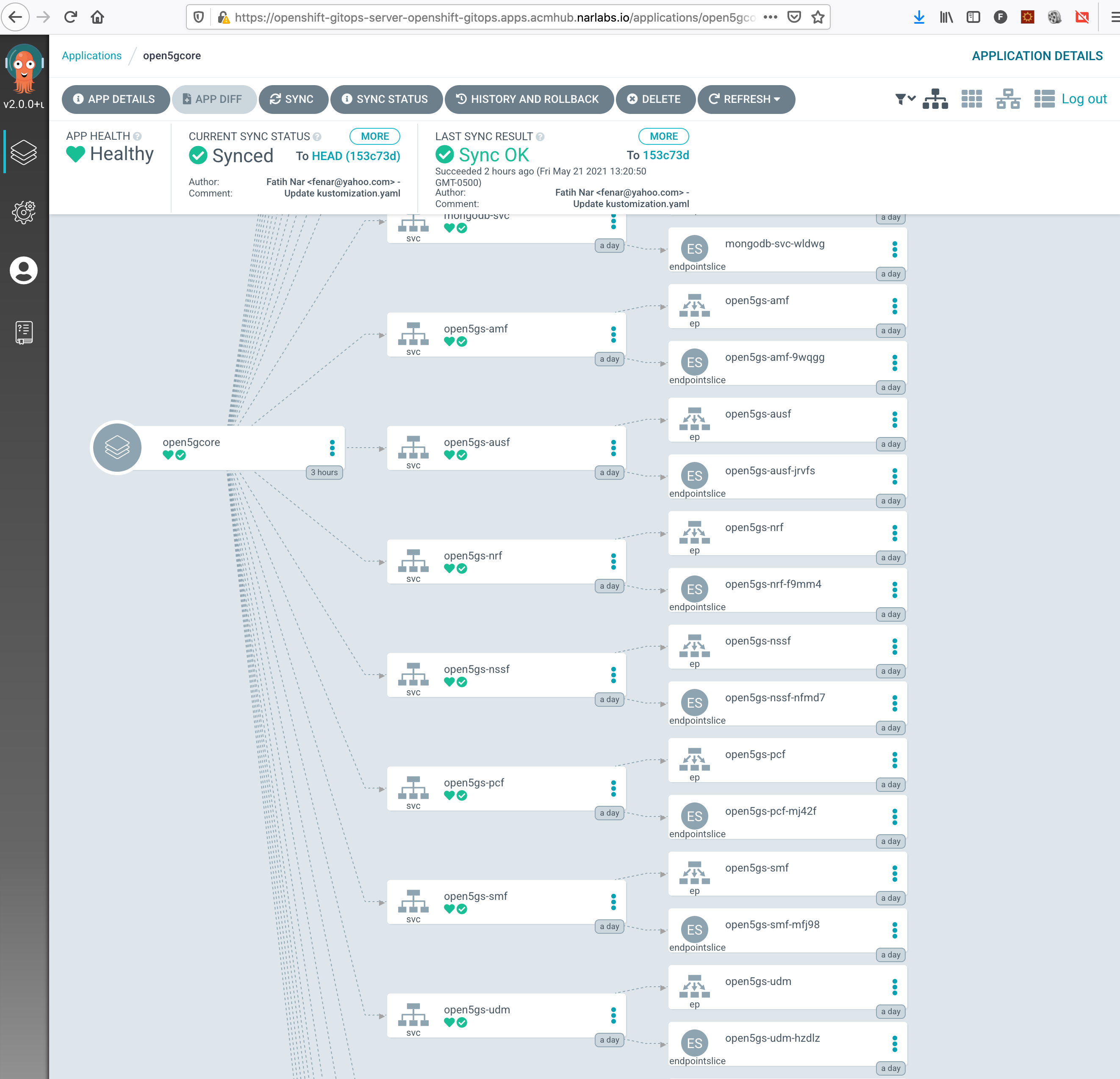

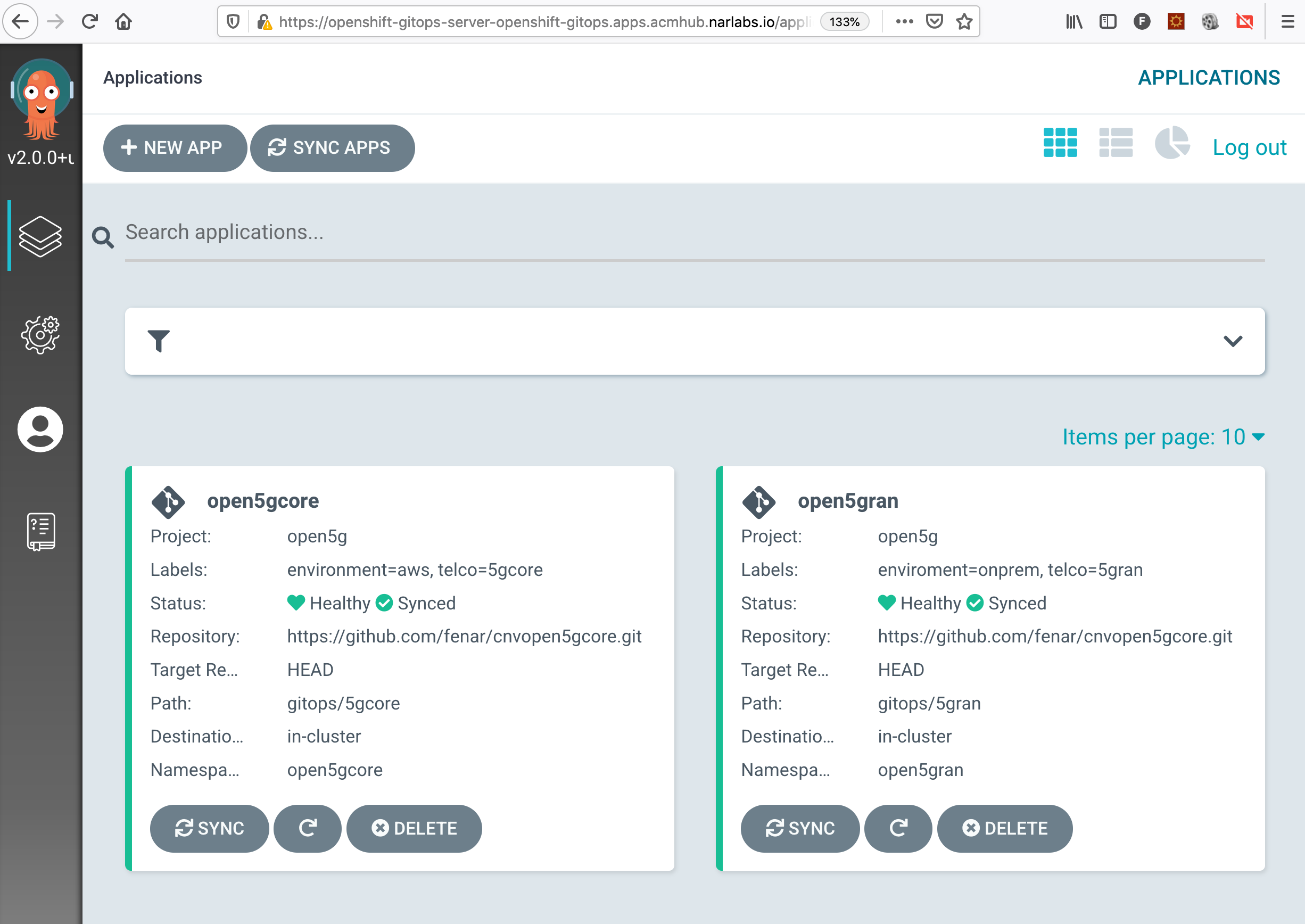
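Once the pod from step (3) is running, the UE container's log shows whether registration succeeded. The sketch below classifies log text read from stdin. The matched phrases are assumptions based on typical UERANSIM UE output and may differ between versions; `ue_attached` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: classify UERANSIM UE log text read from stdin.
# Exit status: 0 = registered with a PDU session, 1 = not registered,
# 2 = registered but no PDU session yet.
# The matched phrases are assumptions; adjust them to your UERANSIM version.
ue_attached() {
  local log
  log=$(cat)
  if ! printf '%s\n' "$log" | grep -q 'Registration is successful'; then
    echo "UE not registered (is the IMSI provisioned in the 5G core?)"
    return 1
  fi
  if ! printf '%s\n' "$log" | grep -q 'PDU Session establishment is successful'; then
    echo "UE registered, but no PDU session yet"
    return 2
  fi
  echo "UE registered and PDU session established"
}
```

A typical call would be `oc logs deploy/<ueransim-deployment> -c <ue-container> | ue_attached`, where the deployment and container names are whatever 1-deploy5gran.sh created.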

(4) To manage the deployment with GitOps, you can use the Red Hat OpenShift GitOps operator: point it at this repo and the 5gcore Helm chart path to start the deployment.

Ref: Red Hat GitOps Operator

If ArgoCD fails with permission errors in your project, check that the ArgoCD application controller has the necessary role and add it if needed, for example:

oc adm policy add-cluster-role-to-user cluster-admin -z openshift-gitops-argocd-application-controller -n openshift-gitops
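Pointing OpenShift GitOps at this repo and a Helm chart path, as in step (4), can also be done declaratively with an ArgoCD `Application`. This sketch assumes the default `openshift-gitops` instance and its `default` AppProject; the app name, repo URL, chart path and target namespace are placeholders you supply. The function `argocd_app` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print an ArgoCD Application that deploys a Helm chart from a Git repo.
# Usage: argocd_app NAME REPO_URL CHART_PATH NAMESPACE
# Assumes the default openshift-gitops instance and "default" AppProject.
argocd_app() {
  local name=$1 repo=$2 path=$3 ns=$4
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${name}
  namespace: openshift-gitops
spec:
  project: default
  source:
    repoURL: ${repo}
    targetRevision: HEAD
    # ArgoCD treats a path containing Chart.yaml as a Helm chart
    path: ${path}
  destination:
    server: https://kubernetes.default.svc
    namespace: ${ns}
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
EOF
}
```

Usage would be `argocd_app 5gcore <repo-url> <5gcore-chart-path> <project> | oc apply -f -`. Note that `CreateNamespace=true` only creates the namespace; it does not add it to the Service Mesh member roll, so that step still has to be done separately.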

ArgoCD applications: 5GCore and 5GRAN

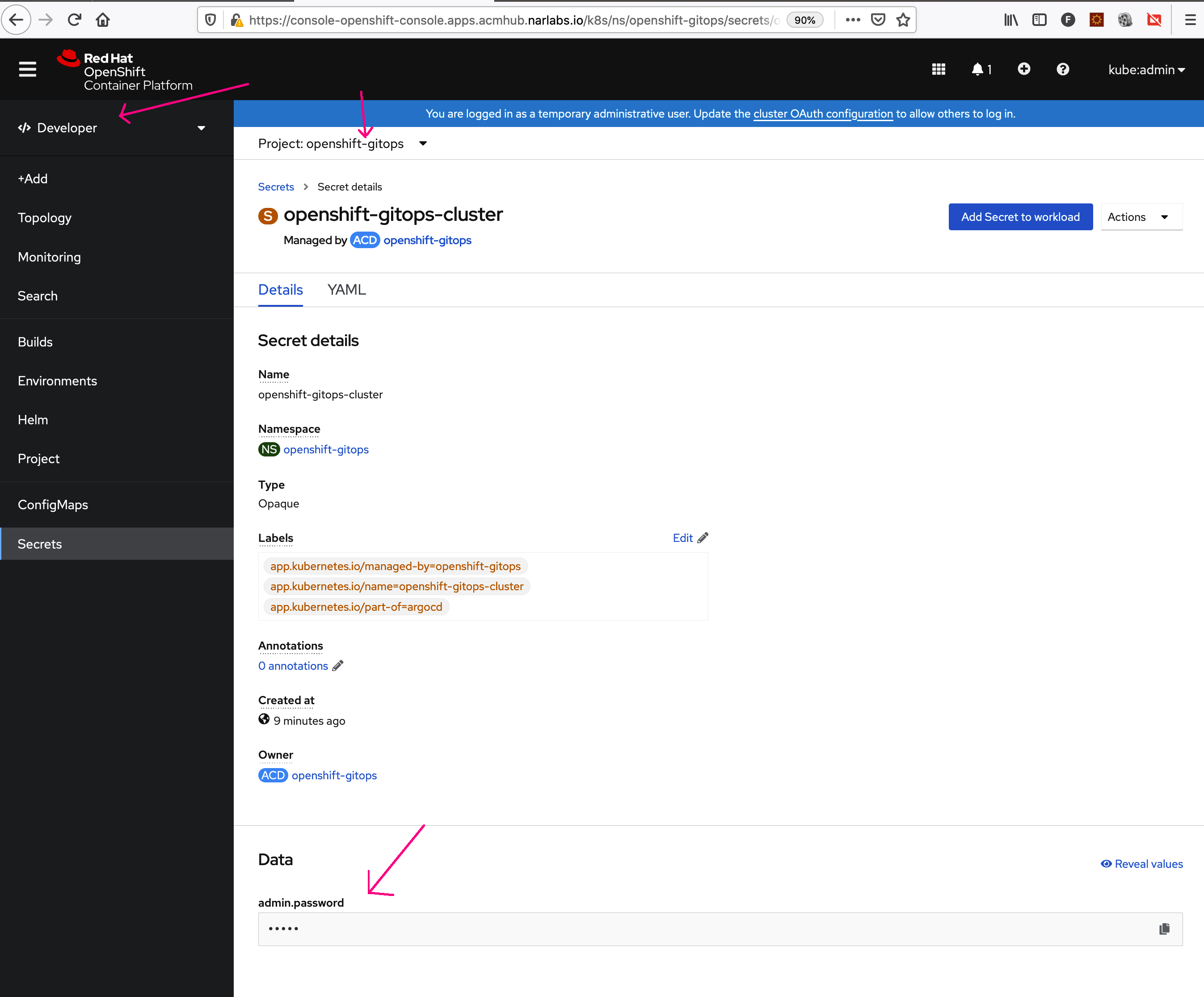

PS: If you are wondering where to get the default ArgoCD admin password, here it is :-).

Alternatively, you can get it from the CLI:

oc get secret openshift-gitops-cluster -n openshift-gitops -o jsonpath='{.data.admin\.password}' | base64 -d

>>> Adding more target clusters as deployment environments

(5) Run ./3-delete5gran.sh to wipe the UERANSIM microservices deployment.

(6) To clear the environment, run ./5-delete5gcore.sh to wipe the 5G core deployment.