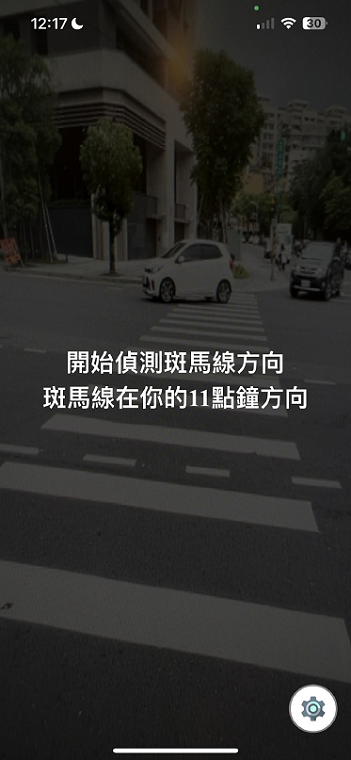

- The main project objective is to develop an iOS mobile app that helps visually impaired individuals navigate safely and independently, especially when crossing roads.

- The app will use AI image recognition technology to identify safe crossing areas and obstacles.

- It will provide real-time information about the surroundings and enhance the user experience.

- Interviews were conducted with visually impaired individuals, including those with partial vision and full blindness.

- Different types of visual impairments and their specific needs were identified.

- Challenges include difficulties in transportation, identifying objects, and using technology effectively.

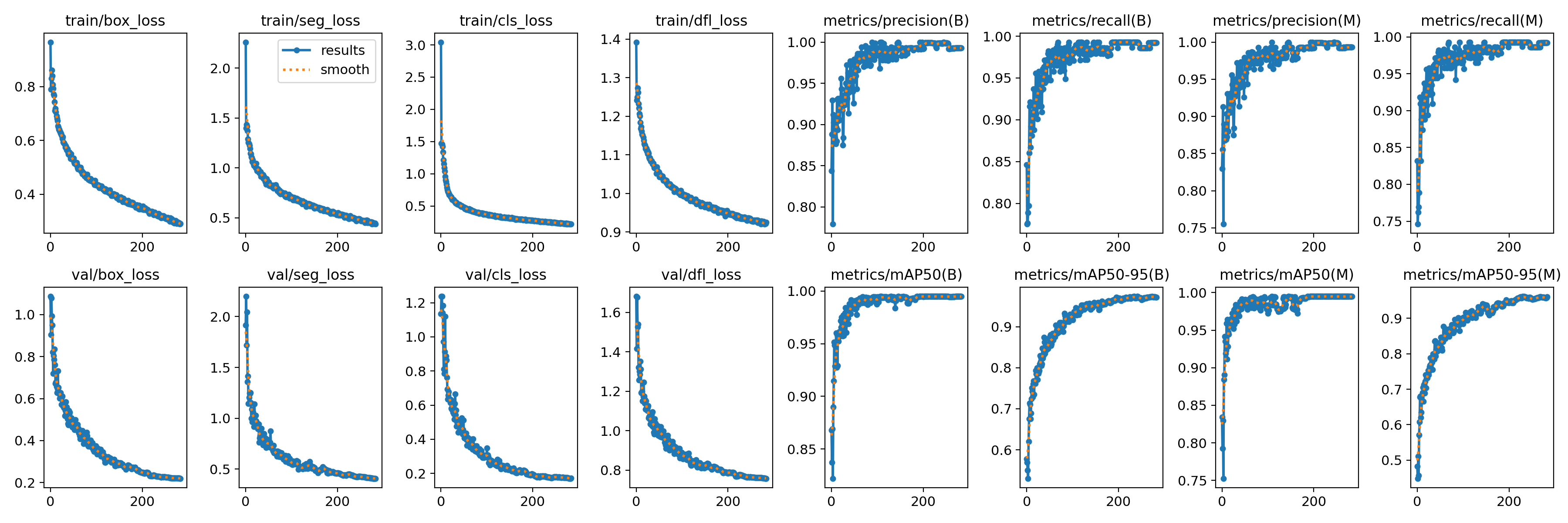

- Instance Segmentation (Ultralytics YOLOv8l-seg)

- OpenCV for crosswalk angle detection and obstacle detection
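As a rough illustration of the crosswalk angle step, the sketch below estimates the orientation of a binary segmentation mask from the principal axis of its foreground pixels. It is a dependency-free stand-in, not the project's code: the project uses OpenCV, where a call such as `cv2.fitLine` or `cv2.minAreaRect` on the mask contour would play a comparable role. The function names `principal_angle_deg` and `deviation_from_straight_ahead`, and the convention that "straight ahead" is the vertical image axis, are assumptions made for this example.

```python
import math


def principal_angle_deg(mask):
    """Return the main-axis orientation of a binary mask in degrees, in [0, 180).

    `mask` is a list of rows; any truthy cell counts as a crosswalk pixel.
    0 means the shape runs along the image x axis, 90 along the y axis.
    Returns None when there are fewer than two foreground pixels.
    """
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if len(points) < 2:
        return None

    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n

    # Second-order central moments (the 2x2 covariance matrix of the pixels).
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n

    # Orientation of the eigenvector with the largest eigenvalue.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.degrees(theta) % 180.0


def deviation_from_straight_ahead(angle_deg):
    """Signed offset from the vertical image axis, in [-90, 90).

    Assumption for this sketch: the camera points in the walking direction,
    so a crosswalk aligned with the user appears vertical (90 degrees).
    """
    return angle_deg - 90.0
```

With a mask produced by the segmentation model converted to rows of 0/1 values, `deviation_from_straight_ahead(principal_angle_deg(mask))` gives a signed offset that a guidance layer could turn into a "turn left" or "turn right" prompt.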

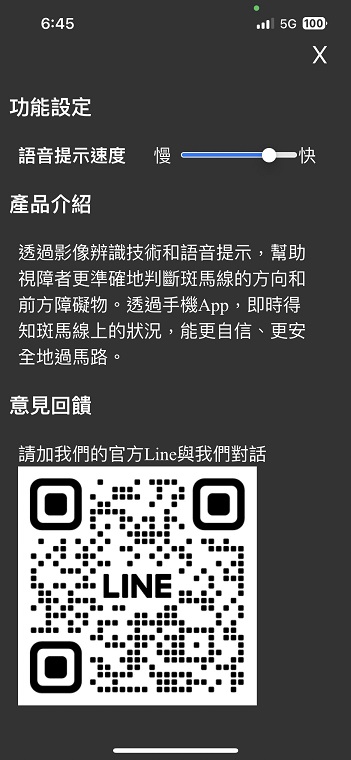

- Voice prompts, with voice speed adjustable in real time.

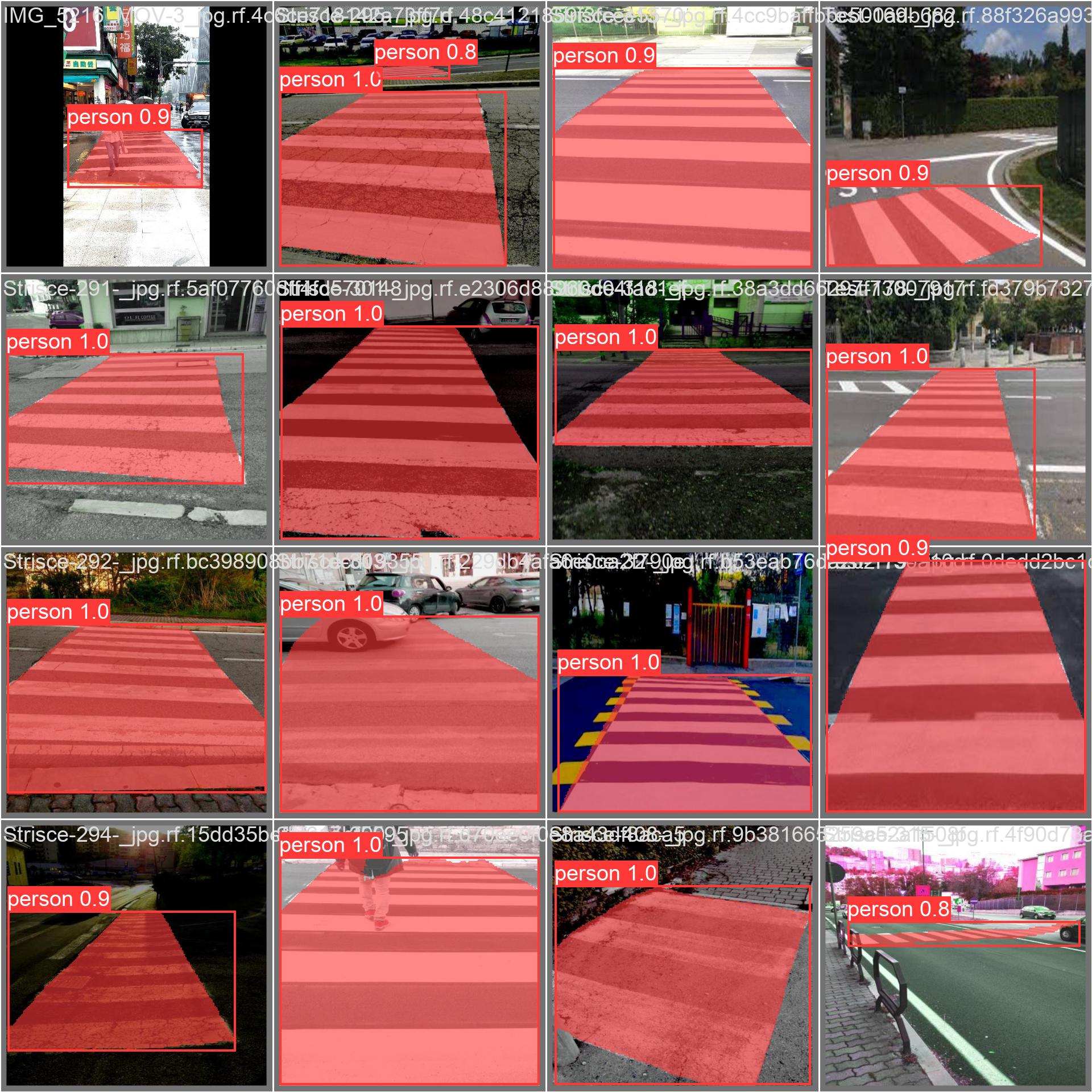

- GitHub open data (Crosswalks-Detection-using-YOLO) / Self-labeling / 713 images

- All training data is open source and available in Roboflow Open Datasets

- Self-captured photos and videos (designed to cover daytime, nighttime, crowd, weather, and regional variations) / Self-labeling

| Metric | Box | Mask |
|---|---|---|
| Precision | 99.9% | 97.4% |
| Recall | 99.3% | 99.3% |
| mAP50 | 99.5% | 99.5% |
| mAP50-95 | 97.4% | 96.1% |
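For readers unfamiliar with these metrics, the sketch below shows how precision, recall, and box IoU are defined. mAP50 counts a prediction as correct when its IoU with the ground truth is at least 0.5, while mAP50-95 averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The helper names are hypothetical and the numbers in the usage note are made up for illustration; this is not the project's evaluation code, and the reported figures come from the project's own validation.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Overlap width and height, clamped to zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
MAP50_95_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]
```

For example, with illustrative counts of 90 true positives, 10 false positives, and 30 false negatives, `precision_recall(90, 10, 30)` returns `(0.9, 0.75)`. The mask columns in the table use the same definitions with pixel masks in place of boxes.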

Create a clean environment:

`conda create --name cleanenv python=3.8.1`

`conda activate cleanenv`

Install the dependencies:

`pip install ultralytics aiohttp`

Or install them from requirements.txt:

`pip install -r requirements.txt`

Start the Python server:

`python server.py`

Then open http://localhost:8080/ in a browser.
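If the page does not load, a quick reachability check can help tell a server that is not running from a browser-side problem. The sketch below uses only the Python standard library; the helper name `server_is_up` is hypothetical and not part of the project, and it assumes the server answers plain HTTP GET requests at the address above.

```python
import urllib.error
import urllib.request


def server_is_up(url="http://localhost:8080/", timeout=2.0):
    """Return True if an HTTP GET to `url` succeeds with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

Calling `server_is_up()` from a Python shell in the same environment returns `False` while `server.py` is not running or is listening on a different port.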

This work is licensed under the GNU General Public License; see the LICENSE file for details. All rights to the YOLO model are reserved to Ultralytics.