JavaScript server with feature parity, except for function calling

Initial release:

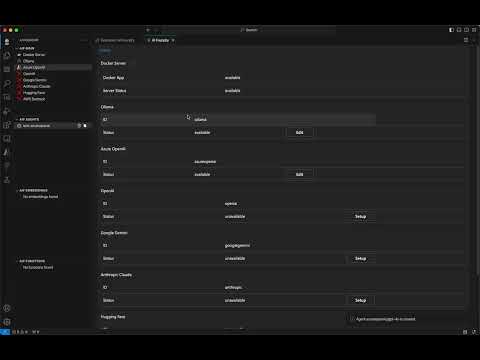

- Support 7 LLM providers

- RAG/embedding

- Function calling

Setup video:

- Download and install VS Code

- Download AI Foundry VS Code extension version 0.4.0

- Launch VS Code and choose `Extensions` from the activity bar (on the left by default)

- Click the three dots at the top right

- Click `Install from VSIX` from the menu and choose the VS Code extension file (`*.vsix`)

Choose at least one of the following language model providers:

- Ollama: Download Ollama from https://ollama.com/download, install it, and run `ollama serve` from Terminal

- OpenAI: setup link

- Azure OpenAI: setup link

- Google Gemini: setup link

- AWS Bedrock: Register an AWS account and set up `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` (you may also need `AWS_SESSION_TOKEN`, depending on your configuration) with `aws configure` (link), and then request model access.
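For the Ollama option, it can help to confirm that `ollama serve` is reachable and to see which models are installed before creating an agent. The sketch below is not part of AI Foundry. It assumes Ollama is listening on its default address, `http://localhost:11434`, and relies on Ollama's `GET /api/tags` endpoint, which lists locally available models. `FetchLike`, `modelNamesFromTags`, and `listOllamaModels` are hypothetical names introduced for illustration.

```typescript
// Assumption: Ollama is listening on its default address.
const OLLAMA_BASE_URL = "http://localhost:11434";

// Minimal shape of the fetch function this sketch needs (hypothetical type).
type FetchLike = (
  url: string
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Extracts model names from the JSON body returned by GET /api/tags.
// Returns an empty list if the body does not have the expected shape.
function modelNamesFromTags(body: unknown): string[] {
  if (typeof body !== "object" || body === null) {
    return [];
  }
  const models = (body as { models?: unknown }).models;
  if (!Array.isArray(models)) {
    return [];
  }
  const names: string[] = [];
  for (const model of models) {
    if (typeof model === "object" && model !== null) {
      const name = (model as { name?: unknown }).name;
      if (typeof name === "string") {
        names.push(name);
      }
    }
  }
  return names;
}

// Asks the local Ollama server which models are installed.
// Rejects if the server is unreachable or answers with a non-2xx status.
async function listOllamaModels(
  fetchImpl: FetchLike,
  baseUrl: string = OLLAMA_BASE_URL
): Promise<string[]> {
  const response = await fetchImpl(`${baseUrl}/api/tags`);
  if (!response.ok) {
    throw new Error(`Ollama responded with HTTP ${response.status}`);
  }
  return modelNamesFromTags(await response.json());
}
```

With Node.js 18 or later, the built-in `fetch` can be passed in directly, as in `listOllamaModels(fetch)`. If the call rejects, `ollama serve` is probably not running. If the returned list is empty, no models have been downloaded yet.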

Intro video:

- Hover over "AIF Agents" and click the three dots on the right

- Choose "Create" and input a name for your agent

- Choose "ollama://mistral" as base model

- Click "OK" for the next step

- Choose the newly created agent and then click "Playground"

- Type your questions and then wait for the LLM responses

- Go back to the agent menu and click the edit button (the pen icon on the right) to add a system prompt for the LLM

- Hover over "AIF Embeddings" and click the three dots on the right

- Choose "Create" and input a name for your documents

- Choose "ollama://mxbai-embed-large" as embedding model

- Choose one or more documents to search. Currently, AI Foundry only supports TXT files. You can add a restaurant menu, a resume, or any other documents you want to search.

- Create an agent by following the steps in the section "Basic LLM chat with Ollama", but also choose your documents' name from the list

- In "Playground", ask questions about your documents, and the LLM can answer them. For example, if you upload a hotel's check-in and check-out rules and then ask the LLM "I would like to check in on Sept 11 and stay for 2 nights, what date and time should I check out?", the LLM should be able to tell you the date and time based on the hotel rules in the document.

Download source code: https://github.com/YusakuNo1/AiFoundry

AI Foundry uses URIs as resource identifiers and as map keys. A typical AI Foundry URI has the form `[provider]://[category]/[path1]/[path2]/[path3]?[param1=value1]&[param2=value2]`. Concrete examples are `aif://agents/[agent-id]` and `azureopenai://models/gpt-4o-mini?version=2024-07-01-preview`.
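To make the URI layout concrete, here is a minimal sketch of a parser that splits such a URI into its provider, category, path segments, and query parameters. It is an illustration written against the format described above, not the parser AI Foundry actually uses. The `ParsedAifUri` interface and the `parseAifUri` function are hypothetical names.

```typescript
// Hypothetical result shape; not taken from the AI Foundry source code.
interface ParsedAifUri {
  provider: string; // e.g. "azureopenai"
  category: string; // e.g. "models"
  path: string[]; // e.g. ["gpt-4o-mini"]
  params: Record<string, string>; // e.g. { version: "2024-07-01-preview" }
}

// Splits "[provider]://[category]/[path...]?[key=value]&..." into its parts.
function parseAifUri(uri: string): ParsedAifUri {
  const match = /^([a-z][a-z0-9+.-]*):\/\/([^?]*)(?:\?(.*))?$/i.exec(uri);
  if (match === null) {
    throw new Error(`Not an AI Foundry style URI: ${uri}`);
  }
  const provider = match[1] ?? "";
  const rest = match[2] ?? "";
  const query = match[3] ?? "";

  // The first non-empty segment is treated as the category, the rest as the path.
  const segments = rest.split("/").filter((segment) => segment.length > 0);
  const category = segments[0];
  if (category === undefined) {
    throw new Error(`URI has no category: ${uri}`);
  }
  const path = segments.slice(1);

  // Parse "key=value" pairs; a key without "=" maps to an empty string.
  const params: Record<string, string> = {};
  for (const pair of query.split("&")) {
    if (pair === "") {
      continue;
    }
    const eq = pair.indexOf("=");
    const key = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : pair.slice(eq + 1);
    params[decodeURIComponent(key)] = decodeURIComponent(value);
  }

  return { provider, category, path, params };
}

const parsed = parseAifUri(
  "azureopenai://models/gpt-4o-mini?version=2024-07-01-preview"
);
console.log(parsed.provider, parsed.category, parsed.path, parsed.params);
```

Because the whole URI string is unique per resource, it can be used directly as a `Map` key, while the parsed form is convenient for routing on `provider` and `category`. For a short URI such as `ollama://mistral`, this sketch reports `mistral` as the category with an empty path. How AI Foundry itself interprets that form is not covered here.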

- In the AiFoundry folder, run `npm i`

- Start 3 different terminals to monitor code changes

  - In the AiFoundry folder, run `cd packages\vscode-shared && npm run watch` and keep this terminal open

  - In the AiFoundry folder, run `cd packages\vscode-ui && npm run watch` and keep this terminal open

  - In the AiFoundry folder, run `cd packages\server-js && npm run watch` and keep this terminal open

- Start VS Code extension debug mode

  - Launch a VS Code instance

  - Choose `Run and Debug` from the activity bar

  - Select the configuration `Launch AI Foundry`

- To debug the AI Foundry VS Code extension itself (Node.js code), use the original VS Code instance

- To debug the AI Foundry VS Code extension webview, use the new VS Code instance: open the inspector from the menu `Help -> Toggle Developer Tools`. It is the same inspector as in Chromium/Chrome/Edge, because VS Code is an Electron app.

- Download vsce from this special branch: https://github.com/YusakuNo1/vscode-vsce/tree/main.aifoundry

- In `vscode-vsce`, run the command `npm i && npm run compile`

- Update `packages/vscode/build.sh` with the path for `vscode-vsce`, e.g. if `vscode-vsce` is in `/Users/david/vscode-vsce`, set `VSCE_HOME=/Users/david/vscode-vsce`