example repository

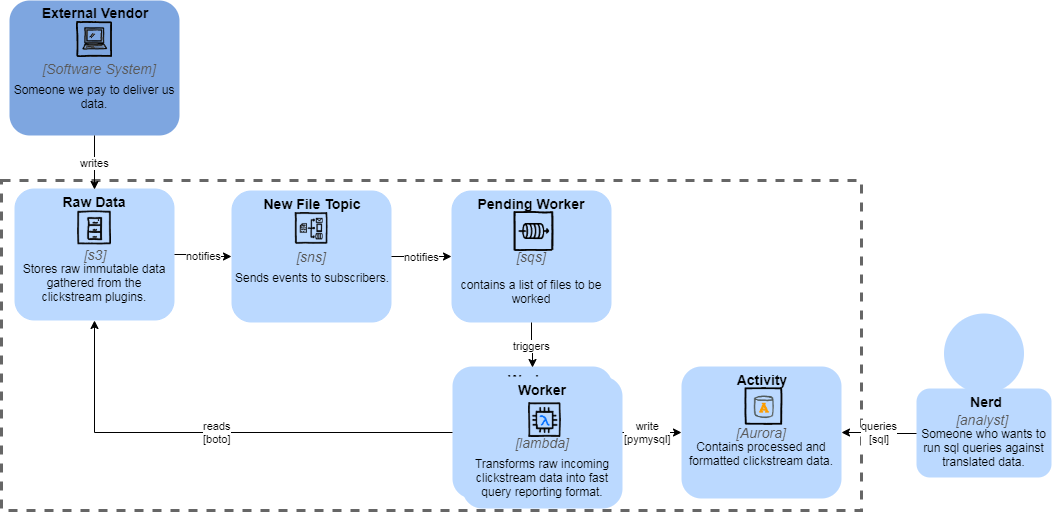

- C4 Diagrams

- System Requirements

- Running Local

- Test Overview

- Deploy Overview

- Contributing

- Adding a new Lambda

- Ubuntu 18.x

- python 3.7

python, not python3

- docker

- docker-compose

- awscli

Before running locally, ensure that the proper system requirements are met. Then,

make install

make shim

These will establish all dependencies for local runs.

Calling make run will rebuild the target script in the .build/ directory and execute its main.py with any provided run arguments.

make run FUNC=worker RUN_ARGS=' \

--read_queue=pending-worker \

--sqs_endpoint=http://localhost:4576 \

--db_host=127.0.0.1 \

--db_port=13306 \

--db_name=activity \

--db_user=root \

--db_pass=password \

'
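As a rough illustration of how the run arguments above might be consumed, here is a minimal sketch using argparse. The flag names come from the example command; the function names, the defaults, and which flags are required are assumptions, and the repository's actual main.py may differ.

```python
import argparse


def build_parser():
    """Build a parser for the flags shown in the make run example.

    Hypothetical: which flags are required and the defaults are assumptions.
    """
    parser = argparse.ArgumentParser(description="Hypothetical worker entry point")
    parser.add_argument("--read_queue", required=True, help="SQS queue to read from")
    parser.add_argument("--sqs_endpoint", default=None, help="SQS endpoint override for local runs")
    parser.add_argument("--db_host", default="127.0.0.1")
    parser.add_argument("--db_port", type=int, default=3306)
    parser.add_argument("--db_name", required=True)
    parser.add_argument("--db_user", required=True)
    parser.add_argument("--db_pass", required=True)
    return parser


def parse_run_args(argv):
    """Parse an explicit argument list instead of reading sys.argv."""
    return build_parser().parse_args(argv)


# Same values as the make run example above.
args = parse_run_args([
    "--read_queue=pending-worker",
    "--sqs_endpoint=http://localhost:4576",
    "--db_host=127.0.0.1",
    "--db_port=13306",
    "--db_name=activity",
    "--db_user=root",
    "--db_pass=password",
])
print(args.read_queue, args.db_host, args.db_port)
```

Parsing an explicit list keeps the function easy to call from unit tests without touching the process arguments.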

Before running tests, ensure that the proper system requirements are met.

Then, make install.

Unit tests can be called with make test.

Additionally, linting is available for both the business logic language and the IaC (Terraform) with make lint.

Both commands should be wired up in any CI/CD solution.

make test

make lint

Manual deploys are possible directly from the command line if the appropriate permissions are configured.

export AWS_ACCESS_KEY_ID=AAAAAAAABBBBBBBCCCCCC

export AWS_SECRET_ACCESS_KEY=******************************

export AWS_DEFAULT_REGION=us-west-2

export TF_VAR_rds_user=bot

export TF_VAR_rds_pass=password

make build TARGET=role ENV=lab

make build TARGET=network ENV=lab

make build TARGET=queue ENV=lab

make build TARGET=aurora ENV=lab

make build TARGET=layer ENV=lab

make build TARGET=lambda ENV=lab

make deploy TARGET=role ENV=lab

make deploy TARGET=network ENV=lab

make deploy TARGET=queue ENV=lab

make deploy TARGET=aurora ENV=lab

make deploy TARGET=layer ENV=lab

make deploy TARGET=lambda ENV=lab

These commands can be wired up to a CI/CD pipeline, with builds and deploys triggered by events the team specifies (on push, on merge to master, on tag, etc.).
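The build and deploy sequence listed above could be scripted for a pipeline step. The following is a minimal sketch, assuming the make targets behave as shown and that the listed order (all builds, then all deploys) matters. The helper names make_commands and run_all are hypothetical and not part of the repository.

```python
import subprocess

# Target order copied from the manual deploy commands above.
TARGETS = ["role", "network", "queue", "aurora", "layer", "lambda"]


def make_commands(env, targets=TARGETS):
    """Return the make invocations: every build first, then every deploy."""
    builds = [["make", "build", f"TARGET={t}", f"ENV={env}"] for t in targets]
    deploys = [["make", "deploy", f"TARGET={t}", f"ENV={env}"] for t in targets]
    return builds + deploys


def run_all(env, dry_run=True):
    """Print each command, or run it and stop at the first failure."""
    for cmd in make_commands(env):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(cmd, check=True)


# Dry run only: prints the twelve commands without executing them.
run_all("lab")
```

The dry-run default makes it possible to review the command list before anything touches a live environment.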

- Scripts

  - ./src/lambda, const ./config/{scriptFileName}.yml, and wrapper ./src/common/lambda_wrapper.py are copied into a build directory for zip archiving.

  - Terraform computes a unique hash of the resulting lambda zip(s), which serves as a way to diff changes.

  - Terraform will create/update the lambda function.

- Other

  - ./infra/* folders are examined by Terraform and compared against the running AWS environment.

  - When the .tf files differ from the live environment, Terraform will create/update/destroy live AWS resources.
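To make the zip-hash diffing idea concrete, here is a minimal sketch of a base64-encoded SHA-256 digest of an archive, which is the form produced by Terraform's filebase64sha256 function. Whether this repository uses that function is an assumption; the helper names and the worker.zip file name are hypothetical.

```python
import base64
import hashlib
import os
import tempfile


def base64_sha256(path, chunk_size=65536):
    """Return the base64-encoded SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


def has_changed(path, previous_hash):
    """True when the archive's current hash differs from the recorded one."""
    return base64_sha256(path) != previous_hash


# Demonstration with a stand-in file rather than a real lambda archive.
with tempfile.TemporaryDirectory() as tmp:
    archive = os.path.join(tmp, "worker.zip")  # hypothetical archive name
    with open(archive, "wb") as fh:
        fh.write(b"first build")
    first = base64_sha256(archive)
    unchanged = has_changed(archive, first)
    with open(archive, "wb") as fh:
        fh.write(b"second build")
    changed = has_changed(archive, first)

print(unchanged, changed)
```

Because the hash depends only on the archive bytes, an unchanged build yields the same value and gives Terraform nothing to update.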

When changing the system, whether adding a new method or updating an existing one:

- Tests should be invoked with make test after changes.

- A test runner can be activated with make watch.

- Run make lint before pushing and fix any hangups.

There are a few places that need to be touched in order to create a new function.

1. src/func/func_name.py

2. tests/func_name.py

3. infra/queue/func_name.tf

4. infra/lambda/func_name.tf

Once development is satisfactory:

5. make deploy TARGET=lambda ENV=lab

- or use CI/CD triggers
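The list of files to touch for a new function can be checked mechanically before deploying. This is a minimal sketch that assumes the path layout named above; required_paths and missing_paths are hypothetical helpers, not part of the repository.

```python
import tempfile
from pathlib import Path


def required_paths(func_name):
    """Return the four files a new function is expected to add."""
    return [
        Path("src/func") / f"{func_name}.py",
        Path("tests") / f"{func_name}.py",
        Path("infra/queue") / f"{func_name}.tf",
        Path("infra/lambda") / f"{func_name}.tf",
    ]


def missing_paths(func_name, root="."):
    """Return the expected files that do not exist under root."""
    return [p for p in required_paths(func_name) if not (Path(root) / p).exists()]


# Demonstration against an empty directory, so every file is reported missing.
with tempfile.TemporaryDirectory() as tmp:
    missing = missing_paths("worker", root=tmp)

for path in missing:
    print("missing:", path.as_posix())
```

Run against the repository root, an empty result would suggest the new function has all of its pieces in place.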

pyenv+awscli

curl -L https://github.com/pyenv/pyenv-installer/raw/master/bin/pyenv-installer | bash

...(configure your shell)...

pyenv virtualenv 3.7.0 example

pyenv activate example

make install

docker-compose

sudo curl -L "https://github.com/docker/compose/releases/download/1.22.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose \

&& sudo mv /usr/local/bin/docker-compose /usr/bin/docker-compose \

&& sudo chmod +x /usr/bin/docker-compose

- need to complete the ECR/ECS terraform example

- spellcheck

- show how to connect to the rds behind vpc

- likely jump host

- potentially show global state in example instead of local terraform state