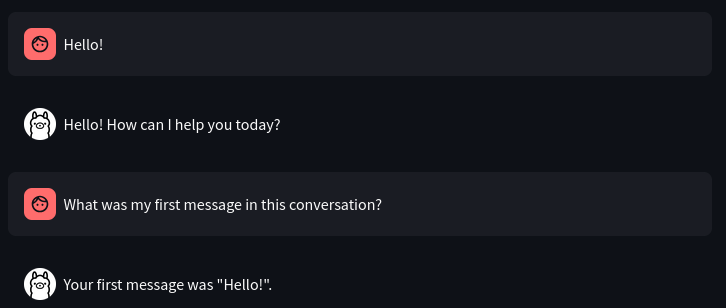

Llama-Lit is a Streamlit page that provides a front end for interacting with Ollama.

The project is still in development and needs some adjustments before it offers a good user experience.

The backend is built with the `ollama` Python library, and the frontend with Streamlit.
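To illustrate how the two parts can fit together, here is a minimal sketch of a Streamlit chat page that forwards prompts to Ollama through the `ollama` library. It is not the repository's `app.py`: the model name `llama3.1:latest`, the page title and the helper `add_message` are assumptions made for this example, and it expects the `streamlit` and `ollama` packages plus a running local Ollama server.

```python
"""Hypothetical sketch of a Streamlit chat page backed by Ollama.

This is NOT Llama-Lit's app.py. It assumes the `streamlit` and `ollama`
packages are installed, a local Ollama server is running, and the model
below has been pulled.
"""
import importlib.util

MODEL = "llama3.1:latest"  # assumption: the model pulled in step 1


def add_message(history, role, content):
    """Append one chat message in the {role, content} format ollama.chat() expects."""
    history.append({"role": role, "content": content})
    return history


def main():
    # Imported here so the helper above can be used without these packages.
    import ollama
    import streamlit as st

    st.title("Ollama chat (sketch)")

    # Streamlit reruns the whole script on every interaction, so the
    # conversation is kept in session_state between reruns.
    history = st.session_state.setdefault("history", [])

    # Redraw the conversation so far.
    for message in history:
        with st.chat_message(message["role"]):
            st.markdown(message["content"])

    prompt = st.chat_input("Ask the model something")
    if prompt:
        add_message(history, "user", prompt)
        with st.chat_message("user"):
            st.markdown(prompt)

        # Send the whole conversation so the model sees earlier turns.
        response = ollama.chat(model=MODEL, messages=history)
        answer = response["message"]["content"]

        add_message(history, "assistant", answer)
        with st.chat_message("assistant"):
            st.markdown(answer)


# `streamlit run` executes this file as __main__; the page is only built
# when both packages are importable.
if __name__ == "__main__" and all(
    importlib.util.find_spec(name) is not None for name in ("streamlit", "ollama")
):
    main()
```

Saved as, say, `chat_sketch.py` (a hypothetical file name), it would be started the same way as the real app, with `streamlit run chat_sketch.py`. Keeping the history in `st.session_state` is what lets the page remember earlier turns across Streamlit's reruns.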

To host this application yourself, install all requirements and start the script with `streamlit run app.py`.

Because Llama-Lit is not available on Streamlit Community Cloud, you need to run it on your own machine.

1. Install the AI model

```bash
ollama pull llama3.1:latest
```

> [!NOTE]
> Always check for the newest model to install.
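Before starting the app, you may want to confirm that the model was actually pulled. The sketch below asks the Ollama server which models it has locally through its `GET /api/tags` endpoint, using only the Python standard library. It assumes the server listens on Ollama's default address `http://localhost:11434`; `local_models`, `normalize` and `has_model` are hypothetical helper names. The `ollama list` command shows the same information on the command line.

```python
"""Hypothetical check that a model has been pulled into a local Ollama server."""
import http.client
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def local_models(base_url=OLLAMA_URL, timeout=3.0):
    """Return the names of locally available models, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError, http.client.HTTPException):
        # OSError covers refused connections and timeouts (URLError is a
        # subclass); ValueError covers a response that is not valid JSON.
        return None
    return [model["name"] for model in data.get("models", [])]


def normalize(name):
    """Ollama treats a model name without a tag as the ':latest' tag."""
    last_part = name.rsplit("/", 1)[-1]
    return name if ":" in last_part else f"{name}:latest"


def has_model(names, wanted):
    """True if `wanted` (with or without a tag) is among `names`."""
    return normalize(wanted) in {normalize(n) for n in names}


if __name__ == "__main__":
    models = local_models()
    if models is None:
        print("Ollama server not reachable; is it running?")
    elif has_model(models, "llama3.1:latest"):
        print("llama3.1:latest is installed.")
    else:
        print("Model missing; run: ollama pull llama3.1:latest")
```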

2. Install the required packages

```bash
pip install streamlit ollama
```

> [!IMPORTANT]
> On Windows, pip is installed automatically with Python.
>
> On other systems, such as Linux distributions, you may need to install pip separately.
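If you are unsure whether the tools used in these installation steps are in place, a small script like the following can report it. It is a hypothetical helper, not part of Llama-Lit: it uses `importlib.util.find_spec` to check whether the `pip`, `streamlit` and `ollama` modules can be imported by the current Python interpreter, and `shutil.which` to check whether the `ollama` and `git` commands are on your `PATH`.

```python
"""Hypothetical prerequisite check for running a Streamlit + Ollama app."""
import importlib.util
import shutil

PYTHON_MODULES = ("pip", "streamlit", "ollama")
COMMANDS = ("ollama", "git")  # the Ollama CLI (step 1) and git (step 3)


def check_prerequisites():
    """Map each requirement to True if it was found, else False."""
    results = {}
    for module in PYTHON_MODULES:
        # find_spec returns None when the module cannot be imported
        # by the interpreter running this script.
        results[f"python module '{module}'"] = importlib.util.find_spec(module) is not None
    for command in COMMANDS:
        # shutil.which returns None when the command is not on PATH.
        results[f"command '{command}'"] = shutil.which(command) is not None
    return results


if __name__ == "__main__":
    for requirement, found in check_prerequisites().items():
        print(f"{'OK     ' if found else 'MISSING'} {requirement}")
```

Run it with the same Python interpreter you intend to use for `streamlit run`, since installed packages are specific to each interpreter.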

3. Download this repository

Use the `cd` command to navigate to the parent folder in which the `llama-lit` folder containing the code should be stored.

Then run the following command to clone the repository onto your file system.

```bash
git clone https://github.com/Simoso68/llama-lit.git
```

After this, a new folder named `llama-lit` should exist in the parent directory you chose.

4. Change into the llama-lit directory

After cloning llama-lit, you can change into its folder from the chosen parent folder with:

```bash
cd llama-lit
```

5. Run Llama-Lit

From the llama-lit directory, run the following command to start the application:

```bash
streamlit run app.py
```

You should now have a working copy of Llama-Lit.

To start Llama-Lit later, change into your llama-lit directory and run the same command as in step 5 of the installation.

To stop the application, press Ctrl+C in the terminal where it is running.