fetchff is a high-level library that extends native fetch() with everything necessary and no extra overhead, so you can wrap and reuse common patterns and functionality in a simple, declarative manner. It is designed for high-throughput, high-performance applications.

Managing a multitude of API connections in large applications can also be complex, time-consuming and hard to scale. fetchff simplifies the process by offering a simple, declarative approach to API handling based on the Repository Pattern. It reduces the need for extensive setup, middlewares, hand-written retries, custom caching and heavy plugins, and lets developers focus on data handling and application logic.


Some of the challenges with native fetch() that fetchff solves:

- Error Status Handling: Fetch does not throw errors for HTTP error statuses, making it difficult to distinguish between successful and failed requests based on status codes alone.

- Error Visibility: Error responses with status codes like 404 or 500 are not automatically propagated as exceptions, which can lead to inconsistent error handling.

- No Built-in Retry Mechanism: Native fetch() lacks built-in support for retrying requests. Developers need to implement custom retry logic to handle transient errors or intermittent failures, which can be cumbersome and error-prone.

- Network Errors Handling: Native fetch() only rejects the Promise for network errors or failure to reach the server. Issues such as timeout errors or server unavailability do not trigger rejection by default, which can complicate error management.

- Limited Error Information: The error information provided by native fetch() is minimal, often leaving out details such as the request headers, status codes, or response bodies. This can make debugging more difficult, as there's limited visibility into what went wrong.

- Lack of Interceptors: Native fetch() does not provide a built-in mechanism for intercepting requests or responses. Developers need to manually manage request and response processing, which can lead to repetitive code and less maintainable solutions.

- No Built-in Caching: Native fetch() does not support caching of requests and responses. Implementing caching strategies requires additional code and management, potentially leading to inconsistencies and performance issues.
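To make the first two points concrete: a native fetch() call to an endpoint that answers 404 still resolves, and only a network failure rejects. The snippet below is a minimal sketch of what "rejecting erroneous status codes" means, not fetchff's implementation; fetchOrThrow and HttpError are hypothetical names, and the fetch implementation is injectable so the behavior can be checked without a network.

```javascript
// Hypothetical error type (not fetchff's ResponseError) carrying details
// that native fetch() leaves on the Response object.
class HttpError extends Error {
  constructor(response, url) {
    super(`Request to ${url} failed with status ${response.status}`);
    this.name = 'HttpError';
    this.status = response.status;
    this.statusText = response.statusText;
    this.url = url;
  }
}

// Hypothetical wrapper: native fetch() resolves for 404/500,
// so a non-ok response is turned into a rejection here.
// fetchImpl defaults to the global fetch() (browsers, Node.js 18+).
async function fetchOrThrow(url, init = {}, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url, init); // network failures still reject here
  if (!response.ok) {
    throw new HttpError(response, url);
  }
  return response;
}
```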

To address these challenges, fetchf() provides several enhancements:

- Consistent Error Handling:

  - In JavaScript, the native fetch() function does not reject the Promise for HTTP error statuses such as 404 (Not Found) or 500 (Internal Server Error). Instead, fetch() resolves the Promise with a Response object, where the ok property indicates the success of the request. If the request encounters a network error or fails due to other issues (e.g., server downtime), fetch() will reject the Promise.
  - fetchff aligns error handling with common practices and makes it easier to manage errors consistently by rejecting erroneous status codes.

- Enhanced Retry Mechanism:

  - Retry Configuration: You can configure the number of retries, the delay between retries, and exponential backoff for failed requests. This helps to handle transient errors effectively.
  - Custom Retry Logic: The shouldRetry asynchronous function allows for custom retry logic based on the error from response.error and the attempt count, providing flexibility to handle different types of failures.
  - Retry Conditions: Errors are only retried based on configurable retry conditions, such as specific HTTP status codes or error types.

- Improved Error Visibility:

  - Error Wrapping: createApiFetcher() and fetchf() wrap errors in a custom ResponseError class, which provides detailed information about the request and response. This makes debugging easier and improves visibility into what went wrong.

- Extended settings:

  - Check the Settings table for more information about all settings.
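A rough sketch of how retries, delay, exponential backoff and a shouldRetry hook can fit together. withRetry, retryDelay and the injectable sleep are hypothetical names chosen for illustration, not fetchff internals. The default delay of 1000 ms and backoff factor of 1.5 are assumptions picked to match the 1s → 1.5s → 2.25s progression documented for fast connections, and maxDelay caps the growth.

```javascript
// Hypothetical helper: wait before retry number `attempt` (0-based)
// is delay * backoff^attempt, capped at maxDelay.
function retryDelay(attempt, { delay = 1000, backoff = 1.5, maxDelay = 30000 } = {}) {
  return Math.min(delay * Math.pow(backoff, attempt), maxDelay);
}

const realSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical retry loop: calls `task` up to retries + 1 times.
// shouldRetry(error, attempt) may veto a retry; sleep is injectable for tests.
async function withRetry(task, options = {}) {
  const { retries = 0, shouldRetry = async () => true, sleep = realSleep } = options;

  for (let attempt = 0; ; attempt++) {
    try {
      return await task(attempt);
    } catch (error) {
      const canRetry = attempt < retries && (await shouldRetry(error, attempt));
      if (!canRetry) throw error;
      await sleep(retryDelay(attempt, options));
    }
  }
}
```

With delay: 1000 and backoff: 1.5, the waits between attempts are 1000, 1500 and 2250 ms.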

✅ Lightweight: Minimal code footprint of ~4KB gzipped for managing extensive APIs.

✅ High-Performance: Optimized for speed and efficiency, ensuring fast and reliable API interactions.

✅ Secure: Secure by default rather than "permissive by default", with built-in sanitization mechanisms.

✅ Immutable: Every request has its own instance.

✅ Isomorphic: Compatible with Node.js, Deno and modern browsers.

✅ Type Safe: Strongly typed and written in TypeScript.

✅ Scalable: Easily scales from a few calls to complex API networks with thousands of APIs.

✅ Tested: Battle tested in large projects, fully covered by unit tests.

✅ Customizable: Fully compatible with a wide range of configuration options, allowing for flexible and detailed request customization.

✅ Responsible Defaults: All settings are opt-in.

✅ Framework Independent: Pure JavaScript solution, compatible with any framework or library, both client and server side.

✅ Browser and Node.js 18+ Compatible: Works in both modern browsers and Node.js 18+ environments.

✅ Maintained: Publicly maintained on GitHub since 2021.

- Smart Retry Mechanism: Features exponential backoff for intelligent error handling and retry mechanisms.

- Request Deduplication: Set the time window during which identical requests are deduplicated (treated as the same request).

- Cache Management: Dynamically manage cache with configurable expiration, custom keys, and selective invalidation.

- Network Revalidation: Automatically revalidate data on window focus and network reconnection for fresh data.

- Dynamic URLs Support: Easily manage routes with dynamic parameters, such as /user/:userId.

- Error Handling: Flexible error management at both global and individual request levels.

- Request Cancellation: Utilizes AbortController to cancel previous requests automatically.

- Adaptive Timeouts: Smart timeout adjustment based on connection speed for optimal user experience.

- Fetching Strategies: Handle failed requests with various strategies: promise rejection, silent hang, soft fail, or default response.

- Requests Chaining: Easily chain multiple requests using promises for complex API interactions.

- Native fetch() Support: Utilizes the built-in fetch() API, providing a modern and native solution for making HTTP requests.

- Custom Interceptors: Includes onRequest, onResponse, and onError interceptors for flexible request and response handling.
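Request cancellation and timeouts in native fetch() both build on AbortController. As an illustration of the timeout idea only (fetchff's own logic is not shown here), the hypothetical fetchWithTimeout helper below aborts a single attempt after timeout milliseconds. The 30000 ms default mirrors the documented default for normal connections, and for simplicity the sketch replaces any signal the caller passes.

```javascript
// Hypothetical helper, not part of fetchff: abort one fetch() attempt
// after `timeout` ms (0 disables the timer). fetchImpl is injectable for tests.
async function fetchWithTimeout(url, { timeout = 30000, ...init } = {}, fetchImpl = globalThis.fetch) {
  const controller = new AbortController();
  const timer = timeout > 0 ? setTimeout(() => controller.abort(), timeout) : null;
  try {
    // Note: this sketch overrides any signal passed in `init`.
    return await fetchImpl(url, { ...init, signal: controller.signal });
  } finally {
    if (timer !== null) clearTimeout(timer); // avoid a dangling timer after success
  }
}
```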

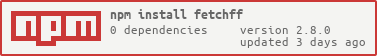

Using NPM:

```bash
npm install fetchff
```

Using Pnpm:

```bash
pnpm install fetchff
```

Using Yarn:

```bash
yarn add fetchff
```

Alias: fetchff(url, config)

A simple function that wraps the native fetch() and adds extra features like retries and better error handling. Use fetchf() directly for quick, enhanced requests - no need to set up createApiFetcher(). It works independently and is easy to use in any codebase.

```javascript
import { fetchf } from 'fetchff';

const { data, error } = await fetchf('/api/user-details', {
  timeout: 5000,
  cancellable: true,
  retry: { retries: 3, delay: 2000 },
  // Specify some other settings here... The fetch() settings work as well...
});
```

Returns the current global default configuration used for all requests. This is useful for inspecting or debugging the effective global settings.

```javascript
import { getDefaultConfig } from 'fetchff';

// Retrieve the current global default config
const config = getDefaultConfig();

console.log('Current global fetchff config:', config);
```

Allows you to globally override the default configuration for all requests. This is useful for setting application-wide defaults like timeouts, headers, or retry policies.

```javascript
import { fetchf, setDefaultConfig } from 'fetchff';

// Set global defaults for all requests
setDefaultConfig({
  timeout: 10000, // 10 seconds for all requests
  headers: {
    Authorization: 'Bearer your-token',
  },
  retry: {
    retries: 2,
    delay: 1500,
  },
});

// All subsequent requests will use these defaults
const { data } = await fetchf('/api/data'); // Uses 10s timeout and retry config
```

createApiFetcher() is a powerful factory function for creating API fetchers with advanced features. It provides a convenient way to configure and manage multiple API endpoints using a declarative approach, and it integrates retry mechanisms, improved error handling and all the other settings. Unlike traditional approaches, createApiFetcher() lets you set up and use API endpoints efficiently with minimal boilerplate code.

```javascript
import { createApiFetcher } from 'fetchff';

// Create some endpoints declaratively
const api = createApiFetcher({
  baseURL: 'https://example.com/api',
  endpoints: {
    getUser: {
      url: '/user-details/:id/',
      method: 'GET',
      // Each endpoint accepts all settings declaratively
      retry: { retries: 3, delay: 2000 },
      timeout: 5000,
      cancellable: true,
    },
    // Define more endpoints as needed
  },
  // You can set all settings globally
  strategy: 'softFail', // no try/catch required in case of errors
});

// Make a GET request to https://example.com/api/user-details/2/?rating[]=1&rating[]=2
const { data, error } = await api.getUser({
  params: { rating: [1, 2] }, // Passed arrays, objects etc. will be parsed automatically
  urlPathParams: { id: 2 }, // Replace :id with 2 in the URL
});
```

All the Request Settings can be used directly in the function as global settings for all endpoints. They can also be used within the endpoints property (on a per-endpoint basis). The exposed endpoints property is as follows:

endpoints (Type: EndpointsConfig<EndpointTypes>): List of your endpoints. Each endpoint is an object that accepts all the Request Settings (see the Basic Settings below). The endpoints are required to be specified.

The createApiFetcher() automatically creates and returns API methods based on the endpoints object provided. It also exposes some extra methods and properties that are useful to handle global config, dynamically add and remove endpoints etc.

Each endpoint is exposed as a method api.yourEndpoint(requestConfig), where yourEndpoint is the name of your endpoint, i.e. the key from the endpoints object passed to createApiFetcher().

requestConfig (optional, object): For more granular control over a specific endpoint, you can pass a Request Config for that particular call. Check Basic Settings below for more information.

Returns: Response Object (see below).
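The Repository Pattern idea behind createApiFetcher() can be pictured with a deliberately tiny factory. createTinyApi and fillPath below are hypothetical and far simpler than fetchff: each key of endpoints becomes a method, settings are merged on the assumption that more specific layers win (global, then endpoint, then request), and :name segments are replaced from urlPathParams. The fetcher is injected so nothing touches the network.

```javascript
// Hypothetical helper: replace ":name" segments with URL-encoded values.
// Unknown placeholders are left untouched.
function fillPath(url, urlPathParams = {}) {
  return url.replace(/:([A-Za-z0-9_]+)/g, (match, name) =>
    name in urlPathParams ? encodeURIComponent(String(urlPathParams[name])) : match,
  );
}

// Hypothetical mini factory (not fetchff's createApiFetcher): one method per endpoint key.
function createTinyApi({ endpoints, fetcher, ...globalConfig }) {
  const api = {};
  for (const [name, endpointConfig] of Object.entries(endpoints)) {
    api[name] = (requestConfig = {}) => {
      // Assumed precedence: global < endpoint < per-request.
      const config = { ...globalConfig, ...endpointConfig, ...requestConfig };
      const url = (config.baseURL || '') + fillPath(config.url, config.urlPathParams);
      return fetcher(url, config);
    };
  }
  return api;
}
```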

The api.request() helper function is a versatile method provided for making API requests with customizable configurations. It allows you to perform HTTP requests to any endpoint defined in your API setup and provides a straightforward way to handle queries, path parameters, and request configurations dynamically.

```javascript
import { createApiFetcher } from 'fetchff';

const api = createApiFetcher({
  apiUrl: 'https://example.com/api',
  endpoints: {
    updateUser: {
      url: '/update-user/:id',
      method: 'POST',
    },
    // Define more endpoints as needed
  },
});

// Using api.request to make a POST request
const { data, error } = await api.request('updateUser', {
  body: {
    name: 'John Doe', // Data Payload
  },
  urlPathParams: {
    id: '123', // URL Path Param :id will be replaced with 123
  },
});

// Using api.request to make a GET request to an external API
// (distinct names avoid redeclaring data/error in the same scope)
const { data: externalData, error: externalError } = await api.request(
  'https://example.com/api/user',
  {
    params: {
      name: 'John Smith', // Query Params
    },
  },
);
```

You can access the api.config property directly to modify global headers and other settings on the fly. This is a property, not a function.

You can access api.endpoints property directly to modify the endpoints list. This can be useful if you want to append or remove global endpoints. This is a property, not a function.


Programmatically update cached data without making a network request. Useful for optimistic updates or reflecting changes from other operations.

Parameters:

- key (string): The cache key to update
- newData (any): The new data to store in cache
- settings (object, optional): Configuration options
  - revalidate (boolean): Whether to trigger background revalidation after the update

```javascript
import { mutate } from 'fetchff';

// Update cache for a specific cache key
await mutate('/api/users', newUserData);

// Update with options
await mutate('/api/users', updatedData, {
  revalidate: true, // Trigger background revalidation
});
```

Directly retrieve cached data for a specific cache key. Useful for reading the current cached response without triggering a network request.

Parameters:

- key (string): The cache key to retrieve (equivalent to cacheKey from the request config or config.cacheKey from the response object)

Returns: The cached response object, or null if not found

```javascript
import { getCache } from 'fetchff';

// Get cached data for a specific key, assuming you set { cacheKey: '/api/user-profile' } in config
const cachedResponse = getCache('/api/user-profile');

if (cachedResponse) {
  console.log('Cached user profile:', cachedResponse.data);
}
```

Directly set cache data for a specific key. Unlike mutate(), this doesn't trigger revalidation by default. It is a low-level function for setting cache data for a particular key directly; if unsure, use mutate() with revalidate: false instead.

Parameters:

- key (string): The cache key to set. It must match the cache key of the request.
- response (any): The full response object to store in cache.
- ttl (number, optional): Time to live for the cache entry, in seconds. Determines how long the cached data remains valid before expiring. If not specified, the default value of 0 is used (the cache is discarded immediately); if -1 is specified, the cache is held until manually removed using the deleteCache(key) function.
- staleTime (number, optional): Duration, in seconds, for which cached data is considered "fresh" before it becomes eligible for background revalidation. If not specified, the default stale time applies.
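The ttl and staleTime rules above (0 discards immediately, -1 keeps the entry until it is deleted, staleTime marks when background revalidation becomes due) can be modelled with a small in-memory map. TinyCache is a hypothetical sketch, not fetchff's cache. Times are in seconds as in setCache(), the clock is injectable for testing, and treating a missing staleTime as "never stale" is an assumption of this sketch.

```javascript
// Hypothetical cache model, not fetchff's implementation.
class TinyCache {
  constructor(now = () => Date.now()) {
    this.now = now; // clock in milliseconds
    this.entries = new Map();
  }

  // ttl and staleTime in seconds; ttl 0 keeps nothing, ttl -1 never expires.
  set(key, response, ttl = 0, staleTime) {
    if (ttl === 0) {
      this.entries.delete(key);
      return;
    }
    const time = this.now();
    this.entries.set(key, {
      response,
      expiresAt: ttl === -1 ? Infinity : time + ttl * 1000,
      staleAt: staleTime === undefined ? Infinity : time + staleTime * 1000, // assumption
    });
  }

  // Returns { response, isStale } or null when missing or expired.
  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return null;
    const time = this.now();
    if (time >= entry.expiresAt) {
      this.entries.delete(key);
      return null;
    }
    return { response: entry.response, isStale: time >= entry.staleAt };
  }

  delete(key) {
    this.entries.delete(key);
  }
}
```

A caller seeing isStale: true would return the cached response right away and start a background revalidation.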

```javascript
import { setCache } from 'fetchff';

// Set cache data with custom ttl and staleTime
setCache('/api/user-profile', userData, 600, 60); // Cache for 10 minutes, fresh for 1 minute

// Set cache for specific endpoint infinitely
setCache('/api/user-settings', userSettings, -1);
```

Remove cached data for a specific cache key. Useful for cache invalidation when you know data is stale.

Parameters:

- key (string): The cache key to delete

```javascript
import { deleteCache } from 'fetchff';

// Delete specific cache entry
deleteCache('/api/user-profile');

// Delete cache after user logout
const logout = () => {
  deleteCache('/api/user/*'); // Delete all user-related cache
};
```

Manually trigger revalidation for a specific cache entry, forcing a fresh network request to update the cached data.

Parameters:

- key (string): The cache key to revalidate
- isStaleRevalidation (boolean, optional): Whether this is a background revalidation that doesn't mark the request as in-flight

```javascript
import { revalidate } from 'fetchff';

// Revalidate specific cache entry
await revalidate('/api/user-profile');

// Revalidate with custom cache key
await revalidate('custom-cache-key');

// Background revalidation (doesn't mark as in-flight)
await revalidate('/api/user-profile', true);
```

Trigger revalidation for all cache entries associated with a specific event type (focus or online).

Parameters:

- type (string): The revalidation event type ('focus' or 'online')
- isStaleRevalidation (boolean, optional): Whether this is a background revalidation

```javascript
import { revalidateAll } from 'fetchff';

// Manually trigger focus revalidation for all relevant entries
revalidateAll('focus');

// Manually trigger online revalidation for all relevant entries
revalidateAll('online');
```

Clean up revalidation event listeners for a specific event type. Useful for preventing memory leaks when you no longer need automatic revalidation.

Parameters:

- type (string): The revalidation event type to remove ('focus' or 'online')

```javascript
import { removeRevalidators } from 'fetchff';

// Remove all focus revalidation listeners
removeRevalidators('focus');

// Remove all online revalidation listeners
removeRevalidators('online');

// Typically called during cleanup
// e.g., in React useEffect cleanup or when unmounting components
```

Subscribe to cache updates and data changes. Receive notifications when specific cache entries are updated.

Parameters:

- key (string): The cache key to subscribe to
- callback (function): Function called when the cache is updated
  - response (any): The full response object

Returns: Function to unsubscribe from updates
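The subscription contract described here (one or more callbacks per cache key, plus a returned function that unsubscribes) is a classic publish/subscribe shape. A hypothetical sketch of it, where subscribeTo and notify are illustrative names rather than fetchff exports:

```javascript
// Hypothetical pub/sub registry, not fetchff's implementation.
const listeners = new Map(); // cache key -> Set of callbacks

function subscribeTo(key, callback) {
  if (!listeners.has(key)) listeners.set(key, new Set());
  listeners.get(key).add(callback);
  // The returned function removes exactly this callback.
  return () => {
    const set = listeners.get(key);
    if (!set) return;
    set.delete(callback);
    if (set.size === 0) listeners.delete(key); // drop empty keys to avoid leaks
  };
}

// Hypothetical: called whenever the cache entry for `key` changes.
function notify(key, response) {
  const set = listeners.get(key);
  if (set) for (const callback of set) callback(response);
}
```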

```javascript
import { subscribe } from 'fetchff';

// Subscribe to cache changes for a specific key
const unsubscribe = subscribe('/api/user-data', (response) => {
  console.log('Cache updated with response:', response);
  console.log('Response data:', response.data);
  console.log('Response status:', response.status);
});

// Clean up subscription when no longer needed
unsubscribe();
```

Programmatically abort in-flight requests for a specific cache key or URL pattern.

Parameters:

- key (string): The cache key or URL pattern to abort
- error (Error, optional): Custom error to throw for aborted requests

```javascript
import { abortRequest } from 'fetchff';

// Abort specific request by cache key
abortRequest('/api/slow-operation');

// Useful for cleanup when component unmounts or route changes
const cleanup = () => {
  abortRequest('/api/user-dashboard');
};
```

Check if the user is on a slow network connection (2G/3G). Useful for adapting application behavior based on connection speed.

Parameters: None

Returns: Boolean indicating if connection is slow

```javascript
import { isSlowConnection } from 'fetchff';

// Check connection speed and adapt behavior
if (isSlowConnection()) {
  console.log('User is on a slow connection');
  // Reduce image quality, disable auto-refresh, etc.
}

// Use in conditional logic
const shouldAutoRefresh = !isSlowConnection();
const imageQuality = isSlowConnection() ? 'low' : 'high';
```
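Browsers that implement the Network Information API expose navigator.connection.effectiveType with values such as 'slow-2g', '2g', '3g' and '4g'. The sketch below shows one plausible way to build a check like isSlowConnection() on top of it, combined with the adaptive timeout defaults from the Settings table (30 s normally, 60 s on 2G/3G). looksSlow and defaultTimeout are hypothetical names, this is not fetchff's source, and since effectiveType is missing in some browsers, a missing value is treated as "not slow".

```javascript
// Hypothetical sketch, not fetchff's isSlowConnection().
const SLOW_TYPES = new Set(['slow-2g', '2g', '3g']);

// nav defaults to the global navigator when one exists.
function looksSlow(nav = globalThis.navigator) {
  const type = nav && nav.connection && nav.connection.effectiveType;
  return SLOW_TYPES.has(type); // undefined (API unavailable) counts as not slow
}

// Adaptive default timeout in milliseconds, mirroring the Settings table values.
function defaultTimeout(nav) {
  return looksSlow(nav) ? 60000 : 30000;
}
```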

You can pass the settings:

- globally for all requests when calling createApiFetcher()
- on a per-endpoint basis, defined under endpoints in the global config when calling createApiFetcher()
- on a per-request basis when calling fetchf() (second argument of the function) or when calling api.yourEndpoint()

You can also use all native fetch() settings.

| Setting | Type | Default | Description |
|---|---|---|---|
| baseURL (alias: apiUrl) | string | undefined | Your API base URL. |
| url | string | undefined | URL path, e.g. /user-details/get |
| method | string | 'GET' | Default request method, e.g. GET, POST, DELETE, PUT. All methods are supported. |
| params | object / URLSearchParams / NameValuePair[] | undefined | Query parameters: key-value pairs added to the URL to send extra information with a request. If you pass an object, it is converted automatically. Nested objects, arrays and custom data structures are supported, similar to how jQuery serialized them. Values can be strings, numbers, or arrays; for example, { foo: [1, 2] } is serialized into foo[]=1&foo[]=2 in the URL. When using createApiFetcher(), pass params when calling your api.yourEndpoint() method. |
| body (alias: data) | object / string / FormData / URLSearchParams / Blob / ArrayBuffer / ReadableStream | undefined | The data sent with the request, such as JSON, text, or form data, included in the request payload for POST, PUT, or PATCH requests. |
| urlPathParams | object | undefined | Dynamically replaces segments of your URL with specific values in a clear and declarative manner. This is especially handy for constructing URLs with variable components or identifiers. For example, to update user details with a URL template like /user-details/update/:userId, urlPathParams: { userId: 123 } produces the URL /user-details/update/123. |
| flattenResponse | boolean | false | When set to true, flattens nested response data so you can access it directly instead of through response.data.data. It works only if the response structure includes a single data property. |
| select | (data: any) => any | undefined | Function to transform or select a subset of the response data before it is returned. It is called with the raw response data and should return the transformed data. Useful for mapping, picking, or shaping the response. |
| defaultResponse | any | null | Default response returned when there is no data or when the endpoint fails, depending on the chosen strategy. |
| withCredentials | boolean | false | Indicates whether credentials (such as cookies) should be included with the request. Equivalent to credentials: "include" in native fetch(). In Node.js, cookies are not managed automatically; use a fetch polyfill or library that supports cookies if needed. |
| timeout | number | 30000 / 60000 | Request timeout in milliseconds. The default is adaptive: 30 seconds (30000 ms) for normal connections and 60 seconds (60000 ms) on slow connections (2G/3G). The timeout applies to each individual request attempt, including retries and polling. 0 disables the timeout. |
| dedupeTime | number | 0 | Time window, in milliseconds, during which identical requests are deduplicated (treated as a single request). If set to 0, deduplication is disabled. |
| cacheTime | number | undefined | Duration, in seconds, for which a cache entry is considered "fresh". Once this time has passed, the entry is considered stale and may be refreshed with a new request. Set to -1 for an indefinite cache. No caching by default. |
| staleTime | number | undefined | Duration, in seconds, for which cached data is considered "fresh". During this period, cached data is returned immediately, but a background revalidation (network request) is triggered to update the cache. If set to 0, background revalidation is disabled and revalidation is triggered on every access. |
| refetchOnFocus | boolean | false | When set to true, automatically revalidates (refetches) data when the browser window regains focus. Note: this bypasses the cache and always makes a fresh network request, so users see the most up-to-date data when they return to your application from another tab or window. Particularly useful for applications that display real-time or frequently changing data, but use it judiciously to avoid unnecessary network traffic. |
| refetchOnReconnect | boolean | false | When set to true, automatically revalidates (refetches) data when the browser regains internet connectivity after being offline. This uses background revalidation to silently update data without showing loading states to users. Helps ensure your application displays fresh data after network interruptions. Works by listening to the browser's online event. |
| logger | Logger | null | A custom logger object used to log errors automatically. It should implement at least error and warn functions. |
| fetcher | CustomFetcher | undefined | A custom async fetcher function. By default, the native fetch() is used. If you use your own fetcher, default response parsing (e.g. the await response.json() call) is skipped, so your fetcher should return the response object or data directly. |
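The bracket convention used for params (arrays become foo[]=1&foo[]=2, nested objects become a[b]=1) can be sketched as a small recursive serializer. serializeParams is a hypothetical, simplified helper; fetchff's own serializer may differ in edge cases such as bracket encoding or null handling. Here brackets are left readable to match the URL shown in the createApiFetcher() example, undefined values are skipped and null becomes an empty value (both assumptions).

```javascript
// Hypothetical serializer, not fetchff's implementation.
// { foo: [1, 2] } -> "foo[]=1&foo[]=2", { a: { b: 1 } } -> "a[b]=1"
function serializeParams(params) {
  const parts = [];

  const add = (key, value) => {
    if (value === undefined) return; // assumption: skip missing values
    if (Array.isArray(value)) {
      for (const item of value) add(`${key}[]`, item);
    } else if (value !== null && typeof value === 'object') {
      for (const [k, v] of Object.entries(value)) add(`${key}[${k}]`, v);
    } else {
      // Percent-encode the key, then restore brackets for readability.
      const encodedKey = encodeURIComponent(key).replace(/%5B/g, '[').replace(/%5D/g, ']');
      parts.push(`${encodedKey}=${encodeURIComponent(value === null ? '' : String(value))}`);
    }
  };

  for (const [key, value] of Object.entries(params)) add(key, value);
  return parts.join('&');
}
```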

📋 Additional Settings Available:

The table above shows the most commonly used settings. Many more advanced configuration options are available and documented in their respective sections below, including:

- 🔄 Retry Mechanism: retries, delay, maxDelay, backoff, resetTimeout, retryOn, shouldRetry
- 📶 Polling Configuration: pollingInterval, pollingDelay, maxPollingAttempts, shouldStopPolling
- 🗄️ Cache Management: cacheKey, cacheBuster, skipCache, cacheErrors
- ✋ Request Cancellation: cancellable, rejectCancelled
- 🌀 Interceptors: onRequest, onResponse, onError, onRetry
- 🔍 Error Handling: strategy

Understanding the performance impact of different settings helps you optimize for your specific use case:

Minimize Network Requests:

```javascript
// Aggressive caching for static data
const staticConfig = {
  cacheTime: 3600, // 1 hour cache
  staleTime: 1800, // 30 minutes freshness
  dedupeTime: 10000, // 10 seconds deduplication
};

// Result: 90%+ reduction in network requests
```

Optimize for Mobile/Slow Connections:

```javascript
const mobileOptimized = {
  timeout: 60000, // Longer timeout for slow connections (auto-adaptive)
  retry: {
    retries: 5, // More retries for unreliable connections
    delay: 2000, // Longer initial delay (auto-adaptive)
    backoff: 2.0, // Aggressive backoff
  },
  cacheTime: 900, // Longer cache on mobile
};
```

Memory-Efficient (Low Cache):

```javascript
const memoryEfficient = {
  cacheTime: 60, // Short cache (1 minute)
  staleTime: undefined, // No stale-while-revalidate
  dedupeTime: 1000, // Short deduplication
};

// Pros: Low memory usage
// Cons: More network requests, slower perceived performance
```

Network-Efficient (High Cache):

```javascript
const networkEfficient = {
  cacheTime: 1800, // Long cache (30 minutes)
  staleTime: 300, // 5 minutes stale-while-revalidate
  dedupeTime: 5000, // Longer deduplication
};

// Pros: Fewer network requests, faster user experience
// Cons: Higher memory usage, potentially stale data
```

| Feature | Performance Impact | Best Use Case |
|---|---|---|
| Caching | ⬇️ 70-90% fewer requests | Static or semi-static data |
| Deduplication | ⬇️ 50-80% fewer concurrent requests | High-traffic applications |
| Stale-while-revalidate | ⬆️ 90% faster perceived loading | Dynamic data that tolerates brief staleness |
| Request cancellation | ⬇️ Reduced bandwidth waste | Search-as-you-type, rapid navigation |
| Retry mechanism | ⬆️ 95%+ success rate | Mission-critical operations |
| Polling | ⬆️ Real-time updates | Live data monitoring |
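Deduplication, as described for dedupeTime, means that identical requests issued within the time window share one in-flight promise instead of reaching the network twice. A hypothetical sketch follows. createDeduper is an illustrative name, the key would typically be the request's cache key, and forgetting an entry as soon as its request settles is a simplification of this sketch.

```javascript
// Hypothetical deduper, not fetchff's implementation.
function createDeduper(dedupeTime, now = () => Date.now()) {
  const inFlight = new Map(); // key -> { promise, startedAt }

  return function dedupe(key, run) {
    const existing = inFlight.get(key);
    if (dedupeTime > 0 && existing && now() - existing.startedAt < dedupeTime) {
      return existing.promise; // identical request inside the window: reuse it
    }
    const promise = Promise.resolve().then(run); // sync throws become rejections
    const entry = { promise, startedAt: now() };
    inFlight.set(key, entry);
    // Forget the entry once it settles, unless a newer request replaced it.
    const cleanup = () => {
      if (inFlight.get(key) === entry) inFlight.delete(key);
    };
    promise.then(cleanup, cleanup);
    return promise;
  };
}
```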

fetchff automatically adapts timeouts and retry delays based on connection speed:

```javascript
import { fetchf } from 'fetchff';

// Automatic adaptation (no configuration needed)
const adaptiveRequest = fetchf('/api/data');

// On fast connections (WiFi/4G):
// - timeout: 30 seconds
// - retry delay: 1 second → 1.5s → 2.25s...
// - max retry delay: 30 seconds

// On slow connections (2G/3G):
// - timeout: 60 seconds
// - retry delay: 2 seconds → 3s → 4.5s...
// - max retry delay: 60 seconds
```

Progressive Loading (Best UX):

```javascript
import { fetchf } from 'fetchff';

// Layer 1: Instant response with cache
const quickData = await fetchf('/api/summary', {
  cacheTime: 300,
  staleTime: 60,
});

// Layer 2: Background enhancement
fetchf('/api/detailed-data', {
  strategy: 'silent',
  cacheTime: 600,
  onResponse(response) {
    // updateUIWithDetailedData is your own UI update function
    updateUIWithDetailedData(response.data);
  },
});
```

Bandwidth-Conscious Loading:

```javascript
import { fetchf, isSlowConnection } from 'fetchff';

// Check connection before expensive operations
const loadUserDashboard = async () => {
  const isSlowConn = isSlowConnection();

  // Essential data always loads
  const userData = await fetchf('/api/user', {
    cacheTime: isSlowConn ? 600 : 300, // Longer cache on slow connections
  });

  // Optional data only on fast connections
  if (!isSlowConn) {
    fetchf('/api/user/analytics', { strategy: 'silent' });
    fetchf('/api/user/recommendations', { strategy: 'silent' });
  }
};
```

Track key metrics to optimize your settings:

```javascript
const performanceConfig = {
  onRequest(config) {
    console.time(`request-${config.url}`);
  },
  onResponse(response) {
    console.timeEnd(`request-${response.config.url}`);
    // Track cache hit rate
    if (response.fromCache) {
      incrementMetric('cache.hits');
    } else {
      incrementMetric('cache.misses');
    }
  },
  onError(error) {
    incrementMetric('requests.failed');
    console.warn('Request failed:', error.config.url, error.status);
  },
};
```

ℹ️ Note: This is just an example. You need to implement the incrementMetric function yourself to record or report performance metrics as needed in your application.


fetchff provides robust support for handling HTTP headers in your requests. You can configure and manipulate headers at both global and per-request levels. Here’s a detailed overview of how to work with headers using fetchff.

Note: Header keys are case-sensitive when specified in request objects. Ensure that the keys are provided in the correct case to avoid issues with header handling.

You can set default headers that will be included in all requests made with a specific createApiFetcher instance. This is useful for setting common headers like authentication tokens or content types.

```javascript
import { createApiFetcher } from 'fetchff';

const api = createApiFetcher({
  baseURL: 'https://api.example.com/',
  headers: {
    'Content-Type': 'application/json',
    Authorization: 'Bearer YOUR_TOKEN',
  },
  // other configurations
});
```

In addition to global default headers, you can also specify headers on a per-request basis. This allows you to override global headers or set specific headers for individual requests.

```javascript
import { fetchf } from 'fetchff';

// Example of making a GET request with custom headers
const { data } = await fetchf('https://api.example.com/endpoint', {
  headers: {
    Authorization: 'Bearer YOUR_ACCESS_TOKEN',
    'Custom-Header': 'CustomValue',
  },
});
```

fetchff automatically injects a set of default headers into every request. These default headers help ensure that requests are consistent and include the information the server needs to process them correctly.

- Accept: application/json, text/plain, */*

  Indicates the media types that the client is willing to receive from the server. This includes JSON, plain text, and any other types.

- Accept-Encoding: gzip, deflate, br

  Specifies the content encodings that the client can understand, including gzip, deflate, and Brotli compression.

⚠️ Accept-Encoding in Node.js:

In Node.js, decompression is handled by the fetch implementation, and users should ensure their environment supports the encodings.

- Content-Type:

  Set automatically based on the request body type:

  - For JSON-serializable bodies (objects, arrays, etc.): application/json; charset=utf-8
  - For URLSearchParams: application/x-www-form-urlencoded
  - For ArrayBuffer/typed arrays: application/octet-stream
  - For FormData, Blob, File, or ReadableStream: not set, as the header is handled automatically by the browser and by Node.js 18+ native fetch.

  The Content-Type header is never overridden if you set it manually.

Summary:

You only need to set headers manually if you want to override these defaults. Otherwise, fetchff will handle the correct headers for most use cases, including advanced scenarios like file uploads, form submissions, and binary data.
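The Content-Type rules listed above boil down to a small decision function. inferContentType is a hypothetical sketch, not fetchff's code. It leaves plain strings alone so native fetch() can apply its own text/plain default (an assumption, since the list does not mention strings), and it checks for a manual Content-Type case-insensitively for convenience, even though fetchff treats header keys case-sensitively. It relies on the URLSearchParams, FormData, Blob and ReadableStream globals of browsers and Node.js 18+.

```javascript
// Hypothetical sketch: returns the Content-Type to add, or undefined when none should be set.
function inferContentType(body, headers = {}) {
  // A manually set header always wins (checked case-insensitively in this sketch).
  const hasManual = Object.keys(headers).some((k) => k.toLowerCase() === 'content-type');
  if (hasManual || body === undefined || body === null) return undefined;

  if (body instanceof URLSearchParams) return 'application/x-www-form-urlencoded';
  if (body instanceof ArrayBuffer || ArrayBuffer.isView(body)) return 'application/octet-stream';
  // Let fetch() handle these itself (multipart boundaries, blob types, streams).
  if (body instanceof FormData || body instanceof Blob) return undefined;
  if (typeof ReadableStream !== 'undefined' && body instanceof ReadableStream) return undefined;
  if (typeof body === 'string') return undefined; // assumption: fetch() defaults to text/plain

  return 'application/json; charset=utf-8'; // plain objects, arrays, numbers, booleans
}
```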


Interceptor functions can be provided to customize the behavior of requests and responses. These functions are invoked at different stages of the request lifecycle and allow for flexible handling of requests, responses, and errors.

```javascript
import { fetchf } from 'fetchff';

const { data } = await fetchf('https://api.example.com/', {
  onRequest(config) {
    // Add a custom header before sending the request
    config.headers['Authorization'] = 'Bearer your-token';
  },
  onResponse(response) {
    // Log the response status
    console.log(`Response Status: ${response.status}`);
  },
  onError(error, config) {
    // Handle errors and log the request config
    console.error('Request failed:', error);
    console.error('Request config:', config);
  },
  onRetry(response, attempt) {
    // Log retry attempts for monitoring and debugging
    console.warn(
      `Retrying request (attempt ${attempt + 1}):`,
      response.config.url,
    );
    // Modify config for the upcoming retry request
    response.config.headers['Authorization'] = 'Bearer your-new-token';
    // Log error details for failed attempts
    if (response.error) {
      console.warn(
        `Retry reason: ${response.error.status} - ${response.error.statusText}`,
      );
    }
    // You can implement custom retry logic or monitoring here
    // For example, send retry metrics to your analytics service
  },
  retry: {
    retries: 3,
    delay: 1000,
    backoff: 1.5,
  },
});
```

The following options are available for configuring interceptors in the fetchff settings:

- onRequest(config) => config:
  Type: RequestInterceptor | RequestInterceptor[]
  A function or an array of functions invoked before a request is sent. Each function receives the request configuration object as its argument, allowing you to modify request parameters, headers, or other settings.
  Default: undefined (no modification).

- onResponse(response) => response:
  Type: ResponseInterceptor | ResponseInterceptor[]
  A function or an array of functions invoked when a response is received. Each function receives the full response object, enabling you to process the response, handle status codes, or parse data as needed.
  Default: undefined (no modification).

- onError(error) => error:
  Type: ErrorInterceptor | ErrorInterceptor[]
  A function or an array of functions that handle errors when a request fails. Each function receives the error and request configuration as arguments, allowing you to implement custom error handling logic or logging.
  Default: undefined (no modification).

- onRetry(response, attempt) => response:
  Type: RetryInterceptor | RetryInterceptor[]
  A function or an array of functions invoked before each retry attempt. Each function receives the response object (containing error information) and the current attempt number as arguments, allowing you to implement custom retry logging, monitoring, or conditional retry logic.
  Default: undefined (no retry interception).

All interceptors are asynchronous and can modify the provided config or response objects. You don't have to return a value, but if you do, any returned properties will be merged into the original argument.
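The merge behaviour described above can be pictured with a minimal sketch. The helper applyInterceptors and the DemoConfig type are hypothetical and exist only for illustration. They are not fetchff's internal code. The sketch assumes each interceptor may mutate its argument in place, return a partial object whose properties are shallow-merged back, or do both.

```typescript
// Hypothetical interceptor shape: may mutate `target` in place and/or return
// (synchronously or asynchronously) a partial object to merge back into it.
type Interceptor<T extends object> = (
  target: T,
) => Partial<T> | void | Promise<Partial<T> | void>;

// Runs interceptors sequentially, awaiting each one, and shallow-merges any
// returned object into the original argument (illustrative only).
async function applyInterceptors<T extends object>(
  target: T,
  interceptors?: Interceptor<T> | Interceptor<T>[],
): Promise<T> {
  if (!interceptors) return target;
  const list = Array.isArray(interceptors) ? interceptors : [interceptors];
  for (const interceptor of list) {
    const result: unknown = await interceptor(target);
    if (result !== null && typeof result === 'object') {
      Object.assign(target, result); // returned properties overwrite existing ones
    }
  }
  return target;
}

// Hypothetical config type for the usage example.
interface DemoConfig {
  url: string;
  headers: Record<string, string>;
  timeout?: number;
}

const demoConfig: DemoConfig = { url: '/api/user', headers: {} };

const ready = applyInterceptors<DemoConfig>(demoConfig, [
  (config) => {
    config.headers['Authorization'] = 'Bearer your-token'; // in-place change
  },
  async () => ({ timeout: 5000 }), // returned properties are merged
]);
```

Because the same object is passed down the chain, each interceptor sees the changes made by the ones before it.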

fetchff follows specific execution patterns for interceptor chains:

Request interceptors execute in the order they are defined - from global to specific:

// Execution order: 1 → 2 → 3
const api = createApiFetcher({
  onRequest: (config) => {
    /* 1. Global interceptor */
  },
  endpoints: {
    getData: {
      onRequest: (config) => {
        /* 2. Endpoint interceptor */
      },
    },
  },
});

await api.getData({
  onRequest: (config) => {
    /* 3. Request interceptor */
  },
});

Response interceptors execute in reverse order, from specific to global:

// Execution order: 3 → 2 → 1
const api = createApiFetcher({
  onResponse: (response) => {
    /* 3. Global interceptor (executes last) */
  },
  endpoints: {
    getData: {
      onResponse: (response) => {
        /* 2. Endpoint interceptor */
      },
    },
  },
});

await api.getData({
  onResponse: (response) => {
    /* 1. Request interceptor (executes first) */
  },
});

This pattern ensures that:

- Request interceptors can progressively enhance configuration from general to specific

- Response interceptors can process data from specific to general, allowing request-level interceptors to handle the response first before global cleanup or logging
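The two orderings can be modelled in a few lines. The names Hook, HookSources, requestChain and responseChain are hypothetical and chosen for this sketch. It only shows how one list of global, endpoint and per-request hooks produces both execution orders. It is not how fetchff stores interceptors internally.

```typescript
// Hypothetical hook type used only for this illustration.
type Hook = (value: unknown) => void;

// The three places an interceptor can be defined in the examples above.
interface HookSources {
  global?: Hook;
  endpoint?: Hook;
  request?: Hook;
}

// Request chain: general to specific (global → endpoint → request).
function requestChain(sources: HookSources): Hook[] {
  return [sources.global, sources.endpoint, sources.request].filter(
    (hook): hook is Hook => typeof hook === 'function',
  );
}

// Response chain: the same hooks in reverse (request → endpoint → global).
// filter() already returned a fresh array, so reversing it in place is safe.
function responseChain(sources: HookSources): Hook[] {
  return requestChain(sources).reverse();
}

// Usage: record the order in which hooks fire.
const fired: string[] = [];
const named = (name: string): Hook => () => {
  fired.push(name);
};
const sources: HookSources = {
  global: named('global'),
  endpoint: named('endpoint'),
  request: named('request'),
};

requestChain(sources).forEach((hook) => hook(undefined));
// fired is now ['global', 'endpoint', 'request']
```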

- Request Interception:
  Before a request is sent, the onRequest interceptors are invoked. They can modify the request configuration, for example by adding headers or changing request parameters.

- Response Interception:
  Once a response is received, the onResponse interceptors are called. They let you handle the response data, process status codes, or transform the response before it is returned to the caller.

- Error Interception:
  If a request fails, the onError interceptors are triggered. They provide a way to handle errors, such as logging or retrying requests, based on the error and the request configuration.

- Custom Handling:
  Each interceptor function gives you a flexible way to customize request and response behavior. You can use them to integrate with other systems, handle specific cases, or modify requests and responses as needed.


fetchff provides intelligent network revalidation features that automatically keep your data fresh based on user interactions and network connectivity. These features help ensure users always see up-to-date information without manual intervention.

When refetchOnFocus is enabled, requests are automatically triggered when the browser window regains focus (e.g., when users switch back to your tab).

const { data } = await fetchf('/api/user-profile', {
  refetchOnFocus: true, // Revalidate when window gains focus
  cacheTime: 300, // Cache for 5 minutes, but still revalidate on focus
});

The refetchOnReconnect feature automatically revalidates data when the browser detects that internet connectivity has been restored after being offline.

const { data } = await fetchf('/api/notifications', {
  refetchOnReconnect: true, // Revalidate when network reconnects
  cacheTime: 600, // Cache for 10 minutes, but revalidate when back online
});

fetchff automatically adjusts request timeouts based on connection speed to provide the best possible user experience:

import { fetchf, isSlowConnection } from 'fetchff';

// Automatically uses:
// - 30 seconds timeout on normal connections
// - 60 seconds timeout on slow connections (2G/3G)
const { data } = await fetchf('/api/data');

// You can still override with a custom timeout
const { data: customTimeout } = await fetchf('/api/data', {
  timeout: 10000, // Force 10 seconds regardless of connection speed
});

// Check connection speed manually
if (isSlowConnection()) {
  console.log('User is on a slow connection');
  // Adjust your app behavior accordingly
}

- Event Listeners: fetchff automatically attaches global event listeners for the focus and online events when needed.
- Background Revalidation: Network revalidation uses background requests that don't show loading states to users.
- Automatic Cleanup: Event listeners are properly managed and cleaned up to prevent memory leaks.
- Smart Caching: Revalidation works alongside caching; fresh data updates the cache for future requests.
- Stale-While-Revalidate: Use staleTime to control when background revalidation happens automatically.
- Connection Awareness: Connection speed is detected automatically and timeouts are adjusted for better reliability.
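This README does not show how fetchff manages its listeners internally. The following is a hypothetical sketch of the pattern the list describes: one shared listener per event type, fanning out to every registered revalidator, and removed once the last revalidator unsubscribes. The name createRevalidationRegistry is invented for illustration. In a browser you would pass window and subscribe to 'focus' or 'online'.

```typescript
// Hypothetical sketch, not fetchff's implementation.
type Revalidator = () => void;

function createRevalidationRegistry(target: EventTarget) {
  const handlers = new Map<string, Set<Revalidator>>();
  const listeners = new Map<string, EventListener>();

  function subscribe(eventType: string, revalidate: Revalidator): () => void {
    let set = handlers.get(eventType);
    if (!set) {
      // First subscriber for this event: attach a single shared listener.
      set = new Set<Revalidator>();
      handlers.set(eventType, set);
      const listener: EventListener = () => {
        handlers.get(eventType)?.forEach((fn) => fn());
      };
      listeners.set(eventType, listener);
      target.addEventListener(eventType, listener);
    }
    set.add(revalidate);

    // Unsubscribe; detach the shared listener when no revalidators are left.
    return () => {
      const current = handlers.get(eventType);
      if (!current) return;
      current.delete(revalidate);
      if (current.size === 0) {
        handlers.delete(eventType);
        const listener = listeners.get(eventType);
        if (listener) target.removeEventListener(eventType, listener);
        listeners.delete(eventType);
      }
    };
  }

  return { subscribe, listenerCount: () => listeners.size };
}
```

Sharing one listener per event keeps the number of DOM listeners constant no matter how many requests opt in to refetchOnFocus or refetchOnReconnect.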

Both revalidation features can be configured globally or per-request, and work seamlessly with cache timing:

import { createApiFetcher } from 'fetchff';

const api = createApiFetcher({
  baseURL: 'https://api.example.com',

  // Global settings apply to all endpoints
  refetchOnFocus: true,
  refetchOnReconnect: true,
  cacheTime: 300, // Cache for 5 minutes
  staleTime: 60, // Consider fresh for 1 minute, then background revalidate

  endpoints: {
    getCriticalData: {
      url: '/critical-data',
      // Endpoint-level settings override the global ones
      refetchOnFocus: true,
      refetchOnReconnect: true,
      staleTime: 30, // More aggressive background revalidation for critical data
    },

    getStaticData: {
      url: '/static-data',
      // Disable focus and reconnect revalidation for static data
      refetchOnFocus: false,
      refetchOnReconnect: false,
      staleTime: 3600, // Background revalidate after 1 hour
    },
  },
});

Focus Revalidation is ideal for:

- Real-time dashboards and analytics

- Social media feeds and chat applications

- Financial data and trading platforms

- Any data that changes frequently while users are away

Reconnection Revalidation is perfect for:

- Mobile applications with intermittent connectivity

- Offline-capable applications

- Critical data that must be current when online

- Applications used in areas with unstable internet

- Combine with appropriate cache and stale times:

  const { data } = await fetchf('/api/live-data', {
    cacheTime: 300, // Cache for 5 minutes
    staleTime: 30, // Consider fresh for 30 seconds
    refetchOnFocus: true, // Also revalidate on focus
    refetchOnReconnect: true,
  });

- Use staleTime for automatic background updates, so data stays fresh without user interaction:

  // Good: Automatic background revalidation for dynamic data
  const { data: notifications } = await fetchf('/api/notifications', {
    cacheTime: 600, // Cache for 10 minutes
    staleTime: 60, // Background revalidate after 1 minute
    refetchOnFocus: true,
  });

  // Good: Less frequent updates for semi-static data
  const { data: userProfile } = await fetchf('/api/profile', {
    cacheTime: 1800, // Cache for 30 minutes
    staleTime: 600, // Background revalidate after 10 minutes
    refetchOnReconnect: true,
  });

- Use selectively. Don't enable revalidation for all requests, to avoid unnecessary network traffic:

  // Good: Enable for critical, changing data
  const { data: userNotifications } = await fetchf('/api/notifications', {
    refetchOnFocus: true,
    refetchOnReconnect: true,
  });

  // Avoid: Don't enable for static configuration data
  const { data: appConfig } = await fetchf('/api/config', {
    cacheTime: 3600, // Cache for 1 hour
    staleTime: 0, // Disable background revalidation
    refetchOnFocus: false,
    refetchOnReconnect: false,
  });

- Consider user experience. Network revalidation happens silently in the background, providing a smooth UX without loading spinners.

⚠️ Browser Support: These features work in all modern browsers that support the focus and online events. In server-side environments (Node.js), these options are safely ignored.


The caching mechanism in fetchf() and createApiFetcher() enhances performance by reducing redundant network requests and reusing previously fetched data when appropriate. This system ensures that cached responses are managed efficiently and only used when considered "fresh". Below is a breakdown of the key parameters that control caching behavior and their default values.

⚠️ When using in Node.js:

Cache and deduplication are in-memory and per-process. For distributed or serverless environments, consider external caching if persistence is needed.

import { fetchf } from 'fetchff';

const { data } = await fetchf('https://api.example.com/', {
  cacheTime: 300, // Cache is valid for 5 minutes, set -1 for indefinite cache. By default no cache.
  cacheKey: (config) => `${config.url}-${config.method}`, // Custom cache key based on URL and method, default automatically generated
  cacheBuster: (config) => config.method === 'POST', // Bust cache for POST requests, by default no busting.
  skipCache: (response, config) => response.status !== 200, // Skip caching on non-200 responses, by default no skipping
  cacheErrors: false, // Set to true to cache error responses as well as successful ones (default: false)
  staleTime: 600, // Data is considered fresh for 10 minutes before background revalidation (0 by default, meaning no background revalidation)
});

The caching system can be fine-tuned using the following options:

- cacheTime:
  Type: number
  Specifies the duration, in seconds, for which a cache entry is considered "fresh". Once this time has passed, the entry is considered stale and may be refreshed with a new request. Set to -1 for an indefinite cache.
  Default: undefined (no caching).

- cacheKey:
  Type: CacheKeyFunction | string
  A string or function used to generate a custom key for the request cache, deduplication, and similar features. If not provided, a default key is created by hashing various parts of the request, including the method, URL, query parameters, and headers. Providing a string can greatly improve request performance and help avoid unnecessary request flooding. You can provide either:
  - A string: used directly as the cache key, so all requests that use the same string share one cache entry.
  - A function: receives the full request config as an argument and should return a unique string key. This lets you include any relevant part of the request (such as URL, method, params, body, or custom logic) in the cache key.
  Example:
    cacheKey: (config) => `${config.method}:${config.url}:${JSON.stringify(config.params)}`,
  This flexibility lets you control cache granularity, whether you want to cache per endpoint, per user, or based on any other criteria.
  Default: Auto-generated based on request properties (see below).

- cacheBuster:
  Type: CacheBusterFunction
  A function that invalidates or refreshes the cache under certain conditions, such as specific request methods or response properties. This is useful for ensuring that certain requests (e.g., POST) bypass the cache.
  Default: (config) => false (no cache busting).

- skipCache:
  Type: CacheSkipFunction
  A function that decides, based on the response, whether caching should be skipped. This gives fine-grained control over which responses are cached, for example skipping non-200 responses.
  Default: (response, config) => false (no skipping).

- cacheErrors:
  Type: boolean
  Determines whether error responses (such as HTTP 4xx or 5xx) are also cached. If set to true, both successful and error responses are stored in the cache. If false, only successful responses are cached.
  Default: false.

- staleTime:
  Specifies the time in seconds during which cached data is considered "fresh" before it becomes stale and triggers background revalidation (SWR: stale-while-revalidate).
  - Set to a number greater than 0 to enable SWR: cached data is served instantly, and a background request updates the cache after this period.
  - Set to 0 to treat data as stale immediately (always eligible for refetch).
  - Set to undefined to disable SWR: data is never considered stale and background revalidation is not performed.
  Default: undefined (SWR disabled, data is never considered stale), or 300 (5 minutes) in framework integrations such as React.
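To make the interplay of cacheTime and staleTime concrete, here is a sketch that classifies a cache entry by its age. The classifyEntry helper is hypothetical. It follows the rules in the option list above: durations are in seconds, cacheTime -1 means no expiry, staleTime undefined disables SWR, and staleTime 0 means stale immediately. It is not taken from fetchff's source.

```typescript
type Freshness = 'fresh' | 'stale' | 'expired';

// Classifies a cached entry by age, following the option descriptions above.
// All durations are in seconds; ageSeconds is the time since the entry was stored.
function classifyEntry(
  ageSeconds: number,
  cacheTime: number | undefined,
  staleTime: number | undefined,
): Freshness {
  // No cacheTime means nothing is cached, so the entry is unusable.
  if (cacheTime === undefined) return 'expired';
  // -1 keeps the entry indefinitely; otherwise it expires after cacheTime.
  if (cacheTime !== -1 && ageSeconds >= cacheTime) return 'expired';
  // Without staleTime, a cached entry is never considered stale (SWR disabled).
  if (staleTime === undefined) return 'fresh';
  // Stale entries are still served, but trigger a background revalidation.
  return ageSeconds >= staleTime ? 'stale' : 'fresh';
}

classifyEntry(0, 600, 120); // 'fresh': served from cache
classifyEntry(150, 600, 120); // 'stale': served from cache + background request
classifyEntry(650, 600, 120); // 'expired': new network request
```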

- Cache Lookup:
  When a request is made, fetchff first checks the internal cache for a matching entry using the generated cache key. If a valid, "fresh" entry exists (within cacheTime), the cached response is returned immediately. If the native fetch() option cache: 'reload' is set, the internal cache is bypassed and a fresh request is made.

- Cache Key Generation:
  Each request is uniquely identified by a cache key, which is auto-generated from the URL, method, params, headers, and other relevant options. You can override this by providing a custom cacheKey string or function for fine-grained cache control.

- Cache Busting:
  If a cacheBuster function is provided, it determines whether to invalidate (bust) the cache for a given request. This is useful for scenarios like forcing fresh data on POST requests or after certain actions.

- Conditional Caching:
  The skipCache function lets you decide, per response, whether it should be stored in the cache. For example, you can skip caching for error responses (like HTTP 4xx/5xx) or based on custom logic.

- Network Request and Cache Update:
  If no valid cache entry is found, or if caching is skipped or busted, the request is sent to the network. The response is then cached according to your configuration, making it available for future requests.
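The lookup, bust, and skip steps above can be sketched as a tiny in-memory cache. Everything here is hypothetical and written only to illustrate the flow: cachedRequest, the store map, and the injected send and now functions. It leaves out staleTime, deduplication, and the cache: 'reload' bypass.

```typescript
// Hypothetical minimal cache illustrating the lookup → bust → network → skip flow.
interface RequestConfig {
  url: string;
  method: string;
}

interface CachedResponse {
  status: number;
  data: unknown;
}

interface CacheOptions {
  cacheTime: number; // seconds; -1 = indefinite
  cacheBuster?: (config: RequestConfig) => boolean;
  skipCache?: (response: CachedResponse, config: RequestConfig) => boolean;
}

const store = new Map<string, { response: CachedResponse; storedAt: number }>();

async function cachedRequest(
  key: string,
  config: RequestConfig,
  options: CacheOptions,
  send: (config: RequestConfig) => Promise<CachedResponse>,
  now: () => number = Date.now,
): Promise<CachedResponse> {
  // 1. Cache lookup, unless the buster says this request must bypass the cache.
  const busted = options.cacheBuster?.(config) ?? false;
  const entry = store.get(key);
  if (!busted && entry) {
    const ageSeconds = (now() - entry.storedAt) / 1000;
    if (options.cacheTime === -1 || ageSeconds < options.cacheTime) {
      return entry.response; // cache hit
    }
  }

  // 2. Network request.
  const response = await send(config);

  // 3. Conditional caching: store unless skipCache says otherwise.
  if (!options.skipCache?.(response, config)) {
    store.set(key, { response, storedAt: now() });
  }
  return response;
}
```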


Understanding how caching works together with request deduplication is crucial for optimal performance:

// Multiple components requesting the same data
const userProfile1 = useFetcher('/api/user/123', { cacheTime: 300 });
const userProfile2 = useFetcher('/api/user/123', { cacheTime: 300 });
const userProfile3 = useFetcher('/api/user/123', { cacheTime: 300 });

// Flow:
// 1. First request checks cache → cache miss → network request initiated
// 2. Second request checks cache → cache miss → joins in-flight request (deduplication)
// 3. Third request checks cache → cache miss → joins in-flight request (deduplication)
// 4. When the network response arrives → cache is populated → all requests receive the same data

// First request (cache miss - goes to network)
const request1 = fetchf('/api/data', { cacheTime: 300, dedupeTime: 5000 });

// After 2 seconds - cache hit, assuming the first request has completed (no deduplication needed)
setTimeout(() => {
  const request2 = fetchf('/api/data', { cacheTime: 300, dedupeTime: 5000 });
  // Returns cached data immediately, no network request
}, 2000);

// After 10 minutes - cache expired, new request
setTimeout(() => {
  const request3 = fetchf('/api/data', { cacheTime: 300, dedupeTime: 5000 });
  // Cache expired → new network request → potential for deduplication again
}, 600000);

- dedupeTime: Prevents duplicate requests during a short time window (milliseconds).
- cacheTime: Stores successful responses for longer periods (seconds).
- Integration: Deduplication handles concurrent requests; caching handles subsequent requests.

const config = {
  dedupeTime: 2000, // 2 seconds - for rapid concurrent requests
  cacheTime: 300, // 5 minutes - for longer-term storage
};

// Timeline example:
// T+0ms: Request A initiated → network call starts
// T+500ms: Request B initiated → joins Request A (deduplication)
// T+1500ms: Request C initiated → joins Request A (deduplication)
// T+2500ms: Request D initiated → deduplication window expired, but cache hit (Request A has completed)
// T+6000ms: Request E initiated → cache hit (no network call needed)

The relationship between staleTime and cacheTime enables sophisticated data freshness strategies:

const fetchWithTimings = fetchf('/api/user-feed', {
  cacheTime: 600, // Cache for 10 minutes
  staleTime: 60, // Consider fresh for 1 minute
});

// Data lifecycle:
// T+0: Fresh data - served from cache, no background request
// T+30s: Still fresh - served from cache, no background request
// T+90s: Stale but cached - served from cache + background revalidation
// T+300s: Still stale - served from cache + background revalidation
// T+650s: Cache expired - network request required, shows loading state

High-Frequency Updates (Real-time Data)

const realtimeData = {
  cacheTime: 30, // Cache for 30 seconds
  staleTime: 5, // Fresh for 5 seconds only
  // Result: Frequent background updates, always responsive UI
};

Balanced Performance (User Data)

const userData = {
  cacheTime: 300, // Cache for 5 minutes
  staleTime: 60, // Fresh for 1 minute
  // Result: Good performance + reasonable freshness
};

Static Content (Configuration)

const staticConfig = {
  cacheTime: 3600, // Cache for 1 hour
  staleTime: 1800, // Fresh for 30 minutes
  // Result: Minimal network usage for rarely changing data
};

// When staleTime expires but cacheTime hasn't:
const { data } = await fetchf('/api/notifications', {
  cacheTime: 600, // 10 minutes total cache
  staleTime: 120, // 2 minutes of "freshness"
});

// T+0: Returns cached data immediately, no background request
// T+150s: Returns cached data immediately + triggers background request
// T+150s: Background request completes → cache silently updated
// T+650s: Cache expired → full loading state + network request

By default, fetchff generates a cache key automatically using a combination of the following request properties:

| Property | Description | Default Value |
|---|---|---|
| method | The HTTP method used for the request (e.g., GET, POST). | 'GET' |
| url | The full request URL, including the base URL and endpoint path. | '' |
| headers | Request headers, filtered to include only cache-relevant headers (see below). | |
| body | The request payload (for POST, PUT, PATCH, etc.), stringified if it's an object or array. | |
| credentials | Indicates whether credentials (cookies) are included in the request. | 'same-origin' |
| params | Query parameters serialized into the URL (objects, arrays, etc. are stringified). | |
| urlPathParams | Dynamic URL path parameters (e.g., /user/:id), stringified and encoded. | |
| withCredentials | Whether credentials (cookies) are included in the request. | |

To ensure stable cache keys and prevent unnecessary cache misses, fetchff only includes headers that affect response content in cache key generation. The following headers are included:

Content Negotiation:
- accept - Affects response format (JSON, HTML, etc.)
- accept-language - Affects localization of the response
- accept-encoding - Affects response compression

Authentication & Authorization:
- authorization - Affects access to protected resources
- x-api-key - Token-based access control
- cookie - Session-based authentication

Request Context:
- content-type - Affects how the request body is interpreted
- origin - Relevant for CORS or tenant-specific APIs
- referer - May influence API behavior
- user-agent - Only if the server returns client-specific content

Custom Headers:
- x-requested-with - Distinguishes AJAX requests
- x-client-id - Per-client/partner identity
- x-tenant-id - Multi-tenant segmentation
- x-user-id - Explicit user context
- x-app-version - Version-specific behavior
- x-feature-flag - Feature rollout controls
- x-device-id - Device-specific responses
- x-platform - Platform-specific content (iOS, Android, web)
- x-session-id - Session-specific responses
- x-locale - Locale-specific content

Other headers, such as connection, cache-control, tracking IDs, and proxy-related headers, are excluded from cache key generation because they don't affect the actual response content.

These properties are combined and hashed to create a unique cache key for each request. This ensures that requests with different parameters, bodies, or cache-relevant headers are cached separately, while requests that differ only in non-essential headers keep a stable cache key. If that does not suffice, you can pass a cacheKey (string or function) to individual requests, or set your own cacheKey function in the defaults so that it applies to all requests. In either case, automatic key generation is skipped entirely.
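The key-generation idea can be sketched as follows. This is a hypothetical illustration, not fetchff's code. The header allow-list is only a subset of the headers listed above. The hash is FNV-1a, picked as an example because the README does not say which hash fetchff uses. buildCacheKey and KeyInput are invented names.

```typescript
// Subset of the cache-relevant headers listed above (illustrative allow-list).
const CACHE_RELEVANT_HEADERS = new Set([
  'accept',
  'accept-language',
  'authorization',
  'x-api-key',
  'cookie',
  'content-type',
  'x-tenant-id',
  'x-locale',
]);

interface KeyInput {
  method?: string;
  url?: string;
  headers?: Record<string, string>;
  params?: Record<string, unknown>;
  body?: unknown;
}

// 32-bit FNV-1a hash, used here only as an example hash function.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

function buildCacheKey(input: KeyInput): string {
  // Keep only cache-relevant headers (case-insensitive) and sort them so that
  // header order does not change the key.
  const headers = Object.entries(input.headers ?? {})
    .map(([name, value]) => [name.toLowerCase(), value] as const)
    .filter(([name]) => CACHE_RELEVANT_HEADERS.has(name))
    .sort(([a], [b]) => a.localeCompare(b));

  // Sort query params by name for the same reason.
  const paramsObj: Record<string, unknown> = input.params ?? {};
  const params = Object.keys(paramsObj)
    .sort()
    .map((name) => [name, paramsObj[name]]);

  const parts = [
    (input.method ?? 'GET').toUpperCase(),
    input.url ?? '',
    JSON.stringify(headers),
    JSON.stringify(params),
    typeof input.body === 'string' ? input.body : JSON.stringify(input.body ?? null),
  ];
  return fnv1a(parts.join('|'));
}
```

Sorting headers and params before hashing is what keeps keys stable: two requests that differ only in header order or in a tracing header such as x-request-id map to the same entry.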


fetchff automatically deduplicates identical requests that are made within a configurable time window, ensuring that only one network request is sent for the same endpoint and parameters. This is especially useful for scenarios where multiple components or users might trigger the same request simultaneously (e.g., rapid user input, concurrent UI updates).

⚠️ When using in Node.js:

Request queueing and deduplication are per-process. In multi-process or serverless environments, requests are not deduplicated across instances.

- When a request is made, fetchff checks whether an identical request (same URL, method, params, and body) is already in progress or was recently completed within the dedupeTime window.
- If such a request exists, the new request "joins" the in-flight request and receives the same response when it completes, rather than triggering a new network call.
- This mechanism reduces unnecessary network traffic and ensures consistent data across your application.

dedupeTime:
- Type: number
- Default: 0 (milliseconds)
- Specifies the time window during which identical requests are deduplicated. If set to 0, deduplication is disabled.

import { fetchf } from 'fetchff';

// Multiple rapid calls to the same endpoint will be deduplicated
fetchf('/api/search', { params: { q: 'test' }, dedupeTime: 2000 });
fetchf('/api/search', { params: { q: 'test' }, dedupeTime: 2000 });
// Only one network request will be sent within the 2-second window

- Prevents duplicate network requests for the same resource.

- Reduces backend load and improves frontend performance.

- Ensures that all consumers receive the same response for identical requests made in quick succession.

This deduplication logic is applied both to standalone fetchf() calls and to endpoints created with createApiFetcher().
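The "join the in-flight request" behaviour amounts to sharing one promise per key for the length of the window. In this hypothetical sketch, dedupe and inFlight are illustrative names and not fetchff exports. The key stands in for the URL, method, params, and body. Failed requests are forgotten so the next call can try again. Expired entries are simply overwritten on the next call rather than evicted on a timer.

```typescript
// Hypothetical sketch: identical requests within dedupeTime share one promise.
const inFlight = new Map<string, { promise: Promise<unknown>; startedAt: number }>();

function dedupe<T>(
  key: string,
  dedupeTime: number,
  send: () => Promise<T>,
  now: () => number = Date.now,
): Promise<T> {
  if (dedupeTime <= 0) return send(); // deduplication disabled

  const existing = inFlight.get(key);
  if (existing && now() - existing.startedAt < dedupeTime) {
    return existing.promise as Promise<T>; // join the pending or recent request
  }

  const promise = send();
  const entry = { promise, startedAt: now() };
  inFlight.set(key, entry);

  // If the request fails, forget it so that the next call can retry.
  promise.catch(() => {
    if (inFlight.get(key) === entry) inFlight.delete(key);
  });
  return promise;
}
```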


fetchff simplifies making API requests with customizable features such as request cancellation, retries, and response flattening. When cancellable is enabled and a new request is made to the same API endpoint, fetchff automatically cancels any previous request that hasn't completed, ensuring that only the most recent request is processed.

It also supports:

- Automatic retries for failed requests with configurable delay and exponential backoff.

- Optional flattening of response data for easier access, removing nested data fields.

You can choose to reject cancelled requests or return a default response instead through the defaultResponse setting.

import { fetchf } from 'fetchff';

// Function to send the request
const sendRequest = () => {
  // In this example, previous pending requests are automatically cancelled.
  // You can also adjust the "dedupeTime" setting to fire requests more or less frequently.
  fetchf('https://example.com/api/messages/update', {
    method: 'POST',
    cancellable: true,
    rejectCancelled: true,
  }).catch((error) => {
    // With rejectCancelled: true, superseded (cancelled) requests reject here
    console.warn('Request was cancelled or failed:', error);
  });
};

// Attach keydown event listener to the input element with id "message"
document.getElementById('message')?.addEventListener('keydown', sendRequest);

- cancellable:
  Type: boolean
  Default: false
  If set to true, any ongoing previous request to the same API endpoint is automatically cancelled when a subsequent request is made before the first one completes. This is useful when repeated requests go to the same endpoint (e.g., search inputs) and only the latest response is needed, avoiding unnecessary requests to the backend.

- rejectCancelled:
  Type: boolean
  Default: false
  Works in conjunction with the cancellable option. If set to true, the promise of a cancelled request is rejected. By default (false), a cancelled request does not reject; a defaultResponse is returned instead, allowing graceful handling of cancellation without errors.
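A common way to implement "latest request wins" is one AbortController per endpoint. The following hypothetical sketch uses the standard AbortController API. startCancellable is an invented name, not part of fetchff. The sketch always rejects the superseded request, which mirrors rejectCancelled: true. fetchff's default of returning a defaultResponse is not modelled. In a browser, the run callback could be (signal) => fetch(url, { method: 'POST', signal }).

```typescript
// Hypothetical sketch: one AbortController per key; a new request aborts the
// previous pending one for the same key.
const controllers = new Map<string, AbortController>();

function startCancellable<T>(
  key: string,
  run: (signal: AbortSignal) => Promise<T>,
): Promise<T> {
  controllers.get(key)?.abort(); // cancel the previous, still-pending request
  const controller = new AbortController();
  controllers.set(key, controller);

  return run(controller.signal).finally(() => {
    // Only clear the entry if no newer request has replaced it.
    if (controllers.get(key) === controller) controllers.delete(key);
  });
}
```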


Polling can be configured to repeatedly make requests at defined intervals until certain conditions are met. This allows for continuously checking the status of a resource or performing background updates.

import { fetchf } from 'fetchff';

const { data } = await fetchf('https://api.example.com/', {
  pollingInterval: 5000, // Poll every 5 seconds (useful for regular polling at intervals)
  pollingDelay: 1000, // Wait 1 second before each polling attempt begins
  maxPollingAttempts: 10, // Stop polling after 10 attempts

  shouldStopPolling(response, attempt) {
    if (response && response.status === 200) {
      return true; // Stop polling if the response status is 200 (OK)
    }

    if (attempt >= 10) {
      return true; // Stop polling after 10 attempts
    }

    return false; // Continue polling otherwise
  },
});

The following options are available for configuring polling in the RequestHandler:

- pollingInterval:
  Type: number
  Interval in milliseconds between polling attempts. If set to 0, polling is disabled. Use it to control the frequency of requests for regular, periodic polling.
  Default: 0 (polling disabled).

- pollingDelay:
  Type: number
  The time (in milliseconds) to wait before each polling attempt begins, including the first one. It is useful if you want to throttle or stagger requests, or wait a bit before each poll.
  Default: 0 (no delay).

- maxPollingAttempts:
  Type: number
  Maximum number of polling attempts before stopping. Set to 0 or a negative number for unlimited attempts.
  Default: 0 (unlimited).

- shouldStopPolling:
  Type: (response: any, attempt: number) => boolean
  A function that determines whether polling should stop based on the response, error, or the current polling attempt number (attempts start at 1). Return true to stop polling and false to continue. This allows custom logic to decide when to stop polling based on the response or error.
  Default: (response, attempt) => false (polling continues indefinitely unless stopped manually).

- Polling Interval:
  When pollingInterval is set to a non-zero value, polling begins after the initial request, and the request is repeated at the interval defined by pollingInterval.

- Polling Delay:
  The pollingDelay setting introduces a delay before each polling attempt, allowing finer control over the timing of requests.

- Maximum Polling Attempts:
  The maxPollingAttempts setting limits the number of polling attempts. Once the maximum is reached, polling stops automatically.

- Stopping Polling:
  The shouldStopPolling function is invoked after each polling attempt. If it returns true, polling stops. Otherwise, polling continues until the stop condition is met or polling is stopped manually.

- Custom Logic:
  The shouldStopPolling function lets you implement custom logic based on the response, error, or number of attempts, making it easy to stop polling once the desired outcome is reached or after a maximum number of attempts.
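The steps above can be sketched as a loop. This is one possible reading of the options, not fetchff's implementation. poll and PollOptions are hypothetical names. The loop makes an initial request, then runs polling attempts numbered from 1. Each attempt waits pollingInterval and then pollingDelay before sending. shouldStopPolling is checked after every attempt, and maxPollingAttempts caps the loop.

```typescript
// Hypothetical polling loop following the option descriptions above.
interface PollOptions<T> {
  pollingInterval: number; // ms between attempts; 0 disables polling
  pollingDelay?: number; // ms to wait before each attempt begins
  maxPollingAttempts?: number; // 0 or negative = unlimited
  shouldStopPolling?: (response: T, attempt: number) => boolean;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function poll<T>(request: () => Promise<T>, options: PollOptions<T>): Promise<T> {
  const { pollingInterval, pollingDelay = 0, maxPollingAttempts = 0, shouldStopPolling } = options;

  let response = await request(); // initial request
  if (pollingInterval <= 0) return response; // polling disabled

  for (let attempt = 1; maxPollingAttempts <= 0 || attempt <= maxPollingAttempts; attempt++) {
    await sleep(pollingInterval);
    if (pollingDelay > 0) await sleep(pollingDelay);
    response = await request();
    if (shouldStopPolling?.(response, attempt)) break; // custom stop condition
  }
  return response;
}
```

With maxPollingAttempts at 0 and no stop condition, this loop never ends, which matches the "polling continues indefinitely" default described above.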


The retry mechanism can be used to handle transient errors and improve the reliability of network requests. This mechanism automatically retries requests when certain conditions are met, providing robustness in the face of temporary failures. Below is an overview of how the retry mechanism works and how it can be configured.

import { fetchf } from 'fetchff';

const { data } = await fetchf('https://api.example.com/', {
  retry: {
    retries: 5,
    delay: 100, // Override default adaptive delay (normally 1s/2s based on connection)
    maxDelay: 5000, // Override default adaptive maxDelay (normally 30s/60s based on connection)
    resetTimeout: true, // Resets the timeout for each retry attempt
    backoff: 1.5,
    retryOn: [500, 503],

    // Retry on specific errors or based on custom logic
    shouldRetry(response, attempt) {
      // Stop once this custom attempt limit is reached, regardless of `retries`
      if (attempt >= 3) {
        return false;
      }

      // Retry if the status text is Not Found (404)
      if (response.error && response.error.statusText === 'Not Found') {
        return true;
      }

      // Use `response.data` to access any data from the fetch() response.
      // Say your backend returns bookId as "none": force a retry by returning true.
      if (response.data?.bookId === 'none') {
        return true;
      }

      return null; // Fall back to the `retryOn` status code check
    },
  },
});

In this example, shouldRetry takes priority over retryOn. It stops retrying once attempt reaches 3, even though retries is set to 5. It forces a retry when the response is a 404 Not Found or contains {"bookId": "none"}. In every other case it returns null, so the decision falls back to retryOn and the request is retried only on HTTP status codes 500 and 503. The resetTimeout option ensures that the timeout is restarted for each retry attempt.

Note: When not overridden, fetchff automatically adapts retry delays based on connection speed:

- Normal connections: 1s initial delay, 30s max delay

- Slow connections (2G/3G): 2s initial delay, 60s max delay
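The adaptive defaults quoted above map naturally to a small lookup. The helper adaptiveDefaults below is hypothetical. It assumes "slow" means an effectiveType of 'slow-2g', '2g', or '3g', as reported by the browser's Network Information API (navigator.connection.effectiveType, available in some browsers only). fetchff exposes its own check as isSlowConnection(). This sketch does not reproduce that function.

```typescript
// Values reported by the Network Information API's effectiveType property.
type EffectiveType = 'slow-2g' | '2g' | '3g' | '4g';

interface AdaptiveDefaults {
  timeout: number; // ms
  delay: number; // ms, initial retry delay
  maxDelay: number; // ms, retry delay cap
}

// Hypothetical mapping of connection quality to the defaults described above.
function adaptiveDefaults(effectiveType?: EffectiveType): AdaptiveDefaults {
  const slow = effectiveType === 'slow-2g' || effectiveType === '2g' || effectiveType === '3g';
  return slow
    ? { timeout: 60000, delay: 2000, maxDelay: 60000 }
    : { timeout: 30000, delay: 1000, maxDelay: 30000 };
}
```

When the connection type is unknown (for example in Node.js), the sketch falls back to the normal-connection values.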

Additionally, you can handle "Not Found" (404) responses or other specific status codes in your retry logic. For example, you might want to retry when the status text is "Not Found":

shouldRetry(response, attempt) {
  // Retry if the status text is Not Found (404)
  if (response.error && response.error.statusText === 'Not Found') {
    return true;
  }

  // ...other logic

  return null; // Fall back to the `retryOn` status code check
}

This allows you to customize retry behavior for cases where a resource might become available after a short delay, or when you want to handle transient 404 errors gracefully.

The full error object is generally available as response.error.

The retry mechanism is configured via the retry option when instantiating the RequestHandler. You can customize the following parameters:

- retries:
  Type: number
  Number of retry attempts to make after an initial failure.
  Default: 0 (no retries).

- delay:
  Type: number
  Initial delay (in milliseconds) before the first retry attempt. The default is adaptive: 1 second (1000 ms) on normal connections and 2 seconds (2000 ms) on slow connections (2G/3G). Subsequent retries use an exponentially increasing delay based on the backoff parameter.
  Default: 1000/2000 (adaptive, based on connection speed).

- maxDelay:
  Type: number
  Maximum delay (in milliseconds) between retry attempts. The default is adaptive: 30 seconds (30000 ms) on normal connections and 60 seconds (60000 ms) on slow connections (2G/3G). The delay never exceeds this value, even if exponential backoff would produce a longer one.
  Default: 30000/60000 (adaptive, based on connection speed).

- backoff:
  Type: number
  Factor by which the delay is multiplied after each retry. For example, a backoff factor of 1.5 means each retry delay is 1.5 times the previous one: after the first failure, wait x seconds; after the second failure, wait x * 1.5 seconds; after the third failure, wait x * 1.5^2 seconds, and so on.
  Default: 1.5.

- resetTimeout:
  Type: boolean
  If set to true, the timeout is reset for each retry attempt, so it applies to each individual attempt rather than to the entire request lifecycle.
  Default: true.

- retryOn:
  Type: number[]
  Array of HTTP status codes that should trigger a retry. By default, retries are triggered for the following status codes:
    - 408 (Request Timeout)
    - 409 (Conflict)
    - 425 (Too Early)
    - 429 (Too Many Requests)
    - 500 (Internal Server Error)
    - 502 (Bad Gateway)
    - 503 (Service Unavailable)
    - 504 (Gateway Timeout)
  If used in conjunction with shouldRetry, the shouldRetry function takes priority, and retryOn is checked only if shouldRetry returns null.

- shouldRetry(response: FetchResponse, currentAttempt: number) => boolean | null:
  Type: RetryFunction<ResponseData, RequestBody, QueryParams, PathParams>
  Function that decides whether a retry should be attempted, based on the response object (the error, or a successful response when shouldRetry is provided) and the current attempt number. The returned value indicates the decision: true retries, false aborts without retrying, and null falls back to the retryOn status code check.
  Default: undefined.
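To make the precedence between shouldRetry and retryOn concrete, here is a minimal sketch of the decision as a standalone function. It is not fetchff's internal code: decideRetry, RetryInput and ShouldRetryFn are hypothetical names, the callback receives only the status code instead of the full FetchResponse, and attempt is assumed to count the retries already made.

```typescript
// Hypothetical callback type: simplified to receive the status code only.
type ShouldRetryFn = (status: number, attempt: number) => boolean | null;

// Hypothetical input shape for this sketch; not a fetchff type.
interface RetryInput {
  status: number; // HTTP status of the failed response
  attempt: number; // retries already made (0 before the first retry)
  retries: number; // maximum number of retries allowed
  retryOn: number[]; // status codes that trigger a retry
  shouldRetry?: ShouldRetryFn;
}

// Default status codes, as listed in the retryOn description above.
const DEFAULT_RETRY_ON = [408, 409, 425, 429, 500, 502, 503, 504];

function decideRetry(input: RetryInput): boolean {
  // Never retry past the configured number of attempts.
  if (input.attempt >= input.retries) {
    return false;
  }
  // shouldRetry takes priority whenever it returns an explicit boolean.
  if (input.shouldRetry) {
    const verdict = input.shouldRetry(input.status, input.attempt);
    if (verdict !== null) {
      return verdict;
    }
  }
  // A null verdict (or no shouldRetry at all) falls back to retryOn.
  return input.retryOn.includes(input.status);
}
```

With a shouldRetry that returns true for 404 and null otherwise, a 404 gets retried while every other status still follows the retryOn list.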

- Initial Request: When a request fails, the retry mechanism captures the failure and checks whether it should retry, based on the retryOn configuration and the result of the shouldRetry function.

- Retry Attempts: If a retry is warranted:
  - The request is retried up to the specified number of attempts (retries).
  - Each retry waits for a delay before making the next attempt. The delay starts at the initial delay value and increases exponentially by the backoff factor, but never exceeds maxDelay.
  - If resetTimeout is enabled, the timeout is reset for each retry attempt.

- Logging: During retries, the mechanism logs warnings indicating the retry attempt and the delay before the next one, which helps with debugging and understanding the retry behavior.

- Final Outcome: If all retry attempts fail, the request throws an error, and the final failure is processed according to the configured error handling logic.
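The delay schedule described above can be sketched as a pure function. This assumes the delay for the n-th retry (counting from 0) is delay * backoff^n, capped at maxDelay, which matches the backoff description; retryDelay is a hypothetical helper, and the adaptive defaults and Retry-After handling are left out.

```typescript
// Hypothetical helper illustrating the documented formula; not fetchff's source.
function retryDelay(
  retryIndex: number, // 0 for the first retry, 1 for the second, ...
  delay: number, // initial delay in ms
  backoff: number, // multiplier applied after each retry
  maxDelay: number, // upper bound in ms
): number {
  return Math.min(delay * Math.pow(backoff, retryIndex), maxDelay);
}

// Delays for three retries with delay = 1000 ms, backoff = 1.5, maxDelay = 30000 ms.
const schedule = [0, 1, 2].map((i) => retryDelay(i, 1000, 1.5, 30000));
console.log(schedule); // [1000, 1500, 2250]
```

Because of the cap, a late retry such as the eleventh (retryIndex 10) waits 30000 ms rather than roughly 57 seconds.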

When a request receives a 429 Too Many Requests response, fetchff will automatically check for the Retry-After header and use its value to determine the delay before the next retry attempt. This works for both seconds and HTTP-date formats, and falls back to your configured delay if the header is missing or invalid.

How it works:

- If the server responds with 429 and a Retry-After header, the delay for the next retry is set to the value from that header (in ms).
- If the header is missing or invalid, the default retry delay is used.

Example:

```typescript
import { fetchf } from 'fetchff';

const { data } = await fetchf('https://api.example.com/', {
  retry: {
    retries: 2,
    delay: 1000, // fallback if Retry-After is missing
    retryOn: [429], // 429 is already checked by default, so adding it is not necessary
  },
});
```

If the server responds with:

```http
HTTP/1.1 429 Too Many Requests
Retry-After: 5
```

the next retry will wait 5000 ms before attempting again.

If the header is an HTTP-date, the delay will be calculated as the difference between the date and the current time.
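A minimal sketch of the Retry-After conversion described above, assuming the header value is either whole seconds or an HTTP-date that Date.parse() can read. retryAfterToMs is a hypothetical helper, not part of fetchff, and clamping dates in the past to 0 is an assumption of this sketch.

```typescript
// Hypothetical helper; fetchff's internal parsing may differ.
function retryAfterToMs(
  header: string | null,
  fallbackMs: number,
  now: number = Date.now(),
): number {
  if (header === null || header.trim() === '') {
    return fallbackMs; // header missing: use the configured delay
  }
  const value = header.trim();
  // Delta-seconds form, e.g. "Retry-After: 5"
  if (/^\d+$/.test(value)) {
    return Number(value) * 1000;
  }
  // HTTP-date form, e.g. "Retry-After: Wed, 21 Oct 2015 07:28:00 GMT"
  const date = Date.parse(value);
  if (Number.isNaN(date)) {
    return fallbackMs; // invalid header: use the configured delay
  }
  // Difference between the date and the current time, never negative.
  return Math.max(0, date - now);
}
```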


The fetchff plugin automatically transforms the response data of any Response instance returned by fetch() (or a custom fetcher) based on the Content-Type header, so that the data is parsed correctly according to its format.

- JSON (application/json): Parses the response as JSON.
- Form Data (multipart/form-data): Parses the response as FormData.
- Binary Data (application/octet-stream): Parses the response as a Blob.
- URL-encoded Form Data (application/x-www-form-urlencoded): Parses the response as FormData.
- Text (text/*): Parses the response as plain text.

If the Content-Type header is missing or not recognized, the plugin defaults to attempting JSON parsing. If that fails, it will try to parse the response as text.

This approach ensures that the fetchff plugin can handle a variety of response formats, providing a flexible and reliable method for processing data from API requests.
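The Content-Type rules above can be sketched as a lookup that picks a parser, plus the JSON-then-text fallback applied to a body already read as a string. pickParser and parseJsonThenText are hypothetical names; matching only the exact MIME types listed above (after dropping parameters such as charset) is an assumption, and fetchff may match more loosely.

```typescript
// Hypothetical names; a sketch of the documented rules, not fetchff's code.
type ParserKind = 'json' | 'formData' | 'blob' | 'text' | 'jsonThenText';

function pickParser(contentType: string | null): ParserKind {
  // Drop parameters such as "; charset=utf-8" and compare case-insensitively.
  const mime = (contentType ?? '').split(';')[0].trim().toLowerCase();

  if (mime === 'application/json') return 'json';
  if (mime === 'multipart/form-data') return 'formData';
  if (mime === 'application/x-www-form-urlencoded') return 'formData';
  if (mime === 'application/octet-stream') return 'blob';
  if (mime.startsWith('text/')) return 'text';
  // Missing or unrecognized header: try JSON first, then plain text.
  return 'jsonThenText';
}

// The fallback path, applied to a body that has already been read as a string.
function parseJsonThenText(body: string): unknown {
  try {
    return JSON.parse(body);
  } catch {
    return body; // not valid JSON: keep it as plain text
  }
}
```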

⚠️ When using in Node.js:

In Node.js, using FormData, Blob, or ReadableStream may require additional polyfills, and will only work if your fetch polyfill supports them.

You can use the onResponse interceptor to customize how the response is handled before it reaches your application. This interceptor gives you access to the raw Response object, allowing you to transform the data or modify the response behavior based on your needs.


Every request returns a standardized response object: the native fetch() Response extended with a handful of additional properties:

```typescript
interface FetchResponse<
  ResponseData = any,
  RequestBody = any,
  QueryParams = any,
  PathParams = any,
> extends Response {
  data: ResponseData | null; // The parsed response data, or null/defaultResponse if unavailable
  error: ResponseError<
    ResponseData,
    RequestBody,
    QueryParams,
    PathParams
  > | null; // Error details if the request failed, otherwise null
  config: RequestConfig; // The configuration used for the request
  status: number; // HTTP status code
  statusText: string; // HTTP status text
  headers: HeadersObject; // Response headers as a key-value object
}
```

- data: The actual data returned from the API, or null/defaultResponse if not available.
- error: An object containing error details if the request failed, or null otherwise. Includes properties such as name, message, status, statusText, request, config, and the full response.
- config: The complete configuration object used for the request, including URL, method, headers, and parameters.
- status: The HTTP status code of the response (e.g., 200, 404, 500).
- statusText: The HTTP status text (e.g., 'OK', 'Not Found', 'Internal Server Error').
- headers: The response headers as a plain key-value object.

All other properties of the native fetch() Response are available as well, since FetchResponse extends Response.

The error property has the following shape:

Type: ResponseError<ResponseData, RequestBody, QueryParams, PathParams> | null

An object with details about any error that occurred, or null otherwise. It contains:

- name: The name of the error, i.e. ResponseError.
- message: A descriptive message about the error.
- status: The HTTP status code of the response (e.g., 404, 500).
- statusText: The HTTP status text of the response (e.g., 'Not Found', 'Internal Server Error').
- request: Details about the HTTP request that was sent (e.g., URL, method, headers).
- config: The configuration object used for the request, including URL, method, headers, and query parameters.
- response: The full response object received from the server, including all headers and body.
- isCancelled: A boolean indicating whether the request was cancelled before completion.
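Under TypeScript's strict settings a value caught in a catch block is typed as unknown, so a small type guard built only from the documented fields (name, message, status, statusText, isCancelled) can narrow it before logging. ErrorLike, isErrorLike and describeError are hypothetical names for this sketch; fetchff's own ResponseError type carries more fields.

```typescript
// Hypothetical shape built from the documented fields; not fetchff's ResponseError.
interface ErrorLike {
  name: string;
  message: string;
  status?: number;
  statusText?: string;
  isCancelled?: boolean;
}

// Narrow an unknown caught value to the documented error fields.
function isErrorLike(value: unknown): value is ErrorLike {
  return (
    typeof value === 'object' &&
    value !== null &&
    typeof (value as ErrorLike).name === 'string' &&
    typeof (value as ErrorLike).message === 'string'
  );
}

// Build a short log line, treating cancellations separately from HTTP failures.
function describeError(value: unknown): string {
  if (!isErrorLike(value)) return 'Unknown error';
  if (value.isCancelled) return 'Request was cancelled';
  if (value.status !== undefined) {
    return `HTTP ${value.status} ${value.statusText ?? ''}`.trim();
  }
  return value.message;
}
```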


Error handling strategies define how to manage errors that occur during requests. You can configure the strategy option to specify what should happen when an error occurs. This determines whether promises are rejected, whether errors are handled silently, and whether default responses are provided. You can also combine it with the onError interceptor for a more tailored approach.

The native fetch() API doesn't throw exceptions for HTTP errors like 404 or 500; it only rejects the promise on network-level errors (e.g. the request fails due to a DNS error, no internet connection, or CORS issues). The fetchf() function brings consistency and lets you align the behavior with the chosen strategy. By default, all errors are rejected.
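The difference between the reject, softFail and defaultResponse strategies can be modeled with a small function that receives an already-settled outcome. This is a conceptual sketch, not fetchff's implementation: settle and Outcome are hypothetical names, the error is reduced to status and message, and the remaining strategies are not modeled.

```typescript
// Hypothetical model of three documented strategies; not fetchff's implementation.
type Strategy = 'reject' | 'softFail' | 'defaultResponse';

interface Outcome<T> {
  data: T | null;
  error: { status: number; message: string } | null;
}

async function settle<T>(
  outcome: Outcome<T>,
  strategy: Strategy,
  defaultResponse: T | null = null,
): Promise<Outcome<T>> {
  if (!outcome.error) {
    return outcome; // success: every strategy resolves with the data
  }
  if (strategy === 'reject') {
    // The promise rejects, so callers need try/catch.
    throw outcome.error;
  }
  if (strategy === 'defaultResponse') {
    // Resolve with the error attached and data replaced by the default.
    return { data: defaultResponse, error: outcome.error };
  }
  // softFail: resolve with the error attached and data left as-is.
  return outcome;
}
```

In both non-rejecting branches the error stays on the result, which is why the error property must still be checked.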

reject: (default)

Promises are rejected, and global error handling is triggered. You must use try/catch blocks to handle errors.

```typescript
import { fetchf } from 'fetchff';

try {
  const { data } = await fetchf('https://api.example.com/users', {
    strategy: 'reject', // Default strategy - can be omitted
    timeout: 5000,
  });

  console.log('Users fetched successfully:', data);
} catch (error) {
  // Handle specific error types
  if (error.status === 404) {
    console.error('API endpoint not found');
  } else if (error.status >= 500) {
    console.error('Server error:', error.statusText);
  } else {
    console.error('Request failed:', error.message);
  }
}
```

softFail:

Returns a response object with an additional error property when an error occurs, instead of throwing. This lets you handle error information directly through the response's error object, without try/catch blocks.

⚠️ Always Check the error Property:

When using the softFail or defaultResponse strategies, the promise will not throw on error. You must always check the error property in the response object to detect and handle errors.

```typescript
import { fetchf } from 'fetchff';

const { data, error } = await fetchf('https://api.example.com/users', {
  strategy: 'softFail',
  timeout: 5000,
});

if (error) {
  // Handle errors without try/catch
  console.error('Request failed:', {
    status: error.status,
    message: error.message,
    url: error.config?.url,
  });

  // Show user-friendly error message
  if (error.status === 429) {
    console.log('Rate limited. Please try again later.');
  } else if (error.status >= 500) {
    console.log('Server temporarily unavailable. Please try again.');
  }
} else {
  console.log('Users fetched successfully:', data);
}
```

See the response object description above for how the error object is structured.

defaultResponse:

Returns the specified default response in case of an error. The promise will not be rejected. This can be used together with flattenResponse and defaultResponse: {} to provide sensible defaults.

⚠️ Always Check the error Property:

When using the softFail or defaultResponse strategies, the promise will not throw on error. You must always check the error property in the response object to detect and handle errors.

```typescript
import { fetchf } from 'fetchff';

const { data, error } = await fetchf(
  'https://api.example.com/user-preferences',
  {
    strategy: 'defaultResponse',
    defaultResponse: {
      theme: 'light',
      language: 'en',
      notifications: true,
    },
    timeout: 5000,
  },
);

if (error) {
  console.warn('Failed to load user preferences, using defaults:', data);
  // Log error for debugging but continue with default values
  console.error('Preferences API error:', error.message);
} else {
  console.log('User preferences loaded:', data);
}

// Safe to use data regardless of error state
document.body.className = data.theme;
```

silent: