How to use Kerberos authentication in the streamsx.hdfs toolkit

The streamsx.hdfs toolkit supports Kerberos authentication.

This document describes a step-by-step procedure for:

- Installing and configuring Ambari
- Installing HDFS and HBase and starting the services
- Enabling Kerberos
- Using a principal and keytab in the streamsx.hdfs toolkit

A keytab is a file containing pairs of Kerberos principals and encrypted keys that are derived from the Kerberos password.

Here is a short installation and configuration guide for Ambari. For detailed information about installing Ambari, see: https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.2.2/bk_ambari-installation/content/install-ambari-server.html

Log in as root:

cd /etc/yum.repos.d/

wget http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.6.1.5/ambari.repo

For Red Hat 6:

wget http://public-repo-1.hortonworks.com/ambari/centos6/2.x/updates/2.6.1.5/ambari.repo

Now install ambari-server and ambari-agent:

yum install ambari-server

yum install ambari-agent

ambari-server setup -s -v

# Accept all defaults, then start the server and check its status:

ambari-server start

ambari-server status

Edit the /etc/ambari-agent/conf/ambari-agent.ini file and add the following configuration property to the security section:

[security]

force_https_protocol=PROTOCOL_TLSv1_2
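The edit above can also be scripted. The following is a minimal sketch, assuming the ini file already contains a [security] header; the helper name set_agent_tls is hypothetical and not part of Ambari.

```shell
# Sketch: add force_https_protocol below the [security] header of an
# Ambari agent ini file. set_agent_tls is a hypothetical helper name.
set_agent_tls() (
    ini="$1"
    # Leave the file alone if the property is already set.
    if grep -q '^force_https_protocol=' "$ini"; then
        exit 0
    fi
    # Copy every line and append the property right after [security].
    # Writing back with cat keeps the permissions of the original file.
    awk '{ print }
         /^\[security\]/ { print "force_https_protocol=PROTOCOL_TLSv1_2" }' \
        "$ini" > "$ini.tmp" &&
        cat "$ini.tmp" > "$ini" &&
        rm -f "$ini.tmp"
)

# Usage (as root):
#   set_agent_tls /etc/ambari-agent/conf/ambari-agent.ini
```

The function is idempotent, so it can be run on every host without duplicating the property. If the file has no [security] section, nothing is added.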

Install and start ambari-agent on every host of your HBASE cluster.

ambari-agent start

ambari-agent status

If everything goes well, you see the following status:

Found ambari-agent PID: 1429

ambari-agent running.

Agent PID at: /run/ambari-agent/ambari-agent.pid

Agent out at: /var/log/ambari-agent/ambari-agent.out

Agent log at: /var/log/ambari-agent/ambari-agent.log

Now check the status of ambari-server

ambari-server status

Using python /usr/bin/python

Ambari-server status

Ambari Server running

Found Ambari Server PID: 16227 at: /var/run/ambari-server/ambari-server.pid

Start the Ambari web configuration GUI by opening this link in your browser:

http://<your-hdp-server>:8080

The default port is 8080, the default user is admin, and the default password is admin. All of these defaults can be changed. For more details, see:

https://ambari.apache.org/1.2.3/installing-hadoop-using-ambari/content/ambari-chap2-2a.html

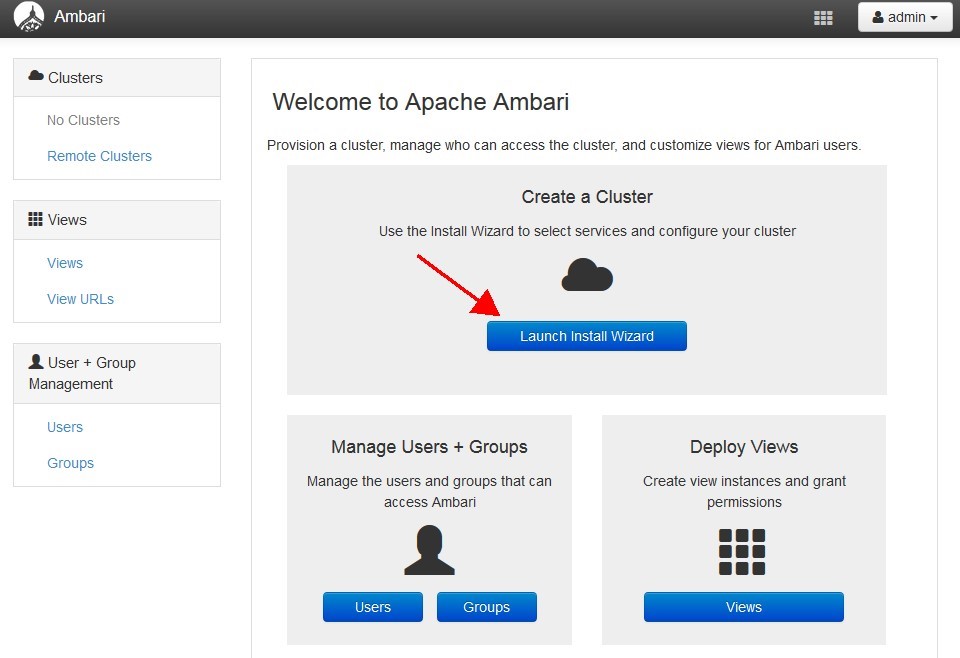

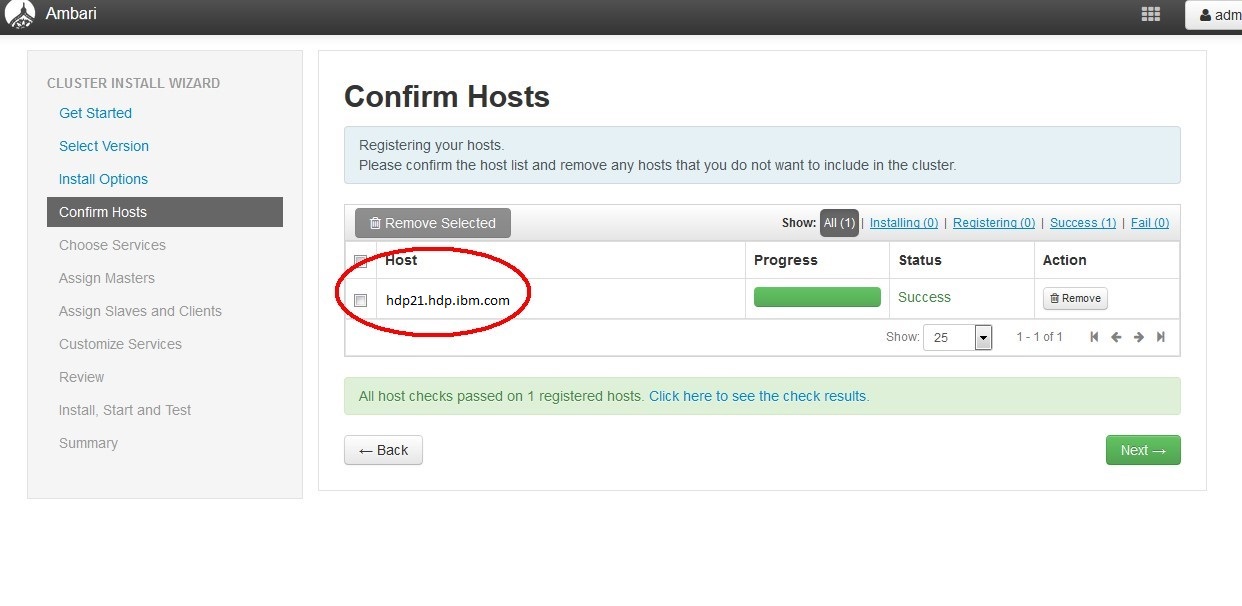

Launch the Ambari Install Wizard and follow the installation and configuration steps, starting with the registration of hosts.

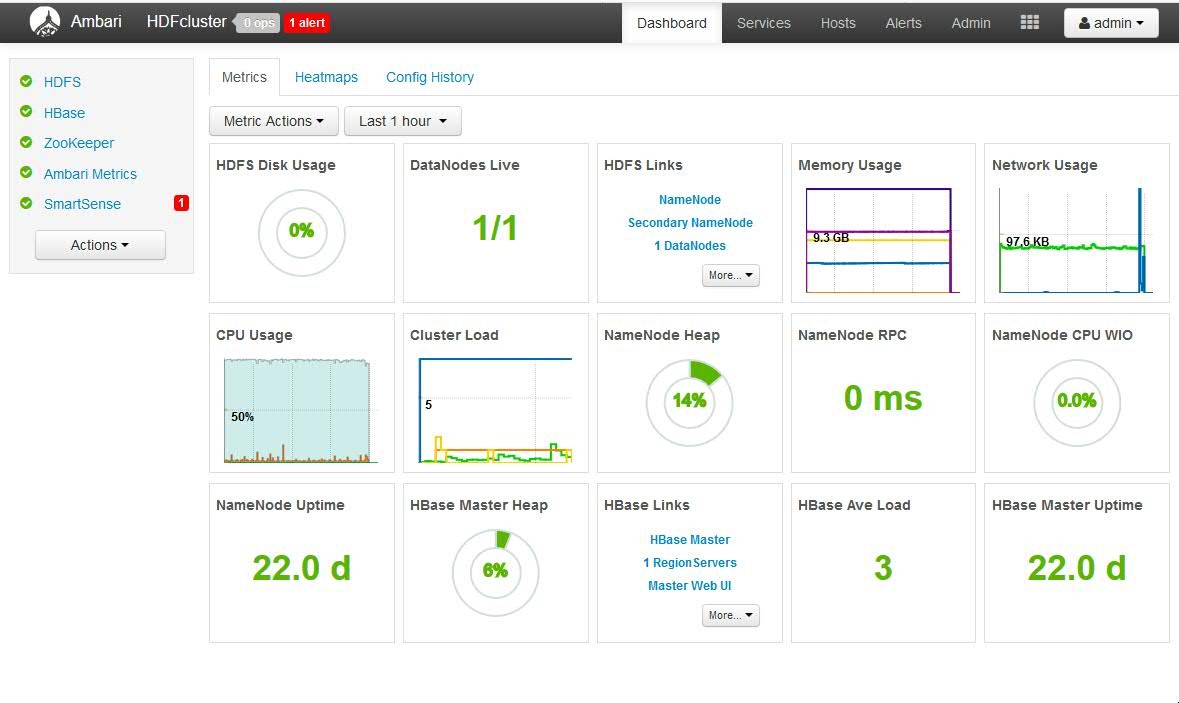

Customizing of services: For HDFS and HBase tests, it is recommended to install only the following services: HDFS, MapReduce2, HBase, ZooKeeper.

Install and start the services:

It takes about 30 minutes (or more, depending on the number of services) to install and start the services.

Now you can log in as root on your HDP server and copy the configuration files to your client:

Hadoop configuration file: /usr/hdp/current/hadoop-client/conf/core-site.xml

HBASE configuration file: /usr/hdp/current/hbase-client/conf/hbase-site.xml
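Copying the two files can be sketched with scp. This assumes root SSH access from the client to the HDP server; the helper name fetch_client_conf and the SCP override variable are hypothetical.

```shell
# Sketch: copy the client configuration files from the HDP server.
# fetch_client_conf and SCP are hypothetical; root SSH access is assumed.
fetch_client_conf() (
    host="$1"          # e.g. hdp21.hdp.ibm.com
    dest="${2:-etc}"   # target directory on the client
    scp="${SCP:-scp}"  # override to substitute another copy command
    mkdir -p "$dest" &&
        "$scp" "root@${host}:/usr/hdp/current/hadoop-client/conf/core-site.xml" "$dest/" &&
        "$scp" "root@${host}:/usr/hdp/current/hbase-client/conf/hbase-site.xml" "$dest/"
)

# Usage:
#   fetch_client_conf <your-hdp-server> etc
```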

Log in as root on your Hadoop server and install Kerberos.

yum install krb5* -y

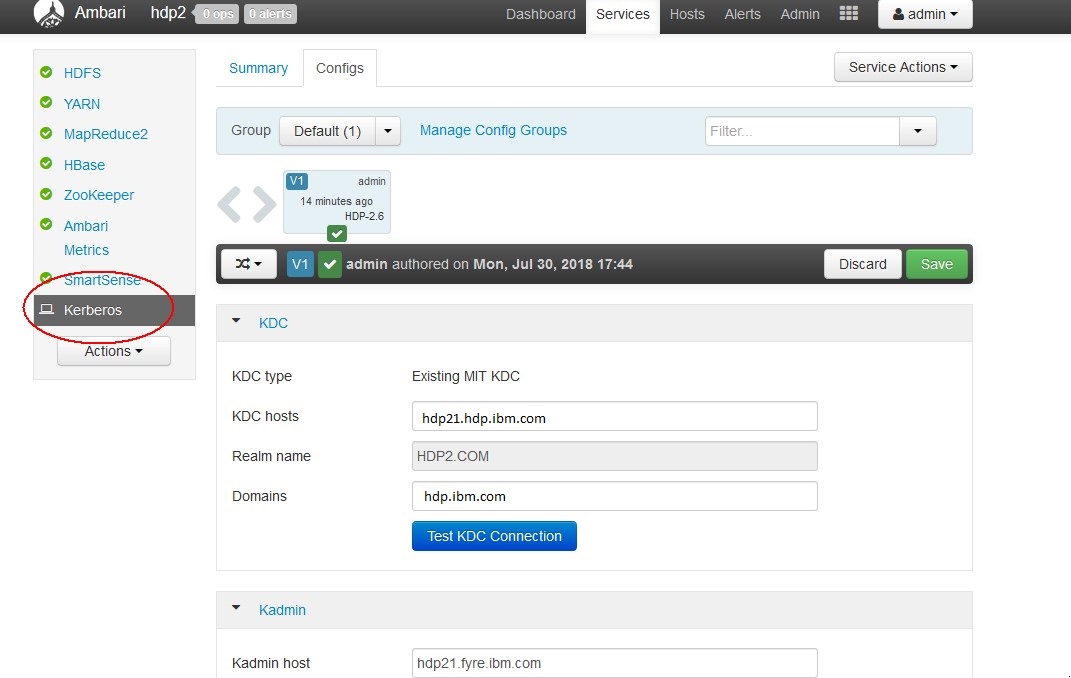

In the following example, the realm name is HDP2.COM and the server is hdp21.hdp.ibm.com. Replace them with your own realm name and server name.

Edit Kerberos configuration file

vi /etc/krb5.conf

[libdefaults]

default_realm = HDP2.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

forwardable = true

udp_preference_limit = 1000000

default_tkt_enctypes = des-cbc-md5 des-cbc-crc des3-cbc-sha1

default_tgs_enctypes = des-cbc-md5 des-cbc-crc des3-cbc-sha1

permitted_enctypes = des-cbc-md5 des-cbc-crc des3-cbc-sha1

[realms]

HDP2.COM = {

kdc = hdp21.hdp.ibm.com:88

admin_server = hdp21.hdp.ibm.com:749

default_domain = hdp.ibm.com

}

[domain_realm]

.hdp.ibm.com = HDP2.COM

hdp.ibm.com = HDP2.COM

[logging]

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmin.log

default = FILE:/var/log/krb5lib.log
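Because only the realm name and the KDC host change between installations, the realm-specific part of the file can be generated. The following is a minimal sketch: the helper name write_krb5_conf is hypothetical, and it deliberately leaves out the encryption-type and [logging] entries of the listing above, which still need to be added by hand if you want them.

```shell
# Sketch: generate a minimal krb5.conf for one realm and one KDC host.
# write_krb5_conf is a hypothetical helper; it writes only the
# realm-specific settings.
write_krb5_conf() (
    realm="$1"       # e.g. HDP2.COM
    kdc_host="$2"    # e.g. hdp21.hdp.ibm.com
    out="$3"         # e.g. /etc/krb5.conf
    # Strip the first label of the host name: hdp21.hdp.ibm.com -> hdp.ibm.com
    domain="${kdc_host#*.}"
    cat > "$out" <<EOF
[libdefaults]
 default_realm = ${realm}
 dns_lookup_realm = false
 dns_lookup_kdc = false
 ticket_lifetime = 24h
 forwardable = true

[realms]
 ${realm} = {
  kdc = ${kdc_host}:88
  admin_server = ${kdc_host}:749
  default_domain = ${domain}
 }

[domain_realm]
 .${domain} = ${realm}
 ${domain} = ${realm}
EOF
)

# Usage (writes to a scratch file first so the result can be reviewed):
#   write_krb5_conf HDP2.COM hdp21.hdp.ibm.com /tmp/krb5.conf
```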

Edit /var/kerberos/krb5kdc/kdc.conf

default_realm = HDP2.COM

[kdcdefaults]

v4_mode = nopreauth

kdc_ports = 0

[realms]

HDP2.COM = {

kdc_ports = 88

admin_keytab = /etc/kadm5.keytab

database_name = /var/kerberos/krb5kdc/principal

acl_file = /var/kerberos/krb5kdc/kadm5.acl

key_stash_file = /var/kerberos/krb5kdc/stash

max_life = 10h 0m 0s

max_renewable_life = 7d 0h 0m 0s

master_key_type = des3-hmac-sha1

supported_enctypes = arcfour-hmac:normal des3-hmac-sha1:normal des-cbc-crc:normal des:normal des:v4 des:norealm des:onlyrealm des:afs3

default_principal_flags = +preauth

}

Edit /var/kerberos/krb5kdc/kadm5.acl
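The content of the ACL file is not shown here. A common choice with MIT Kerberos is a single entry that grants all privileges to every principal with an admin instance, which would cover the root/admin principal created in the next steps. Whether this matches the original setup is an assumption, and the helper name write_admin_acl is hypothetical.

```shell
# Sketch: write a kadm5.acl that gives every */admin principal of the
# realm all privileges ("*"). This entry is an assumption, not taken
# from the guide.
write_admin_acl() (
    realm="$1"   # e.g. HDP2.COM
    acl="$2"     # e.g. /var/kerberos/krb5kdc/kadm5.acl
    printf '*/admin@%s\t*\n' "$realm" > "$acl"
)

# Usage (as root):
#   write_admin_acl HDP2.COM /var/kerberos/krb5kdc/kadm5.acl
```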

3- Creating the KDC database

kdb5_util create -r HDP2.COM -s

4- Add a principal for root user

/usr/sbin/kadmin.local -q "addprinc root/admin"

5- Restart krb5kdc and kadmin services

/sbin/service krb5kdc stop

/sbin/service kadmin stop

/sbin/service krb5kdc start

/sbin/service kadmin start

Check the status of the services. If everything goes well, the output looks like this:

/sbin/service krb5kdc status

Redirecting to /bin/systemctl status krb5kdc.service

krb5kdc.service - Kerberos 5 KDC

Loaded: loaded (/usr/lib/systemd/system/krb5kdc.service; disabled; vendor preset: disabled)

Active: active (running) since Mon 2018-07-30 08:13:40 PDT; 20h ago

Main PID: 2030 (krb5kdc)

CGroup: /system.slice/krb5kdc.service

└─2030 /usr/sbin/krb5kdc -P /var/run/krb5kdc.pid

Jul 30 08:13:40 hdp21.hdp.ibm.com systemd[1]: Starting Kerberos 5 KDC...

Jul 30 08:13:40 hdp21.hdp.ibm.com systemd[1]: Started Kerberos 5 KDC.

/sbin/service kadmin status

Redirecting to /bin/systemctl status kadmin.service

kadmin.service - Kerberos 5 Password-changing and Administration

Loaded: loaded (/usr/lib/systemd/system/kadmin.service; disabled; vendor preset: disabled)

Active: active (running) since Mon 2018-07-30 08:13:48 PDT; 20h ago

Main PID: 2045 (kadmind)

CGroup: /system.slice/kadmin.service

└─2045 /usr/sbin/kadmind -P /var/run/kadmind.pid

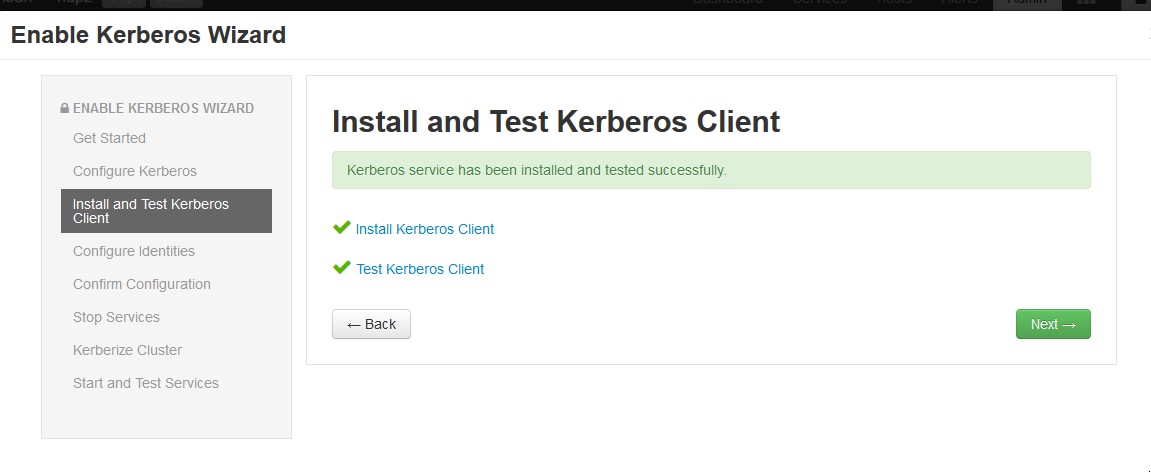

Now you can open your Ambari GUI and enable Kerberos.

Ambari restarts all services and creates principals and keytab files for every user.

It is also possible to create your principals and keytabs manually with the kadmin tool.

kadmin -p root/admin@HDP2.COM

Authenticating as principal root/admin@HDP2.COM with password.

Password for root/admin@HDP2.COM:

kadmin: listprincs

HTTP/[email protected]

K/[email protected]

activity_analyzer/[email protected]

activity_explorer/[email protected]

[email protected]

[email protected]

amshbase/[email protected]

amszk/[email protected]

dn/[email protected]

[email protected]

hbase/[email protected]

[email protected]

hbase/[email protected]

jhs/[email protected]

kadmin/[email protected]

kadmin/[email protected]

kadmin/[email protected]

kiprop/[email protected]

krbtgt/[email protected]

nfs/[email protected]

nm/[email protected]

nn/[email protected]

rm/[email protected]

root/[email protected]

yarn/[email protected]

zookeeper/[email protected]

Ambari creates the keytab files and saves them in the /etc/security/keytabs directory:

ls /etc/security/keytabs/

activity-analyzer.headless.keytab

activity-explorer.headless.keytab

ambari.server.keytab

ams.collector.keytab

ams-hbase.master.keytab

ams-hbase.regionserver.keytab

ams-zk.service.keytab

dn.service.keytab

hbase.headless.keytab

hbase.service.keytab

hdfs.headless.keytab

jhs.service.keytab

nfs.service.keytab

nm.service.keytab

nn.service.keytab

rm.service.keytab

smokeuser.headless.keytab

spnego.service.keytab

yarn.service.keytab

zk.service.keytab
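For the manual route with kadmin mentioned earlier, a principal and its keytab can be created in two steps. The following is a minimal sketch meant to run as root on the KDC host: addprinc -randkey and ktadd -k are standard kadmin commands, while the helper name create_keytab, the KADMIN override variable, and the example principal are hypothetical.

```shell
# Sketch: create a principal with a random key and export it to a keytab.
# create_keytab and the KADMIN variable are hypothetical helpers.
create_keytab() (
    principal="$1"   # e.g. streamsadmin@HDP2.COM
    keytab="$2"      # e.g. /etc/security/keytabs/streamsadmin.keytab
    kadmin="${KADMIN:-kadmin.local}"
    # Step 1: create the principal without a password.
    # Step 2: write its keys to the keytab file.
    "$kadmin" -q "addprinc -randkey $principal" &&
        "$kadmin" -q "ktadd -k $keytab $principal"
)

# Usage (as root on the KDC host):
#   create_keytab streamsadmin@HDP2.COM /etc/security/keytabs/streamsadmin.keytab
```

Note that ktadd generates new keys for the principal, so a keytab exported earlier for the same principal stops working.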

The CSV file with the list of configured principals can be downloaded using the following Ambari URL:

https://<ambari_hostname:port>/api/v1/clusters/<Cluster_name>/kerberos_identities?fields=*&format=csv

Replace the placeholders <ambari_hostname:port> and <Cluster_name> with the corresponding values for your server.
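The download can be sketched with curl. Both function names below are hypothetical, and the Ambari user is passed as an argument; curl prompts for the password because only the user name is given to -u.

```shell
# Sketch: build the kerberos_identities CSV URL and download it with curl.
# Both function names are hypothetical helpers.
kerberos_identities_url() {
    # $1 = Ambari host:port, $2 = cluster name
    printf 'https://%s/api/v1/clusters/%s/kerberos_identities?fields=*&format=csv\n' "$1" "$2"
}

download_kerberos_identities() {
    # $1 = host:port, $2 = cluster name, $3 = Ambari user, $4 = output file
    curl --fail -u "$3" -o "$4" "$(kerberos_identities_url "$1" "$2")"
}

# Usage:
#   download_kerberos_identities <ambari_hostname:port> <Cluster_name> admin identities.csv
```

If the Ambari server uses a self-signed certificate, curl rejects the connection unless the certificate is trusted or verification is relaxed.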

If you have another Hadoop server, you can enable Kerberos authentication on your Hadoop cluster.

The following links show how to enable Kerberos authentication in Hortonworks, Cloudera, and IOP.

https://www.cloudera.com/documentation/enterprise/latest/topics/cm_sg_s4_kerb_wizard.html

Log in as root on your Hadoop server:

useradd streamsadmin

su - hdfs

hadoop fs -mkdir /user/streamsadmin

hadoop fs -mkdir /user/streamsadmin/testDirectory

hadoop fs -chown -R streamsadmin:streamsadmin /user/streamsadmin

In the above sample, the name of the Streams user is streamsadmin.

If your Streams user has another user name, create an HDFS user with that name instead.
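The same commands can be parameterized by user name. The following is a minimal sketch meant to run as root on the Hadoop server; the helper name create_hdfs_home and the RUN variable are hypothetical. Setting RUN=echo prints the commands instead of executing them.

```shell
# Sketch: create the HDFS home directory for an arbitrary Streams user.
# create_hdfs_home and RUN are hypothetical; set RUN=echo for a dry run.
create_hdfs_home() (
    user="$1"
    run="${RUN:-}"
    # Create the local user only if it does not exist yet.
    id "$user" >/dev/null 2>&1 || $run useradd "$user"
    # Create the directories as the hdfs superuser, then hand them over.
    $run su - hdfs -c "hadoop fs -mkdir -p /user/${user}/testDirectory" &&
        $run su - hdfs -c "hadoop fs -chown -R ${user}:${user} /user/${user}"
)

# Usage:
#   RUN=echo create_hdfs_home streamsadmin   # show the commands
#   create_hdfs_home streamsadmin            # run them
```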

Kerberos is a network authentication protocol that provides strong authentication for client/server applications.

The streamsx.hdfs toolkit supports Kerberos authentication. All operators have two additional parameters for Kerberos authentication:

- The authKeytab parameter specifies the Kerberos keytab file that is created for the principal.
- The authPrincipal parameter specifies the Kerberos principal, which is typically the principal that is created for the HDFS server.

1- Copy the core-site.xml file from the Hadoop server to the etc directory of your SPL application on the Streams server.

2- Copy the keytab file from the Hadoop server to the etc directory of your SPL application on the Streams server.

3- Test the keytab. For example:

kinit -k -t hdfs.headless.keytab <your-hdfs-principal>

If your configuration is correct, it creates a credential cache file in the /tmp directory like this:

/tmp/krb5cc_xxxx

where xxxx is your user ID, for example 1005.

4- Add the hostname and the IP address of your HDFS server (cluster) to the /etc/hosts file on the Streams server.

5- If you have any problem accessing the realm, copy the krb5.conf file from your HDFS server into a directory and add the following vmArg parameter to all HDFS2 operators in your SPL files. In this case, the path of the krb5.conf file is an absolute path. For example:

vmArg : "-Djava.security.krb5.conf=/etc/krb5.conf";

vmArg is a set of arguments to be passed to the Java VM within which this operator runs.

6- Create a test file in the data directory and add some lines to it: data/LineInput.txt
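The keytab test from the steps above can be wrapped in a small check that fails early when the file is missing. This is a minimal sketch: kinit -k -t and klist are standard MIT Kerberos client commands, and the helper name check_keytab is hypothetical.

```shell
# Sketch: verify a keytab before using it in an SPL application.
# check_keytab is a hypothetical helper name.
check_keytab() (
    keytab="$1"      # e.g. etc/hdfs.headless.keytab
    principal="$2"   # e.g. <your-hdfs-principal>
    if [ ! -r "$keytab" ]; then
        echo "keytab not readable: $keytab" >&2
        exit 1
    fi
    # Obtain a ticket from the keytab, then show the credential cache.
    kinit -k -t "$keytab" "$principal" && klist
)

# Usage:
#   check_keytab etc/hdfs.headless.keytab <your-hdfs-principal>
```

If the principal name is unknown, klist -k -t followed by the keytab path lists the principals stored in the keytab.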

The following SPL application demonstrates how to connect to HDFS via Kerberos authentication.

It reads the lines from the file data/LineInput.txt via the FileSource operator.

Then the HDFS2FileSink operator writes all incoming lines into a file in your user directory on the HDFS server.

For example:

/user/streamsadmin/HDFS-LineInput.txt

In the next step, the HDFS2FileSource operator reads the lines from the HDFS file /user/streamsadmin/HDFS-LineInput.txt.

In the last step, the FileSink operator writes all incoming lines from the HDFS file to data/result.txt on your local system.

All project files are in:

https://github.com/IBMStreams/streamsx.hdfs/tree/gh-pages/samples/HdfsKerberos

namespace application ;

use com.ibm.streamsx.hdfs::HDFS2FileSink ;

use com.ibm.streamsx.hdfs::HDFS2FileSource ;

composite HdfsKerberos

{

param

expression<rstring> $authKeytab : getSubmissionTimeValue("authKeytab", "etc/hdfs.headless.keytab") ;

expression<rstring> $authPrincipal : getSubmissionTimeValue("authPrincipal", "[email protected]") ;

expression<rstring> $configPath : getSubmissionTimeValue("configPath", "etc") ;

graph

stream<rstring lines> LineIn = FileSource()

{

param

file : "LineInput.txt" ;

}

() as lineSink1 = HDFS2FileSink(LineIn)

{

param

authKeytab : $authKeytab ;

authPrincipal : $authPrincipal ;

configPath : $configPath ;

file : "HDFS-LineInput.txt" ;

}

stream<rstring lines> LineStream = HDFS2FileSource()

{

param

authKeytab : $authKeytab;

authPrincipal : $authPrincipal ;

configPath : $configPath ;

file : "HDFS-LineInput.txt" ;

initDelay : 10.0l ;

}

() as MySink = FileSink(LineStream)

{

param

file : "result.txt" ;

flush : 1u ;

}

}
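Compiling and submitting the application can be sketched as follows. This assumes a configured Streams environment with STREAMS_INSTALL set, a running instance, and the toolkit located under $STREAMS_INSTALL/toolkits/com.ibm.streamsx.hdfs. The helper name build_and_submit and the RUN variable are hypothetical, and the principal is a placeholder you must supply.

```shell
# Sketch: compile the sample and submit it with explicit Kerberos
# parameters. build_and_submit and RUN are hypothetical; set RUN=echo
# to print the commands instead of running them.
build_and_submit() (
    principal="$1"   # e.g. <your-hdfs-principal>
    run="${RUN:-}"
    # Compile the main composite against the HDFS toolkit.
    $run sc -M application::HdfsKerberos \
        -t "$STREAMS_INSTALL/toolkits/com.ibm.streamsx.hdfs" &&
        # Submit the bundle and override the submission-time values.
        $run streamtool submitjob \
            -P authKeytab=etc/hdfs.headless.keytab \
            -P "authPrincipal=$principal" \
            -P configPath=etc \
            output/application.HdfsKerberos.sab
)

# Usage (from the project directory):
#   build_and_submit <your-hdfs-principal>
```

The -P values map to the getSubmissionTimeValue names in the composite, so the defaults in the SPL code apply whenever a parameter is omitted.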