-

Notifications

You must be signed in to change notification settings - Fork 55

Contribution Guide

The structured_experiments directory within the app houses all the experiments for the project. Given the R&D nature of the problem we are trying to solve, we wanted a place where we could house experiments and make them easily recreatable. This is where we can experiment with different preprocessing techniques, hyper-parameter tuning, or training data to hopefully decrease the character error rate and word error rate.

There is a guidelines.md file in the directory that outlines the template the stakeholder asked us to follow that is based on the Scientific Method.

The reason we Dockerized the experiments was for ease in recreating the experiment locally and asynchronously. For the test experiment that Niger created, we only have to run the following script in the command line interface within the root directory to recreate his test experiment:

docker-compose -f docker-compose.yml build train && docker-compose -f structured_experiments/2022.02.01.test/docker-compose.yml up --build train_test_experiment

What composes a structured experiment? In this context:

Dockerfiledocker-compose.ymlexperiment.shexperiments.mdrequirements.txtsetup_ground_truth.pytest_model.py

A Dockerfile is a text file that contains all the command line scripts needed to create a Docker image. Within the test_experiment's Dockerfile we have:

FROM scribble-ocr-train as tesseract_base_image

ADD requirements.txt /tmp/requirements.txt

RUN pip install -r /tmp/requirements.txt

COPY experiment.sh /train/tesstrain/experiment.sh

COPY setup_ground_truth.py /train/tesstrain/setup_ground_truth.py

COPY test_model.py /train/tesstrain/test_model.py

CMD ["sh" "/train/tesstrain/experiment.sh"]

What is happening in the Dockerfile? Essentially they are command line scripts that we are using to setup the environment in the Docker container:

- The first line is taking the scribble training set and using it as the base Tesseract image

- Then we add the

requirements.txtto the Docker environment - Then we pip install those requirements

- Next, we copy the

experiment.sh,setup_ground_truth.py, andtest_model.pyfiles from our local machine to our container - Lastly, we use the

CMDcommand to run the experiment shell script, which houses more command line scripts that setup the Kaggle training data ground truth and test model

This YAML file creates the services that the container will use. Since we have a Docker container for Tesseract, we need to use Docker's Compose to run our structured experiments.

version: "3"

services:

train_test_experiment:

image: test_experiment

container_name: test_experiment

build:

context: .

dockerfile: ./Dockerfile

volumes:

- "../../data:/train/tesstrain/data"

command: sh /train/tesstrain/experiment.sh

The important things to note about the docker-compose.yml file:

services: <- What follows next is what's in the service

train_test_experiment: <- Name of the service

image: <- Name of the Docker image

container_name: <- Name of the Docker container

build: <- This tells the service to build the app

context: <- This is where to look to build the app

*Note: the . denotes to look at the root directory*

dockerfile: <- Which Dockerfile to use

volumes: <- Used to persist data, this case, training data

*Note: the "../../data:/train/tesstrain/data"

sets the directory thats 2 levels up to the

/train/tesstrain/data directory in our

container

command: <- runs a command

*Note: sh says that next arg is going to be the

filepath that leads to your shell script

This is the shell file that houses the command line scripts to run the "bones" of the experiment as it calls on the setup_ground_truth.py to provide Tesseract with additional Kaggle data, as well as calling on the test_model.py to actually train and test the model.

The

docker-compose.ymluses theexperiment.sh's filepath in its command argument to help cut down on length and complexity in theDockerfile

We can also tune the model's hyper-parameters in the fourth line's make command

echo 'Setting Up Kaggle Test Data for Tesseract Training'

python setup_ground_truth.py /train/tesstrain/data/kaggle-ground-truth

echo 'Training Kaggle Test Model'

make training MODEL_NAME=kaggle START_MODEL=eng TESSDATA=/train/tessdata MAX_ITERATIONS=100

cp data/kaggle.traineddata /train/tessdata/kaggle.traineddata

echo 'Finished Training Model'

echo 'Testing Kaggle Model'

python test_model.py

echo 'Finished Testing Model'

This is where our container's virtual environment's libraries are stored.

There are two templates to consider when creating documentation: guidelines.md and experiment.md. The guidelines.md has outlined the scientific method and how to apply it to your experiment. This should be in your experiment's markdown file following the template that Niger laid out in his experiments.md

This is where Niger sets up the ground truth files for Tesseract for the Kaggle data set. This is something you will need to change if you are looking to augment the training the data as it is currently only configured for the test experiment Kaggle training data.

This is where the model is housed. Currently, it is set to the Story Squad test data.

Tesseract requires training data that appears in very particular format. All the training data must be placed in a folder nested within data/. This folder must be named <MODEL_NAME>-ground-truth where <MODEL_NAME> is any name of your choice. Each training image in the folder must be in .tiff or .png format. Each image must have an accompanying file with the same file name but the extension .gt.txt and not the image extension. This textfile contains the "ground truth" or the text you'd like Tesseract to learn to extract.

- Place ground truth consisting of line images and transcriptions in the folder

data/MODEL_NAME-ground-truth. This list of files will be split into training and evaluation data, the ratio is defined by theRATIO_TRAINvariable. - Images must be TIFF and have the extension

.tifor PNG and have the extension.png,.bin.pngor.nrm.png. - Transcriptions must be single-line plain text and have the same name as the line image but with the image extension replaced by

.gt.txt. - The repository contains a ZIP archive with sample ground truth, see ocrd-testset.zip. Extract it to

./data/foo-ground-truthand run make training.

NOTE: If you want to generate line images for transcription from a full page, see tips in issue 7 and in particular @shreeshrii's shell script.

-

.gt.txtfiles should only contain characters as they appear in the corresponding image (one line/row only). -

.gt.txtfiles should match the image file character for character, including backwards letters and misspelled words where possible.

-

Sideways/perpendicular text cannot be processed by the OCR - Automatic rejection

-

Watermarks will interfere with the OCR - Automatic rejection

Use the following to run the API in the background of your machine:

docker-compose -f docker-compose.yml up --build -d api

Note!: The API will fail to initiate unless you have a valid GOOGLE_CREDS environment variable set. This environment variable points to the contents of a credential file (application or service) generated from the google cloud console. If you have a credential file downloaded, simply run the following to place the file contents into the environment variable:

export GOOGLE_CREDS=$(cat <PATH TO CREDENTIAL FILE>)

☝️ you can place the above code in your .bashrc or .zshrc file to have your shell automatically execute this everytime you open it.

The logs will be available via the Docker UI or by using docker-compose logs train to see logged outupts.

-

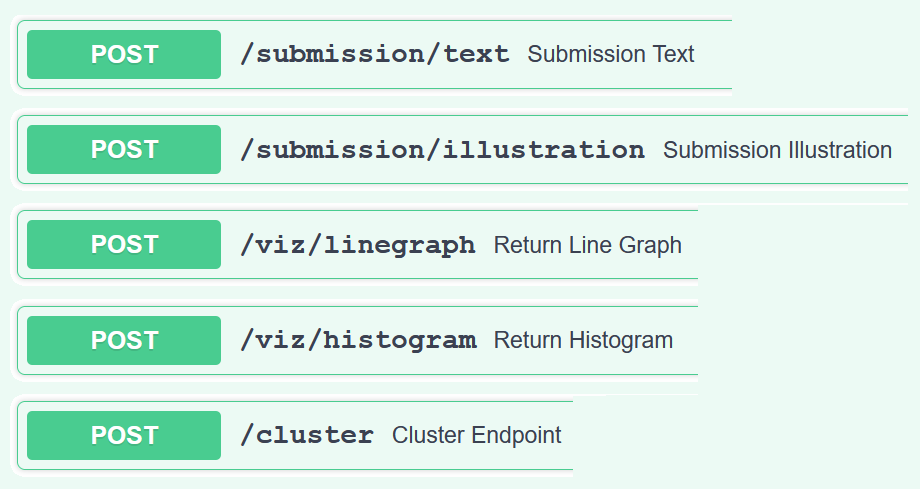

This subroute deals with original copy uploads of the user generated content (UGC).

-

URLs in these endpoints are verified via a SHA512 checksum that accompanies the file. These hashes are used to add an extra layer of protection to make sure that the file that is passed to the web backend and uploaded to the S3 bucket is indeed the file that we are grading.

-

In the

/textendpoint, the transcription service andsquad_scoremethod are used to transcribe, flag, and score submissions, -

A particular note about the

/clusterendpoint. Because of the limitations with Pydantic data modeling package, we could not structure a proper request body model. Our request body was structured with cohort IDs as the dictionary keys, then nested dictionaries inside each contained submissionIDs as keys. Given that the cluster endpoint was a late implementation into the project, we were bound by these limitations and therefore built a model around the existing request body. in future iterations we would HIGHLY RECOMMEND changing the request body of this endpoint so that a proper Pydantic model can be used to build out the SwaggerUI example request body.

- API

- app/api/wordcloud_database.py is an endpoint that works with the front end to create a word cloud from the most complex words in a story submission. app/utils/wordcloud contains the algorithms used to determine complex words. This API fetches demo data from an RDS database that holds 167 stories. The endpoint requires the user to choose the story ID (1-167), and then returns all the words in the story, along with the weight of the words according to how complex it is (all the weights of the document added together equals 1). Front end takes the words and weights and creates an animated word cloud with it.

-

Crop Cloud animation deployment

- Whitelist your IP on the relevant RDS database -> (crop-cloud-database)

- Fast API should be up and deployable, ready to provide gif with default values

- Input correct username, data-base name, and other credentials for access in .env

-

Static picture Deployment (located in crop_cloud_original.py)

- Obtain story_images.zip file from instructors

- Place zip file in the '/data/' folder

- Install Tesseract Binary

- make sure your program can find where tesseract has been installed, and you should be good to go.

-

Functionality

- Currently there is an APIRoute class that can plug into the application router for different endpoints. This function takes all incoming requests and separates the authorization header from the request client to check if that client is authorized to use the API endpoints.

-

Process

- Initially this functionality was going to get handled by the FastAPI.middleware decorator to declare a custom function as middleware which is supposed to have the exact same functionality as the class that we implemented. However, during testing it was clear that the middleware function was ineffective. The function has a limited scope that it can work with, which for FastAPI that is restricted to HTTP requests made from a web browser. When hitting the endpoints from an unauthorized client through Python, the middleware function would not trigger and check the request client header. To get around this limited functionality of the built-in middleware, we customized the service that the middleware uses to receive its hook. The documentation for doing so can be found on the FastAPI Documentation here

-

Future Considerations

- This method is clearly a bit of a workaround. In future iterations, this class could be completely reworked into a framework for adding custom middleware solutions that are not restricted to HTTP network traffic.

- In our application, the value of

DS_SECRET_TOKENis static. In future iterations this should be given routes to regenerate new tokens and deprecate compromised or old tokens. Adding this functionality would increase the security of the tokens and decrease the attack vectors that could be exploited in a production server.

The infrastructure of the DS API is handled by AWS Elastic Beanstalk Service using Docker containers.

These links were important to learn where to start with AWS: