Adding best practices for large scale deep learning (#2144)

Adding best-practices for large-scale deep learning workloads.


Showing 35 changed files with 3,163 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,3 @@ | ||

| [submodule "best-practices/largescale-deep-learning/Training/Bloom-Pretrain/src/Megatron-DeepSpeed"] | ||

| path = best-practices/largescale-deep-learning/Training/Bloom-Pretrain/src/Megatron-DeepSpeed | ||

| url = https://github.com/bigscience-workshop/Megatron-DeepSpeed.git |

Binary file added

BIN

+73.1 KB

best-practices/largescale-deep-learning/.images/AzureML Training Stack.png


581 changes: 581 additions & 0 deletions


best-practices/largescale-deep-learning/Data-loading/data-loading.md

Large diffs are not rendered by default.


Binary file added

BIN

+42.6 KB

best-practices/largescale-deep-learning/Data-loading/media/Blob-to-Tensor.vsdx

Binary file not shown.

Binary file added

BIN

+154 KB

best-practices/largescale-deep-learning/Data-loading/media/blob-to-tensor.png


91 changes: 91 additions & 0 deletions


best-practices/largescale-deep-learning/Environment/ACPT.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,91 @@ | ||

| ## Optimized Environment for large scale distributed training | ||

|

|

||

| To run faster, optimized training and inference for large models on AzureML, we recommend the Azure Container for PyTorch (ACPT) environment, which bundles Microsoft technologies for training with PyTorch on Azure. In addition to the AzureML packages, this environment includes recent training optimization technologies such as [ONNX / ONNX Runtime / ONNX Runtime Training](https://onnxruntime.ai/), | ||

| [ORT MoE](https://github.com/pytorch/ort/tree/main/ort_moe), [DeepSpeed](https://www.deepspeed.ai/), [MSCCL](https://github.com/microsoft/msccl), and Nebula checkpointing, among others, to improve training performance. | ||

|

|

||

| ## Azure Container for PyTorch (ACPT) | ||

|

|

||

| ### Curated Environment | ||

|

|

||

| Several ready-to-use curated images are published for the [ACPT curated environment](https://learn.microsoft.com/en-us/azure/machine-learning/resource-curated-environments#azure-container-for-pytorch-acpt-preview), covering recent versions of PyTorch, CUDA, and Ubuntu. You can find them by filtering for “ACPT” in the Studio: | ||

|

|

||

|  | ||

|

|

||

| Once you’ve selected a curated environment that has the packages you need, you can refer to it in your YAML file. For example, if you want to use one of the ACPT curated environments, your command job YAML file might look like the following: | ||

|

|

||

| ```yaml | ||

| $schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json | ||

| type: command | ||

| description: Trains a 175B GPT model | ||

| experiment_name: "large_model_experiment" | ||

| compute: azureml:cluster-gpu | ||

| code: ../../src | ||

| environment: azureml:AzureML-ACPT-pytorch-1.12-py39-cuda11.6-gpu@latest | ||

| environment_variables: | ||

| NCCL_DEBUG: 'WARN' | ||

| NCCL_DEBUG_SUBSYS: 'WARN' | ||

| CUDA_DEVICE_ORDER: 'PCI_BUS_ID' | ||

| NCCL_SOCKET_IFNAME: 'eth0' | ||

| NCCL_IB_PCI_RELAXED_ORDERING: '1' | ||

| ``` | ||
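The `environment_variables` block in the job YAML above only takes effect if the values actually reach the training process. As a minimal sketch (the helper name `find_env_mismatches` is hypothetical and not part of any AzureML or NCCL API), a training script could compare its own environment against the values from that example before starting a long run:

```python
import os

# Expected values copied from the job YAML example above.
EXPECTED_NCCL_ENV = {
    "NCCL_DEBUG": "WARN",
    "NCCL_DEBUG_SUBSYS": "WARN",
    "CUDA_DEVICE_ORDER": "PCI_BUS_ID",
    "NCCL_SOCKET_IFNAME": "eth0",
    "NCCL_IB_PCI_RELAXED_ORDERING": "1",
}


def find_env_mismatches(env, expected=EXPECTED_NCCL_ENV):
    """Return {name: (expected, actual)} for variables that are missing or differ.

    `env` is any mapping of variable names to values, for example os.environ.
    A missing variable is reported with an actual value of None.
    """
    return {
        name: (want, env.get(name))
        for name, want in expected.items()
        if env.get(name) != want
    }


if __name__ == "__main__":
    # Hypothetical usage at the top of a training script.
    for name, (want, got) in find_env_mismatches(os.environ).items():
        print(f"{name}: expected {want!r}, found {got!r}")
```

Passing the environment in as a plain mapping keeps the check easy to run locally without a GPU cluster.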

|

|

||

| You can also specify the environment name through the Python SDK: | ||

| ```python | ||

| job = command( | ||

|

|

||

| environment="AzureML-ACPT-pytorch-1.12-py39-cuda11.6-gpu@latest" | ||

| ) | ||

| ``` | ||

| Appending the `@latest` tag to the environment name pulls the latest version of the image. To pin a specific version of the curated environment, use the following syntax: | ||

| ```yaml | ||

| $schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json | ||

| ... | ||

| environment: azureml:AzureML-ACPT-pytorch-1.12-py39-cuda11.6-gpu:3 | ||

| ... | ||

| ``` | ||
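The two reference forms shown above (`name@label` and `name:version`, optionally prefixed with `azureml:`) can be told apart mechanically. The following is an illustrative sketch only: `EnvironmentRef` and `parse_environment_ref` are hypothetical names, and this is not how AzureML itself resolves environments.

```python
from typing import NamedTuple, Optional


class EnvironmentRef(NamedTuple):
    name: str
    version: Optional[str]  # set for the "name:version" form
    label: Optional[str]    # set for the "name@label" form


def parse_environment_ref(ref: str) -> EnvironmentRef:
    """Split an environment reference into name, version, and label."""
    # The YAML form carries an "azureml:" prefix; the SDK example omits it.
    body = ref[len("azureml:"):] if ref.startswith("azureml:") else ref
    if "@" in body:
        name, label = body.rsplit("@", 1)
        return EnvironmentRef(name, None, label)
    if ":" in body:
        name, version = body.rsplit(":", 1)
        return EnvironmentRef(name, version, None)
    return EnvironmentRef(body, None, None)


latest = parse_environment_ref(
    "azureml:AzureML-ACPT-pytorch-1.12-py39-cuda11.6-gpu@latest"
)
pinned = parse_environment_ref(
    "azureml:AzureML-ACPT-pytorch-1.12-py39-cuda11.6-gpu:3"
)
print(latest.label, pinned.version)
```

Pinning a version trades automatic updates for reproducibility, which usually matters more for long training runs.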

|

|

||

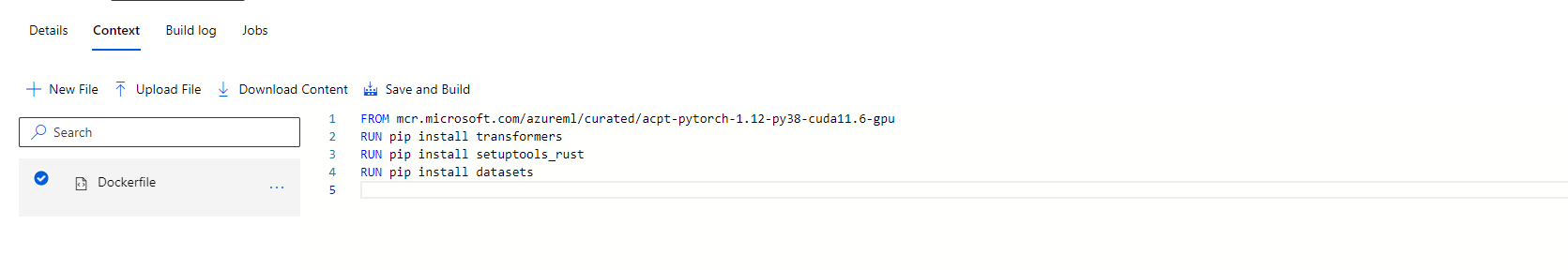

| ### Custom Environment | ||

| To extend a curated environment with additional packages, such as Hugging Face `transformers` or `datasets`, create a new environment from a Docker context that uses the ACPT curated environment as the base image and installs the extra packages on top of it, as shown below: | ||

|

|

||

|  | ||

|

|

||

| In the new Docker context, use the ACPT curated environment as the base image and add `pip install` steps for `transformers`, `datasets`, and any other packages you need. | ||

|  | ||

|

|

||

| You can also save the Dockerfile locally and point to its directory from an environment YAML file: | ||

| ```yaml | ||

| $schema: https://azuremlschemas.azureedge.net/latest/environment.schema.json | ||

| name: custom_aml_environment | ||

| build: | ||

| path: docker | ||

| ``` | ||

| Create the custom environment using: | ||

| ```sh | ||

| az ml environment create -f cloud/environment/environment.yml | ||

| ``` | ||

|

|

||

| Then reference the custom environment in your command job YAML: | ||

| ```yaml | ||

| $schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json | ||

| type: command | ||

| description: Trains a 175B GPT model | ||

| experiment_name: "large_model_experiment" | ||

| compute: azureml:cluster-gpu | ||

|

|

||

| code: ../../src | ||

| environment: azureml:custom_aml_environment@latest | ||

| ``` | ||

| For more detail, see [Custom Environment using SDK and CLI](https://learn.microsoft.com/en-us/azure/machine-learning/how-to-manage-environments-v2?tabs=cli#create-an-environment). | ||

|

|

||

| ## Benefits | ||

|

|

||

| Benefits of using the ACPT curated environment include: | ||

|

|

||

| - Significantly faster training and inference when using the ACPT environment. | ||

| - Optimized training framework to set up, develop, and accelerate PyTorch models on large workloads. | ||

| - Up-to-date stack with the latest compatible versions of Ubuntu, Python, PyTorch, CUDA/ROCm, etc. | ||

| - Ease of use: all components are installed and validated against dozens of Microsoft workloads to reduce setup costs and accelerate time to value. | ||

| - Latest training optimization technologies: ONNX / ONNX Runtime / ONNX Runtime Training, ORT MoE, DeepSpeed, MSCCL, and others. | ||

| - Integration with Azure ML: track your PyTorch experiments in ML Studio or with the AzureML SDK. | ||

| - As-is use with pre-installed packages, or build on top of the curated environment. | ||

| - The image is also available as a DSVM | ||

| - Azure Customer Support |

67 changes: 67 additions & 0 deletions


best-practices/largescale-deep-learning/Environment/README.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,67 @@ | ||

| ## Introduction | ||

|

|

||

| An environment is typically the first thing to start with when doing deep learning training for several reasons: | ||

|

|

||

| * <b>Reproducibility</b>: Setting up a proper environment ensures that the training process is repeatable and can be easily replicated by others, which is crucial for scientific research and collaboration. | ||

|

|

||

| * <b>Dependency management</b>: Deep learning requires a lot of dependencies and libraries, such as TensorFlow, PyTorch, or Keras, to name a few. An environment provides a way to manage these dependencies and avoid conflicts with other packages or libraries installed on the system. | ||

|

|

||

| * <b>Portability</b>: Environments can be easily exported and imported, making it possible to move the training process to another system or even cloud computing resources. | ||

|

|

||

| * <b>Auditing</b>: Environments come with full lineage tracking to be able to associate experiments with a particular environment configuration that was used during training. | ||

|

|

||

| Azure Machine Learning environments are an encapsulation of the environment where your machine learning training happens. The environments are managed and versioned entities within your Machine Learning workspace that enable reproducible, auditable, and portable machine learning workflows across a variety of compute targets. | ||

|

|

||

| ### Types | ||

| Environments can broadly be divided into two main categories: curated and user-managed. | ||

|

|

||

| Curated environments are provided by Azure Machine Learning and are available in your workspace by default. Intended to be used as is, they contain collections of Python packages and settings to help you get started with various machine learning frameworks. These pre-created environments also allow for faster deployment time. For a full list, see the curated environments article. | ||

|

|

||

| With user-managed environments, you're responsible for setting up your environment and installing every package that your training script needs on the compute target. Also be sure to include any dependencies needed for model deployment. | ||

|

|

||

| ## Building the environment for training | ||

| We recommend starting from a curated environment and adding the remaining libraries and dependencies that are specific to your model training on top of it. For PyTorch workloads, we recommend starting from our Azure Container for PyTorch and following the steps outlined [here](./ACPT.md). | ||

|

|

||

| ## Validation | ||

| Before running an actual training job with the environment you just created, we recommend validating it. We've built a sample job that runs standard health checks on a GPU cluster to test the performance and correctness of distributed multi-node GPU training. This helps with troubleshooting performance issues related to the environment and container that you plan to use for long training jobs. | ||

|

|

||

| One such validation is running the NVIDIA NCCL tests on the environment. The NCCL tests are a set of tools that measure the performance of NCCL, a library of topology-aware inter-GPU communication primitives that can be easily integrated into [applications](https://developer.nvidia.com/blog/scaling-deep-learning-training-nccl/). NCCL is widely used in deep learning frameworks, where the AllReduce collective is heavily used for neural network training, and its multi-GPU and multi-node communication makes efficient scaling of that training possible. | ||

|

|

||

| NVIDIA NCCL tests can help you verify the optimal bandwidth and latency of your NCCL operations, such as all-gather, all-reduce, broadcast, reduce, reduce-scatter as well as point-to-point send and receive. They can also help you identify any bottlenecks or errors in your network or hardware configuration, such as NVLinks, PCIe links, or network interfaces. By running NVIDIA NCCL tests before starting a large machine learning model training, you can ensure that your training environment is optimized and ready for efficient and reliable distributed inter-node GPU communications. | ||

|

|

||

| See the example [here](https://github.com/Azure/azureml-examples/tree/main/cli/jobs/single-step/gpu_perf), along with expected baselines for some of the most common GPUs in Azure: | ||

|

|

||

| ### Standard_ND40rs_v2 (V100), 2 nodes | ||

|

|

||

| ``` | ||

| # out-of-place in-place | ||

| # size count type redop time algbw busbw error time algbw busbw error | ||

| # (B) (elements) (us) (GB/s) (GB/s) (us) (GB/s) (GB/s) | ||

| db7284be16ae4f7d81abf17cb8e41334000002:3876:3876 [0] NCCL INFO Launch mode Parallel | ||

| 33554432 8388608 float sum 4393.9 7.64 14.32 4e-07 4384.4 7.65 14.35 4e-07 | ||

| 67108864 16777216 float sum 8349.4 8.04 15.07 4e-07 8351.5 8.04 15.07 4e-07 | ||

| 134217728 33554432 float sum 16064 8.36 15.67 4e-07 16032 8.37 15.70 4e-07 | ||

| 268435456 67108864 float sum 31486 8.53 15.99 4e-07 31472 8.53 15.99 4e-07 | ||

| 536870912 134217728 float sum 62323 8.61 16.15 4e-07 62329 8.61 16.15 4e-07 | ||

| 1073741824 268435456 float sum 124011 8.66 16.23 4e-07 123877 8.67 16.25 4e-07 | ||

| 2147483648 536870912 float sum 247301 8.68 16.28 4e-07 247285 8.68 16.28 4e-07 | ||

| 4294967296 1073741824 float sum 493921 8.70 16.30 4e-07 493850 8.70 16.31 4e-07 | ||

| 8589934592 2147483648 float sum 987274 8.70 16.31 4e-07 986984 8.70 16.32 4e-07 | ||

| ``` | ||
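To read the table above, it helps to know how the bandwidth columns relate. In nccl-tests, `algbw` is the message size divided by the elapsed time, and for all-reduce `busbw` is `algbw` scaled by `2 * (n - 1) / n`, where `n` is the number of ranks. The sketch below assumes the run is an all-reduce over 16 ranks (2 nodes with 8 GPUs each); that count is inferred from the numbers rather than stated in the output. The function names are illustrative.

```python
def algorithm_bandwidth_gbps(size_bytes: int, time_us: float) -> float:
    """algbw in GB/s: bytes per microsecond is MB/s, so divide by 1000."""
    return size_bytes / time_us / 1e3


def all_reduce_bus_bandwidth_gbps(algbw_gbps: float, n_ranks: int) -> float:
    """busbw for all-reduce: algbw scaled by 2 * (n - 1) / n."""
    return algbw_gbps * 2 * (n_ranks - 1) / n_ranks


# First data row of the V100 table: 33554432 bytes in 4393.9 us (out-of-place).
algbw = algorithm_bandwidth_gbps(33554432, 4393.9)
busbw = all_reduce_bus_bandwidth_gbps(algbw, n_ranks=16)
print(f"algbw={algbw:.2f} GB/s busbw={busbw:.2f} GB/s")
```

Because `busbw` removes the dependence on rank count, it is usually the more convenient number to compare across clusters of different sizes.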

|

|

||

| ### Standard_ND96amsr_A100_v4 (A100), 2 nodes | ||

| ``` | ||

| # out-of-place in-place | ||

| # size count type redop time algbw busbw error time algbw busbw error | ||

| # (B) (elements) (us) (GB/s) (GB/s) (us) (GB/s) (GB/s) | ||

| 47c46425da29465eb4f752ffa03dd537000001:4122:4122 [0] NCCL INFO Launch mode Parallel | ||

| 33554432 8388608 float sum 593.7 56.52 105.97 5e-07 590.2 56.86 106.60 5e-07 | ||

| 67108864 16777216 float sum 904.7 74.18 139.09 5e-07 918.0 73.11 137.07 5e-07 | ||

| 134217728 33554432 float sum 1629.6 82.36 154.43 5e-07 1654.3 81.13 152.12 5e-07 | ||

| 268435456 67108864 float sum 2996.0 89.60 167.99 5e-07 3056.7 87.82 164.66 5e-07 | ||

| 536870912 134217728 float sum 5631.9 95.33 178.74 5e-07 5639.2 95.20 178.51 5e-07 | ||

| 1073741824 268435456 float sum 11040 97.26 182.36 5e-07 10985 97.74 183.27 5e-07 | ||

| 2147483648 536870912 float sum 21733 98.81 185.27 5e-07 21517 99.81 187.14 5e-07 | ||

| 4294967296 1073741824 float sum 42843 100.25 187.97 5e-07 42745 100.48 188.40 5e-07 | ||

| 8589934592 2147483648 float sum 85710 100.22 187.91 5e-07 85070 100.98 189.33 5e-07 | ||

| ``` |
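Comparing a new run against baselines like the ones above can be automated. The following is a rough sketch that assumes the 12-column layout shown in these tables (other nccl-tests versions may print different columns). The function names, the 0.9 tolerance, and the 187 GB/s baseline are hypothetical choices for illustration, not part of the sample job.

```python
def parse_nccl_rows(text: str):
    """Extract data rows from nccl-tests output, skipping headers and log lines."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have 12 columns and start with the message size in bytes.
        if len(fields) != 12 or not fields[0].isdigit():
            continue
        rows.append({
            "size_bytes": int(fields[0]),
            "out_of_place_busbw": float(fields[6]),
            "in_place_busbw": float(fields[10]),
        })
    return rows


def meets_baseline(rows, baseline_gbps: float, tolerance: float = 0.9) -> bool:
    """True if the peak out-of-place busbw reaches tolerance * baseline."""
    peak = max(row["out_of_place_busbw"] for row in rows)
    return peak >= tolerance * baseline_gbps


# Two rows copied from the A100 table above, plus header and log lines.
SAMPLE = """\
# size count type redop time algbw busbw error time algbw busbw error
47c46425da29465eb4f752ffa03dd537000001:4122:4122 [0] NCCL INFO Launch mode Parallel
4294967296 1073741824 float sum 42843 100.25 187.97 5e-07 42745 100.48 188.40 5e-07
8589934592 2147483648 float sum 85710 100.22 187.91 5e-07 85070 100.98 189.33 5e-07
"""

rows = parse_nccl_rows(SAMPLE)
print(len(rows), meets_baseline(rows, baseline_gbps=187.0))
```

A check like this could run as the last step of the validation job so that a degraded cluster is flagged before a long training run starts.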
