| title | sidebar_label | slug |
|---|---|---|
| Debugging | Debugging | /sdk/debugging |

import Tabs from '@theme/Tabs'; import TabItem from '@theme/TabItem';

Several tools can help you work out why a certain user got a certain value.

Every config in the Statsig ecosystem (meaning Feature Gates, Dynamic Configs, Experiments, and Layers) has a Setup tab and a Diagnostics tab.

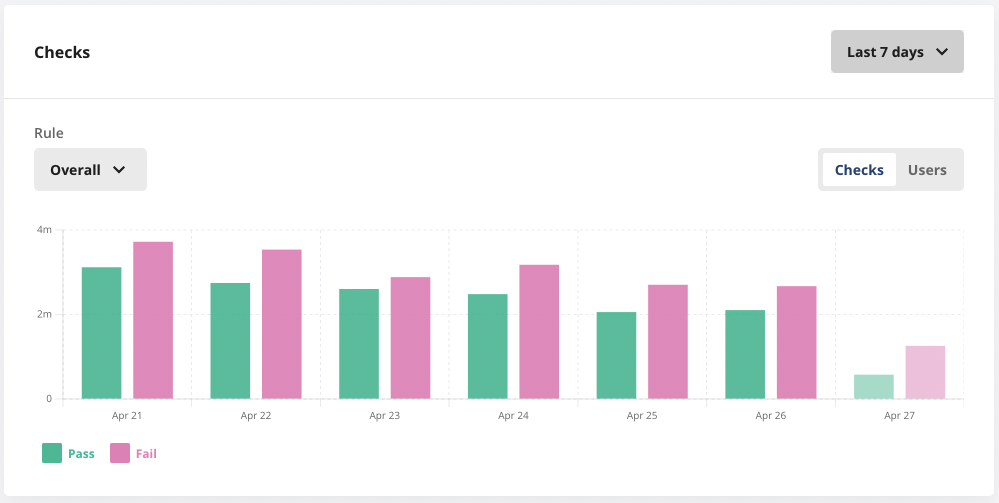

The Diagnostics tab is useful for seeing higher-level pass/fail/bucketing population sizes over time, via the checks chart at the top.

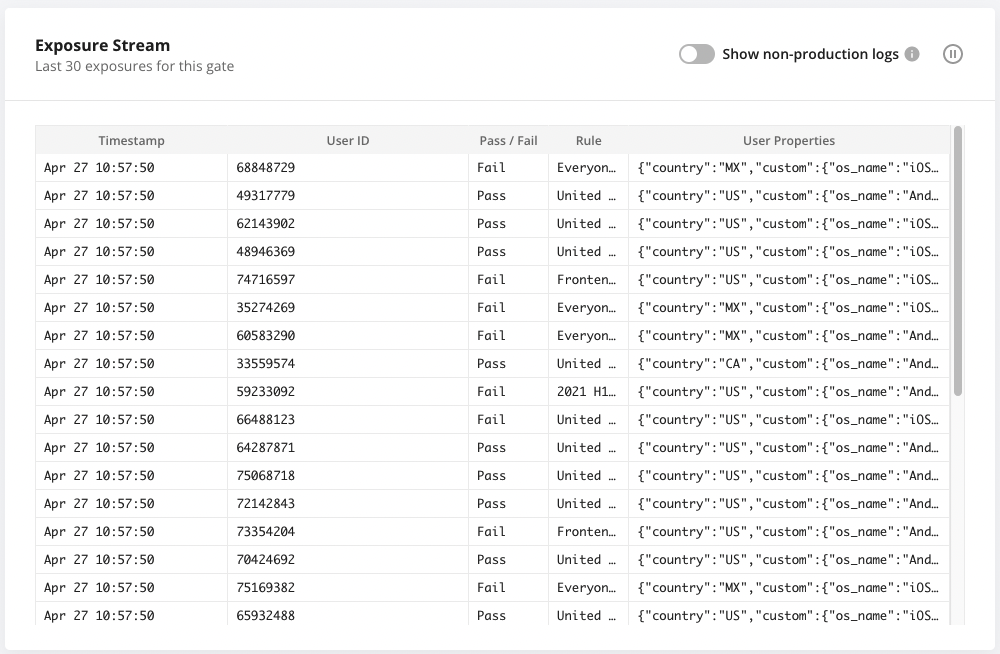

For debugging specific checks, the log stream at the bottom is useful. It shows both production and non-production exposures in near real time:

<small>To see logs from non-production environments, toggle "Show non-production logs" in the upper right corner.</small>

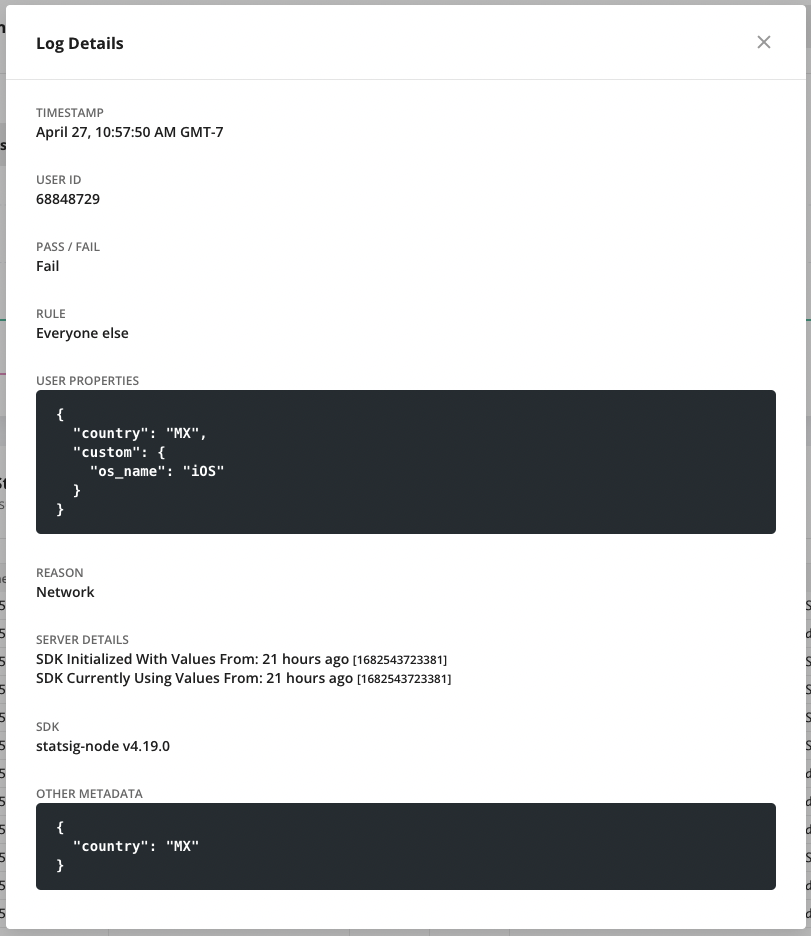

Clicking on a specific exposure shows more details about its evaluation. While the rule is visible in the exposure stream, additional factors, known as the evaluation reason, can be found in the details view.

Evaluation reasons are a way to understand why a certain value was returned for a given check. The reasons differ slightly between client and server SDKs, and the newer SDKs format them slightly differently. These reasons are used for debugging and internal logging purposes only and may be updated as needed.

Newer versions of the SDK will contain two tags: one describing the initialization state of the SDK, and the other qualifying the source of that value.

So in addition to the reasons above, you may have:

- `Recognized`: the value was recognized in the set of configs the client was operating with

- `Unrecognized`: the value was not included in the set of configs the client was operating with

- `Sticky`: the value is from `keepDeviceValue = true` on the method call

- `LocalOverride`: the value is from a local override set on the SDK

For example, `Network:Recognized` means the SDK had up-to-date values from a successful initialization network request, and the gate/config/experiment you were checking was defined in the payload.

If a config is unexpectedly missing from the payload, it may have been excluded due to [Target Apps](/sdk-keys/target-apps) or [Client Bootstrapping](/client/concepts/bootstrapping).

</TabItem>

<TabItem value="server" label="Server SDKS">

For server SDKs, the reason for the value you are seeing can be:

- `Network`: fetched at SDK initialization time from the network

- `Bootstrap`: derived from bootstrapping the server SDK with a set of values

- `DataAdapter`: derived from the provided data adapter or data store

- `LocalOverride`: from an override set locally on the SDK via an override API

- `Unrecognized`: the SDK was initialized, but this config did not exist in the set of values

- `Uninitialized`: the SDK was not yet successfully initialized

In newer server SDKs, the reasons will be a combination of initialization source and evaluation reason:

**Initialization Source**

- `Network`: fetched at SDK initialization time from the network

- `Bootstrap`: derived from bootstrapping the server SDK with a set of values

- `DataAdapter`: derived from the provided data adapter or data store

- `Uninitialized`: the SDK was not yet successfully initialized

- `StatsigNetwork`: a custom proxy or gRPC streaming setup triggered its fallback behavior, so the SDK fell back to the Statsig API.

  - If no network config or overrides are used, the SDK uses the Statsig APIs by default, but the reason will be `Network`.

**Evaluation Reason**

- `LocalOverride`: from an override set locally on the SDK via an override API

- `Unrecognized`: this config did not exist in the set of values

- `Unsupported`: the SDK does not support this condition type or operator. Usually this means the SDK is out of date and missing new features

- `error`: an error happened during evaluation

- no reason: successful evaluation

So `Network` means the SDK was initialized with values from the network, and the evaluation was successful. If you see `Network:Unrecognized`, it means the SDK was initialized with values from the network, but the gate/config/experiment you were checking was not included in the payload.
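When scanning logs, it can help to split a newer server SDK reason into its two parts. The sketch below is a hypothetical helper (`parseEvaluationReason` is not part of any Statsig SDK) and assumes the `Source:Reason` format described above, where a missing second part means the evaluation succeeded.

```js
// Hypothetical helper, not a Statsig SDK API: splits a reason string such as
// "Network:Unrecognized" into its initialization source and evaluation reason.
function parseEvaluationReason(reason) {
  const [source, evaluationReason] = reason.split(':');
  return {
    source, // e.g. "Network", "Bootstrap", "DataAdapter"
    // null means no evaluation reason was attached, i.e. a successful evaluation
    evaluationReason: evaluationReason ?? null,
  };
}

console.log(parseEvaluationReason('Network'));
console.log(parseEvaluationReason('Network:Unrecognized'));
```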

</TabItem>

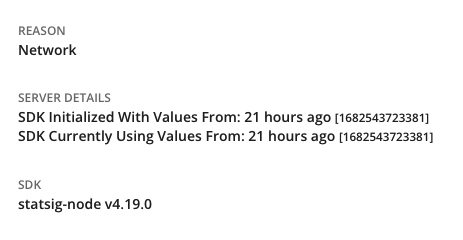

In addition to these reasons, the most recent versions of server SDKs also give you two times to watch: the time at which config definitions initialized the SDK, and the time at which the SDK evaluated those definitions. When you change a gate/config/experiment, the project time updates and server SDKs download the new definition. If you have not changed your project in two hours, and the evaluation time says the SDK is up to date as of two hours ago, then you're evaluating the most up-to-date definition of that gate/experiment.

In this example, the project was last updated yesterday, and the SDK was initialized with those values. The project has not updated since that time, and the SDK is still using that same set of definitions which it fetched from the network. You can also see the SDK type and version associated with a given check.

To facilitate testing with Statsig, we provide a few tools to help you test your code without fetching values from the Statsig network:

- Local Mode: When the `localMode` parameter is set to true, the SDK operates without making network calls and returns only default values. This is ideal for dummy or test environments that should remain disconnected from the network.

- Override APIs: Use the `overrideGate` and `overrideConfig` APIs on the global Statsig interface. These allow you to set overrides for gates or configs either for specific users or, by omitting the user ID, for all users.

We recommend enabling `localMode` and overriding gates, configs, or experiments with specific values to thoroughly test the various code flows you are developing.

For SDK-specific implementation details, refer to `StatsigOptions` in the respective SDK documentation.
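To make the override behavior concrete, here is a minimal in-memory stand-in. It is not the Statsig SDK: `LocalGateStore` and the gate names are hypothetical, and the precedence it uses (a user-specific override wins over an all-users override, which wins over the default) is an assumption for illustration.

```js
// Hypothetical stand-in for local mode plus gate overrides; not the Statsig SDK.
class LocalGateStore {
  constructor() {
    // gateName -> Map of (userID, or '' for all users) -> overridden value
    this.overrides = new Map();
  }

  // Omitting userID applies the override to all users.
  overrideGate(gateName, value, userID = '') {
    if (!this.overrides.has(gateName)) {
      this.overrides.set(gateName, new Map());
    }
    this.overrides.get(gateName).set(userID, value);
  }

  checkGate(user, gateName) {
    const byUser = this.overrides.get(gateName);
    if (byUser?.has(user.userID)) return byUser.get(user.userID); // user-specific override
    if (byUser?.has('')) return byUser.get(''); // all-users override
    return false; // no network in local mode, so return the default value
  }
}

const store = new LocalGateStore();
store.overrideGate('new_checkout', true, 'user-a'); // only for user-a
store.overrideGate('dark_mode', true); // for all users

console.log(store.checkGate({ userID: 'user-a' }, 'new_checkout')); // true
console.log(store.checkGate({ userID: 'user-b' }, 'new_checkout')); // false
console.log(store.checkGate({ userID: 'user-b' }, 'dark_mode')); // true
```

In a real test you would call the SDK's own override APIs instead; the stand-in only shows which value each check is expected to return.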

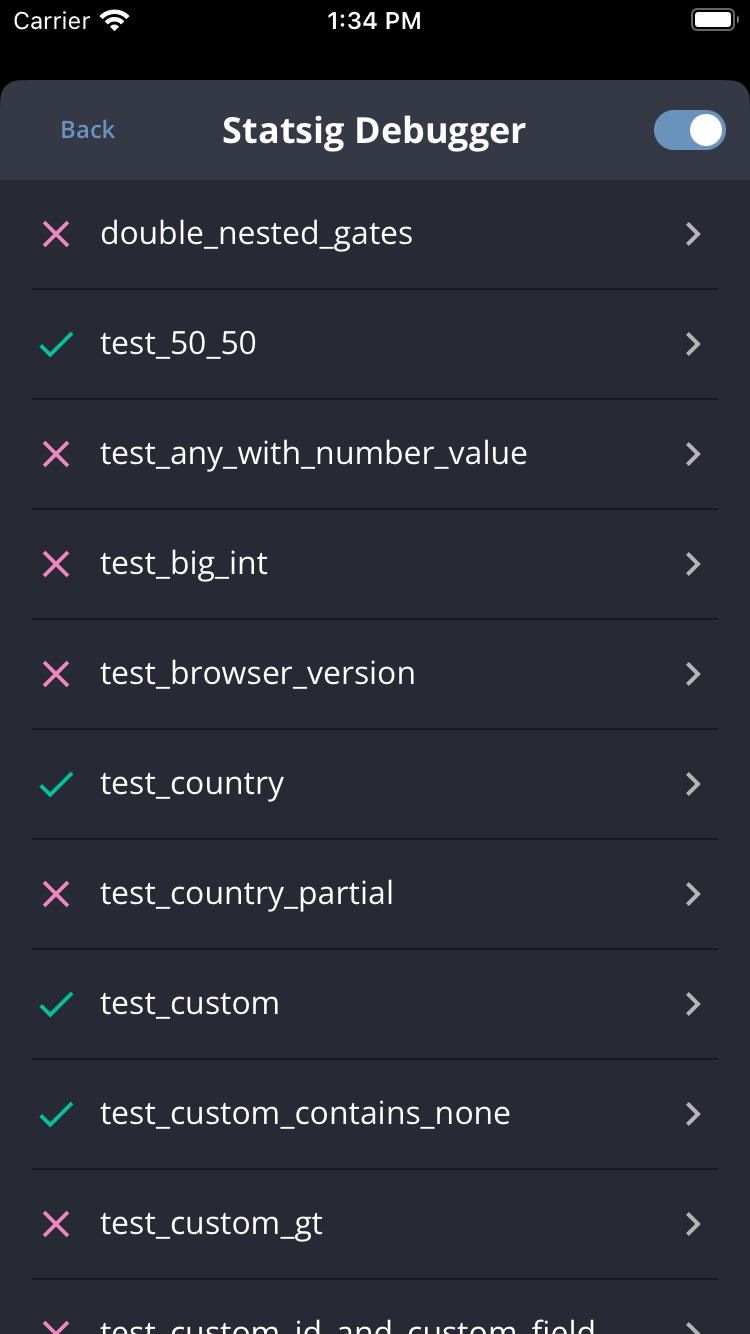

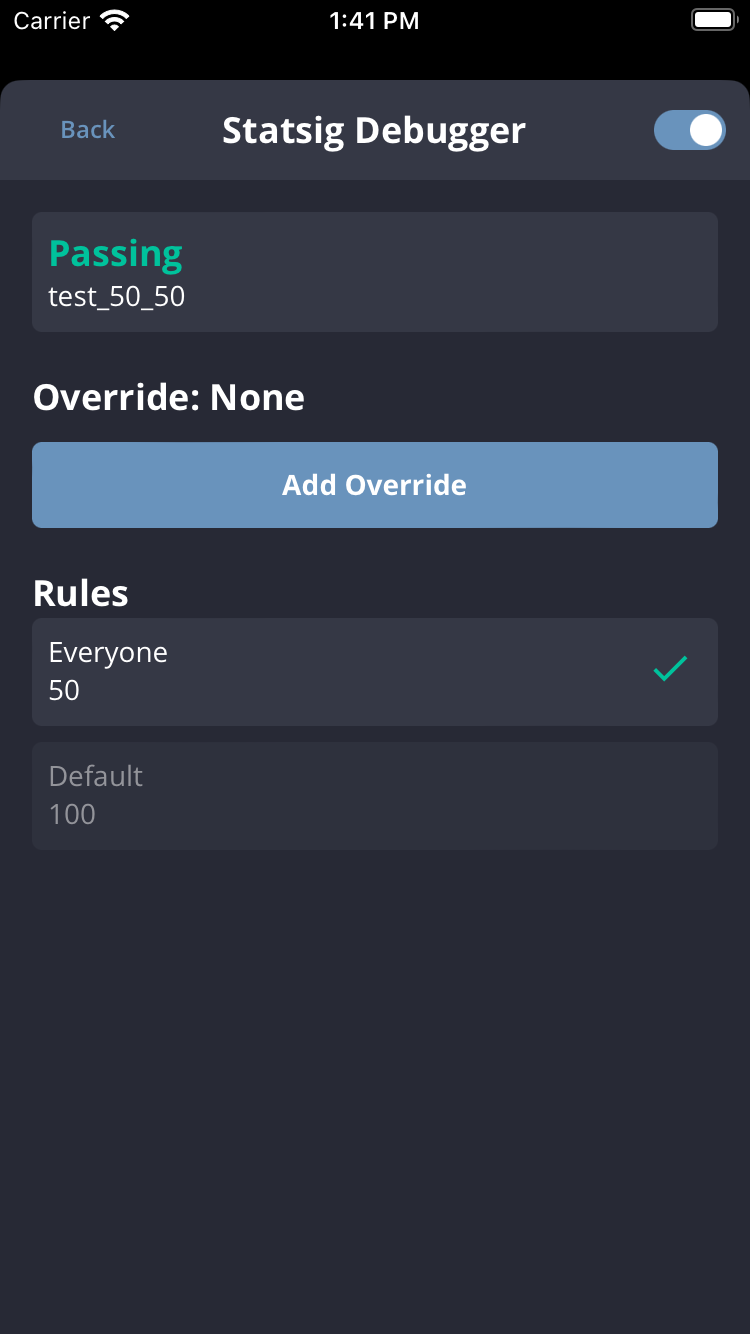

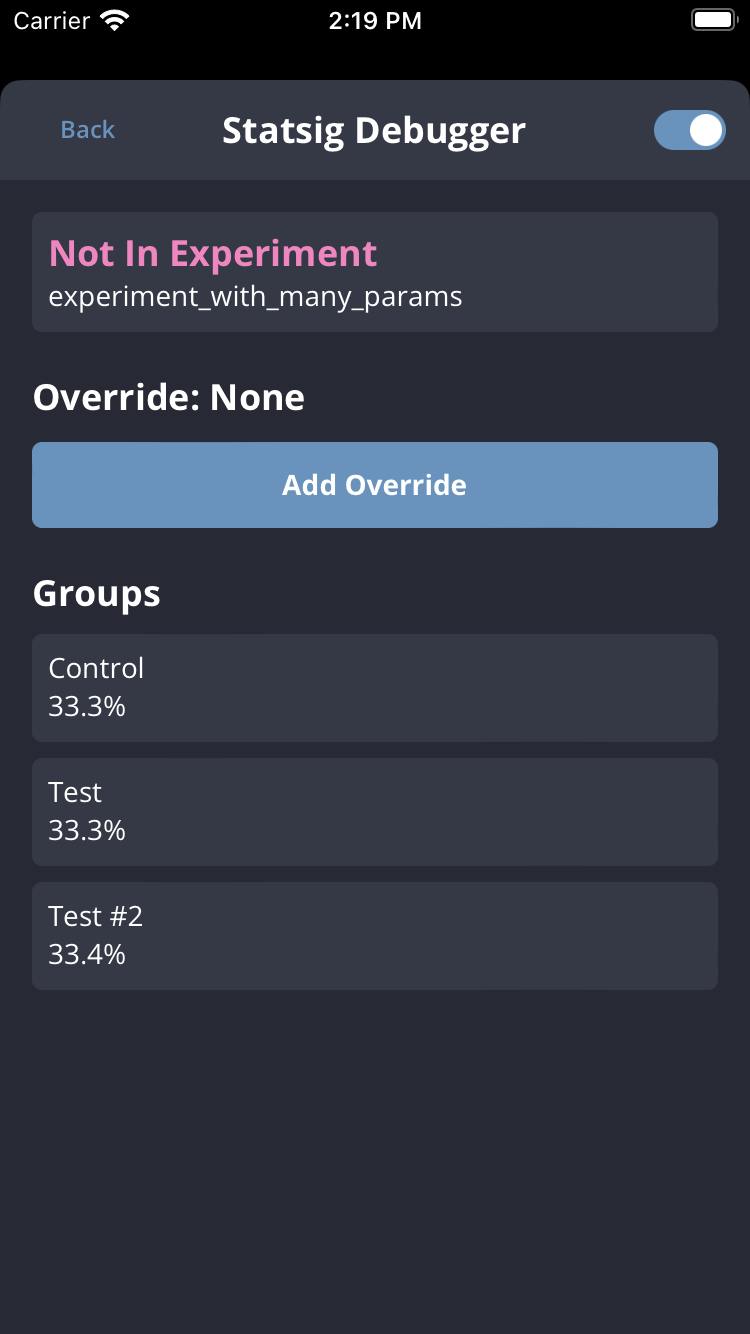

It can be useful to inspect the current values that a Client SDK is using internally. For this, we have a Client SDK Debugger.

With this tool, you can see the current User object the SDK is using as well as the gate/config values associated with it.

JavaScript/React: Via a [Chrome extension](https://github.com/statsig-io/statsig-sdk-debugger-chrome-extension).

NOTE: Accounts signing in to the Statsig console via Google SSO are not supported by this debugging tool.

iOS: Call `Statsig.openDebugView()`, available in [v1.26.0](https://github.com/statsig-io/ios-sdk/releases/tag/v1.26.0) and [above](https://github.com/statsig-io/ios-sdk/releases).

Android: Call `Statsig.openDebugView()`, available in [v4.29.0](https://github.com/statsig-io/android-sdk/releases/tag/4.29.0) and [above](https://github.com/statsig-io/android-sdk/releases/).

|Landing|Gates List|Gate Details|Experiment Details|
|--|--|--|--|
|||||

For more SDK-specific questions, check out the FAQs on the respective SDK pages. If you have more questions, feel free to reach out directly in our Slack Community.

The `InvalidBootstrap` reason can occur when you are [Bootstrapping](https://docs.statsig.com/search?q=Client+SDK+Bootstrapping) a Statsig Client SDK with your own prefetched or generated values.

It signals that the current user the Client SDK is operating against is not the same as the one used to generate the bootstrap values.

The following pseudocode highlights how this can occur:

```js
// Server side: generate bootstrap values for userA
const userA = { userID: 'user-a' };
const bootstrapValues = Statsig.getClientInitializeResponse(userA);

// Client side (a separate program from the server code above)
const fetchedValues = fetchStatsigValuesFromMyServers(); // <- Network request that executes the above logic
const userB = { userID: 'user-b' }; // <- This is not the same user
Statsig.initialize("client-key", userB, { initializeValues: fetchedValues });
```

The user objects must also match exactly. The SDK treats a user with slightly different values as a completely different user.

For example, the following two user objects would also trigger `InvalidBootstrap` even though they have the same `userID`.

```js
const userA = { userID: 'user-a' };
const userAExt = { userID: 'user-a', customIDs: { employeeID: 'employee-a' } };
```
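One way to catch this before initializing is to compare the two user objects yourself. The sketch below is a hypothetical pre-flight check, not an SDK API: `stableStringify` and `isSameUser` are illustrative names, and strict field-by-field equality is only an assumed approximation of how the SDK compares users.

```js
// Hypothetical pre-flight check, not a Statsig SDK API.
// Serializes a value with sorted object keys so key order does not matter.
function stableStringify(value) {
  if (Array.isArray(value)) {
    return `[${value.map(stableStringify).join(',')}]`;
  }
  if (value !== null && typeof value === 'object') {
    const entries = Object.keys(value)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${stableStringify(value[key])}`);
    return `{${entries.join(',')}}`;
  }
  return JSON.stringify(value);
}

// Two users match only if every field, including nested ones, is identical.
function isSameUser(a, b) {
  return stableStringify(a) === stableStringify(b);
}

const bootstrapUser = { userID: 'user-a' };
const clientUser = { userID: 'user-a', customIDs: { employeeID: 'employee-a' } };

if (!isSameUser(bootstrapUser, clientUser)) {
  console.warn('Bootstrap user differs from client user; expect InvalidBootstrap');
}
```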

SDKs get their environment configuration from the initialization options. If no environment is provided, the SDK defaults to the production environment.

If you are wondering why a certain user is not passing an environment-based condition, or which environment your SDK was initialized with, you can check the user properties in any of the log streams.

The `statsigEnvironment` property shows the environment the SDK is operating in.
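The defaulting rule can be sketched as follows. `resolveEnvironmentTier` is a hypothetical helper, not an SDK API, and the `{ environment: { tier } }` options shape is an assumption; confirm the exact field names in your SDK's `StatsigOptions` documentation.

```js
// Hypothetical helper, not a Statsig SDK API: mirrors the rule that a missing
// environment falls back to production.
function resolveEnvironmentTier(options = {}) {
  return options.environment?.tier ?? 'production';
}

// Assumed options shape; check your SDK's StatsigOptions for the real fields.
const stagingOptions = { environment: { tier: 'staging' } };

console.log(resolveEnvironmentTier(stagingOptions)); // staging
console.log(resolveEnvironmentTier({})); // production
```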

:::note

This is currently only applicable to Python SDK v0.45.0+

:::

The SDK batches and flushes events in the background to our server. When the volume of incoming events exceeds the SDK's flushing capacity, some events may be dropped after a certain number of retries. To reduce the chances of event loss, you can adjust several settings in the Statsig options:

- Event Queue Size: Determines how many events are sent in a single batch.

  - Increasing the event queue size allows more events to be flushed at once, but it will consume more memory. It's recommended not to exceed 1800 events per batch, as larger payloads may result in failed requests.

- Retry Queue Size: Specifies how many batches of events the SDK will hold and retry.

  - By default, the SDK keeps 10 batches in the retry queue. Increasing this limit allows more batches to be retried, but also increases memory usage.

Tuning these options can help manage event volume more effectively and minimize the risk of event drops.
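To see how the two settings interact, consider this simplified model. It is not the SDK's implementation: `EventBatcher` is a hypothetical class, and dropping the oldest batch once the retry queue is full is an assumed policy chosen for illustration.

```js
// Hypothetical model of batch-and-retry logging; not the Statsig SDK's code.
class EventBatcher {
  constructor({ eventQueueSize, retryQueueSize, send }) {
    this.eventQueueSize = eventQueueSize; // events per batch
    this.retryQueueSize = retryQueueSize; // failed batches held for retry
    this.send = send; // returns true when the batch was delivered
    this.events = [];
    this.retryQueue = [];
    this.droppedEvents = 0;
  }

  log(event) {
    this.events.push(event);
    if (this.events.length >= this.eventQueueSize) this.flush();
  }

  flush() {
    if (this.events.length === 0) return;
    const batch = this.events;
    this.events = [];
    if (this.send(batch)) return;
    if (this.retryQueue.length >= this.retryQueueSize) {
      // Retry queue is full: drop the oldest batch to make room (assumed policy).
      this.droppedEvents += this.retryQueue.shift().length;
    }
    this.retryQueue.push(batch);
  }
}

// Simulate an unreachable server: batches of 3 events, room for 2 failed batches.
const batcher = new EventBatcher({
  eventQueueSize: 3,
  retryQueueSize: 2,
  send: () => false,
});
for (let i = 0; i < 9; i++) batcher.log({ name: `event-${i}` });

console.log(batcher.retryQueue.length, batcher.droppedEvents); // 2 3
```

In this model, a larger retry queue keeps more failed batches alive during an outage, while a larger event queue means each dropped batch loses more events at once.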