
the issue about fit_generator #4

Comments


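Ah, the three outputs are there because I was previously experimenting with age estimation using a combination of losses (classification loss, regression loss, center loss). Also, this project of mine is based on … Judging from your error, it is rather strange that your l2_loss has shape (?,?,?,?); it looks as if all four dimensions turned into the batch size, so check that first. A data generator example would look something like this: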
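The following is not shamangary's original example but a minimal sketch of such a generator, assuming the two-input/two-output layout of the center-loss model in TTY.mnist.py: images plus integer class ids (shape `(batch, 1)`, for an `Embedding` with `Input(shape=(1,))`) go in, softmax predictions plus the per-sample l2_loss (shape `(batch, 1)`) come out. It also assumes the l2_loss output is compiled with a loss that ignores `y_true` (e.g. `lambda y_true, y_pred: y_pred`), so zeros can stand in for the random dummy targets. `center_loss_generator` and its arguments are hypothetical names.

```python
import numpy as np


def center_loss_generator(x, y_int, num_classes, batch_size, shuffle=True, seed=0):
    """Yield ([x_batch, y_value_batch], [y_onehot_batch, dummy_batch]) forever.

    Assumed (hypothetical) model layout, mirroring the two-input/two-output .fit call:
      inputs:  images, plus integer class ids of shape (batch, 1) for the Embedding
      outputs: softmax predictions, plus the per-sample l2_loss of shape (batch, 1)
    """
    y_int = np.asarray(y_int).reshape(-1)  # accept labels shaped (n,) or (n, 1)
    n = len(x)
    rng = np.random.default_rng(seed)
    while True:  # Keras expects the generator to loop indefinitely
        order = rng.permutation(n) if shuffle else np.arange(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            x_b = x[idx]
            y_value_b = y_int[idx].reshape(-1, 1)         # integer ids for the Embedding
            y_onehot_b = np.eye(num_classes)[y_int[idx]]  # targets for the softmax output
            dummy_b = np.zeros((len(idx), 1))             # ignored by a y_pred-only loss
            yield [x_b, y_value_b], [y_onehot_b, dummy_b]
```

Each yielded item is a tuple `(inputs, targets)` whose two lists line up with the two inputs and two outputs of the model, in the same order as the arrays passed to `.fit`.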


@shamangary Hi, I want to know: if I use `flow_from_directory` to get the data generator, how do I define the Embedding's input? That is, I don't get the labels (the target_input). Can you help me solve this? I am not sure about the Embedding's input. Can I give it a one-hot vector?
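A Keras `Embedding` layer takes integer indices, not one-hot vectors. With `class_mode='categorical'`, the iterator returned by `flow_from_directory` yields `(x_batch, one_hot_labels)`, so one option is to wrap it and recover the integer class ids with `argmax`. A minimal sketch, assuming the two-input/two-output center-loss layout (images and integer ids of shape `(batch, 1)` in; predictions and a `(batch, 1)` l2_loss out); `add_center_loss_inputs` is a hypothetical helper name:

```python
import numpy as np


def add_center_loss_inputs(batches):
    """Turn (x_batch, y_onehot_batch) items into the center-loss layout.

    Yields ([x_batch, y_value_batch], [y_onehot_batch, dummy_batch]), where
    y_value_batch holds the integer class ids, shape (batch, 1), for the Embedding.
    """
    for x_b, y_onehot_b in batches:
        y_value_b = np.argmax(y_onehot_b, axis=1).reshape(-1, 1)  # one-hot -> integer id
        dummy_b = np.zeros((len(x_b), 1))  # placeholder target for the l2_loss output
        yield [x_b, y_value_b], [y_onehot_b, dummy_b]
```

Usage would then be along the lines of `train_iter = ImageDataGenerator(rescale=1. / 255).flow_from_directory('data/train', class_mode='categorical', batch_size=32)` (the path is a placeholder) followed by `model.fit_generator(add_center_loss_inputs(train_iter), steps_per_epoch=len(train_iter), epochs=epochs)`; the directory iterator loops indefinitely and `len()` reports its number of batches per epoch.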


@tinazliu @shamangary @lily0101 `y_train` holds the labels. If I call `custom_vgg_model.fit(y={'fc2': dummy1, 'predictions': y_train})`, the model trains successfully. `dummy1` has the same shape as the `'fc2'` output (the feature).
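Keras also accepts targets as a dict keyed by output-layer name, which is what the `fit(y={...})` call above relies on, and a generator for `fit_generator` can yield the same structure. A minimal sketch, assuming the loss attached to `'fc2'` ignores `y_true` (so zeros are enough) and that `fc2` has 4096 units as in Keras' VGG16; `dict_target_batches` is a hypothetical name:

```python
import numpy as np


def dict_target_batches(x, y_train, feature_dim, batch_size):
    """Yield (x_batch, {'fc2': dummy, 'predictions': y_batch}) forever.

    The output names 'fc2' and 'predictions' come from the comment above;
    feature_dim must equal the number of units in the fc2 layer.
    """
    n = len(x)
    while True:
        for start in range(0, n, batch_size):
            x_b = x[start:start + batch_size]
            y_b = y_train[start:start + batch_size]
            # Same shape as the fc2 output; values are unused if its loss ignores y_true.
            dummy_b = np.zeros((len(x_b), feature_dim), dtype="float32")
            yield x_b, {"fc2": dummy_b, "predictions": y_b}
```

Keying targets by name avoids depending on the order of the model's outputs, which is easy to get wrong when there are several of them.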

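Hi, thank you for providing such great source code; I have learned a lot from it :)

I have a few questions about fit_generator below, and I hope you can help clear them up.

Question 1: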

Above is the fit_generator template you provided. What I am curious about is why `Y=[y_train_a_class, y_train_a, random_y_train_a]` has three outputs, and what `y_train_a` means. I ask because TTY.mnist.py trains with `.fit`: `model_centerloss.fit([x_train, y_train_value], [y_train, random_y_train], batch_size=batch_size, epochs=epochs, verbose=1, validation_data=([x_test, y_test_value], [y_test, random_y_test]), callbacks=[histories])`. As I understand it, this is a two-input, two-output format, so I assumed `.fit_generator` should also be two-input, two-output.

Question 2:

Following the two-input, two-output idea, I built my own fit_generator and ran into a very strange problem:

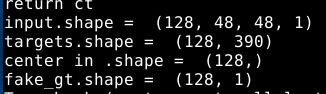

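Below are the `.shape` values of my inputs and outputs; the sizes look correct.

So I'm a bit lost. Could it be that my generator's format doesn't match l2_loss? I also noticed that every dimension of l2_loss shows up as `?`, which seems odd QQ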
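A `(?,?,?,?)` shape suggests the tensor entering the l2_loss step still has four axes. In a typical center-loss setup the loss output is one scalar per sample, `(batch, 1)`: the feature is a flattened `(batch, d)` vector, `Embedding` returns `(batch, 1, d)` for `(batch, 1)` integer ids, and the squared distance is summed over the feature axis. The NumPy sketch below stands in for the Keras backend ops (assumed equivalent: `K.sum(K.square(f - c[:, 0]), axis=1, keepdims=True)`); the function name is hypothetical. If the features are still a 4-D convolutional map, they would need a `Flatten` (or global pooling) before this step.

```python
import numpy as np


def center_loss_per_sample(features, centers_lookup):
    """Squared L2 distance between each feature vector and its class center.

    features:       (batch, d)     flattened feature vectors
    centers_lookup: (batch, 1, d)  what Embedding(num_classes, d) returns for (batch, 1) ids
    returns:        (batch, 1)     one scalar per sample, matching (batch, 1) dummy targets
    """
    diff = features - centers_lookup[:, 0]  # drop the length-1 axis -> (batch, d)
    return np.sum(np.square(diff), axis=1, keepdims=True)
```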

Addendum:

Here is how I implemented the generator; it returns the following:

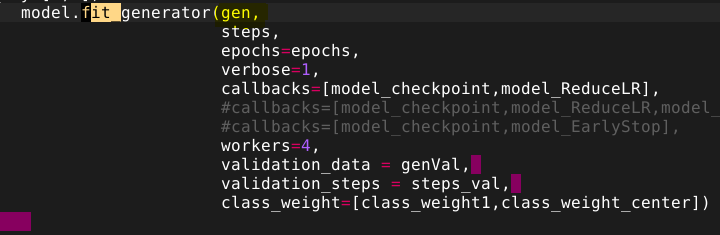
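Since the generator's return value is only visible in the screenshot, one quick check is to pull a single item with `next()` and inspect its structure. The Keras documentation describes each generator item as a tuple `(inputs, targets)` or `(inputs, targets, sample_weights)`; a flat sequence such as `[x, y_value, y, dummy]` is not interpreted as two inputs and two outputs. A minimal sketch with a hypothetical `check_batch` helper that is deliberately strict (it requires an actual tuple):

```python
import numpy as np


def _as_list(part):
    # Inputs/targets may be a single array, a list/tuple of arrays, or a dict of arrays.
    if isinstance(part, dict):
        return list(part.values())
    if isinstance(part, (list, tuple)):
        return list(part)
    return [part]


def check_batch(batch):
    """Return (input_shapes, target_shapes) for one generator item, or raise ValueError."""
    if not isinstance(batch, tuple) or len(batch) not in (2, 3):
        length = len(batch) if hasattr(batch, "__len__") else "?"
        raise ValueError("expected a tuple (inputs, targets[, sample_weights]), "
                         "got %s of length %s" % (type(batch).__name__, length))
    inputs, targets = _as_list(batch[0]), _as_list(batch[1])
    # Every array must share the same leading (batch) dimension.
    batch_sizes = {np.shape(a)[0] for a in inputs + targets}
    if len(batch_sizes) != 1:
        raise ValueError("arrays disagree on batch size: %s" % sorted(batch_sizes))
    return [np.shape(a) for a in inputs], [np.shape(a) for a in targets]
```

Usage: `print(check_batch(next(my_generator)))`, where `my_generator` is a placeholder for the generator under test.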

And this is how I call fit_generator:
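When moving from `.fit` to `.fit_generator`, `batch_size` is no longer passed to Keras: the generator decides the batch size, and `steps_per_epoch` tells Keras how many batches make up one epoch (likewise `validation_steps` for a validation generator). A small helper for that arithmetic, with a hypothetical name:

```python
def steps_for(num_samples, batch_size):
    """Batches needed to cover every sample once; the last batch may be partial."""
    # Integer ceiling division, e.g. 60000 samples at 128 per batch -> 469 steps.
    return (num_samples + batch_size - 1) // batch_size
```

A call mirroring the `.fit` arguments from TTY.mnist.py might then look like `model_centerloss.fit_generator(train_gen, steps_per_epoch=steps_for(len(x_train), batch_size), epochs=epochs, verbose=1, validation_data=val_gen, validation_steps=steps_for(len(x_test), batch_size), callbacks=[histories])`, where `train_gen` and `val_gen` are placeholder generators.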

Sorry to bother you, and thank you so much for providing such a great program. It gave me an excellent reference while implementing center loss. Thanks!

Tina
