Please go to https://github.com/ocp-power-demos/sock-shop-demo for the latest code.

This application uses a reference microservices demo (Sock Shop) to show a real-world multi-architecture compute deployment.

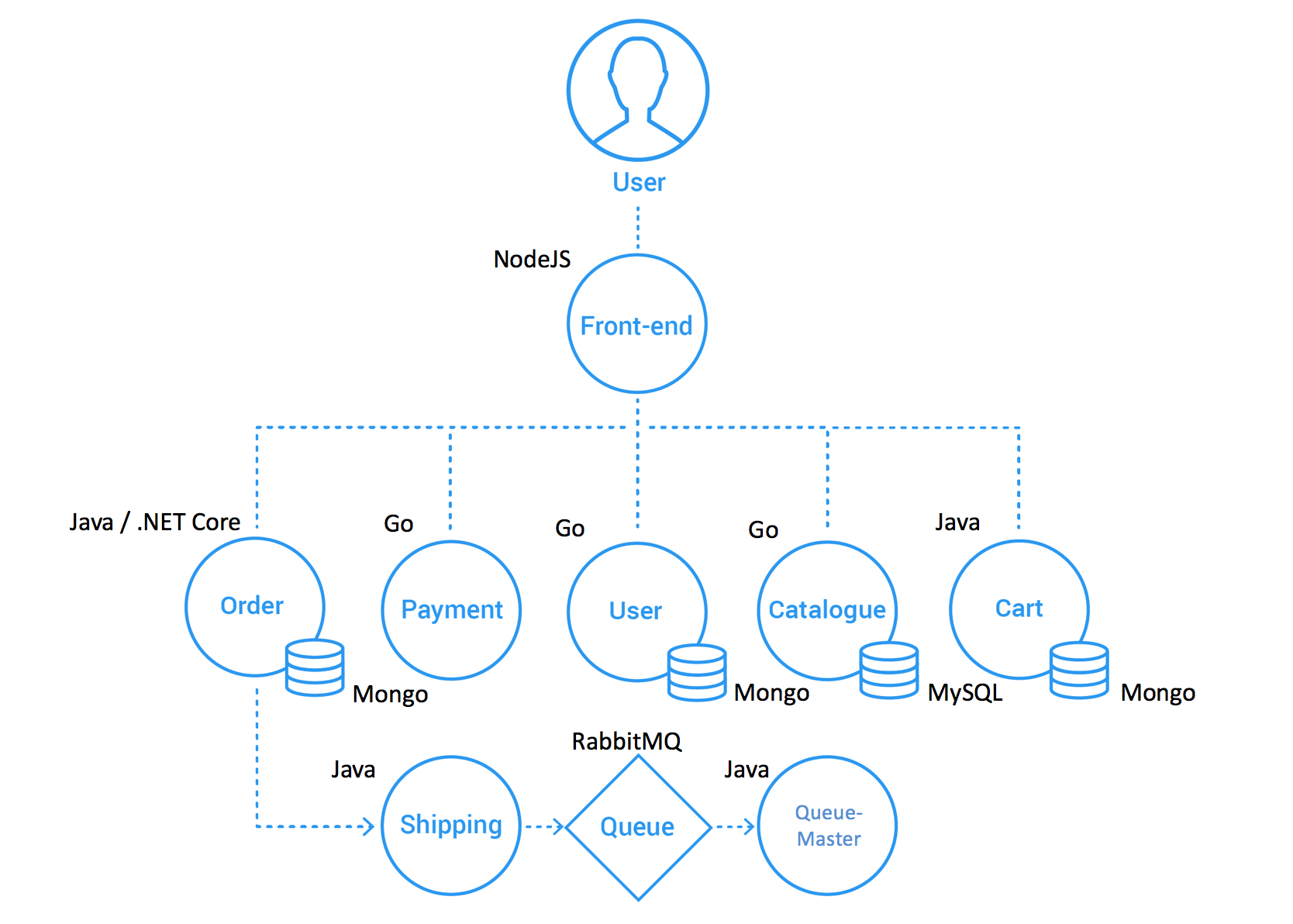

- front-end
- orders
- payment
- user (user-db is Intel only)
- catalogue (catalogue-db is Intel only)
- cart
- shipping
- queue-master
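To see the multi-architecture placement on a running cluster, you can list each pod with its node and that node's CPU architecture. For example, user-db and catalogue-db should report `amd64`. This is a minimal sketch. It assumes the `sock-shop` namespace (taken from the route output further down) and the standard `kubernetes.io/arch` node label. `show_pod_arch` is a hypothetical helper name and is not part of the repository.

```shell
# show_pod_arch: hypothetical helper that prints "<pod> <node> <arch>" for each
# pod in a namespace (default: sock-shop), using the kubernetes.io/arch node label.
show_pod_arch() {
  ns="${1:-sock-shop}"
  # One line per pod: its name and the node it is scheduled on.
  oc get pods -n "$ns" --no-headers \
    -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName |
    while read -r pod node; do
      if [ "$node" = "<none>" ]; then
        # custom-columns prints <none> for pods that are not scheduled yet.
        arch="unscheduled"
      else
        # </dev/null keeps oc from consuming the pod list on stdin.
        arch=$(oc get node "$node" -o jsonpath='{.metadata.labels.kubernetes\.io/arch}' </dev/null)
      fi
      printf '%s %s %s\n' "$pod" "$node" "$arch"
    done
}
```

Run `show_pod_arch` with no arguments for the default namespace, or pass a namespace as the first argument.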

The catalogue and user services have a built-in wait before reporting READY, because their dependent databases must start up first.
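Because catalogue and user wait for their databases before they report READY, a deploy script may need to block until the whole shop is up. This minimal sketch assumes two things: the manifests create Deployment objects in the `sock-shop` namespace, and a 10-minute timeout is long enough. Both are assumptions. `wait_for_sock_shop` is a hypothetical name.

```shell
# wait_for_sock_shop: hypothetical helper that blocks until every Deployment in
# the namespace reports the Available condition, or the timeout expires.
# Returns oc's exit status, which is non-zero on timeout.
wait_for_sock_shop() {
  ns="${1:-sock-shop}"
  timeout="${2:-600s}"   # assumed value; raise it for slower clusters
  oc wait --for=condition=Available deployment --all -n "$ns" --timeout="$timeout"
}
```

For example, `wait_for_sock_shop && echo "sock shop is ready"` prints the message only after all deployments are available.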

fyre

To deploy to fyre, use the following:

- Update `manifests/overlays/fyre/env.secret` with the username and password:

  ```
  username=
  password=
  ```

- Build and deploy Sock Shop on Fyre:

  ```
  ❯ kustomize build manifests/overlays/fyre | oc apply -f -
  ```

- Destroy Sock Shop on Fyre:

  ```
  ❯ kustomize build manifests/overlays/fyre | oc delete -f -
  ```
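You can also generate the `env.secret` file from environment variables instead of editing it by hand. This minimal sketch assumes the file contains exactly the two `key=value` lines shown above. The helper `write_env_secret` and the variables `SOCK_SHOP_USERNAME` and `SOCK_SHOP_PASSWORD` are hypothetical names. The same function also works for `manifests/base/env.secret`, which the multiarch compute deployment uses.

```shell
# write_env_secret: hypothetical helper that writes the username/password lines
# of a kustomize env.secret file. Fails if either variable is unset or empty.
write_env_secret() {
  file="$1"
  if [ -z "${SOCK_SHOP_USERNAME:-}" ] || [ -z "${SOCK_SHOP_PASSWORD:-}" ]; then
    echo "SOCK_SHOP_USERNAME and SOCK_SHOP_PASSWORD must be set" >&2
    return 1
  fi
  # umask 077 (scoped to the subshell) makes a newly created file owner-only,
  # since it holds a credential; an existing file keeps its permissions.
  (umask 077 && printf 'username=%s\npassword=%s\n' \
    "$SOCK_SHOP_USERNAME" "$SOCK_SHOP_PASSWORD" > "$file")
}
```

For example: `SOCK_SHOP_USERNAME=alice SOCK_SHOP_PASSWORD=s3cret write_env_secret manifests/overlays/fyre/env.secret`.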

multiarch compute

To deploy to a multiarch compute cluster, use the following:

- Update `manifests/base/env.secret` with the username and password:

  ```
  username=
  password=
  ```

- Build and deploy Sock Shop on the multiarch compute cluster:

  ```
  ❯ kustomize build manifests/overlays/multi | oc apply -f -
  ```

- Get the route host:

  ```
  ❯ oc get routes -n sock-shop
  NAME        HOST/PORT                 PATH   SERVICES    PORT   TERMINATION   WILDCARD
  sock-shop   sock-shop.apps.demo.xyz          front-end   8079   edge/None     None
  ```

- Navigate to https://sock-shop.apps.demo.xyz

- Pick a test user from User Accounts.

- When you are done, destroy Sock Shop on the multiarch compute cluster:

  ```
  ❯ kustomize build manifests/overlays/multi | oc delete -f -
  ```
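You can wrap the build, destroy and route lookup in a small script. This is a minimal sketch. `sock_shop` and `sock_shop_url` are hypothetical helper names. The overlay names `fyre` and `multi` come from the kustomize commands above. The route name and namespace (both `sock-shop`) come from the `oc get routes` output.

```shell
# sock_shop: hypothetical wrapper around "kustomize build ... | oc apply|delete -f -".
# Usage: sock_shop <fyre|multi> <apply|delete>
sock_shop() {
  overlay="$1"
  action="$2"
  case "$overlay" in
    fyre|multi) ;;
    *) echo "unknown overlay: $overlay" >&2; return 1 ;;
  esac
  case "$action" in
    apply|delete) ;;
    *) echo "unknown action: $action" >&2; return 1 ;;
  esac
  # The pipeline's exit status is that of oc.
  kustomize build "manifests/overlays/$overlay" | oc "$action" -f -
}

# sock_shop_url: print the HTTPS URL of the sock-shop route.
sock_shop_url() {
  host=$(oc get route sock-shop -n sock-shop -o jsonpath='{.spec.host}') || return 1
  printf 'https://%s\n' "$host"
}
```

For example, `sock_shop multi apply` deploys the shop, `sock_shop_url` prints the address to open, and `sock_shop multi delete` tears it down.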

Have fun using it.

The applications are compiled into images hosted at quay.io/repository/cbade_cs/openshift-demo. Each application has a manifest-listed (multi-architecture) image.

To build the images:

amd64

```
export ARCH=amd64
export REGISTRY=quay.io/repository/cbade_cs/openshift-demo
make cross-build-amd64
```

All other arches (ppc64le shown)

```
export ARCH=ppc64le
export REGISTRY=quay.io/repository/cbade_cs/openshift-demo
make cross-build-amd64
```
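To build for several architectures in one pass, you can loop over the steps above. This minimal sketch makes two assumptions. First, the Makefile reads `ARCH` and `REGISTRY` from its environment. Second, `cross-build-amd64` is the right target for every architecture, as the commands above show. Check both against the repository's Makefile. `build_images` is a hypothetical name.

```shell
# build_images: hypothetical loop that runs the cross-build once per architecture.
# Usage: build_images amd64 ppc64le
build_images() {
  # Default to the registry used in the manual steps unless REGISTRY is set.
  registry="${REGISTRY:-quay.io/repository/cbade_cs/openshift-demo}"
  for arch in "$@"; do
    # Pass ARCH and REGISTRY in make's environment, as the manual steps do.
    ARCH="$arch" REGISTRY="$registry" make cross-build-amd64 || return 1
  done
}
```

The loop stops at the first failed build, so a broken architecture does not get skipped silently.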

To push the manifest-listed images:

```
export REGISTRY=quay.io/repository/cbade_cs/openshift-demo
export ARM_REGISTRY=quay.io/repository/pbastide_rh/openshift-demo
export APP=front-end
make push-ml
```
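Each application has its own manifest-listed image, so you can repeat the push step for every service. This minimal sketch makes two assumptions. First, each service name in the list at the top of this README is a valid `APP` value for `make push-ml`. Second, user-db and catalogue-db are not pushed this way, since they are Intel only. `push_all` is a hypothetical name. `REGISTRY` and `ARM_REGISTRY` must already be set, as in the steps above.

```shell
# push_all: hypothetical loop that runs "make push-ml" once per application.
# Usage: push_all [app ...]   (defaults to every service except the databases)
push_all() {
  if [ -z "${REGISTRY:-}" ] || [ -z "${ARM_REGISTRY:-}" ]; then
    echo "REGISTRY and ARM_REGISTRY must be set" >&2
    return 1
  fi
  if [ "$#" -eq 0 ]; then
    set -- front-end orders payment user catalogue cart shipping queue-master
  fi
  for app in "$@"; do
    # Hand APP and both registries to make through its environment.
    APP="$app" REGISTRY="$REGISTRY" ARM_REGISTRY="$ARM_REGISTRY" make push-ml || return 1
  done
}
```

For example, `push_all cart user` pushes just those two images, and `push_all` pushes all eight services.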

The architecture is:

The application looks like:

Please log in via the HTTPS route to sock-shop and register a new user.

Thank you to the WeaveWorks team and supporters.