
Runs out of memory while doing a local scan of a big library on Raspberry Pi Zero W #22

Comments

Effective configuration:

Have you tried mopidy-local-sqlite? That is the preferred local library to use.

Thanks for the tip! I installed mopidy-local-sqlite and set it as the library of choice in the config.

26k files should be fine; this has been used with larger collections. Can you provide some data on the process size? How much RAM have you allocated to system memory? Is it crashing when it hits a limit?


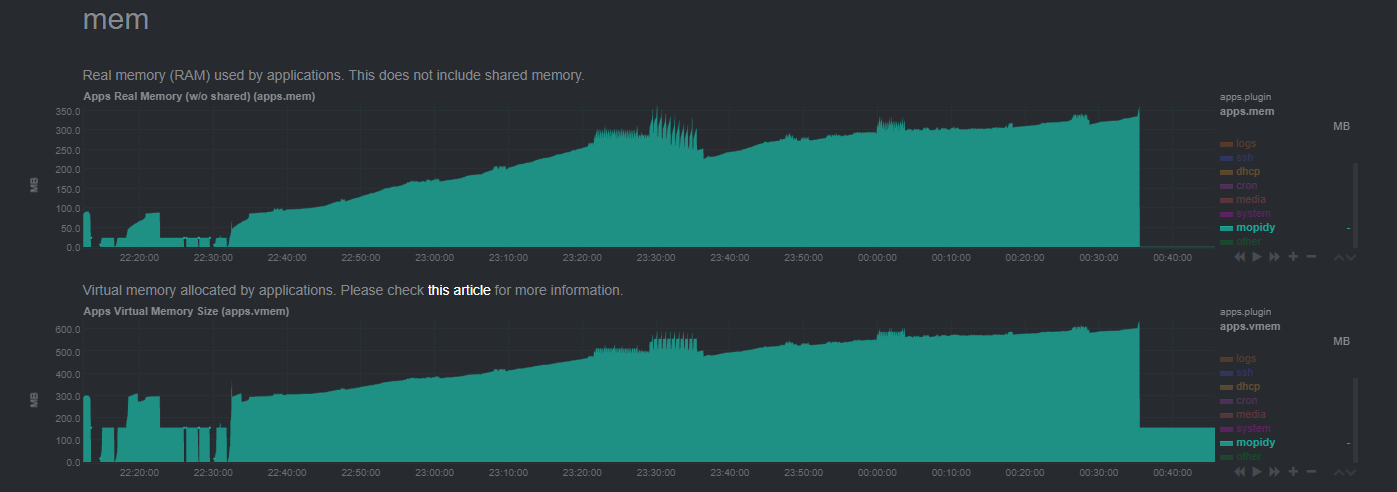

I've collected some data on process size and memory dynamics. It looks like it does run out of free + cached + swap memory. Judging by the log file, the process itself does not crash. Log file: Here is a netdata snapshot; you can import it into any netdata demo site and check the metrics yourself. Most of the files in my library are lossless, if it matters. I used the Stretch Lite image for the Mopidy installation. It is a dedicated system for Mopidy, so only the services that come by default with Stretch Lite are running. My config.txt is almost vanilla, except for disabled onboard audio and the enabled Allo Mini Boss GPIO extension board. I could free up some memory by allocating less GPU memory next time.
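To reproduce the "free + cached + swap" measurement without netdata, a minimal sketch like the following reads the Linux `/proc/meminfo` file and sums its `MemFree`, `Cached` and `SwapFree` fields (all reported in kB). It only illustrates the metric mentioned above; the function name `headroom_kb` is hypothetical, and the result is a rough headroom figure, not necessarily what netdata computes.

```python
import os


def headroom_kb(meminfo_text: str) -> int:
    """Sum MemFree + Cached + SwapFree (in kB) from /proc/meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        values = rest.split()
        if values:
            # First token is the number; the optional second token is the unit ("kB").
            fields[name.strip()] = int(values[0])
    return fields.get("MemFree", 0) + fields.get("Cached", 0) + fields.get("SwapFree", 0)


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(f"free + cached + swap: {headroom_kb(f.read())} kB")
```

Running it periodically (for example under `watch`) while a scan is active shows whether the headroom keeps shrinking until the scan ends.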

I can confirm I have seen this problem with Mopidy and with other MPD servers. The scan function appears to keep an in-memory cache and fills memory to the point where the hardware becomes unresponsive. I had this on a server with 8 GB of RAM as well as on RPis. I suspect there needs to be a way to flush to disk more frequently.
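The "flush to disk more frequently" idea can be sketched generically: consume scan results as a stream and commit them in fixed-size batches, so that only one batch is buffered in memory at a time. This is a hypothetical illustration using Python's built-in `sqlite3` module with a made-up `track` table and a made-up `store_in_batches` helper; it is not how Mopidy or mopidy-local-sqlite actually stores its library, and, as another commenter in this thread reports, a flush threshold alone may not bound memory if the scanner keeps other state around.

```python
import sqlite3
from typing import Iterable, List, Tuple

Track = Tuple[str, str]  # (uri, title): hypothetical minimal record


def store_in_batches(conn: sqlite3.Connection, tracks: Iterable[Track], flush_every: int = 50) -> int:
    """Write tracks to SQLite, committing every `flush_every` rows.

    `tracks` may be a generator, so at most one batch is held in memory.
    Returns the number of commits performed.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS track (uri TEXT PRIMARY KEY, title TEXT)")
    batch: List[Track] = []
    commits = 0

    def flush() -> None:
        nonlocal commits
        conn.executemany("INSERT OR REPLACE INTO track (uri, title) VALUES (?, ?)", batch)
        conn.commit()
        batch.clear()  # drop the buffered rows once they are on disk
        commits += 1

    for track in tracks:
        batch.append(track)
        if len(batch) >= flush_every:
            flush()
    if batch:  # write the final, partially filled batch
        flush()
    return commits
```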

I see the same behavior on a server using mopidy-local-sqlite with the option "scan_flush_threshold = 50". It doesn't seem to free memory until the scan finishes. I had to hard-reset the machine several times before the initial scan completed. This definitely needs to be fixed.


I think I'm experiencing similar behavior with my instance of Mopidy + Mopidy-Local. I've had to set up Mopidy twice, once on Python 2 and now on Python 3. I'm running Mopidy on a Raspberry Pi 3+ with 1 GB of RAM and Raspbian Stretch.

Experiencing the same with Mopidy 3.0.1 on an RPi 3 running Raspbian Buster.

It's not possible to scan more than 4000 songs without using up all of the RPi 3's RAM and having to kill Mopidy.

When using 'limit' (3000), the scan works, but that means restarting the scan over and over until all files are scanned.

Using a limit of 5000 works, but with 150k files it's not a glorious solution.

Hi, I'm facing the same problem here. Any ideas on how to tackle this would be helpful. @Uatschitchun: What do you mean by "limit"? I could find no such parameter in the documentation.

OK, just found it myself: it is a parameter when calling mopidyctl local scan:

It seemed to me that memory filled up very quickly when I had scan timeouts, and I had lots of them. I do not understand how timeouts can occur on a local file system, but then I do not know how the Mopidy scanner works. Anyway, I set the timeout to 10 seconds:

And ran the scan in a loop of 1000 tracks each.

That finally helped me complete the scan.
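The script itself is not preserved in this thread, but the approach described above can be sketched in Python. The sketch assumes that each `mopidyctl local scan --limit N` run scans at most N new or changed files (so repeated runs work through the collection), that the 10-second timeout is set separately in the config (for example `scan_timeout = 10000`, in milliseconds, under `[local]`), and that the script is run with enough privileges to call `mopidyctl` (typically via `sudo`). The `scan_in_batches` name and the fixed pass count derived from the total file count are illustrative choices, not the original script.

```python
import math
import subprocess
from typing import List


def scan_in_batches(total_files: int, limit: int = 1000, dry_run: bool = False) -> List[List[str]]:
    """Run `mopidyctl local scan --limit <limit>` enough times to cover total_files.

    Each run is a separate process, so whatever memory the scanner accumulated
    is returned to the OS when that run exits.
    """
    passes = math.ceil(total_files / limit)
    commands = []
    for n in range(1, passes + 1):
        cmd = ["mopidyctl", "local", "scan", "--limit", str(limit)]
        commands.append(cmd)
        print(f"pass {n}/{passes}: {' '.join(cmd)}", flush=True)
        if not dry_run:
            # check=True stops the loop if a run fails (e.g. it was killed by the OOM killer).
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Hypothetical numbers; set dry_run=False to actually run the scans.
    scan_in_batches(total_files=26000, limit=1000, dry_run=True)
```

If a run still exhausts memory, a lower limit trades speed for headroom.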

Sadly, the script didn't work for me. I only have 11,500 files and it bombs out every 1000 or so. I have a timeout of 10000 and a limit of 1000 as in the script, but no joy. Pi 3B+

Following up on this topic: I had to refresh my collection today and tried the limit trick proposed in this thread. Anyway, I just wanted to keep the thread alive, since the freeze takes down my whole server if I'm not careful and is quite a showstopper.

Hi, yet another "me too". Running on a Pi Zero, a scan would hang the Pi after an hour or so. The script above seems to be working great: I started low with a limit of 200, but after some monitoring took it up to 500. I could probably go higher, but I just wanted something reliable. It's taken a while, 16 hours or so, but I've scanned nearly 28K files... Big thanks to @holtermp!
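Using the approximate figures from this comment (nearly 28,000 files in about 16 hours, 500 files per run), a quick back-of-the-envelope calculation gives the effective scan rate and the number of runs needed. Both inputs are rough ("nearly", "or so"), so the results are estimates only; `scan_estimate` is a hypothetical helper.

```python
import math


def scan_estimate(files: int, hours: float, limit: int) -> tuple:
    """Return (files per hour, files per second, runs needed at `limit` files per run)."""
    per_hour = files / hours
    per_second = per_hour / 3600
    runs = math.ceil(files / limit)
    return per_hour, per_second, runs


if __name__ == "__main__":
    per_hour, per_second, runs = scan_estimate(files=28_000, hours=16, limit=500)
    print(f"{per_hour:.0f} files/h, {per_second:.2f} files/s, {runs} runs")
    # prints "1750 files/h, 0.49 files/s, 56 runs"
```

At that rate each 500-file run averages roughly 17 minutes (16 h / 56 runs).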

Thanks, everyone who commented. I am having the same issue. I modified @holtermp's script a bit:

Last output:

By the way, the log file didn't catch that for some reason. The last line in the log file is the last progress report on scanning.

I'm running:
