diff --git a/00-course-setup/README.md b/00-course-setup/README.md

index a1f9952d8..dc0d1a9ee 100644

--- a/00-course-setup/README.md

+++ b/00-course-setup/README.md

@@ -26,7 +26,7 @@ Keeping your API keys safe and secure is important when building any type of app

## How to Run locally on your computer

-To run the code locally on your computer, you would need to have some version of [Python installed](https://www.python.org/downloads/).

+To run the code locally on your computer, you would need to have some version of [Python installed](https://www.python.org/downloads/?WT.mc_id=academic-105485-koreyst).

To then use the repository, you need to clone it:

@@ -39,7 +39,7 @@ Now you have everything checked out and can start learning and work with the cod

### Installing miniconda (optional step)

-There are advantages to installing **[miniconda](https://conda.io/en/latest/miniconda.html)** - it is rather lightweight installation that supports `conda` package manager for different Python **virtual environments**. `conda` makes it easy to install and switch between different Python versions and packages, and also to install packages that are not available via `pip`.

+There are advantages to installing **[miniconda](https://conda.io/en/latest/miniconda.html?WT.mc_id=academic-105485-koreyst)** - it is a rather lightweight installation that supports the `conda` package manager for different Python **virtual environments**. `conda` makes it easy to install and switch between different Python versions and packages, and also to install packages that are not available via `pip`.

After you install miniconda, you need to clone the repository (if you haven't already done so) and create a virtual environment to be used for this course:

@@ -65,7 +65,7 @@ conda env create --name ai4beg --file .devcontainer/environment.yml

conda activate ai4beg

```

-Refer to this link on creating a [conda environments](https://docs.conda.io/projects/conda/en/latest/user-guide/tasks/manage-environments.html) if you run into trouble.

+Refer to this guide on creating [conda environments](https://docs.conda.io/projects/conda/en/latest/user-guide/tasks/manage-environments.html?WT.mc_id=academic-105485-koreyst) if you run into trouble.

### Using Visual Studio Code with Python Extension

@@ -103,7 +103,7 @@ One of the best ways to keep your API keys secure when using GitHub Codespaces i

The course has 6 concept lessons and 6 coding lessons.

-For the coding lessons, we are using the Azure OpenAI Service. You will need access to the Azure OpenAI service and an API key to run this code. You can apply to get access by [completing this application](https://customervoice.microsoft.com/Pages/ResponsePage.aspx?id=v4j5cvGGr0GRqy180BHbR7en2Ais5pxKtso_Pz4b1_xUOFA5Qk1UWDRBMjg0WFhPMkIzTzhKQ1dWNyQlQCN0PWcu&culture=en-us&country=us).

+For the coding lessons, we are using the Azure OpenAI Service. You will need access to the Azure OpenAI service and an API key to run this code. You can apply to get access by [completing this application](https://customervoice.microsoft.com/Pages/ResponsePage.aspx?id=v4j5cvGGr0GRqy180BHbR7en2Ais5pxKtso_Pz4b1_xUOFA5Qk1UWDRBMjg0WFhPMkIzTzhKQ1dWNyQlQCN0PWcu&culture=en-us&country=us&WT.mc_id=academic-105485-koreyst).

While you wait for your application to be processed, each coding lesson also includes a `README.md` file where you can view the code and outputs.

@@ -113,9 +113,9 @@ If this is your first time working with the Azure OpenAI service, please follow

## Meet Other Learners

-We have created channels in our official [AI Community Discord server](https://aka.ms/genai-discord) for meeting other learners. This is a great way to network with other like-minded entrepreneurs, builders, students, and anyone looking to level up in Generative AI.

+We have created channels in our official [AI Community Discord server](https://aka.ms/genai-discord?WT.mc_id=academic-105485-koreyst) for meeting other learners. This is a great way to network with other like-minded entrepreneurs, builders, students, and anyone looking to level up in Generative AI.

-[](https://aka.ms/genai-discord)

+[](https://aka.ms/genai-discord?WT.mc_id=academic-105485-koreyst)

The project team will also be on this Discord server to help any learners.

diff --git a/01-introduction-to-genai/README.md b/01-introduction-to-genai/README.md

index 7439d3c26..6ad7786bc 100644

--- a/01-introduction-to-genai/README.md

+++ b/01-introduction-to-genai/README.md

@@ -1,6 +1,6 @@

# Introduction to Generative AI and Large Language Models

-[](https://youtu.be/vf_mZrn8ibc)

+[](https://youtu.be/vf_mZrn8ibc?WT.mc_id=academic-105485-koreyst)

*(Click the image above to view video of this lesson)*

@@ -110,7 +110,7 @@ Also, the output of a generative AI model is not perfect and sometimes the creat

## Assignment

-Your assignment is to read up more on [generative AI](https://en.wikipedia.org/wiki/Generative_artificial_intelligence) and try to identify an area where you would add generative AI today that doesn't have it. How would the impact be different from doing it the "old way", can you do something you couldn't before, or are you faster? Write a 300 word summary on what your dream AI startup would look like and include headers like "Problem", "How I would use AI", "Impact" and optionally a business plan.

+Your assignment is to read up more on [generative AI](https://en.wikipedia.org/wiki/Generative_artificial_intelligence?WT.mc_id=academic-105485-koreyst) and try to identify an area where you would add generative AI today that doesn't have it. How would the impact be different from doing it the "old way", can you do something you couldn't before, or are you faster? Write a 300 word summary on what your dream AI startup would look like and include headers like "Problem", "How I would use AI", "Impact" and optionally a business plan.

If you did this task, you might even be ready to apply to Microsoft's incubator, [Microsoft for Startups Founders Hub](https://www.microsoft.com/startups?WT.mc_id=academic-105485-koreyst) we offer credits for both Azure, OpenAI, mentoring and much more, check it out!

@@ -126,6 +126,6 @@ A: 3, an LLM is non-deterministic, the response vary, however, you can control i

## Great Work! Continue the Journey

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 2 where we will look at how to [explore and compare different LLM types](../02-exploring-and-comparing-different-llms/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/02-exploring-and-comparing-different-llms/README.md b/02-exploring-and-comparing-different-llms/README.md

index 0f164e609..a8effd68b 100644

--- a/02-exploring-and-comparing-different-llms/README.md

+++ b/02-exploring-and-comparing-different-llms/README.md

@@ -1,6 +1,6 @@

# Exploring and comparing different LLMs

-[](https://youtu.be/J1mWzw0P74c)

+[](https://youtu.be/J1mWzw0P74c?WT.mc_id=academic-105485-koreyst)

> *Click the image above to view video of this lesson*

@@ -32,17 +32,17 @@ There are many different types of LLM models, your choice of model depends on wh

Depending on if you aim to use the models for text, audio, video, image generation and so on, you might opt for a different type of model.

-- **Audio and speech recognition**. For this purpose, Whisper-type models are a great choice as they're general-purpose and aimed at speech recognition. It's trained on diverse audio and can perform multilingual speech recognition. Learn more about [Whisper type models here](https://platform.openai.com/docs/models/whisper).

+- **Audio and speech recognition**. For this purpose, Whisper-type models are a great choice as they're general-purpose and aimed at speech recognition. They're trained on diverse audio and can perform multilingual speech recognition. Learn more about [Whisper-type models here](https://platform.openai.com/docs/models/whisper?WT.mc_id=academic-105485-koreyst).

-- **Image generation**. For image generation, DALL-E and Midjourney are two very known choices. DALL-E is offered by Azure OpenAI. [Read more about DALL-E here](https://platform.openai.com/docs/models/dall-e) and also in Chapter 9 of this curriculum.

+- **Image generation**. For image generation, DALL-E and Midjourney are two well-known choices. DALL-E is offered by Azure OpenAI. [Read more about DALL-E here](https://platform.openai.com/docs/models/dall-e?WT.mc_id=academic-105485-koreyst) and also in Chapter 9 of this curriculum.

-- **Text generation**. Most models are trained on text generation and you have a large variety of choices from GPT-3.5 to GPT-4. They come at different costs with GPT-4 being the most expensive. It's worth looking into the [Azure Open AI playground](https://oai.azure.com/portal/playground) to evaluate which models best fit your needs in terms of capability and cost.

+- **Text generation**. Most models are trained on text generation and you have a large variety of choices from GPT-3.5 to GPT-4. They come at different costs with GPT-4 being the most expensive. It's worth looking into the [Azure Open AI playground](https://oai.azure.com/portal/playground?WT.mc_id=academic-105485-koreyst) to evaluate which models best fit your needs in terms of capability and cost.

Selecting a model means you get some basic capabilities, that might not be enough however. Often you have company specific data that you somehow need to tell the LLM about. There are a few different choices on how to approach that, more on that in the upcoming sections.

### Foundation Models versus LLMs

-The term Foundation Model was [coined by Stanford researchers](https://arxiv.org/abs/2108.07258) and defined as an AI model that follows some criteria, such as:

+The term Foundation Model was [coined by Stanford researchers](https://arxiv.org/abs/2108.07258?WT.mc_id=academic-105485-koreyst) and defined as an AI model that follows some criteria, such as:

- **They are trained using unsupervised learning or self-supervised learning**, meaning they are trained on unlabeled multi-modal data, and they do not require human annotation or labeling of data for their training process.

- **They are very large models**, based on very deep neural networks trained on billions of parameters.

@@ -57,29 +57,29 @@ To further clarify this distinction, let’s take ChatGPT as an example. To buil

-Image source: [2108.07258.pdf (arxiv.org)](https://arxiv.org/pdf/2108.07258.pdf)

+Image source: [2108.07258.pdf (arxiv.org)](https://arxiv.org/pdf/2108.07258.pdf?WT.mc_id=academic-105485-koreyst)

### Open Source versus Proprietary Models

Another way to categorize LLMs is whether they are open source or proprietary.

-Open-source models are models that are made available to the public and can be used by anyone. They are often made available by the company that created them, or by the research community. These models are allowed to be inspected, modified, and customized for the various use cases in LLMs. However, they are not always optimized for production use, and may not be as performant as proprietary models. Plus, funding for open-source models can be limited, and they may not be maintained long term or may not be updated with the latest research. Examples of popular open source models include [Alpaca](https://crfm.stanford.edu/2023/03/13/alpaca.html), [Bloom](https://sapling.ai/llm/bloom) and [LLaMA](https://sapling.ai/llm/llama).

+Open-source models are models that are made available to the public and can be used by anyone. They are often made available by the company that created them, or by the research community. These models are allowed to be inspected, modified, and customized for the various use cases in LLMs. However, they are not always optimized for production use, and may not be as performant as proprietary models. Plus, funding for open-source models can be limited, and they may not be maintained long term or may not be updated with the latest research. Examples of popular open source models include [Alpaca](https://crfm.stanford.edu/2023/03/13/alpaca.html?WT.mc_id=academic-105485-koreyst), [Bloom](https://sapling.ai/llm/bloom?WT.mc_id=academic-105485-koreyst) and [LLaMA](https://sapling.ai/llm/llama?WT.mc_id=academic-105485-koreyst).

-Proprietary models are models that are owned by a company and are not made available to the public. These models are often optimized for production use. However, they are not allowed to be inspected, modified, or customized for different use cases. Plus, they are not always available for free, and may require a subscription or payment to use. Also, users do not have control over the data that is used to train the model, which means they should entrust the model owner with ensuring commitment to data privacy and responsible use of AI. Examples of popular proprietary models include [OpenAI models](https://platform.openai.com/docs/models/overview), [Google Bard](https://sapling.ai/llm/bard) or [Claude 2](https://www.anthropic.com/index/claude-2).

+Proprietary models are models that are owned by a company and are not made available to the public. These models are often optimized for production use. However, they are not allowed to be inspected, modified, or customized for different use cases. Plus, they are not always available for free, and may require a subscription or payment to use. Also, users do not have control over the data that is used to train the model, which means they should entrust the model owner with ensuring commitment to data privacy and responsible use of AI. Examples of popular proprietary models include [OpenAI models](https://platform.openai.com/docs/models/overview?WT.mc_id=academic-105485-koreyst), [Google Bard](https://sapling.ai/llm/bard?WT.mc_id=academic-105485-koreyst) or [Claude 2](https://www.anthropic.com/index/claude-2?WT.mc_id=academic-105485-koreyst).

### Embedding versus Image generation versus Text and Code generation

LLMs can also be categorized by the output they generate.

-Embeddings are a set of models that can convert text into a numerical form, called embedding, which is a numerical representation of the input text. Embeddings make it easier for machines to understand the relationships between words or sentences and can be consumed as inputs by other models, such as classification models, or clustering models that have better performance on numerical data. Embedding models are often used for transfer learning, where a model is built for a surrogate task for which there’s an abundance of data, and then the model weights (embeddings) are re-used for other downstream tasks. An example of this category is [OpenAI embeddings](https://platform.openai.com/docs/models/embeddings).

+Embeddings are a set of models that can convert text into a numerical form, called embedding, which is a numerical representation of the input text. Embeddings make it easier for machines to understand the relationships between words or sentences and can be consumed as inputs by other models, such as classification models, or clustering models that have better performance on numerical data. Embedding models are often used for transfer learning, where a model is built for a surrogate task for which there’s an abundance of data, and then the model weights (embeddings) are re-used for other downstream tasks. An example of this category is [OpenAI embeddings](https://platform.openai.com/docs/models/embeddings?WT.mc_id=academic-105485-koreyst).

-Image generation models are models that generate images. These models are often used for image editing, image synthesis, and image translation. Image generation models are often trained on large datasets of images, such as [LAION-5B](https://laion.ai/blog/laion-5b/), and can be used to generate new images or to edit existing images with inpainting, super-resolution, and colorization techniques. Examples include [DALL-E-3](https://openai.com/dall-e-3) and [Stable Diffusion models](https://github.com/Stability-AI/StableDiffusion).

+Image generation models are models that generate images. These models are often used for image editing, image synthesis, and image translation. Image generation models are often trained on large datasets of images, such as [LAION-5B](https://laion.ai/blog/laion-5b/?WT.mc_id=academic-105485-koreyst), and can be used to generate new images or to edit existing images with inpainting, super-resolution, and colorization techniques. Examples include [DALL-E-3](https://openai.com/dall-e-3?WT.mc_id=academic-105485-koreyst) and [Stable Diffusion models](https://github.com/Stability-AI/StableDiffusion?WT.mc_id=academic-105485-koreyst).

-Text and code generation models are models that generate text or code. These models are often used for text summarization, translation, and question answering. Text generation models are often trained on large datasets of text, such as [BookCorpus](https://www.cv-foundation.org/openaccess/content_iccv_2015/html/Zhu_Aligning_Books_and_ICCV_2015_paper.html), and can be used to generate new text, or to answer questions. Code generation models, like [CodeParrot](https://huggingface.co/codeparrot), are often trained on large datasets of code, such as GitHub, and can be used to generate new code, or to fix bugs in existing code.

+Text and code generation models are models that generate text or code. These models are often used for text summarization, translation, and question answering. Text generation models are often trained on large datasets of text, such as [BookCorpus](https://www.cv-foundation.org/openaccess/content_iccv_2015/html/Zhu_Aligning_Books_and_ICCV_2015_paper.html?WT.mc_id=academic-105485-koreyst), and can be used to generate new text, or to answer questions. Code generation models, like [CodeParrot](https://huggingface.co/codeparrot?WT.mc_id=academic-105485-koreyst), are often trained on large datasets of code, such as GitHub, and can be used to generate new code, or to fix bugs in existing code.

@@ -106,7 +106,7 @@ Models are just the Neural Network, with the parameters, weights, and others. Al

## How to test and iterate with different models to understand performance on Azure

Once our team has explored the current LLMs landscape and identified some good candidates for their scenarios, the next step is testing them on their data and on their workload. This is an iterative process, done by experiments and measures.

-Most of the models we mentioned in previous paragraphs (OpenAI models, open source models like Llama2, and Hugging Face transformers) are available in the [Foundation Models](https://learn.microsoft.com/azure/machine-learning/concept-foundation-models?WT.mc_id=academic-105485-koreyst) catalog in [Azure Machine Learning studio](https://ml.azure.com/).

+Most of the models we mentioned in previous paragraphs (OpenAI models, open source models like Llama2, and Hugging Face transformers) are available in the [Foundation Models](https://learn.microsoft.com/azure/machine-learning/concept-foundation-models?WT.mc_id=academic-105485-koreyst) catalog in [Azure Machine Learning studio](https://ml.azure.com/?WT.mc_id=academic-105485-koreyst).

[Azure Machine Learning](https://azure.microsoft.com/products/machine-learning/?WT.mc_id=academic-105485-koreyst) is a Cloud Service designed for data scientists and ML engineers to manage the whole ML lifecycle (train, test, deploy and handle MLOps) in a single platform. The Machine Learning studio offers a graphical user interface to this service and enables the user to:

@@ -145,7 +145,7 @@ deploy an LLM in production, with different levels of complexity, cost, and qual

-Img source: [Four Ways that Enterprises Deploy LLMs | Fiddler AI Blog](https://www.fiddler.ai/blog/four-ways-that-enterprises-deploy-llms)

+Img source: [Four Ways that Enterprises Deploy LLMs | Fiddler AI Blog](https://www.fiddler.ai/blog/four-ways-that-enterprises-deploy-llms?WT.mc_id=academic-105485-koreyst)

### Prompt Engineering with Context

@@ -192,6 +192,6 @@ Read up more on how you can [use RAG](https://learn.microsoft.com/azure/search/r

## Great Work, Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 3 where we will look at how to [build with Generative AI Responsibly](../03-using-generative-ai-responsibly/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/03-using-generative-ai-responsibly/README.md b/03-using-generative-ai-responsibly/README.md

index 37d20ad16..92c04eadf 100644

--- a/03-using-generative-ai-responsibly/README.md

+++ b/03-using-generative-ai-responsibly/README.md

@@ -46,7 +46,7 @@ The model produces a response like the one below:

_11zon.webp?WT.mc_id=academic-105485-koreyst)

-> *(Source: [Flying bisons](https://flyingbisons.com))*

+> *(Source: [Flying bisons](https://flyingbisons.com?WT.mc_id=academic-105485-koreyst))*

This is a very confident and thorough answer. Unfortunately, it is incorrect. Even with a minimal amount of research, one would discover there was more than one survivor of the Titanic survivor. For a student who is just starting to research this topic, this answer can be persuasive enough to not be questioned and treated as fact. The consequences of this can lead to the AI system being unreliable and negatively impact the reputation of our startup.

@@ -128,6 +128,6 @@ Read up on [Azure AI Content Saftey](https://learn.microsoft.com/azure/ai-servic

## Great Work, Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 4 where we will look at [Prompt Engineering Fundamentals](../04-prompt-engineering-fundamentals/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/04-prompt-engineering-fundamentals/1-introduction.ipynb b/04-prompt-engineering-fundamentals/1-introduction.ipynb

index 1e71671de..01972de2f 100644

--- a/04-prompt-engineering-fundamentals/1-introduction.ipynb

+++ b/04-prompt-engineering-fundamentals/1-introduction.ipynb

@@ -21,7 +21,7 @@

"source": [

"### Exercise 1: Tokenization\n",

"Explore Tokenization using tiktoken, an open-source fast tokenizer from OpenAI\n",

- "See [OpenAI Cookbook](https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb) for more examples.\n"

+ "See [OpenAI Cookbook](https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb?WT.mc_id=academic-105485-koreyst) for more examples.\n"

]

},

{

diff --git a/04-prompt-engineering-fundamentals/README.md b/04-prompt-engineering-fundamentals/README.md

index 00ac9d598..9a92c0814 100644

--- a/04-prompt-engineering-fundamentals/README.md

+++ b/04-prompt-engineering-fundamentals/README.md

@@ -1,6 +1,6 @@

# Prompt Engineering Fundamentals

-[](https://youtu.be/r2ItK3UMVTk)

+[](https://youtu.be/r2ItK3UMVTk?WT.mc_id=academic-105485-koreyst)

How you write your prompt to the LLM matters, a carefully crafted prompt can achieve achieve a better result than one that isn't. But what even are these concepts, prompt, prompt engineering and how do I improve what I send to the LLM? Questions like these are what this chapter and the upcoming chapter are looking to answer.

@@ -75,15 +75,15 @@ This is necessarily a trial-and-error process that requires user intuition and e

An LLM sees prompts as a _sequence of tokens_ where different models (or versions of a model) can tokenize the same prompt in different ways. Since LLMs are trained on tokens (and not on raw text), the way prompts get tokenized has a direct impact on the quality of the generated response.

-To get an intuition for how tokenization works, try tools like the [OpenAI Tokenizer](https://platform.openai.com/tokenizer) shown below. Copy in your prompt - and see how that gets converted into tokens, paying attention to how whitespace characters and punctuation marks are handled. Note that this example shows an older LLM (GPT-3) - so trying this with a newer model may produce a different result.

+To get an intuition for how tokenization works, try tools like the [OpenAI Tokenizer](https://platform.openai.com/tokenizer?WT.mc_id=academic-105485-koreyst) shown below. Copy in your prompt - and see how that gets converted into tokens, paying attention to how whitespace characters and punctuation marks are handled. Note that this example shows an older LLM (GPT-3) - so trying this with a newer model may produce a different result.

### Concept: Foundation Models

-Once a prompt is tokenized, the primary function of the ["Base LLM"](https://blog.gopenai.com/an-introduction-to-base-and-instruction-tuned-large-language-models-8de102c785a6) (or Foundation model) is to predict the token in that sequence. Since LLMs are trained on massive text datasets, they have a good sense of the statistical relationships between tokens and can make that prediction with some confidence. Not that they don't understand the _meaning_ of the words in the prompt or token; they just see a pattern they can "complete" with their next prediction. They can continue predicting the sequence till terminated by user intervention or some pre-established condition.

+Once a prompt is tokenized, the primary function of the ["Base LLM"](https://blog.gopenai.com/an-introduction-to-base-and-instruction-tuned-large-language-models-8de102c785a6?WT.mc_id=academic-105485-koreyst) (or Foundation model) is to predict the next token in that sequence. Since LLMs are trained on massive text datasets, they have a good sense of the statistical relationships between tokens and can make that prediction with some confidence. Note that they don't understand the _meaning_ of the words in the prompt or token; they just see a pattern they can "complete" with their next prediction. They can continue predicting the sequence until terminated by user intervention or some pre-established condition.

-Want to see how prompt-based completion works? Enter the above prompt into the Azure OpenAI Studio [_Chat Playground_](https://oai.azure.com/playground) with the default settings. The system is configured to treat prompts as requests for information - so you should see a completion that satisfies this context.

+Want to see how prompt-based completion works? Enter the above prompt into the Azure OpenAI Studio [_Chat Playground_](https://oai.azure.com/playground?WT.mc_id=academic-105485-koreyst) with the default settings. The system is configured to treat prompts as requests for information - so you should see a completion that satisfies this context.

But what if the user wanted to see something specific that met some criteria or task objective? This is where _instruction-tuned_ LLMs come into the picture.

@@ -91,7 +91,7 @@ But what if the user wanted to see something specific that met some criteria or

### Concept: Instruction Tuned LLMs

-An [Instruction Tuned LLM](https://blog.gopenai.com/an-introduction-to-base-and-instruction-tuned-large-language-models-8de102c785a6) starts with the foundation model and fine-tunes it with examples or input/output pairs (e.g., multi-turn "messages") that can contain clear instructions - and the response from the AI attempt to follow that instruction.

+An [Instruction Tuned LLM](https://blog.gopenai.com/an-introduction-to-base-and-instruction-tuned-large-language-models-8de102c785a6?WT.mc_id=academic-105485-koreyst) starts with the foundation model and fine-tunes it with examples or input/output pairs (e.g., multi-turn "messages") that can contain clear instructions - and the response from the AI attempts to follow that instruction.

This uses techniques like Reinforcement Learning with Human Feedback (RLHF) that can train the model to _follow instructions_ and _learn from feedback_ so that it produces responses that are better-suited to practical applications and more-relevant to user objectives.

@@ -178,7 +178,7 @@ We've seen why prompt engineering is important - now let's understand how prompt

### Basic Prompt

-Let's start with the basic prompt: a text input sent to the model with no other context. Here's an example - when we send the first few words of the US national anthem to the OpenAI [Completion API](https://platform.openai.com/docs/api-reference/completions) it instantly _completes_ the response with the next few lines, illustrating the basic prediction behavior.

+Let's start with the basic prompt: a text input sent to the model with no other context. Here's an example - when we send the first few words of the US national anthem to the OpenAI [Completion API](https://platform.openai.com/docs/api-reference/completions?WT.mc_id=academic-105485-koreyst) it instantly _completes_ the response with the next few lines, illustrating the basic prediction behavior.

| Prompt (Input) | Completion (Output) |

|:---|:---|

@@ -272,9 +272,9 @@ Another technique for using primary content is to provide _cues_ rather than exa

### Prompt Templates

-A prompt template is a _pre-defined recipe for a prompt_ that can be stored and reused as needed, to drive more consistent user experiences at scale. In its simplest form, it is simply a collection of prompt examples like [this one from OpenAI](https://platform.openai.com/examples) that provides both the interactive prompt components (user and system messages) and the API-driven request format - to support reuse.

+A prompt template is a _pre-defined recipe for a prompt_ that can be stored and reused as needed, to drive more consistent user experiences at scale. In its simplest form, it is simply a collection of prompt examples like [this one from OpenAI](https://platform.openai.com/examples?WT.mc_id=academic-105485-koreyst) that provides both the interactive prompt components (user and system messages) and the API-driven request format - to support reuse.

-In it's more complex form like [this example from LangChain](https://python.langchain.com/docs/modules/model_io/prompts/prompt_templates/) it contains _placeholders_ that can be replaced with data from a variety of sources (user input, system context, external data sources etc.) to generate a prompt dynamically. This allows us to create a library of reusable prompts that can be used to drive consistent user experiences **programmatically** at scale.

+In its more complex form, like [this example from LangChain](https://python.langchain.com/docs/modules/model_io/prompts/prompt_templates/?WT.mc_id=academic-105485-koreyst), it contains _placeholders_ that can be replaced with data from a variety of sources (user input, system context, external data sources etc.) to generate a prompt dynamically. This allows us to create a library of reusable prompts that can be used to drive consistent user experiences **programmatically** at scale.

Finally, the real value of templates lies in the ability to create and publish _prompt libraries_ for vertical application domains - where the prompt template is now _optimized_ to reflect application-specific context or examples that make the responses more relevant and accurate for the targeted user audience. The [Prompts For Edu](https://github.com/microsoft/prompts-for-edu?WT.mc_id=academic-105485-koreyst) repository is a great example of this approach, curating a library of prompts for the education domain with emphasis on key objectives like lesson planning, curriculum design, student tutoring etc.

@@ -319,7 +319,7 @@ Prompt Engineering is a trial-and-error process so keep three broad guiding fact

## Best Practices

-Now let's look at common best practices that are recommended by [Open AI](https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-openai-api) and [Azure OpenAI](https://learn.microsoft.com/azure/ai-services/openai/concepts/prompt-engineering#best-practices?WT.mc_id=academic-105485-koreyst) practitioners.

+Now let's look at common best practices that are recommended by [Open AI](https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-openai-api?WT.mc_id=academic-105485-koreyst) and [Azure OpenAI](https://learn.microsoft.com/azure/ai-services/openai/concepts/prompt-engineering?WT.mc_id=academic-105485-koreyst#best-practices) practitioners.

| What | Why |

|:---|:---|

@@ -360,7 +360,7 @@ For our assignment, we'll be using a Jupyter Notebook with exercises you can com

### Next, configure your environment variables

-- Copt the `.env.copy` file in repo root to `.env` and fill in the `OPENAI_API_KEY` value. You can find your API Key in your [OpenAI Dashboard](https://beta.openai.com/account/api-keys).

+- Copy the `.env.copy` file in the repo root to `.env` and fill in the `OPENAI_API_KEY` value. You can find your API key in your [OpenAI Dashboard](https://beta.openai.com/account/api-keys?WT.mc_id=academic-105485-koreyst).

### Next, open the Jupyter Notebook

diff --git a/05-advanced-prompts/README.md b/05-advanced-prompts/README.md

index 0ad4768a0..29c448218 100644

--- a/05-advanced-prompts/README.md

+++ b/05-advanced-prompts/README.md

@@ -1,6 +1,6 @@

# Creating Advanced prompts

-[](https://youtu.be/32GBH6BTWZQ)

+[](https://youtu.be/32GBH6BTWZQ?WT.mc_id=academic-105485-koreyst)

Let's recap some learnings from the previous chapter:

@@ -619,6 +619,6 @@ You just used the self-refine technique in the assignment. Take any program you

## Great Work! Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 6 where we will apply our knowledge of Prompt Engineering by [building text generation apps](../06-text-generation-apps/README.md?WT.mc_id=academic-105485-koreyst)

diff --git a/06-text-generation-apps/README.md b/06-text-generation-apps/README.md

index cebb015fb..85af890c1 100644

--- a/06-text-generation-apps/README.md

+++ b/06-text-generation-apps/README.md

@@ -1,6 +1,6 @@

# Building Text Generation Applications

-[](https://youtu.be/5jKHzY6-4s8)

+[](https://youtu.be/5jKHzY6-4s8?WT.mc_id=academic-105485-koreyst)

> *(Click the image above to view video of this lesson)*

@@ -99,7 +99,7 @@ You need to carry out the following steps:

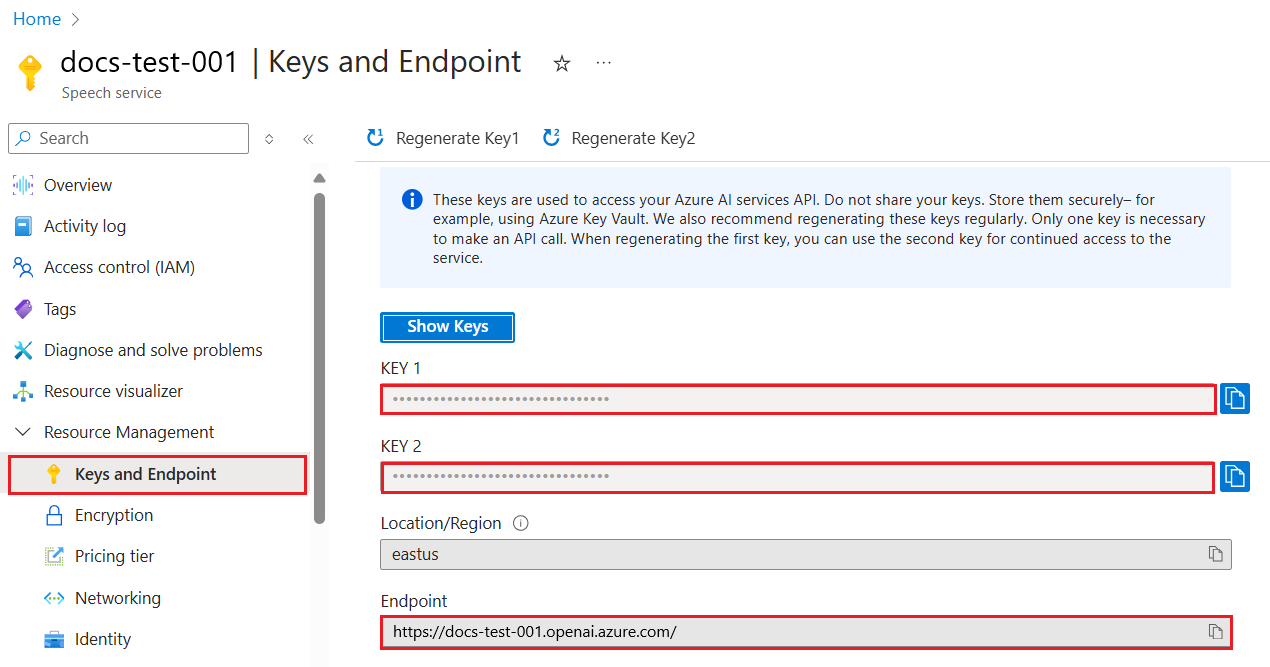

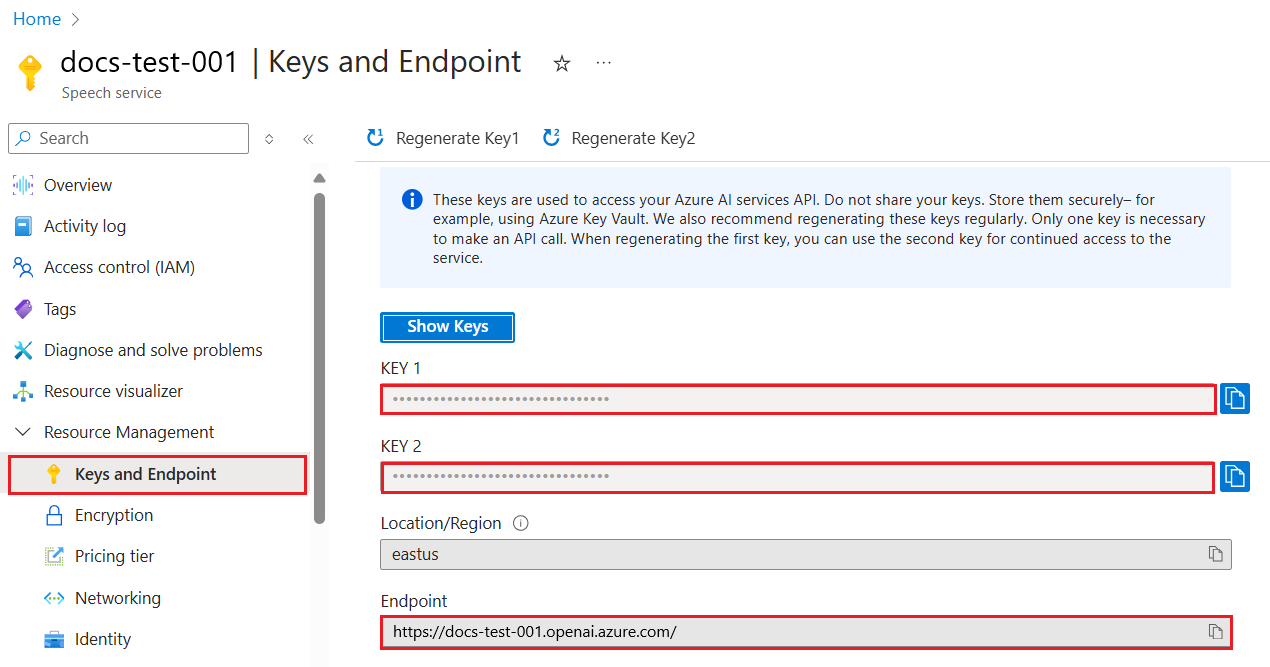

At this point, you need to tell your `openai` library what API key to use. To find your API key, go to "Keys and Endpoint" section of your Azure Open AI resource and copy the "Key 1" value.

-

+

Now that you have this information copied, let's instruct the libraries to use it.

@@ -654,6 +654,6 @@ When working on the assignment, try to vary the temperature, try set it to 0, 0.

## Great Work! Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 7 where we will look at how to [build chat applications](../07-building-chat-applications/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/06-text-generation-apps/notebook-azure-openai.ipynb b/06-text-generation-apps/notebook-azure-openai.ipynb

index 23f885d1c..90004b980 100644

--- a/06-text-generation-apps/notebook-azure-openai.ipynb

+++ b/06-text-generation-apps/notebook-azure-openai.ipynb

@@ -95,14 +95,14 @@

" > At the time of writing, you need to apply for access to Azure Open AI.\n",

"\n",

"- Install Python \n",

- "- Have created an Azure OpenAI Service resource. See this guide for how to [create a resource](https://learn.microsoft.com/azure/ai-services/openai/how-to/create-resource?pivots=web-portal).\n",

+ "- Have created an Azure OpenAI Service resource. See this guide for how to [create a resource](https://learn.microsoft.com/azure/ai-services/openai/how-to/create-resource?pivots=web-portal&WT.mc_id=academic-105485-koreyst).\n",

"\n",

"\n",

"### Locate API key and endpoint\n",

"\n",

"At this point, you need to tell your `openai` library what API key to use. To find your API key, go to \"Keys and Endpoint\" section of your Azure Open AI resource and copy the \"Key 1\" value.\n",

"\n",

- " \n",

+ " \n",

"\n",

"Now that you have this information copied, let's instruct the libraries to use it.\n",

"\n",

diff --git a/07-building-chat-applications/README.md b/07-building-chat-applications/README.md

index 704fa9286..d951e2128 100644

--- a/07-building-chat-applications/README.md

+++ b/07-building-chat-applications/README.md

@@ -1,6 +1,6 @@

# Building Generative AI-Powered Chat Applications

-[](https://youtu.be/Kw4i-tlKMrQ)

+[](https://youtu.be/Kw4i-tlKMrQ?WT.mc_id=academic-105485-koreyst)

> *(Click the image above to view video of this lesson)*

@@ -179,6 +179,6 @@ See [assignment](./notebook-azure-openai.ipynb?WT.mc_id=academic-105485-koreyst)

## Great Work! Continue the Journey

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 8 to see how you can start [building search applications](../08-building-search-applications/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/07-building-chat-applications/notebook-azure-openai.ipynb b/07-building-chat-applications/notebook-azure-openai.ipynb

index fc2f07cec..95401c2cb 100644

--- a/07-building-chat-applications/notebook-azure-openai.ipynb

+++ b/07-building-chat-applications/notebook-azure-openai.ipynb

@@ -27,11 +27,11 @@

},

"source": [

"## Overview\n",

- "This notebook is adapted from the [Azure OpenAI Samples Repository](https://github.com/Azure/azure-openai-samples) that includes notebooks that also access the [OpenAI](notebooks-oai.ipynb) service.\n",

+ "This notebook is adapted from the [Azure OpenAI Samples Repository](https://github.com/Azure/azure-openai-samples?WT.mc_id=academic-105485-koreyst) that includes notebooks that also access the [OpenAI](notebook-openai.ipynb) service.\n",

"\n",

- "The Python OpenAI API works with Azure OpenAI as well, with a few modifications. Learn more about the differences here: [How to switch between OpenAI and Azure OpenAI endpoints with Python](https://learn.microsoft.com/azure/ai-services/openai/how-to/switching-endpoints?WT_mc_id=academic-109527-jasmineg)\n",

+ "The Python OpenAI API works with Azure OpenAI as well, with a few modifications. Learn more about the differences here: [How to switch between OpenAI and Azure OpenAI endpoints with Python](https://learn.microsoft.com/azure/ai-services/openai/how-to/switching-endpoints?WT.mc_id=academic-109527-jasmineg)\n",

"\n",

- "For more quickstart examples please refer to the official Azure Open AI Quickstart Documentation https://learn.microsoft.com/azure/cognitive-services/openai/quickstart?pivots=programming-language-studio?WT.mc_id=academic-105485-koreyst"

+ "For more quickstart examples please refer to the official Azure Open AI Quickstart Documentation https://learn.microsoft.com/azure/cognitive-services/openai/quickstart?pivots=programming-language-studio&WT.mc_id=academic-105485-koreyst"

]

},

{

@@ -74,10 +74,10 @@

"source": [

"### Getting started with Azure OpenAI Service\n",

"\n",

- "New customers will need to [apply for access](https://aka.ms/oai/access) to Azure OpenAI Service. \n",

+ "New customers will need to [apply for access](https://aka.ms/oai/access?WT.mc_id=academic-105485-koreyst) to Azure OpenAI Service. \n",

"After approval is complete, customers can log into the Azure portal, create an Azure OpenAI Service resource, and start experimenting with models via the studio \n",

"\n",

- "[Great resource for getting started quickly](https://techcommunity.microsoft.com/t5/educator-developer-blog/azure-openai-is-now-generally-available/ba-p/3719177 )\n"

+ "[Great resource for getting started quickly](https://techcommunity.microsoft.com/t5/educator-developer-blog/azure-openai-is-now-generally-available/ba-p/3719177?WT.mc_id=academic-105485-koreyst)\n"

]

},

{

@@ -206,7 +206,7 @@

"### 3. Finding the right model \n",

"The GPT-3.5-turbo or GPT-4 models can understand and generate natural language. The service offers four model capabilities, each with different levels of power and speed suitable for different tasks. \n",

"\n",

- "[Azure OpenAI models](https://learn.microsoft.com/azure/cognitive-services/openai/concepts/models) \n",

+ "[Azure OpenAI models](https://learn.microsoft.com/azure/cognitive-services/openai/concepts/models?WT.mc_id=academic-105485-koreyst) \n",

" \n"

]

},

@@ -835,8 +835,8 @@

},

"source": [

"# References \n",

- "- [Azure Documentation - Azure Open AI Models](https://learn.microsoft.com/azure/cognitive-services/openai/concepts/models) \n",

- "- [OpenAI Studio Examples](https://oai.azure.com/portal) "

+ "- [Azure Documentation - Azure Open AI Models](https://learn.microsoft.com/azure/cognitive-services/openai/concepts/models?WT.mc_id=academic-105485-koreyst) \n",

+ "- [OpenAI Studio Examples](https://oai.azure.com/portal?WT.mc_id=academic-105485-koreyst) "

]

},

{

@@ -852,7 +852,7 @@

"source": [

"# For More Help \n",

"[OpenAI Commercialization Team](AzureOpenAITeam@microsoft.com) \n",

- "AI Specialized CSAs [aka.ms/airangers](aka.ms/airangers)"

+ "AI Specialized CSAs [aka.ms/airangers](https://aka.ms/airangers?WT.mc_id=academic-105485-koreyst)"

]

},

{

diff --git a/07-building-chat-applications/notebook-openai.ipynb b/07-building-chat-applications/notebook-openai.ipynb

index 37c5cb677..978a141ac 100644

--- a/07-building-chat-applications/notebook-openai.ipynb

+++ b/07-building-chat-applications/notebook-openai.ipynb

@@ -14,9 +14,9 @@

"# Chapter 7: Building Chat Applications\n",

"## OpenAI API Quickstart\n",

"\n",

- "This notebook is adapted from the [Azure OpenAI Samples Repository](https://github.com/Azure/azure-openai-samples) that includes notebooks that access [Azure OpenAI](notebooks-azure-oai.ipynb) services.\n",

+ "This notebook is adapted from the [Azure OpenAI Samples Repository](https://github.com/Azure/azure-openai-samples?WT.mc_id=academic-105485-koreyst) that includes notebooks that access [Azure OpenAI](notebook-azure-openai.ipynb) services.\n",

"\n",

- "The Python OpenAI API works with Azure OpenAI Models as well, with a few modifications. Learn more about the differences here: [How to switch between OpenAI and Azure OpenAI endpoints with Python](https://learn.microsoft.com/azure/ai-services/openai/how-to/switching-endpoints?WT_mc_id=academic-109527-jasmineg)"

+ "The Python OpenAI API works with Azure OpenAI Models as well, with a few modifications. Learn more about the differences here: [How to switch between OpenAI and Azure OpenAI endpoints with Python](https://learn.microsoft.com/azure/ai-services/openai/how-to/switching-endpoints?WT.mc_id=academic-109527-jasmineg)"

]

},

{

@@ -589,9 +589,9 @@

},

"source": [

"# References \n",

- "- [Openai Cookbook](https://github.com/openai/openai-cookbook) \n",

- "- [OpenAI Studio Examples](https://oai.azure.com/portal) \n",

- "- [Best practices for fine-tuning GPT-3 to classify text](https://docs.google.com/document/d/1rqj7dkuvl7Byd5KQPUJRxc19BJt8wo0yHNwK84KfU3Q/edit#)"

+ "- [Openai Cookbook](https://github.com/openai/openai-cookbook?WT.mc_id=academic-105485-koreyst) \n",

+ "- [OpenAI Studio Examples](https://oai.azure.com/portal?WT.mc_id=academic-105485-koreyst) \n",

+ "- [Best practices for fine-tuning GPT-3 to classify text](https://docs.google.com/document/d/1rqj7dkuvl7Byd5KQPUJRxc19BJt8wo0yHNwK84KfU3Q/edit?WT.mc_id=academic-105485-koreyst#)"

]

},

{

@@ -607,7 +607,7 @@

"source": [

"# For More Help \n",

"[OpenAI Commercialization Team](AzureOpenAITeam@microsoft.com) \n",

- "AI Specialized CSAs [aka.ms/airangers](aka.ms/airangers)"

+ "AI Specialized CSAs [aka.ms/airangers](https://aka.ms/airangers?WT.mc_id=academic-105485-koreyst)"

]

},

{

diff --git a/08-building-search-applications/README.md b/08-building-search-applications/README.md

index 047710830..cb719b594 100644

--- a/08-building-search-applications/README.md

+++ b/08-building-search-applications/README.md

@@ -31,11 +31,11 @@ After completing this lesson, you will be able to:

Creating a search application will help you understand how to use Embeddings to search for data. You will also learn how to build a search application that can be used by students to find information quickly.

-The lesson includes an Embedding Index of the YouTube transcripts for the Microsoft [AI Show](https://www.youtube.com/playlist?list=PLlrxD0HtieHi0mwteKBOfEeOYf0LJU4O1) YouTube channel. The AI Show is a YouTube channel that teaches you about AI and machine learning. The Embedding Index contains the Embeddings for each of the YouTube transcripts up until Oct 2023. You will use the Embedding Index to build a search application for our startup. The search application returns a link to the place in the video where the answer to the question is located. This is a great way for students to find the information they need quickly.

+The lesson includes an Embedding Index of the YouTube transcripts for the Microsoft [AI Show](https://www.youtube.com/playlist?list=PLlrxD0HtieHi0mwteKBOfEeOYf0LJU4O1&WT.mc_id=academic-105485-koreyst) YouTube channel. The AI Show is a YouTube channel that teaches you about AI and machine learning. The Embedding Index contains the Embeddings for each of the YouTube transcripts up until Oct 2023. You will use the Embedding Index to build a search application for our startup. The search application returns a link to the place in the video where the answer to the question is located. This is a great way for students to find the information they need quickly.

The following is an example of a semantic query for the question 'can you use rstudio with azure ml?'. Check out the YouTube url, you'll see the url contains a timestamp that takes you to the place in the video where the answer to the question is located.

-

+

## What is semantic search?

@@ -45,7 +45,7 @@ Here is an example of a semantic search. Let's say you were looking to buy a car

## What are Text Embeddings?

-[Text embeddings](https://en.wikipedia.org/wiki/Word_embedding) are a text representation technique used in [natural language processing](https://en.wikipedia.org/wiki/Natural_language_processing). Text embeddings are semantic numerical representations of text. Embeddings are used to represent data in a way that is easy for a machine to understand. There are many models for building text embeddings, in this lesson, we will focus on generating embeddings using the OpenAI Embedding Model.

+[Text embeddings](https://en.wikipedia.org/wiki/Word_embedding?WT.mc_id=academic-105485-koreyst) are a text representation technique used in [natural language processing](https://en.wikipedia.org/wiki/Natural_language_processing?WT.mc_id=academic-105485-koreyst). Text embeddings are semantic numerical representations of text. Embeddings are used to represent data in a way that is easy for a machine to understand. There are many models for building text embeddings, in this lesson, we will focus on generating embeddings using the OpenAI Embedding Model.

Here's an example, image the following text is in a transcript from one of the episodes on the AI Show YouTube channel:

@@ -65,7 +65,7 @@ The Embedding index for this lesson was created with a series of Python scripts.

The scripts perform the following operations:

-1. The transcript for each YouTube video in the [AI Show](https://www.youtube.com/playlist?list=PLlrxD0HtieHi0mwteKBOfEeOYf0LJU4O1) playlist is downloaded.

+1. The transcript for each YouTube video in the [AI Show](https://www.youtube.com/playlist?list=PLlrxD0HtieHi0mwteKBOfEeOYf0LJU4O1&WT.mc_id=academic-105485-koreyst) playlist is downloaded.

2. Using [OpenAI Functions](https://learn.microsoft.com/azure/ai-services/openai/how-to/function-calling?WT.mc_id=academic-105485-koreyst), an attempt is made to extract the speaker name from the first 3 minutes of the YouTube transcript. The speaker name for each video is stored in the Embedding Index named `embedding_index_3m.json`.

3. The transcript text is then chunked into **3 minute text segments**. The segment includes about 20 words overlapping from the next segment to ensure that the Embedding for the segment is not cut off and to provide better search context.

4. Each text segment is then passed to the OpenAI Chat API to summarize the text into 60 words. The summary is also stored in the Embedding Index `embedding_index_3m.json`.
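
The chunking in step 3 can be sketched roughly as follows. This is a minimal illustration, not the lesson's actual script: the `(start_seconds, text)` input shape, the function name `chunk_transcript`, and the returned dictionary keys are all assumptions made for the example.

```python
def chunk_transcript(entries, window_seconds=180, overlap_words=20):
    """Group (start_seconds, text) entries into fixed time windows.

    Hypothetical helper: each chunk is extended with the first
    `overlap_words` words of the following chunk, so that text at a
    window boundary is not cut off.
    """
    # Bucket transcript lines by the window their start time falls into.
    buckets = {}
    for start, text in entries:
        buckets.setdefault(int(start // window_seconds), []).append(text)

    keys = sorted(buckets)
    texts = [" ".join(buckets[k]) for k in keys]

    chunks = []
    for i, key in enumerate(keys):
        text = texts[i]
        if i + 1 < len(texts):
            # Borrow the opening words of the next window as overlap.
            overlap = " ".join(texts[i + 1].split()[:overlap_words])
            text = f"{text} {overlap}"
        chunks.append({"start": key * window_seconds, "text": text})
    return chunks
```

The `start` value kept with each chunk is what would let a search result link to the right place in the video.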

@@ -73,7 +73,7 @@ The scripts perform the following operations:

### Vector Databases

-For lesson simplicity, the Embedding Index is stored in a JSON file named `embedding_index_3m.json` and loaded into a Pandas Dataframe. However, in production, the Embedding Index would be stored in a vector database such as [Azure Cognitive Search](https://learn.microsoft.com/training/modules/improve-search-results-vector-search?WT.mc_id=academic-105485-koreyst), [Redis](https://cookbook.openai.com/examples/vector_databases/redis/readme), [Pinecone](https://cookbook.openai.com/examples/vector_databases/pinecone/readme), [Weaviate](https://cookbook.openai.com/examples/vector_databases/weaviate/readme), to name but a few.

+For lesson simplicity, the Embedding Index is stored in a JSON file named `embedding_index_3m.json` and loaded into a Pandas Dataframe. However, in production, the Embedding Index would be stored in a vector database such as [Azure Cognitive Search](https://learn.microsoft.com/training/modules/improve-search-results-vector-search?WT.mc_id=academic-105485-koreyst), [Redis](https://cookbook.openai.com/examples/vector_databases/redis/readme?WT.mc_id=academic-105485-koreyst), [Pinecone](https://cookbook.openai.com/examples/vector_databases/pinecone/readme?WT.mc_id=academic-105485-koreyst), [Weaviate](https://cookbook.openai.com/examples/vector_databases/weaviate/readme?WT.mc_id=academic-105485-koreyst), to name but a few.

## Understanding cosine similarity

@@ -83,13 +83,13 @@ We've learned about text embeddings, the next step is to learn how to use text e

Cosine similarity is a measure of similarity between two vectors, you'll also hear this referred to as `nearest neighbor search`. To perform a cosine similarity search you need to _vectorize_ for _query_ text using the OpenAI Embedding API. Then calculate the _cosine similarity_ between the query vector and each vector in the Embedding Index. Remember, the Embedding Index has a vector for each YouTube transcript text segment. Finally, sort the results by cosine similarity and the text segments with the highest cosine similarity are the most similar to the query.

-From a mathematic perspective, cosine similarity measures the cosine of the angle between two vectors projected in a multidimensional space. This measurement is beneficial, because if two documents are far apart by Euclidean distance because of size, they could still have a smaller angle between them and therefore higher cosine similarity. For more information about cosine similarity equations, see [Cosine similarity](https://en.wikipedia.org/wiki/Cosine_similarity).

+From a mathematical perspective, cosine similarity measures the cosine of the angle between two vectors projected in a multidimensional space. This measurement is beneficial, because if two documents are far apart by Euclidean distance because of size, they could still have a smaller angle between them and therefore higher cosine similarity. For more information about cosine similarity equations, see [Cosine similarity](https://en.wikipedia.org/wiki/Cosine_similarity?WT.mc_id=academic-105485-koreyst).
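
The search described above could be sketched like this in plain Python. The function names and the `(text, vector)` shape of the index are assumptions for illustration. In the lesson, the vectors would come from the OpenAI Embedding API rather than being written by hand.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Convention chosen here for zero-length vectors.
    return dot / (norm_a * norm_b)


def rank_segments(query_vector, index):
    """Sort (text, vector) pairs by similarity to the query, best first."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in index]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```

Note that `cosine_similarity([1, 0], [10, 0])` is still 1.0 even though the two vectors are far apart by Euclidean distance, which is the property the paragraph above relies on.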

## Building your first search application

Next, we're going to learn how to build a search application using Embeddings. The search application will allow students to search for a video by typing a question. The search application will return a list of videos that are relevant to the question. The search application will also return a link to the place in the video where the answer to the question is located.

-This solution was built and tested on Windows 11, macOS, and Ubuntu 22.04 using Python 3.10 or later. You can download Python from [python.org](https://www.python.org/downloads/).

+This solution was built and tested on Windows 11, macOS, and Ubuntu 22.04 using Python 3.10 or later. You can download Python from [python.org](https://www.python.org/downloads/?WT.mc_id=academic-105485-koreyst).

## Assignment - building a search application, to enable students

@@ -99,7 +99,7 @@ In this assignment, you will create the Azure OpenAI Services that will be used

### Start the Azure Cloud Shell

-1. Sign in to the [Azure portal](https://portal.azure.com/).

+1. Sign in to the [Azure portal](https://portal.azure.com/?WT.mc_id=academic-105485-koreyst).

2. Select the Cloud Shell icon in the upper-right corner of the Azure portal.

3. Select **Bash** for the environment type.

@@ -107,7 +107,7 @@ In this assignment, you will create the Azure OpenAI Services that will be used

> For these instructions, we're using the resource group named "semantic-video-search" in East US.

> You can change the name of the resource group, but when changing the location for the resources,

-> check the [model availability table](https://aka.ms/oai/models).

+> check the [model availability table](https://aka.ms/oai/models?WT.mc_id=academic-105485-koreyst).

```shell

az group create --name semantic-video-search --location eastus

@@ -154,10 +154,10 @@ Open the [solution notebook](./solution.ipynb?WT.mc_id=academic-105485-koreyst)

When you run the notebook, you'll be prompted to enter a query. The input box will look like this:

-

+

## Great Work! Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 9 where we will look at how to [build image generation applications](../09-building-image-applications/README.md?WT.mc_id=academic-105485-koreyst)!
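
When a URL already has a query string (as the playlist links do) or a fragment, the tracking parameter has to be joined with `&` and placed before the `#`. A minimal sketch of a helper that handles both cases with the standard library is shown below. The function name `add_tracking` is hypothetical, and this is not necessarily how the links in this change were produced.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_tracking(url, key="WT.mc_id", value="academic-105485-koreyst"):
    """Append key=value to the URL's query, keeping any fragment last."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    # Skip URLs that already carry the parameter so reruns are harmless.
    if not any(existing_key == key for existing_key, _ in query):
        query.append((key, value))
    # Caveat: urlencode may re-encode existing values differently.
    return urlunsplit(parts._replace(query=urlencode(query)))
```

Because the query is rebuilt from parsed pairs, running the helper twice on the same link leaves it unchanged.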

diff --git a/08-building-search-applications/scripts/README.md b/08-building-search-applications/scripts/README.md

index f0b9da845..1e812ef13 100644

--- a/08-building-search-applications/scripts/README.md

+++ b/08-building-search-applications/scripts/README.md

@@ -15,7 +15,7 @@ The transcription data prep scripts have been tested on the latest releases Wind

> [!NOTE]

> For these instructions we're using the resource group named "semantic-video-search" in East US.

> You can change the name of the resource group, but when changing the location for the resources,

-> check the [model availability table](https://aka.ms/oai/models).

+> check the [model availability table](https://aka.ms/oai/models?WT.mc_id=academic-105485-koreyst).

```console

az group create --name semantic-video-search --location eastus

@@ -63,7 +63,7 @@ az cognitiveservices account deployment create \

## Required software

-- [Python 3.9](https://www.python.org/downloads/) or greater

+- [Python 3.9](https://www.python.org/downloads/?WT.mc_id=academic-105485-koreyst) or greater

## Environment variables

@@ -103,7 +103,7 @@ export GOOGLE_DEVELOPER_API_KEY=

## Install the required Python libraries

-1. Install the [git client](https://git-scm.com/downloads) if it's not already installed.

+1. Install the [git client](https://git-scm.com/downloads?WT.mc_id=academic-105485-koreyst) if it's not already installed.

1. From a `Terminal` window, clone the sample to your preferred repo folder.

```bash

diff --git a/09-building-image-applications/README.md b/09-building-image-applications/README.md

index 9fa236fb4..1b4e0de9f 100644

--- a/09-building-image-applications/README.md

+++ b/09-building-image-applications/README.md

@@ -44,13 +44,13 @@ using a prompt like

## What is DALL-E and Midjourney?

-[DALL-E](https://openai.com/dall-e-2) and [Midjourney](https://www.midjourney.com/) are two of the most popular image generation models, they allow you to use prompts to generate images.

+[DALL-E](https://openai.com/dall-e-2?WT.mc_id=academic-105485-koreyst) and [Midjourney](https://www.midjourney.com/?WT.mc_id=academic-105485-koreyst) are two of the most popular image generation models, they allow you to use prompts to generate images.

### DALL-E

Let's start with DALL-E, which is a Generative AI model that generates images from text descriptions.

-> [DALL-E is a combination of two models, CLIP and diffused attention](https://towardsdatascience.com/openais-dall-e-and-clip-101-a-brief-introduction-3a4367280d4e).

+> [DALL-E is a combination of two models, CLIP and diffused attention](https://towardsdatascience.com/openais-dall-e-and-clip-101-a-brief-introduction-3a4367280d4e?WT.mc_id=academic-105485-koreyst).

- **CLIP**, is a model that generates embeddings, which are numerical representations of data, from images and text.

@@ -60,12 +60,12 @@ Let's start with DALL-E, which is a Generative AI model that generates images fr

Midjourney works in a similar way to DALL-E, it generates images from text prompts. Midjourney, can also be used to generate images using prompts like “a cat in a hat”, or a “dog with a mohawk”.

-

+

*Image cred Wikipedia, image generated by Midjourney*

## How does DALL-E and Midjourney Work

-First, [DALL-E](https://arxiv.org/pdf/2102.12092.pdf). DALL-E is a Generative AI model based on the transformer architecture with an *autoregressive transformer*.

+First, [DALL-E](https://arxiv.org/pdf/2102.12092.pdf?WT.mc_id=academic-105485-koreyst). DALL-E is a Generative AI model based on the transformer architecture with an *autoregressive transformer*.

An *autoregressive transformer* defines how a model generates images from text descriptions, it generates one pixel at a time, and then uses the generated pixels to generate the next pixel. Passing through multiple layers in a neural network, until the image is complete.

@@ -463,6 +463,6 @@ except openai.error.InvalidRequestError as err:

## Great Work! Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 10 where we will look at how to [build AI applications with low-code](../10-building-low-code-ai-applications/README.md?WT.mc_id=academic-105485-koreyst)

diff --git a/09-building-image-applications/notebook-azureopenai.ipynb b/09-building-image-applications/notebook-azureopenai.ipynb

index 74f41cd70..3c065e563 100644

--- a/09-building-image-applications/notebook-azureopenai.ipynb

+++ b/09-building-image-applications/notebook-azureopenai.ipynb

@@ -46,13 +46,13 @@

"\n",

"## What is DALL-E and Midjourney? \n",

"\n",

- "[DALL-E](https://openai.com/dall-e-2) and [Midjourney](https://www.midjourney.com/) are two of the most popular image generation models, they allow you to use prompts to generate images.\n",

+ "[DALL-E](https://openai.com/dall-e-2?WT.mc_id=academic-105485-koreyst) and [Midjourney](https://www.midjourney.com/?WT.mc_id=academic-105485-koreyst) are two of the most popular image generation models, they allow you to use prompts to generate images.\n",

"\n",

"### DALL-E\n",

"\n",

"Let's start with DALL-E, which is a Generative AI model that generates images from text descriptions. \n",

"\n",

- "> [DALL-E is a combination of two models, CLIP and diffused attention](https://towardsdatascience.com/openais-dall-e-and-clip-101-a-brief-introduction-3a4367280d4e). \n",

+ "> [DALL-E is a combination of two models, CLIP and diffused attention](https://towardsdatascience.com/openais-dall-e-and-clip-101-a-brief-introduction-3a4367280d4e?WT.mc_id=academic-105485-koreyst). \n",

"\n",

"- **CLIP**, is a model that generates embeddings, which are numerical representations of data, from images and text. \n",

"\n",

@@ -64,12 +64,12 @@

"\n",

" \n",

"\n",

- "\n",

+ "\n",

"*Image cred Wikipedia, image generated by Midjourney*\n",

"\n",

"## How does DALL-E and Midjourney Work \n",

"\n",

- "First, [DALL-E](https://arxiv.org/pdf/2102.12092.pdf). DALL-E is a Generative AI model based on the transformer architecture with an *autoregressive transformer*. \n",

+ "First, [DALL-E](https://arxiv.org/pdf/2102.12092.pdf?WT.mc_id=academic-105485-koreyst). DALL-E is a Generative AI model based on the transformer architecture with an *autoregressive transformer*. \n",

"\n",

"An *autoregressive transformer* defines how a model generates images from text descriptions, it generates one pixel at a time, and then uses the generated pixels to generate the next pixel. Passing through multiple layers in a neural network, until the image is complete. \n",

"\n",

diff --git a/10-building-low-code-ai-applications/README.md b/10-building-low-code-ai-applications/README.md

index c25fbe409..53f906142 100644

--- a/10-building-low-code-ai-applications/README.md

+++ b/10-building-low-code-ai-applications/README.md

@@ -1,6 +1,6 @@

# Building Low Code AI Applications

-[](https://youtu.be/XX8491SAF44)

+[](https://youtu.be/XX8491SAF44?WT.mc_id=academic-105485-koreyst)

> *(Click the image above to view video of this lesson)*

@@ -79,21 +79,21 @@ The educators at our startup have been struggling to keep track of student assig

You will build the app using Copilot in Power Apps following the steps below:

-1. Navigate to the [Power Apps](https://make.powerapps.com) home screen.

+1. Navigate to the [Power Apps](https://make.powerapps.com?WT.mc_id=academic-105485-koreyst) home screen.

1. Use the text area on the home screen to describe the app you want to build. For example, ***I want to build an app to track and manage student assignments***. Click on the **Send** button to send the prompt to the AI Copilot.

-

+

1. The AI Copilot will suggest a Dataverse Table with the fields you need to store the data you want to track and some sample data. You can then customize the table to meet your needs using the AI Copilot assistant feature through conversational steps.

> **Important**: Dataverse is the underlying data platform for Power Platform. It is a low-code data platform for storing the app's data. It is a fully managed service that securely stores data in the Microsoft Cloud and is provisioned within your Power Platform environment. It comes with built-in data governance capabilities, such as data classification, data lineage, fine-grained access control, and more. You can learn more about Dataverse [here](https://docs.microsoft.com/powerapps/maker/data-platform/data-platform-intro?WT.mc_id=academic-109639-somelezediko).

-

+

1. Educators want to send emails to the students who have submitted their assignments to keep them updated on the progress of their assignments. You can use Copilot to add a new field to the table to store the student email. For example, you can use the following prompt to add a new field to the table: ***I want to add a column to store student email***. Click on the **Send** button to send the prompt to the AI Copilot.

-

+

1. The AI Copilot will generate a new field and you can then customize the field to meet your needs.

@@ -103,7 +103,7 @@ You will build the app using Copilot in Power Apps following the steps below:

1. For educators to send emails to students, you can use Copilot to add a new screen to the app. For example, you can use the following prompt to add a new screen to the app: ***I want to add a screen to send emails to students***. Click on the **Send** button to send the prompt to the AI Copilot.

-

+

1. The AI Copilot will generate a new screen and you can then customize the screen to meet your needs.

@@ -135,19 +135,19 @@ Now that you know what Dataverse is and why you should use it, let's look at how

To create a table in Dataverse using Copilot, follow the steps below:

-1. Navigate to the [Power Apps](https://make.powerapps.com) home screen.

+1. Navigate to the [Power Apps](https://make.powerapps.com?WT.mc_id=academic-105485-koreyst) home screen.

2. On the left navigation bar, select **Tables** and then click on **Describe the new Table**.

-

+

1. On the **Describe the new Table** screen, use the text area to describe the table you want to create. For example, ***I want to create a table to store invoice information***. Click on the **Send** button to send the prompt to the AI Copilot.

-

+

1. The AI Copilot will suggest a Dataverse Table with the fields you need to store the data you want to track and some sample data. You can then customize the table to meet your needs using the AI Copilot assistant feature through conversational steps.

-

+

1. The finance team wants to send an email to the supplier to update them with the current status of their invoice. You can use Copilot to add a new field to the table to store the supplier email. For example, you can use the following prompt to add a new field to the table: ***I want to add a column to store supplier email***. Click on the **Send** button to send the prompt to the AI Copilot.

@@ -176,7 +176,7 @@ Some of the Prebuilt AI Models available in Power Platform include:

With Custom AI Models you can bring your own model into AI Builder so that it can function like any AI Builder custom model, allowing you to train the model using your own data. You can use these models to automate processes and predict outcomes in both Power Apps and Power Automate. When using your own model there are limitations that apply. Read more on these [limitations](https://learn.microsoft.com/ai-builder/byo-model?WT.mc_id=academic-105485-koreyst#limitations).

-

+

## Assignment #2 - Build an Invoice Processing Flow for Our Startup

@@ -186,11 +186,11 @@ Now that you know what AI Builder is and why you should use it, let's look at ho

To build a workflow that will help the finance team process invoices using the Invoice Processing AI Model in AI Builder, follow the steps below:

-1. Navigate to the [Power Automate](https://make.powerautomate.com) home screen.

+1. Navigate to the [Power Automate](https://make.powerautomate.com?WT.mc_id=academic-105485-koreyst) home screen.

2. Use the text area on the home screen to describe the workflow you want to build. For example, ***Process an invoice when it arrives in my mailbox***. Click on the **Send** button to send the prompt to the AI Copilot.

-

+

3. The AI Copilot will suggest the actions you need to perform the task you want to automate. You can click on the **Next** button to go through the next steps.

@@ -204,7 +204,7 @@ To build a workflow that will help the finance team process invoices using the I

8. Remove the **Condition** action from the flow because you will not be using it. It should look like the following screenshot:

-

+

9. Click on the **Add an action** button and search for **Dataverse**. Select the **Add a new row** action.

@@ -219,7 +219,7 @@ To build a workflow that will help the finance team process invoices using the I

- Status - Set the **Status** to **Pending**.

- Supplier Email - Use the **From** dynamic content from the **When a new email arrives** trigger.

-

+

12. Once you are done with the flow, click on the **Save** button to save the flow. You can then test the flow by sending an email with an invoice to the folder you specified in the trigger.

@@ -233,12 +233,12 @@ GPT models undergo extensive training on vast amounts of data, enabling them to

For example, you can build flows to automatically generate text for a variety of use cases, such as: drafts of emails, product descriptions, and more. You can also use the model to generate text for a variety of apps, such as chatbots and customer service apps that enable customer service agents to respond effectively and efficiently to customer inquiries.

-

+

To learn how to use this AI Model in Power Automate, go through the [Add intelligence with AI Builder and GPT](https://learn.microsoft.com/training/modules/ai-builder-text-generation/?WT.mc_id=academic-109639-somelezediko) module.

## Great Work! Continue Your Learning

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 11 where we will look at how to [integrate Generative AI with Function Calling](../11-integrating-with-function-calling/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/11-integrating-with-function-calling/README.md b/11-integrating-with-function-calling/README.md

index d21b6a0a6..fbec79312 100644

--- a/11-integrating-with-function-calling/README.md

+++ b/11-integrating-with-function-calling/README.md

@@ -446,6 +446,6 @@ To continue your learning of Azure Open AI Function Calling you can build:

## Great Work! Continue the Journey

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

Head over to Lesson 12 where we will look at how to [design UX for AI applications](../12-designing-ux-for-ai-applications/README.md?WT.mc_id=academic-105485-koreyst)!

diff --git a/12-designing-ux-for-ai-applications/README.md b/12-designing-ux-for-ai-applications/README.md

index 8466e2cdd..be39fb685 100644

--- a/12-designing-ux-for-ai-applications/README.md

+++ b/12-designing-ux-for-ai-applications/README.md

@@ -1,6 +1,6 @@

# Designing UX for AI Applications

-[](https://youtu.be/bO7h2_hOhR0)

+[](https://youtu.be/bO7h2_hOhR0?WT.mc_id=academic-105485-koreyst)

> *(Click the image above to view video of this lesson)*

@@ -115,6 +115,6 @@ Take any AI apps you've built so far, consider implementing the below steps in y

## Congratulations, you have finished this course

-After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this lesson, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

-Congratulations, you have completed this course! The building should not stop here. Hopefully, you have been inspired to start building your own Generative AI startup. Head over to the [Microsoft Founders Hub](https://aka.ms/genai-foundershub) and apply for the program to receive support on your journey.

+Congratulations, you have completed this course! The building should not stop here. Hopefully, you have been inspired to start building your own Generative AI startup. Head over to the [Microsoft Founders Hub](https://aka.ms/genai-foundershub?WT.mc_id=academic-105485-koreyst) and apply for the program to receive support on your journey.

diff --git a/13-continued-learning/README.md b/13-continued-learning/README.md

index 1db501727..2b15ed3ce 100644

--- a/13-continued-learning/README.md

+++ b/13-continued-learning/README.md

@@ -6,11 +6,11 @@ Are we missing a great resource? Let us know by submitting a PR!

## 🧠 One Collection to Rule Them All

-After completing this course, check out our [Generative AI Learning collection](https://aka.ms/genai-collection) to continue leveling up your Generative AI knowledge!

+After completing this course, check out our [Generative AI Learning collection](https://aka.ms/genai-collection?WT.mc_id=academic-105485-koreyst) to continue leveling up your Generative AI knowledge!

## Lesson 1 - Introduction to Generative AI and LLMs

-🔗 [How GPT models work: accessible to everyone](https://bea.stollnitz.com/blog/how-gpt-works/)

+🔗 [How GPT models work: accessible to everyone](https://bea.stollnitz.com/blog/how-gpt-works/?WT.mc_id=academic-105485-koreyst)

🔗 [Fundamentals of Generative AI](https://learn.microsoft.com/training/modules/fundamentals-generative-ai?&WT.mc_id=academic-105485-koreyst)

@@ -22,9 +22,9 @@ After completing this course, check out our [Generative AI Learning collection](

🔗 [How to use Open Source foundation models curated by Azure Machine Learning (preview) - Azure Machine Learning | Microsoft Learn](https://learn.microsoft.com/azure/machine-learning/how-to-use-foundation-models?WT.mc_id=academic-105485-koreyst)

-🔗 [The Large Language Model (LLM) Index | Sapling](https://sapling.ai/llm/index)

+🔗 [The Large Language Model (LLM) Index | Sapling](https://sapling.ai/llm/index?WT.mc_id=academic-105485-koreyst)

-🔗 [[2304.04052] Decoder-Only or Encoder-Decoder? Interpreting Language Model as a Regularized Encoder-Decoder (arxiv.org)](https://arxiv.org/abs/2304.04052)

+🔗 [[2304.04052] Decoder-Only or Encoder-Decoder? Interpreting Language Model as a Regularized Encoder-Decoder (arxiv.org)](https://arxiv.org/abs/2304.04052?WT.mc_id=academic-105485-koreyst)

🔗 [Retrieval Augmented Generation using Azure Machine Learning prompt flow](https://learn.microsoft.com/azure/machine-learning/concept-retrieval-augmented-generation?WT.mc_id=academic-105485-koreyst)

@@ -38,7 +38,7 @@ After completing this course, check out our [Generative AI Learning collection](

🔗 [Fundamentals of Responsible Generative AI](https://learn.microsoft.com/training/modules/responsible-generative-ai/?&WT.mc_id=academic-105485-koreyst)

-🔗 [Grounding LLMs](https://techcommunity.microsoft.com/t5/fasttrack-for-azure/grounding-llms/ba-p/3843857)

+🔗 [Grounding LLMs](https://techcommunity.microsoft.com/t5/fasttrack-for-azure/grounding-llms/ba-p/3843857?WT.mc_id=academic-105485-koreyst)

🔗 [Fundamentals of Responsible Generative AI](https://learn.microsoft.com/training/modules/responsible-generative-ai?WT.mc_id=academic-105485-koreyst)

@@ -58,7 +58,7 @@ After completing this course, check out our [Generative AI Learning collection](

🔗 [Prompt Engineering Overview](https://learn.microsoft.com/semantic-kernel/prompt-engineering?WT.mc_id=academic-105485-koreyst)

-🔗 [Azure OpenAI for Education Prompts](https://techcommunity.microsoft.com/t5/e1.ucation-blog/azure-openai-for-education-prompts-ai-and-a-guide-from-ethan-and/ba-p/3938259)

+🔗 [Azure OpenAI for Education Prompts](https://techcommunity.microsoft.com/t5/e1.ucation-blog/azure-openai-for-education-prompts-ai-and-a-guide-from-ethan-and/ba-p/3938259?WT.mc_id=academic-105485-koreyst)

## Lesson 5 - Creating Advanced Prompts

@@ -94,9 +94,9 @@ After completing this course, check out our [Generative AI Learning collection](

🔗 [OpenAI's CLIP paper](https://arxiv.org/pdf/2103.00020.pdf?wt.mc_id=github_S-1231_webpage_reactor)

-🔗 [OpenAI's DALL-E and CLIP 101: A Brief Introduction](https://towardsdatascience.com/openais-dall-e-and-clip-101-a-brief-introduction-3a4367280d4e)