diff --git a/.codecov.yml b/.codecov.yml

deleted file mode 100644

index 6694b2a5..00000000

--- a/.codecov.yml

+++ /dev/null

@@ -1,14 +0,0 @@

-coverage:

- precision: 2

- round: down

- range: "70...100"

-

- status:

- project: yes

- patch: no

- changes: no

-

-comment:

- layout: "header, reach, diff, flags, files, footer"

- behavior: default

- require_changes: no

diff --git a/CITATION.cff b/CITATION.cff

deleted file mode 100644

index 8e3c763a..00000000

--- a/CITATION.cff

+++ /dev/null

@@ -1,37 +0,0 @@

-# This CITATION.cff file was generated with cffinit.

-# Visit https://bit.ly/cffinit to generate yours today!

-

-cff-version: 1.2.0

-title: VisCy

-message: >-

- If you use this software, please cite it using the

- metadata from this file.

-type: software

-authors:

- - given-names: Ziwen

- family-names: Liu

- email: ziwen.liu@czbiohub.org

- affiliation: Chan Zuckerberg Biohub San Francisco

- orcid: 'https://orcid.org/0000-0001-7482-1299'

- - given-names: Eduardo

- family-names: Hirata-Miyasaki

- affiliation: Chan Zuckerberg Biohub San Francisco

- orcid: 'https://orcid.org/0000-0002-1016-2447'

- - given-names: Christian

- family-names: Foley

- - given-names: Soorya

- family-names: Pradeep

- affiliation: Chan Zuckerberg Biohub San Francisco

- orcid: 'https://orcid.org/0000-0002-0926-1480'

- - given-names: Shalin

- family-names: Mehta

- affiliation: Chan Zuckerberg Biohub San Francisco

- orcid: 'https://orcid.org/0000-0002-2542-3582'

-repository-code: 'https://github.com/mehta-lab/VisCy'

-url: 'https://github.com/mehta-lab/VisCy'

-abstract: computer vision models for single-cell phenotyping

-keywords:

- - machine-learning

- - computer-vision

- - bioimage-analysis

-license: BSD-3-Clause

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

deleted file mode 100644

index 44db5bbc..00000000

--- a/CONTRIBUTING.md

+++ /dev/null

@@ -1,31 +0,0 @@

-# Contributing to viscy

-

-## Development installation

-

-Clone or fork the repository,

-then make an editable installation with all the optional dependencies:

-

-```sh

-# in project root directory (parent folder of pyproject.toml)

-pip install -e ".[dev,visual,metrics]"

-```

-

-## CI requirements

-

-Lint with Ruff:

-

-```sh

-ruff check viscy

-```

-

-Format the code with Black:

-

-```sh

-black viscy

-```

-

-Run tests with `pytest`:

-

-```sh

-pytest -v

-```

diff --git a/LICENSE b/LICENSE

index 4520d7a9..485e6b04 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,6 +1,6 @@

BSD 3-Clause License

-Copyright (c) 2023, CZ Biohub SF

+Copyright (c) 2023, DynaCLR authors

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

diff --git a/README.md b/README.md

index 11c54191..45cb71c4 100644

--- a/README.md

+++ b/README.md

@@ -1,137 +1,82 @@

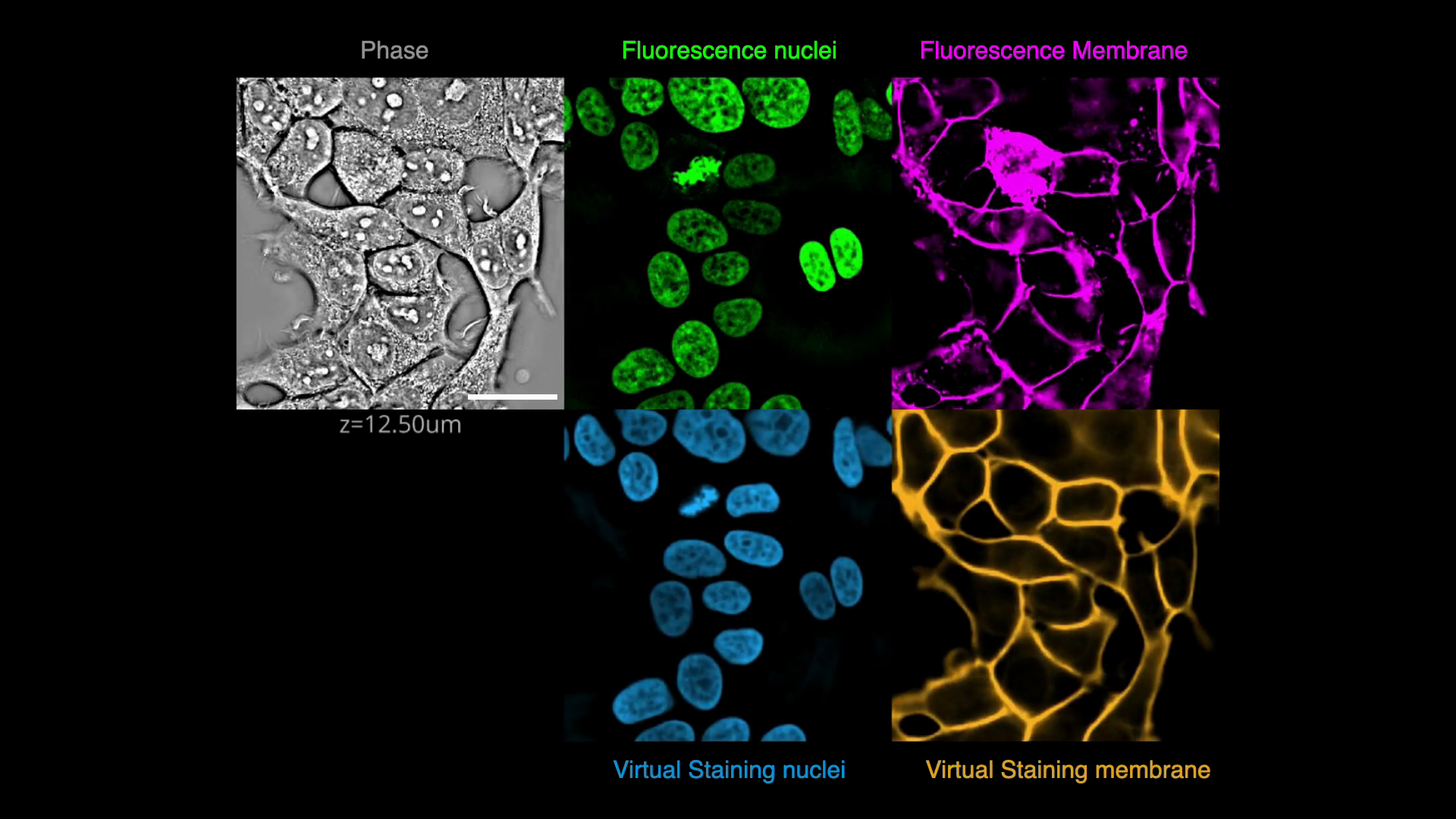

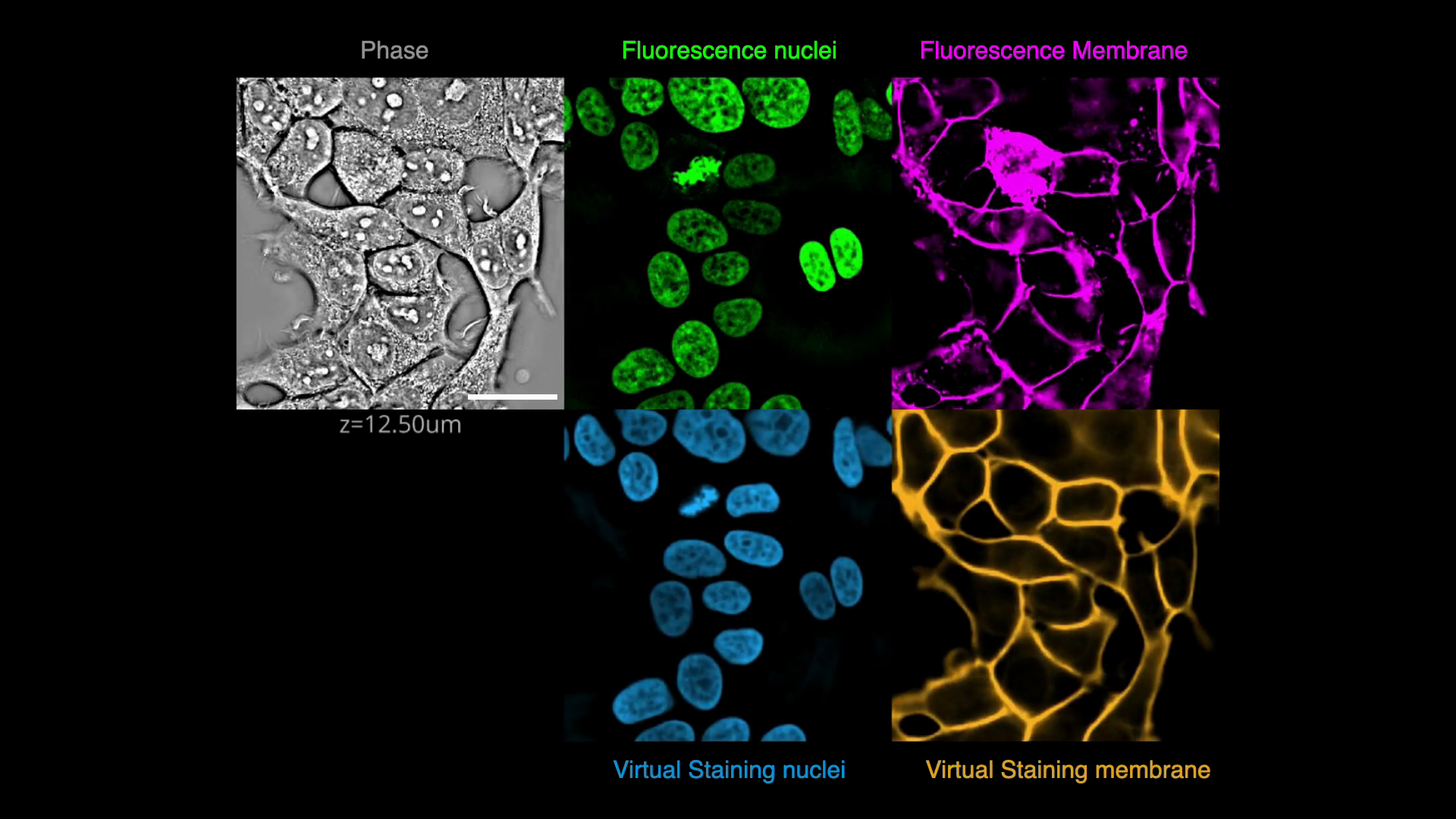

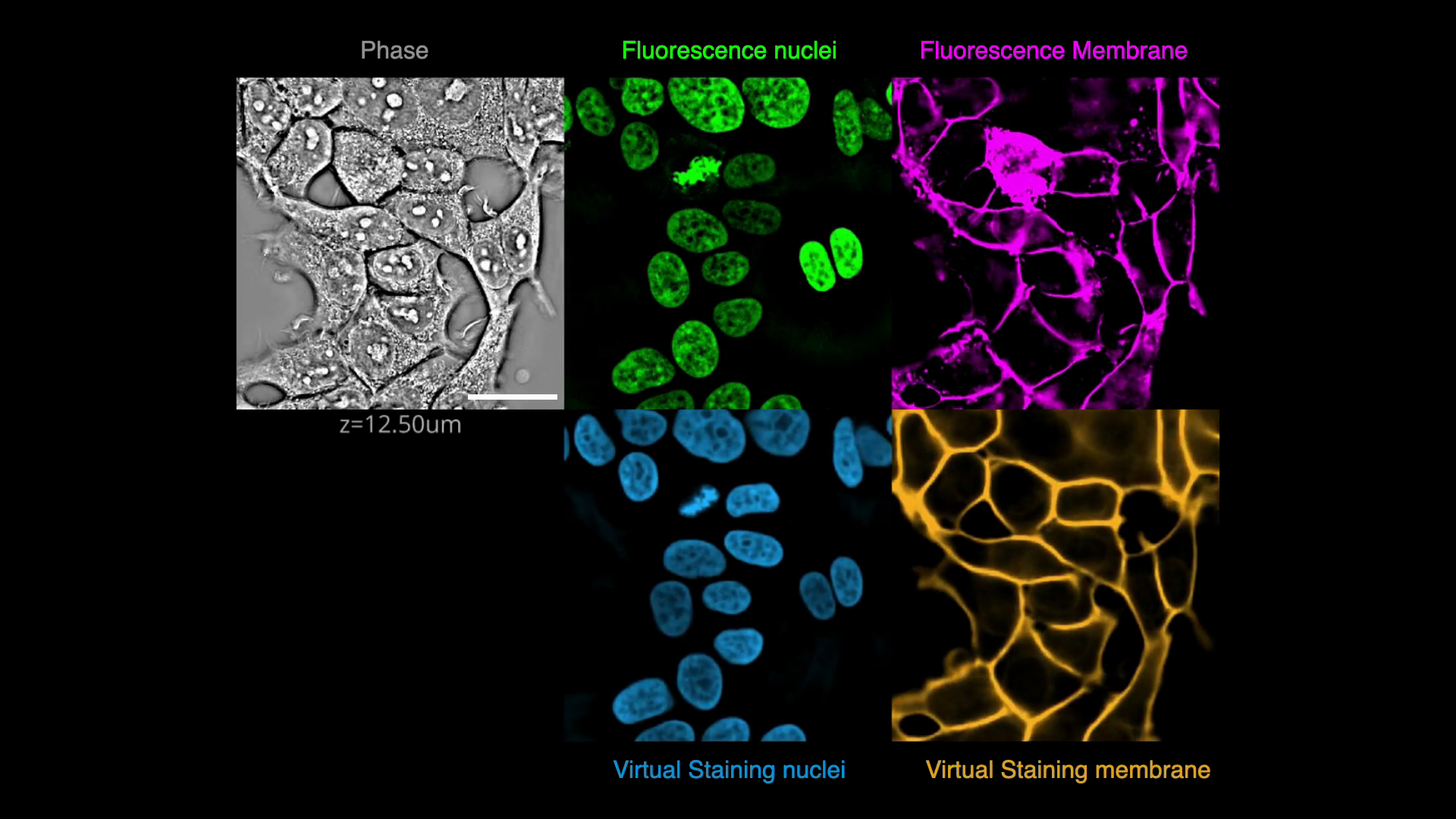

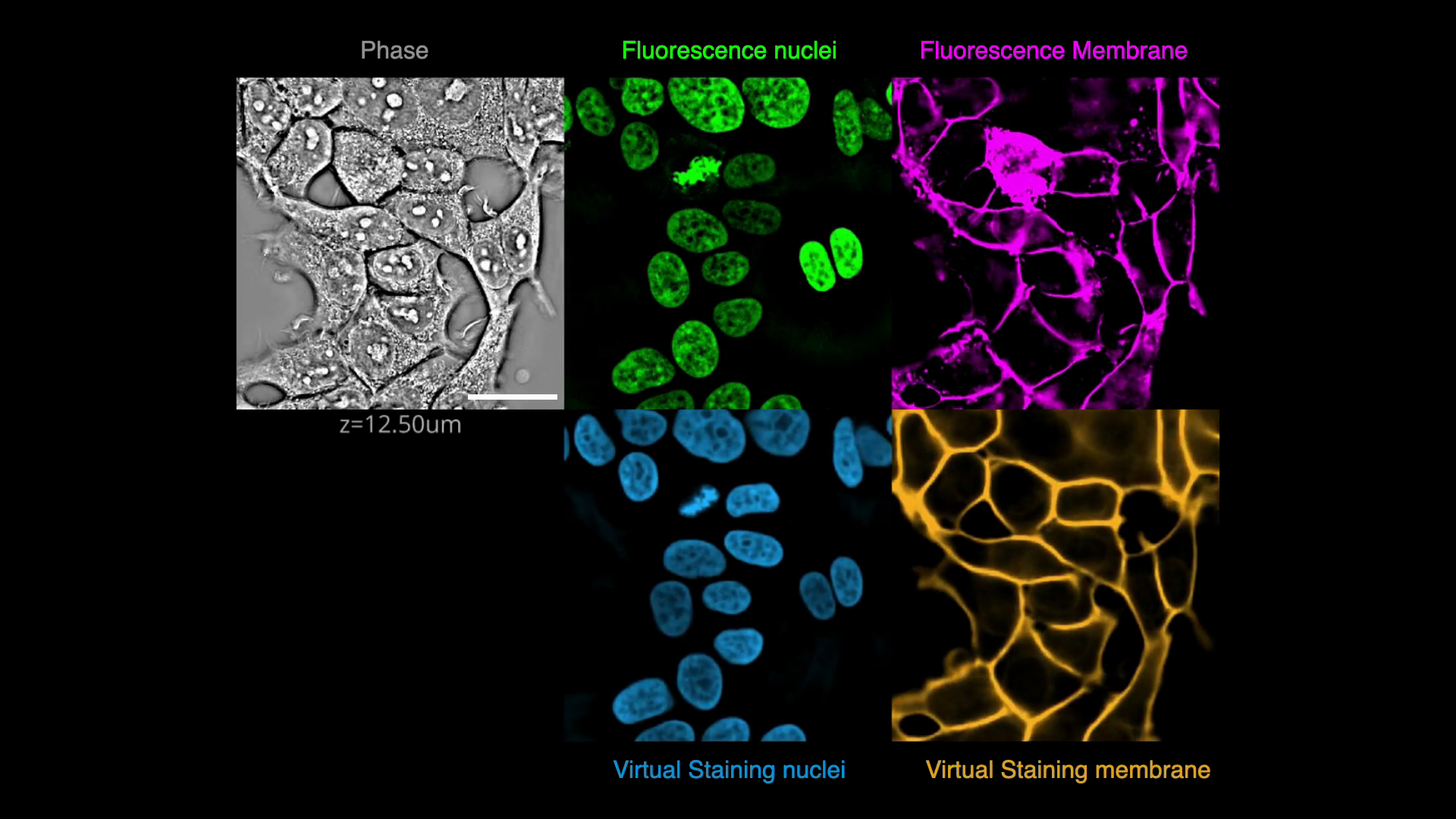

-# VisCy

-

-VisCy (abbreviation of `vision` and `cyto`) is a deep learning pipeline for training and deploying computer vision models for image-based phenotyping at single-cell resolution.

-

-This repository provides a pipeline for the following.

-- Image translation

- - Robust virtual staining of landmark organelles

-- Image classification

- - Supervised learning of of cell state (e.g. state of infection)

-- Image representation learning

- - Self-supervised learning of the cell state and organelle phenotypes

-

-> **Note:**

-> VisCy has been extensively tested for the image translation task. The code for other tasks is under active development. Frequent breaking changes are expected in the main branch as we unify the codebase for above tasks. If you are looking for a well-tested version for virtual staining, please use release `0.2.1` from PyPI.

-

-

-## Virtual staining

-

-### Demos

-- [Virtual staining exercise](https://github.com/mehta-lab/VisCy/blob/46beba4ecc8c4f312fda0b04d5229631a41b6cb5/examples/virtual_staining/dlmbl_exercise/solution.ipynb):

-Notebook illustrating how to use VisCy to train, predict and evaluate the VSCyto2D model. This notebook was developed for the [DL@MBL2024](https://github.com/dlmbl/DL-MBL-2024) course and uses UNeXt2 architecture.

-

-- [Image translation demo](https://github.com/mehta-lab/VisCy/blob/92215bc1387316f3af49c83c321b9d134d871116/examples/virtual_staining/img2img_translation/solution.ipynb): Fluorescence images can be predicted from label-free images. Can we predict label-free image from fluorescence? Find out using this notebook.

-

-- [Training Virtual Staining Models via CLI](https://github.com/mehta-lab/VisCy/wiki/virtual-staining-instructions):

-Instructions for how to train and run inference on ViSCy's virtual staining models (*VSCyto3D*, *VSCyto2D* and *VSNeuromast*).

-

-### Gallery

-Below are some examples of virtually stained images (click to play videos).

-See the full gallery [here](https://github.com/mehta-lab/VisCy/wiki/Gallery).

-

-| VSCyto3D | VSNeuromast | VSCyto2D |

-|:---:|:---:|:---:|

-| [](https://github.com/mehta-lab/VisCy/assets/67518483/d53a81eb-eb37-44f3-b522-8bd7bddc7755) | [](https://github.com/mehta-lab/VisCy/assets/67518483/4cef8333-895c-486c-b260-167debb7fd64) | [](https://github.com/mehta-lab/VisCy/assets/67518483/287737dd-6b74-4ce3-8ee5-25fbf8be0018) |

-

-### Reference

-

-The virtual staining models and training protocols are reported in our recent [preprint on robust virtual staining](https://www.biorxiv.org/content/10.1101/2024.05.31.596901).

-

-

-This package evolved from the [TensorFlow version of virtual staining pipeline](https://github.com/mehta-lab/microDL), which we reported in [this paper in 2020](https://elifesciences.org/articles/55502).

-

-

- Liu, Hirata-Miyasaki et al., 2024

-

-

- @article {Liu2024.05.31.596901,

- author = {Liu, Ziwen and Hirata-Miyasaki, Eduardo and Pradeep, Soorya and Rahm, Johanna and Foley, Christian and Chandler, Talon and Ivanov, Ivan and Woosley, Hunter and Lao, Tiger and Balasubramanian, Akilandeswari and Liu, Chad and Leonetti, Manu and Arias, Carolina and Jacobo, Adrian and Mehta, Shalin B.},

- title = {Robust virtual staining of landmark organelles},

- elocation-id = {2024.05.31.596901},

- year = {2024},

- doi = {10.1101/2024.05.31.596901},

- publisher = {Cold Spring Harbor Laboratory},

- URL = {https://www.biorxiv.org/content/early/2024/06/03/2024.05.31.596901},

- eprint = {https://www.biorxiv.org/content/early/2024/06/03/2024.05.31.596901.full.pdf},

- journal = {bioRxiv}

- }

-

-

-

-

- Guo, Yeh, Folkesson et al., 2020

-

-

- @article {10.7554/eLife.55502,

- article_type = {journal},

- title = {Revealing architectural order with quantitative label-free imaging and deep learning},

- author = {Guo, Syuan-Ming and Yeh, Li-Hao and Folkesson, Jenny and Ivanov, Ivan E and Krishnan, Anitha P and Keefe, Matthew G and Hashemi, Ezzat and Shin, David and Chhun, Bryant B and Cho, Nathan H and Leonetti, Manuel D and Han, May H and Nowakowski, Tomasz J and Mehta, Shalin B},

- editor = {Forstmann, Birte and Malhotra, Vivek and Van Valen, David},

- volume = 9,

- year = 2020,

- month = {jul},

- pub_date = {2020-07-27},

- pages = {e55502},

- citation = {eLife 2020;9:e55502},

- doi = {10.7554/eLife.55502},

- url = {https://doi.org/10.7554/eLife.55502},

- keywords = {label-free imaging, inverse algorithms, deep learning, human tissue, polarization, phase},

- journal = {eLife},

- issn = {2050-084X},

- publisher = {eLife Sciences Publications, Ltd},

- }

-

-

-

-### Library of virtual staining (VS) models

-The robust virtual staining models (i.e *VSCyto2D*, *VSCyto3D*, *VSNeuromast*), and fine-tuned models can be found [here](https://github.com/mehta-lab/VisCy/wiki/Library-of-virtual-staining-(VS)-Models)

-

-### Pipeline

-A full illustration of the virtual staining pipeline can be found [here](https://github.com/mehta-lab/VisCy/blob/dde3e27482e58a30f7c202e56d89378031180c75/docs/virtual_staining.md).

+# DynaCLR

+Implementation for ICLR 2025 submission:

+Contrastive learning of cell state dynamics in response to perturbations.

## Installation

+> **Note**:

+> The full functionality is tested on Linux `x86_64` with NVIDIA Ampere/Hopper GPUs (CUDA 12.4).

+> The CTC example configs are also tested on macOS with Apple M1 Pro SoCs (macOS 14.7).

+> Apple Silicon users need to make sure that they use

+> the `arm64` build of Python to use MPS acceleration.

+> Tested on Linux on a high-performance computing (HPC) cluster; it may not work in other environments.

+> The commands below assume a Unix-like system.

+

1. We recommend using a new Conda/virtual environment.

```sh

- conda create --name viscy python=3.10

- # OR specify a custom path since the dependencies are large:

- # conda create --prefix /path/to/conda/envs/viscy python=3.10

+ conda create --name dynaclr python=3.10

```

-2. Install a released version of VisCy from PyPI:

+2. Install the package with `pip`:

```sh

- pip install viscy

+ conda activate dynaclr

+ # in the project root directory

+ # i.e. where this README is located

+ pip install -e ".[visual,metrics]"

```

- If evaluating virtually stained images for segmentation tasks,

- install additional dependencies:

+3. Verify installation by accessing the CLI help message:

```sh

- pip install "viscy[metrics]"

+ viscy --help

```

- Visualizing the model architecture requires `visual` dependencies:

+For development installation, see [the contributing guide](./CONTRIBUTING.md).

- ```sh

- pip install "viscy[visual]"

- ```

+## Reproducing DynaCLR

-3. Verify installation by accessing the CLI help message:

+Due to anonymity requirements during the review process,

+we cannot host the large custom datasets used in the paper.

+Instead, we demonstrate how to train and evaluate the DynaCLR models with a small public dataset.

+We use the training split of a HeLa cell DIC dataset from the

+[Cell Tracking Challenge](http://data.celltrackingchallenge.net/training-datasets/DIC-C2DH-HeLa.zip)

+and convert it to OME-Zarr for convenience (`../Hela_CTC.zarr`).

+This dataset has 2 FOVs, and we use a 1:1 split for training and validation.

- ```sh

- viscy --help

- ```

+Verify the dataset download by running the following command.

+You may need to modify the path in the configuration file to point to the correct dataset location.

+

+```sh

+# modify the path in the configuration file

+# to use the correct dataset location

+iohub info /path/to/Hela_CTC.zarr

+```

+

+It should print something like:

+

+```text

+=== Summary ===

+Format: omezarr v0.4

+Axes: T (time); C (channel); Z (space); Y (space); X (space);

+Channel names: ['DIC', 'labels']

+Row names: ['0']

+Column names: ['0']

+Wells: 1

+```

+

+Training can be performed with the following command:

+

+```sh

+python -m viscy.cli.contrastive_triplet fit -c ./examples/fit_ctc.yml

+```

-For development installation, see [the contributing guide](https://github.com/mehta-lab/VisCy/blob/main/CONTRIBUTING.md).

+The TensorBoard logs and model checkpoints will be saved to the `./lightning_logs` directory.

-## Additional Notes

-The pipeline is built using the [PyTorch Lightning](https://www.pytorchlightning.ai/index.html) framework.

-The [iohub](https://github.com/czbiohub-sf/iohub) library is used

-for reading and writing data in [OME-Zarr](https://www.nature.com/articles/s41592-021-01326-w) format.

+To predict features for the entire dataset with the trained model, run:

-The full functionality is tested on Linux `x86_64` with NVIDIA Ampere GPUs (CUDA 12.4).

-Some features (e.g. mixed precision and distributed training) may not be available with other setups,

-see [PyTorch documentation](https://pytorch.org) for details.

\ No newline at end of file

+```sh

+python -m viscy.cli.contrastive_triplet predict -c ./examples/predict_ctc.yml

+```

diff --git a/applications/contrastive_phenotyping/evaluation/PC_vs_CF.py b/applications/contrastive_phenotyping/evaluation/PC_vs_CF.py

deleted file mode 100644

index f43b121b..00000000

--- a/applications/contrastive_phenotyping/evaluation/PC_vs_CF.py

+++ /dev/null

@@ -1,457 +0,0 @@

-""" Script to compute the correlation between PCA and UMAP features and computed features

-* finds the computed features best representing the PCA and UMAP components

-* outputs a heatmap of the correlation between PCA and UMAP features and computed features

-"""

-

-# %%

-from pathlib import Path

-import sys

-import os

-

-sys.path.append("/hpc/mydata/soorya.pradeep/scratch/viscy_infection_phenotyping/VisCy")

-

-import numpy as np

-import pandas as pd

-from sklearn.decomposition import PCA

-from umap import UMAP

-from sklearn.preprocessing import StandardScaler

-

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.evaluation import (

- FeatureExtractor as FE,

-)

-from viscy.representation.evaluation import dataset_of_tracks

-

-import matplotlib.pyplot as plt

-import seaborn as sns

-

-from scipy.stats import spearmanr

-import pandas as pd

-import plotly.express as px

-

-# %%

-features_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-data_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr"

-)

-tracks_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/track_test.zarr"

-)

-

-# %%

-

-source_channel = ["Phase3D", "RFP"]

-z_range = (28, 43)

-normalizations = None

-# fov_name = "/B/4/5"

-# track_id = 11

-

-embedding_dataset = read_embedding_dataset(features_path)

-embedding_dataset

-

-# load all unprojected features:

-features = embedding_dataset["features"]

-

-# %% PCA analysis of the features

-

-pca = PCA(n_components=5)

-pca_features = pca.fit_transform(features.values)

-features = (

- features.assign_coords(PCA1=("sample", pca_features[:, 0]))

- .assign_coords(PCA2=("sample", pca_features[:, 1]))

- .assign_coords(PCA3=("sample", pca_features[:, 2]))

- .assign_coords(PCA4=("sample", pca_features[:, 3]))

- .assign_coords(PCA5=("sample", pca_features[:, 4]))

- .set_index(sample=["PCA1", "PCA2", "PCA3", "PCA4", "PCA5"], append=True)

-)

-

-# %% convert the xarray to dataframe structure and add columns for computed features

-features_df = features.to_dataframe()

-features_df = features_df.drop(columns=["features"])

-df = features_df.drop_duplicates()

-features = df.reset_index(drop=True)

-

-features = features[features["fov_name"].str.startswith("/B/")]

-

-features["Phase Symmetry Score"] = np.nan

-features["Fluor Symmetry Score"] = np.nan

-features["Sensor Area"] = np.nan

-features["Masked Sensor Intensity"] = np.nan

-features["Entropy Phase"] = np.nan

-features["Entropy Fluor"] = np.nan

-features["Contrast Phase"] = np.nan

-features["Dissimilarity Phase"] = np.nan

-features["Homogeneity Phase"] = np.nan

-features["Contrast Fluor"] = np.nan

-features["Dissimilarity Fluor"] = np.nan

-features["Homogeneity Fluor"] = np.nan

-features["Phase IQR"] = np.nan

-features["Fluor Mean Intensity"] = np.nan

-features["Phase Standard Deviation"] = np.nan

-features["Fluor Standard Deviation"] = np.nan

-features["Phase radial profile"] = np.nan

-features["Fluor radial profile"] = np.nan

-

-# %% compute the computed features and add them to the dataset

-

-fov_names_list = features["fov_name"].unique()

-unique_fov_names = sorted(list(set(fov_names_list)))

-

-

-for fov_name in unique_fov_names:

-

- unique_track_ids = features[features["fov_name"] == fov_name]["track_id"].unique()

- unique_track_ids = list(set(unique_track_ids))

-

- for track_id in unique_track_ids:

-

- prediction_dataset = dataset_of_tracks(

- data_path,

- tracks_path,

- [fov_name],

- [track_id],

- source_channel=source_channel,

- )

-

- whole = np.stack([p["anchor"] for p in prediction_dataset])

- phase = whole[:, 0, 3]

- fluor = np.max(whole[:, 1], axis=1)

-

- for t in range(phase.shape[0]):

- # Compute Fourier descriptors for phase image

- phase_descriptors = FE.compute_fourier_descriptors(phase[t])

- # Analyze symmetry of phase image

- phase_symmetry_score = FE.analyze_symmetry(phase_descriptors)

-

- # Compute Fourier descriptors for fluor image

- fluor_descriptors = FE.compute_fourier_descriptors(fluor[t])

- # Analyze symmetry of fluor image

- fluor_symmetry_score = FE.analyze_symmetry(fluor_descriptors)

-

- # Compute area of sensor

- masked_intensity, area = FE.compute_area(fluor[t])

-

- # Compute higher frequency features using spectral entropy

- entropy_phase = FE.compute_spectral_entropy(phase[t])

- entropy_fluor = FE.compute_spectral_entropy(fluor[t])

-

- # Compute texture analysis using GLCM

- contrast_phase, dissimilarity_phase, homogeneity_phase = (

- FE.compute_glcm_features(phase[t])

- )

- contrast_fluor, dissimilarity_fluor, homogeneity_fluor = (

- FE.compute_glcm_features(fluor[t])

- )

-

- # Compute interqualtile range of pixel intensities

- iqr = FE.compute_iqr(phase[t])

-

- # Compute mean pixel intensity

- fluor_mean_intensity = FE.compute_mean_intensity(fluor[t])

-

- # Compute standard deviation of pixel intensities

- phase_std_dev = FE.compute_std_dev(phase[t])

- fluor_std_dev = FE.compute_std_dev(fluor[t])

-

- # Compute radial intensity gradient

- phase_radial_profile = FE.compute_radial_intensity_gradient(phase[t])

- fluor_radial_profile = FE.compute_radial_intensity_gradient(fluor[t])

-

- # update the features dataframe with the computed features

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Fluor Symmetry Score",

- ] = fluor_symmetry_score

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase Symmetry Score",

- ] = phase_symmetry_score

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Sensor Area",

- ] = area

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Masked Sensor Intensity",

- ] = masked_intensity

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Entropy Phase",

- ] = entropy_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Entropy Fluor",

- ] = entropy_fluor

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Contrast Phase",

- ] = contrast_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Dissimilarity Phase",

- ] = dissimilarity_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Homogeneity Phase",

- ] = homogeneity_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Contrast Fluor",

- ] = contrast_fluor

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Dissimilarity Fluor",

- ] = dissimilarity_fluor

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Homogeneity Fluor",

- ] = homogeneity_fluor

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase IQR",

- ] = iqr

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Fluor Mean Intensity",

- ] = fluor_mean_intensity

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase Standard Deviation",

- ] = phase_std_dev

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Fluor Standard Deviation",

- ] = fluor_std_dev

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase radial profile",

- ] = phase_radial_profile

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Fluor radial profile",

- ] = fluor_radial_profile

-

-# %%

-

-# Save the features dataframe to a CSV file

-features.to_csv(

- "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/features_twoChan.csv",

- index=False,

-)

-

-# # read the features dataframe from the CSV file

-# features = pd.read_csv(

-# "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/features_twoChan.csv"

-# )

-

-# remove the rows with missing values

-features = features.dropna()

-

-# sub_features = features[features["Time"] == 20]

-feature_df_removed = features.drop(

- columns=["fov_name", "track_id", "t", "id", "parent_track_id", "parent_id"]

-)

-

-# Compute correlation between PCA features and computed features

-correlation = feature_df_removed.corr(method="spearman")

-

-# %% display PCA correlation as a heatmap

-

-plt.figure(figsize=(20, 5))

-sns.heatmap(

- correlation.drop(columns=["PCA1", "PCA2", "PCA3", "PCA4", "PCA5"]).loc[

- "PCA1":"PCA5", :

- ],

- annot=True,

- cmap="coolwarm",

- fmt=".2f",

-)

-plt.title("Correlation between PCA features and computed features")

-plt.xlabel("Computed Features")

-plt.ylabel("PCA Features")

-plt.savefig(

- "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/PC_vs_CF_2chan_pca.svg"

-)

-

-

-# %% plot PCA vs set of computed features

-

-set_features = [

- "Fluor radial profile",

- "Homogeneity Phase",

- "Phase IQR",

- "Phase Standard Deviation",

- "Sensor Area",

- "Homogeneity Fluor",

- "Contrast Fluor",

- "Phase radial profile",

-]

-

-plt.figure(figsize=(8, 10))

-sns.heatmap(

- correlation.loc[set_features, "PCA1":"PCA5"],

- annot=True,

- cmap="coolwarm",

- fmt=".2f",

- vmin=-1,

- vmax=1,

-)

-

-plt.savefig(

- "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/PC_vs_CF_2chan_pca_setfeatures.svg"

-)

-

-# %% find the cell patches with the highest and lowest value in each feature

-

-def save_patches(fov_name, track_id):

- data_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr"

- )

- tracks_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/track_test.zarr"

- )

- source_channel = ["Phase3D", "RFP"]

- prediction_dataset = dataset_of_tracks(

- data_path,

- tracks_path,

- [fov_name],

- [track_id],

- source_channel=source_channel,

- )

- whole = np.stack([p["anchor"] for p in prediction_dataset])

- phase = whole[:, 0]

- fluor = whole[:, 1]

- out_dir = "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/data/computed_features/"

- fov_name_out = fov_name.replace("/", "_")

- np.save(

- (os.path.join(out_dir, "phase" + fov_name_out + "_" + str(track_id) + ".npy")),

- phase,

- )

- np.save(

- (os.path.join(out_dir, "fluor" + fov_name_out + "_" + str(track_id) + ".npy")),

- fluor,

- )

-

-

-# PCA1: Fluor radial profile

-highest_fluor_radial_profile = features.loc[features["Fluor radial profile"].idxmax()]

-print("Row with highest 'Fluor radial profile':")

-# print(highest_fluor_radial_profile)

-print(

- f"fov_name: {highest_fluor_radial_profile['fov_name']}, time: {highest_fluor_radial_profile['t']}"

-)

-save_patches(

- highest_fluor_radial_profile["fov_name"], highest_fluor_radial_profile["track_id"]

-)

-

-lowest_fluor_radial_profile = features.loc[features["Fluor radial profile"].idxmin()]

-print("Row with lowest 'Fluor radial profile':")

-# print(lowest_fluor_radial_profile)

-print(

- f"fov_name: {lowest_fluor_radial_profile['fov_name']}, time: {lowest_fluor_radial_profile['t']}"

-)

-save_patches(

- lowest_fluor_radial_profile["fov_name"], lowest_fluor_radial_profile["track_id"]

-)

-

-# PCA2: Entropy phase

-highest_entropy_phase = features.loc[features["Entropy Phase"].idxmax()]

-print("Row with highest 'Entropy Phase':")

-# print(highest_entropy_phase)

-print(

- f"fov_name: {highest_entropy_phase['fov_name']}, time: {highest_entropy_phase['t']}"

-)

-save_patches(highest_entropy_phase["fov_name"], highest_entropy_phase["track_id"])

-

-lowest_entropy_phase = features.loc[features["Entropy Phase"].idxmin()]

-print("Row with lowest 'Entropy Phase':")

-# print(lowest_entropy_phase)

-print(

- f"fov_name: {lowest_entropy_phase['fov_name']}, time: {lowest_entropy_phase['t']}"

-)

-save_patches(lowest_entropy_phase["fov_name"], lowest_entropy_phase["track_id"])

-

-# PCA3: Phase IQR

-highest_phase_iqr = features.loc[features["Phase IQR"].idxmax()]

-print("Row with highest 'Phase IQR':")

-# print(highest_phase_iqr)

-print(f"fov_name: {highest_phase_iqr['fov_name']}, time: {highest_phase_iqr['t']}")

-save_patches(highest_phase_iqr["fov_name"], highest_phase_iqr["track_id"])

-

-tenth_lowest_phase_iqr = features.nsmallest(10, "Phase IQR").iloc[9]

-print("Row with tenth lowest 'Phase IQR':")

-# print(tenth_lowest_phase_iqr)

-print(

- f"fov_name: {tenth_lowest_phase_iqr['fov_name']}, time: {tenth_lowest_phase_iqr['t']}"

-)

-save_patches(tenth_lowest_phase_iqr["fov_name"], tenth_lowest_phase_iqr["track_id"])

-

-# PCA4: Phase Standard Deviation

-highest_phase_std_dev = features.loc[features["Phase Standard Deviation"].idxmax()]

-print("Row with highest 'Phase Standard Deviation':")

-# print(highest_phase_std_dev)

-print(

- f"fov_name: {highest_phase_std_dev['fov_name']}, time: {highest_phase_std_dev['t']}"

-)

-save_patches(highest_phase_std_dev["fov_name"], highest_phase_std_dev["track_id"])

-

-lowest_phase_std_dev = features.loc[features["Phase Standard Deviation"].idxmin()]

-print("Row with lowest 'Phase Standard Deviation':")

-# print(lowest_phase_std_dev)

-print(

- f"fov_name: {lowest_phase_std_dev['fov_name']}, time: {lowest_phase_std_dev['t']}"

-)

-save_patches(lowest_phase_std_dev["fov_name"], lowest_phase_std_dev["track_id"])

-

-# PCA5: Sensor area

-highest_sensor_area = features.loc[features["Sensor Area"].idxmax()]

-print("Row with highest 'Sensor Area':")

-# print(highest_sensor_area)

-print(f"fov_name: {highest_sensor_area['fov_name']}, time: {highest_sensor_area['t']}")

-save_patches(highest_sensor_area["fov_name"], highest_sensor_area["track_id"])

-

-tenth_lowest_sensor_area = features.nsmallest(10, "Sensor Area").iloc[9]

-print("Row with tenth lowest 'Sensor Area':")

-# print(tenth_lowest_sensor_area)

-print(

- f"fov_name: {tenth_lowest_sensor_area['fov_name']}, time: {tenth_lowest_sensor_area['t']}"

-)

-save_patches(tenth_lowest_sensor_area["fov_name"], tenth_lowest_sensor_area["track_id"])

diff --git a/applications/contrastive_phenotyping/evaluation/PC_vs_CF_singleChannel.py b/applications/contrastive_phenotyping/evaluation/PC_vs_CF_singleChannel.py

deleted file mode 100644

index aac8855c..00000000

--- a/applications/contrastive_phenotyping/evaluation/PC_vs_CF_singleChannel.py

+++ /dev/null

@@ -1,252 +0,0 @@

-""" Script to compute the correlation between PCA and UMAP features and computed features

-* finds the computed features best representing the PCA and UMAP components

-* outputs a heatmap of the correlation between PCA and UMAP features and computed features

-"""

-

-# %%

-from pathlib import Path

-import sys

-

-sys.path.append("/hpc/mydata/soorya.pradeep/scratch/viscy_infection_phenotyping/VisCy")

-

-import numpy as np

-import pandas as pd

-from sklearn.decomposition import PCA

-from umap import UMAP

-from sklearn.preprocessing import StandardScaler

-

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.evaluation import (

- FeatureExtractor as FE,

-)

-from viscy.representation.evaluation import dataset_of_tracks

-

-import matplotlib.pyplot as plt

-import seaborn as sns

-

-from scipy.stats import spearmanr

-import pandas as pd

-import plotly.express as px

-

-# %%

-features_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval_phase/predictions/epoch_186/1chan_128patch_186ckpt_Febtest.zarr"

-)

-data_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr"

-)

-tracks_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/9-lineage-cell-division/lineages_gt/track.zarr"

-)

-

-# %%

-

-source_channel = ["Phase3D"]

-z_range = (28, 43)

-normalizations = None

-# fov_name = "/B/4/5"

-# track_id = 11

-

-embedding_dataset = read_embedding_dataset(features_path)

-embedding_dataset

-

-# load all unprojected features:

-features = embedding_dataset["features"]

-

-# %% PCA analysis of the features

-

-pca = PCA(n_components=3)

-embedding = pca.fit_transform(features.values)

-features = (

- features.assign_coords(PCA1=("sample", embedding[:, 0]))

- .assign_coords(PCA2=("sample", embedding[:, 1]))

- .assign_coords(PCA3=("sample", embedding[:, 2]))

- .set_index(sample=["PCA1", "PCA2", "PCA3"], append=True)

-)

-

-# %% convert the xarray to dataframe structure and add columns for computed features

-features_df = features.to_dataframe()

-features_df = features_df.drop(columns=["features"])

-df = features_df.drop_duplicates()

-features = df.reset_index(drop=True)

-

-features = features[features["fov_name"].str.startswith("/B/")]

-

-features["Phase Symmetry Score"] = np.nan

-features["Entropy Phase"] = np.nan

-features["Contrast Phase"] = np.nan

-features["Dissimilarity Phase"] = np.nan

-features["Homogeneity Phase"] = np.nan

-features["Phase IQR"] = np.nan

-features["Phase Standard Deviation"] = np.nan

-features["Phase radial profile"] = np.nan

-

-# %% compute the computed features and add them to the dataset

-

-fov_names_list = features["fov_name"].unique()

-unique_fov_names = sorted(list(set(fov_names_list)))

-

-for fov_name in unique_fov_names:

-

- unique_track_ids = features[features["fov_name"] == fov_name]["track_id"].unique()

- unique_track_ids = list(set(unique_track_ids))

-

- for track_id in unique_track_ids:

-

- # load the image patches

-

- prediction_dataset = dataset_of_tracks(

- data_path,

- tracks_path,

- [fov_name],

- [track_id],

- source_channel=source_channel,

- )

-

- whole = np.stack([p["anchor"] for p in prediction_dataset])

- phase = whole[:, 0, 3]

-

- for t in range(phase.shape[0]):

- # Compute Fourier descriptors for phase image

- phase_descriptors = FE.compute_fourier_descriptors(phase[t])

- # Analyze symmetry of phase image

- phase_symmetry_score = FE.analyze_symmetry(phase_descriptors)

-

- # Compute higher frequency features using spectral entropy

- entropy_phase = FE.compute_spectral_entropy(phase[t])

-

- # Compute texture analysis using GLCM

- contrast_phase, dissimilarity_phase, homogeneity_phase = (

- FE.compute_glcm_features(phase[t])

- )

-

- # Compute interquartile range of pixel intensities

- iqr = FE.compute_iqr(phase[t])

-

- # Compute standard deviation of pixel intensities

- phase_std_dev = FE.compute_std_dev(phase[t])

-

- # Compute radial intensity gradient

- phase_radial_profile = FE.compute_radial_intensity_gradient(phase[t])

-

- # update the features dataframe with the computed features

-

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase Symmetry Score",

- ] = phase_symmetry_score

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Entropy Phase",

- ] = entropy_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Contrast Phase",

- ] = contrast_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Dissimilarity Phase",

- ] = dissimilarity_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Homogeneity Phase",

- ] = homogeneity_phase

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase IQR",

- ] = iqr

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase Standard Deviation",

- ] = phase_std_dev

- features.loc[

- (features["fov_name"] == fov_name)

- & (features["track_id"] == track_id)

- & (features["t"] == t),

- "Phase radial profile",

- ] = phase_radial_profile

-

-# %%

-# Save the features dataframe to a CSV file

-features.to_csv(

- "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/features_oneChan.csv",

- index=False,

-)

-

-# read the csv file

-# features = pd.read_csv(

-# "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/features_oneChan.csv"

-# )

-

-# remove the rows with missing values

-features = features.dropna()

-

-# sub_features = features[features["Time"] == 20]

-feature_df_removed = features.drop(

- columns=["fov_name", "track_id", "t", "id", "parent_track_id", "parent_id"]

-)

-

-# Compute correlation between PCA features and computed features

-correlation = feature_df_removed.corr(method="spearman")

-

-# %% calculate the p-value and draw volcano plot to show the significance of the correlation

-

-p_values = pd.DataFrame(index=correlation.index, columns=correlation.columns)

-

-for i in correlation.index:

- for j in correlation.columns:

- if i != j:

- p_values.loc[i, j] = spearmanr(

- feature_df_removed[i], feature_df_removed[j]

- )[1]

-

-p_values = p_values.astype(float)

-

-# %% draw an interactive volcano plot showing -log10(p-value) vs correlation

-

-# Flatten the correlation and p-values matrices and create a DataFrame

-correlation_flat = correlation.values.flatten()

-p_values_flat = p_values.values.flatten()

-# Create a list of feature names for the flattened correlation and p-values

-feature_names = [f"{i}_{j}" for i in correlation.index for j in correlation.columns]

-

-data = pd.DataFrame(

- {

- "Correlation": correlation_flat,

- "-log10(p-value)": -np.log10(p_values_flat),

- "feature_names": feature_names,

- }

-)

-

-# Create an interactive scatter plot using Plotly

-fig = px.scatter(

- data,

- x="Correlation",

- y="-log10(p-value)",

- title="Volcano plot showing significance of correlation",

- labels={"Correlation": "Correlation", "-log10(p-value)": "-log10(p-value)"},

- opacity=0.5,

- hover_data=["feature_names"],

-)

-

-fig.show()

-# Save the interactive volcano plot as an HTML file

-fig.write_html(

- "/hpc/projects/comp.micro/infected_cell_imaging/Single_cell_phenotyping/ContrastiveLearning/Figure_panels/cell_division/volcano_plot_1chan.html"

-)

-

-# %%
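Review note on the volcano-plot step in the script above: the diagonal of `p_values` is never filled, so it stays NaN, and a p-value that underflows to 0 maps to infinity under `-log10`. The following is a minimal sketch of one way to guard the transform, assuming NumPy only. The helper name `neg_log10_p` and the `floor` parameter are hypothetical and are not part of the deleted script.

```python
import numpy as np


def neg_log10_p(p_values, floor=1e-300):
    """Return -log10(p), keeping NaN entries and clipping p to [floor, 1].

    `floor` is an illustrative lower bound, not a value from the original script.
    """
    p = np.asarray(p_values, dtype=float)
    # np.clip propagates NaN, so unfilled entries (e.g. the diagonal) stay NaN.
    clipped = np.clip(p, floor, 1.0)
    return -np.log10(clipped)


scores = neg_log10_p([0.01, 0.0, np.nan, 1.0])
print(scores)
```

With this guard, zero p-values land at a finite ceiling on the y axis instead of being dropped or plotted at infinity, while NaN entries can still be filtered out explicitly before plotting.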

diff --git a/applications/contrastive_phenotyping/evaluation/analyze_embeddings.py b/applications/contrastive_phenotyping/evaluation/analyze_embeddings.py

deleted file mode 100644

index b7dda83c..00000000

--- a/applications/contrastive_phenotyping/evaluation/analyze_embeddings.py

+++ /dev/null

@@ -1,114 +0,0 @@

-# %% Imports

-from pathlib import Path

-import seaborn as sns

-import matplotlib.pyplot as plt

-import plotly.express as px

-import pandas as pd

-import numpy as np

-from sklearn.preprocessing import StandardScaler

-from sklearn.decomposition import PCA

-from sklearn.preprocessing import StandardScaler

-

-

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.evaluation import load_annotation, compute_pca, compute_umap

-

-# %% Jupyter magic command for autoreloading modules

-# ruff: noqa

-# fmt: off

-%load_ext autoreload

-%autoreload 2

-# fmt: on

-# ruff: noqa

-# %% Paths and parameters

-

-path_embedding = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_51.zarr"

-)

-path_annotations_infection = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/supervised_inf_pred/extracted_inf_state.csv"

-)

-path_annotations_division = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/"

-)

-

-path_tracks = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_06_13_SEC61_TOMM20_ZIKV_DENGUE_1/4.1-tracking/test_tracking_4.zarr"

-)

-

-path_images = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_06_13_SEC61_TOMM20_ZIKV_DENGUE_1/2-register/registered_chunked.zarr"

-)

-

-# %% Load embeddings and annotations.

-

-dataset = read_embedding_dataset(path_embedding)

-# load all unprojected features:

-features = dataset["features"]

-# or select a well:

-# features = features[features["fov_name"].str.contains("B/4")]

-features

-

-feb_infection = load_annotation(

- dataset,

- path_annotations_infection,

- "infection_state",

- {0.0: "background", 1.0: "uninfected", 2.0: "infected"},

-)

-

-# %% interactive quality control: principal components

-# Compute principal components and ranks of embeddings and projections.

-

-# compute rank

-rank_features = np.linalg.matrix_rank(dataset["features"].values)

-rank_projections = np.linalg.matrix_rank(dataset["projections"].values)

-

-pca_features, pca_projections, pca_df = compute_pca(dataset)

-

-# Plot explained variance and rank

-plt.plot(

- pca_features.explained_variance_ratio_, label=f"features, rank={rank_features}"

-)

-plt.plot(

- pca_projections.explained_variance_ratio_,

- label=f"projections, rank={rank_projections}",

-)

-plt.legend()

-plt.xlabel("n_components")

-plt.ylabel("explained variance ratio")

-plt.xlim([0, 50])

-plt.show()

-

-# Density plot of first two principal components of features and projections.

-fig, ax = plt.subplots(1, 2, figsize=(10, 5))

-sns.kdeplot(data=pca_df, x="PCA1", y="PCA2", ax=ax[0], fill=True, cmap="Blues")

-sns.kdeplot(data=pca_df, x="PCA1_proj", y="PCA2_proj", ax=ax[1], fill=True, cmap="Reds")

-ax[0].set_title("Density plot of PCA1 vs PCA2 (features)")

-ax[1].set_title("Density plot of PCA1 vs PCA2 (projections)")

-plt.show()

-

-# %% interactive quality control: UMAP

-# Compute UMAP embeddings

-umap_features, umap_projections, umap_df = compute_umap(dataset)

-

-# %%

-# Plot UMAP embeddings as density plots

-fig, ax = plt.subplots(1, 2, figsize=(10, 5))

-sns.kdeplot(data=umap_df, x="UMAP1", y="UMAP2", ax=ax[0], fill=True, cmap="Blues")

-sns.kdeplot(data=umap_df, x="UMAP1_proj", y="UMAP2_proj", ax=ax[1], fill=True, cmap="Reds")

-ax[0].set_title("Density plot of UMAP1 vs UMAP2 (features)")

-ax[1].set_title("Density plot of UMAP1 vs UMAP2 (projections)")

-plt.show()

-

-# %% interactive quality control: pairwise distances

-

-

-# %% Evaluation: infection score

-

-## Overlay UMAP and infection state

-## Linear classification accuracy

-## Clustering accuracy

-

-# %% Evaluation: cell division

-

-# %% Evaluation: correlation between principal components and computed features
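Review note on the quality-control cell above, which plots the explained variance ratio next to the matrix rank: the two quantities are related through the singular values of the data. The sketch below illustrates that relationship on synthetic data, assuming NumPy only. It is not the implementation of `compute_pca` in viscy, whose internals (for example any feature scaling) are not shown in this diff; the function name `explained_variance_ratio` is hypothetical.

```python
import numpy as np


def explained_variance_ratio(features):
    """Fraction of total variance captured by each principal component."""
    x = np.asarray(features, dtype=float)
    centered = x - x.mean(axis=0, keepdims=True)
    # Squared singular values of the centered data are proportional
    # to the variance along each principal axis.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values**2
    return variances / variances.sum()


rng = np.random.default_rng(0)
# Rank-2 data embedded in 5 dimensions (synthetic, for illustration only).
data = rng.normal(size=(100, 2)) @ rng.normal(size=(2, 5))
ratio = explained_variance_ratio(data)
rank = np.linalg.matrix_rank(data)
print(rank, ratio)
```

For low-rank data, the ratio drops to numerical zero after the first `rank` components, which is the pattern the explained-variance plot is meant to reveal.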

diff --git a/applications/contrastive_phenotyping/evaluation/cosine_similarity.py b/applications/contrastive_phenotyping/evaluation/cosine_similarity.py

deleted file mode 100644

index 78a4906c..00000000

--- a/applications/contrastive_phenotyping/evaluation/cosine_similarity.py

+++ /dev/null

@@ -1,546 +0,0 @@

-# %%

-# Import necessary libraries, try euclidean distance for both features and

-from pathlib import Path

-

-import matplotlib.pyplot as plt

-import numpy as np

-import pandas as pd

-import seaborn as sns

-from sklearn.preprocessing import StandardScaler

-from umap import UMAP

-

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.evaluation import (

- calculate_cosine_similarity_cell,

- compute_displacement,

- compute_displacement_mean_std,

-)

-

-# %% Paths and parameters.

-

-

-features_path_30_min = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-

-

-feature_path_no_track = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr"

-)

-

-

-features_path_any_time = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_difcell_randomtime_sampling/Ver2_updateTracking_refineModel/predictions/Feb_2chan_128patch_32projDim/2chan_128patch_56ckpt_FebTest.zarr"

-)

-

-

-data_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr"

-)

-

-

-tracks_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/track_test.zarr"

-)

-

-

-# %% Load embedding datasets for all three sampling

-fov_name = "/B/4/6"

-track_id = 52

-

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-embedding_dataset_any_time = read_embedding_dataset(features_path_any_time)

-

-# Calculate cosine similarities for each sampling

-time_points_30_min, cosine_similarities_30_min = calculate_cosine_similarity_cell(

- embedding_dataset_30_min, fov_name, track_id

-)

-time_points_no_track, cosine_similarities_no_track = calculate_cosine_similarity_cell(

- embedding_dataset_no_track, fov_name, track_id

-)

-time_points_any_time, cosine_similarities_any_time = calculate_cosine_similarity_cell(

- embedding_dataset_any_time, fov_name, track_id

-)

-

-# %% Plot cosine similarities over time for all three conditions

-

-plt.figure(figsize=(10, 6))

-

-plt.plot(

- time_points_no_track,

- cosine_similarities_no_track,

- marker="o",

- label="classical contrastive (no tracking)",

-)

-plt.plot(

- time_points_any_time, cosine_similarities_any_time, marker="o", label="cell aware"

-)

-plt.plot(

- time_points_30_min,

- cosine_similarities_30_min,

- marker="o",

- label="cell & time aware (interval 30 min)",

-)

-

-plt.xlabel("Time Delay (t)")

-plt.ylabel("Cosine Similarity with First Time Point")

-plt.title("Cosine Similarity Over Time for Infected Cell")

-

-# plt.savefig('infected_cell_example.pdf', format='pdf')

-

-

-plt.grid(True)

-

-plt.legend()

-

-plt.savefig("new_example_cell.svg", format="svg")

-

-

-plt.show()

-# %%

-

-

-# %% import statements

-

-

-# %% Paths to datasets

-features_path_30_min = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-feature_path_no_track = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr"

-)

-# features_path_any_time = Path("/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_difcell_randomtime_sampling/Ver2_updateTracking_refineModel/predictions/Feb_1chan_128patch_32projDim/1chan_128patch_63ckpt_FebTest.zarr")

-

-

-# %% Read embedding datasets

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-# embedding_dataset_any_time = read_embedding_dataset(features_path_any_time)

-

-

-# %% Compute displacements for both datasets (using Euclidean distance and Cosine similarity)

-max_tau = 10 # Maximum time shift (tau) to compute displacements

-

-

-# mean_displacement_30_min, std_displacement_30_min = compute_displacement_mean_std(embedding_dataset_30_min, max_tau, use_cosine=False, use_dissimilarity=False)

-# mean_displacement_no_track, std_displacement_no_track = compute_displacement_mean_std(embedding_dataset_no_track, max_tau, use_cosine=False, use_dissimilarity=False)

-# mean_displacement_any_time, std_displacement_any_time = compute_displacement_mean_std(embedding_dataset_any_time, max_tau, use_cosine=False)

-

-

-mean_displacement_30_min_cosine, std_displacement_30_min_cosine = (

- compute_displacement_mean_std(

- embedding_dataset_30_min, max_tau, use_cosine=True, use_dissimilarity=False

- )

-)

-mean_displacement_no_track_cosine, std_displacement_no_track_cosine = (

- compute_displacement_mean_std(

- embedding_dataset_no_track, max_tau, use_cosine=True, use_dissimilarity=False

- )

-)

-# mean_displacement_any_time_cosine, std_displacement_any_time_cosine = compute_displacement_mean_std(embedding_dataset_any_time, max_tau, use_cosine=True)

-# %% Plot 1: Displacements (cosine values; the Euclidean computation above is commented out)

-plt.figure(figsize=(10, 6))

-

-

-taus = list(mean_displacement_30_min_cosine.keys())

-mean_values_30_min = list(mean_displacement_30_min_cosine.values())

-std_values_30_min = list(std_displacement_30_min_cosine.values())

-

-

-mean_values_no_track = list(mean_displacement_no_track_cosine.values())

-std_values_no_track = list(std_displacement_no_track_cosine.values())

-

-

-# mean_values_any_time = list(mean_displacement_any_time.values())

-# std_values_any_time = list(std_displacement_any_time.values())

-

-

-# Plotting displacements (cosine-based values computed above)

-plt.plot(

- taus, mean_values_30_min, marker="o", label="Cell & Time Aware (30 min interval)"

-)

-plt.fill_between(

- taus,

- np.array(mean_values_30_min) - np.array(std_values_30_min),

- np.array(mean_values_30_min) + np.array(std_values_30_min),

- color="gray",

- alpha=0.3,

- label="Std Dev (30 min interval)",

-)

-

-

-plt.plot(

- taus, mean_values_no_track, marker="o", label="Classical Contrastive (No Tracking)"

-)

-plt.fill_between(

- taus,

- np.array(mean_values_no_track) - np.array(std_values_no_track),

- np.array(mean_values_no_track) + np.array(std_values_no_track),

- color="blue",

- alpha=0.3,

- label="Std Dev (No Tracking)",

-)

-

-

-plt.xlabel("Time Shift (τ)")

-plt.ylabel("Displacement")

-plt.title("Embedding Displacement Over Time")

-plt.grid(True)

-plt.legend()

-

-

-# plt.savefig('embedding_displacement_euclidean.svg', format='svg')

-# plt.savefig('embedding_displacement_euclidean.pdf', format='pdf')

-

-

-# Show the Euclidean plot

-plt.show()

-

-

-# %% Plot 2: Cosine Displacements

-plt.figure(figsize=(10, 6))

-

-taus = list(mean_displacement_30_min_cosine.keys())

-

-# Plotting Cosine displacements

-mean_values_30_min_cosine = list(mean_displacement_30_min_cosine.values())

-std_values_30_min_cosine = list(std_displacement_30_min_cosine.values())

-

-

-mean_values_no_track_cosine = list(mean_displacement_no_track_cosine.values())

-std_values_no_track_cosine = list(std_displacement_no_track_cosine.values())

-

-

-plt.plot(

- taus,

- mean_values_30_min_cosine,

- marker="o",

- label="Cell & Time Aware (30 min interval)",

-)

-plt.fill_between(

- taus,

- np.array(mean_values_30_min_cosine) - np.array(std_values_30_min_cosine),

- np.array(mean_values_30_min_cosine) + np.array(std_values_30_min_cosine),

- color="gray",

- alpha=0.3,

- label="Std Dev (30 min interval)",

-)

-

-

-plt.plot(

- taus,

- mean_values_no_track_cosine,

- marker="o",

- label="Classical Contrastive (No Tracking)",

-)

-plt.fill_between(

- taus,

- np.array(mean_values_no_track_cosine) - np.array(std_values_no_track_cosine),

- np.array(mean_values_no_track_cosine) + np.array(std_values_no_track_cosine),

- color="blue",

- alpha=0.3,

- label="Std Dev (No Tracking)",

-)

-

-

-plt.xlabel("Time Shift (τ)")

-plt.ylabel("Cosine Similarity")

-plt.title("Embedding Displacement Over Time")

-

-

-plt.grid(True)

-plt.legend()

-plt.savefig("1_std_cosine_plot.svg", format="svg")

-

-# Show the Cosine plot

-plt.show()

-# %%

-

-

-# %% Paths to datasets

-features_path_30_min = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-feature_path_no_track = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr"

-)

-

-

-# %% Read embedding datasets

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-

-

-# %% Compute displacements for both datasets (using Cosine similarity)

-max_tau = 10 # Maximum time shift (tau) to compute displacements

-

-

-# Compute displacements for Cell & Time Aware (30 min interval) using Cosine similarity

-displacement_per_tau_aware_cosine = compute_displacement(

- embedding_dataset_30_min,

- max_tau,

- use_cosine=True,

- use_dissimilarity=False,

- use_umap=False,

-)

-

-

-# Compute displacements for Classical Contrastive (No Tracking) using Cosine similarity

-displacement_per_tau_contrastive_cosine = compute_displacement(

- embedding_dataset_no_track,

- max_tau,

- use_cosine=True,

- use_dissimilarity=False,

- use_umap=False,

-)

-

-

-# %% Prepare data for violin plot

-def prepare_violin_data(taus, displacement_aware, displacement_contrastive):

- # Create a list to hold the data

- data = []

-

- # Populate the data for Cell & Time Aware

- for tau in taus:

- displacements_aware = displacement_aware.get(tau, [])

- for displacement in displacements_aware:

- data.append(

- {

- "Time Shift (τ)": tau,

- "Displacement": displacement,

- "Sampling": "Cell & Time Aware (30 min interval)",

- }

- )

-

- # Populate the data for Classical Contrastive

- for tau in taus:

- displacements_contrastive = displacement_contrastive.get(tau, [])

- for displacement in displacements_contrastive:

- data.append(

- {

- "Time Shift (τ)": tau,

- "Displacement": displacement,

- "Sampling": "Classical Contrastive (No Tracking)",

- }

- )

-

- # Convert to a DataFrame

- df = pd.DataFrame(data)

- return df

-

-

-taus = list(displacement_per_tau_aware_cosine.keys())

-

-

-# Prepare the violin plot data

-df = prepare_violin_data(

- taus, displacement_per_tau_aware_cosine, displacement_per_tau_contrastive_cosine

-)

-

-

-# Create a violin plot using seaborn

-plt.figure(figsize=(12, 8))

-sns.violinplot(

- x="Time Shift (τ)",

- y="Displacement",

- hue="Sampling",

- data=df,

- palette="Set2",

- scale="width",

- bw=0.2,

- inner=None,

- split=True,

- cut=0,

-)

-

-

-# Add labels and title

-plt.xlabel("Time Shift (τ)", fontsize=14)

-plt.ylabel("Cosine Similarity", fontsize=14)

-plt.title("Cosine Similarity Distribution on Features", fontsize=16)

-plt.grid(True, linestyle="--", alpha=0.6) # Lighter grid lines for less distraction

-plt.legend(title="Sampling", fontsize=12, title_fontsize=14)

-

-

-# plt.ylim(0.5, 1.0)

-

-

-# Save the violin plot as SVG and PDF

-plt.savefig("1fixed_violin_plot_cosine_similarity.svg", format="svg")

-# plt.savefig('violin_plot_cosine_similarity.pdf', format='pdf')

-

-

-# Show the plot

-plt.show()

-# %% using umap violin plot

-

-# %% Paths to datasets

-features_path_30_min = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-feature_path_no_track = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr"

-)

-

-# %% Read embedding datasets

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-

-

-# %% Compute UMAP on features

-def compute_umap(dataset):

- features = dataset["features"]

- scaled_features = StandardScaler().fit_transform(features.values)

- umap = UMAP(n_components=2) # Reduce to 2 dimensions

- embedding = umap.fit_transform(scaled_features)

-

- # Add UMAP coordinates using xarray functionality

- umap_features = features.assign_coords(

- UMAP1=("sample", embedding[:, 0]), UMAP2=("sample", embedding[:, 1])

- )

- return umap_features

-

-

-# Apply UMAP to both datasets

-umap_features_30_min = compute_umap(embedding_dataset_30_min)

-umap_features_no_track = compute_umap(embedding_dataset_no_track)

-

-# %%

-print(umap_features_30_min)

-# %% Visualize UMAP embeddings

-# # Visualize UMAP embeddings for the 30 min interval

-# plt.figure(figsize=(8, 6))

-# plt.scatter(umap_features_30_min[:, 0], umap_features_30_min[:, 1], c=embedding_dataset_30_min["t"].values, cmap='viridis')

-# plt.colorbar(label='Timepoints')

-# plt.title('UMAP Projection of Features (30 min Interval)')

-# plt.xlabel('UMAP1')

-# plt.ylabel('UMAP2')

-# plt.show()

-

-# # Visualize UMAP embeddings for the No Tracking dataset

-# plt.figure(figsize=(8, 6))

-# plt.scatter(umap_features_no_track[:, 0], umap_features_no_track[:, 1], c=embedding_dataset_no_track["t"].values, cmap='viridis')

-# plt.colorbar(label='Timepoints')

-# plt.title('UMAP Projection of Features (No Tracking)')

-# plt.xlabel('UMAP1')

-# plt.ylabel('UMAP2')

-# plt.show()

-# %% Compute displacements using UMAP coordinates (using Cosine similarity)

-max_tau = 10 # Maximum time shift (tau) to compute displacements

-

-# Compute displacements for UMAP-processed Cell & Time Aware (30 min interval)

-displacement_per_tau_aware_umap_cosine = compute_displacement(

- umap_features_30_min,

- max_tau,

- use_cosine=True,

- use_dissimilarity=False,

- use_umap=True,

-)

-

-# Compute displacements for UMAP-processed Classical Contrastive (No Tracking)

-displacement_per_tau_contrastive_umap_cosine = compute_displacement(

- umap_features_no_track,

- max_tau,

- use_cosine=True,

- use_dissimilarity=False,

- use_umap=True,

-)

-

-

-# %% Prepare data for violin plot

-def prepare_violin_data(taus, displacement_aware, displacement_contrastive):

- # Create a list to hold the data

- data = []

-

- # Populate the data for Cell & Time Aware

- for tau in taus:

- displacements_aware = displacement_aware.get(tau, [])

- for displacement in displacements_aware:

- data.append(

- {

- "Time Shift (τ)": tau,

- "Displacement": displacement,

- "Sampling": "Cell & Time Aware (30 min interval)",

- }

- )

-

- # Populate the data for Classical Contrastive

- for tau in taus:

- displacements_contrastive = displacement_contrastive.get(tau, [])

- for displacement in displacements_contrastive:

- data.append(

- {

- "Time Shift (τ)": tau,

- "Displacement": displacement,

- "Sampling": "Classical Contrastive (No Tracking)",

- }

- )

-

- # Convert to a DataFrame

- df = pd.DataFrame(data)

- return df

-

-

-taus = list(displacement_per_tau_aware_umap_cosine.keys())

-

-# Prepare the violin plot data

-df = prepare_violin_data(

- taus,

- displacement_per_tau_aware_umap_cosine,

- displacement_per_tau_contrastive_umap_cosine,

-)

-

-# %% Create a violin plot using seaborn

-plt.figure(figsize=(12, 8))

-sns.violinplot(

- x="Time Shift (τ)",

- y="Displacement",

- hue="Sampling",

- data=df,

- palette="Set2",

- scale="width",

- bw=0.2,

- inner=None,

- split=True,

- cut=0,

-)

-

-# Add labels and title

-plt.xlabel("Time Shift (τ)", fontsize=14)

-plt.ylabel("Cosine Similarity", fontsize=14)

-plt.title("Cosine Similarity Distribution using UMAP Features", fontsize=16)

-plt.grid(True, linestyle="--", alpha=0.6) # Lighter grid lines for less distraction

-plt.legend(title="Sampling", fontsize=12, title_fontsize=14)

-

-# plt.ylim(0, 1)

-

-# Save the violin plot as SVG and PDF

-plt.savefig("fixed_plot_cosine_similarity.svg", format="svg")

-# plt.savefig('violin_plot_cosine_similarity_umap.pdf', format='pdf')

-

-# Show the plot

-plt.show()

-

-

-# %%

-# %% Visualize Displacement Distributions (Example Code)

-# Compare displacement distributions for τ = 1

-# plt.figure(figsize=(10, 6))

-# sns.histplot(displacement_per_tau_aware_umap_cosine[1], kde=True, label='UMAP - 30 min Interval', color='blue')

-# sns.histplot(displacement_per_tau_contrastive_umap_cosine[1], kde=True, label='UMAP - No Tracking', color='green')

-# plt.legend()

-# plt.title('Comparison of Displacement Distributions for τ = 1 (UMAP)')

-# plt.xlabel('Displacement')

-# plt.show()

-

-# # Compare displacement distributions for the full feature set (same τ = 1)

-# plt.figure(figsize=(10, 6))

-# sns.histplot(displacement_per_tau_aware_cosine[1], kde=True, label='Full Features - 30 min Interval', color='red')

-# sns.histplot(displacement_per_tau_contrastive_cosine[1], kde=True, label='Full Features - No Tracking', color='orange')

-# plt.legend()

-# plt.title('Comparison of Displacement Distributions for τ = 1 (Full Features)')

-# plt.xlabel('Displacement')

-# plt.show()

-# # %%
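Review note on the first cell of the script above, which plots "Cosine Similarity with First Time Point" for one track: the sketch below shows that quantity for a single track, assuming NumPy only and an array of shape (time, features). It is an illustration of the metric, not the implementation of `calculate_cosine_similarity_cell` in viscy, which is not shown in this diff; the function name `cosine_similarity_to_first` is hypothetical.

```python
import numpy as np


def cosine_similarity_to_first(embeddings):
    """Cosine similarity between each row and the first row."""
    x = np.asarray(embeddings, dtype=float)
    norms = np.linalg.norm(x, axis=1)
    # Dot product of every time point with the first, divided by both norms.
    return (x @ x[0]) / (norms * norms[0])


# Three time points of a 2-D embedding (synthetic, for illustration only).
track = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 2.0]])
similarities = cosine_similarity_to_first(track)
print(similarities)
```

The first value is always 1 by construction, so a curve that decays slowly from 1 indicates embeddings that change smoothly over time.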

diff --git a/applications/contrastive_phenotyping/evaluation/displacement.py b/applications/contrastive_phenotyping/evaluation/displacement.py

deleted file mode 100644

index a0d46c28..00000000

--- a/applications/contrastive_phenotyping/evaluation/displacement.py

+++ /dev/null

@@ -1,118 +0,0 @@

-# %%

-from pathlib import Path

-

-import matplotlib.pyplot as plt

-import numpy as np

-import pandas as pd

-import plotly.express as px

-import seaborn as sns

-from sklearn.decomposition import PCA

-from sklearn.preprocessing import StandardScaler

-from umap import UMAP

-from sklearn.decomposition import PCA

-from matplotlib.font_manager import FontProperties

-

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.evaluation import dataset_of_tracks, load_annotation

-from viscy.representation.evaluation import calculate_normalized_euclidean_distance_cell

-from viscy.representation.evaluation import compute_displacement_mean_std_full

-from sklearn.metrics.pairwise import cosine_similarity

-from collections import defaultdict

-from scipy.ndimage import gaussian_filter1d

-

-# %% paths

-

-features_path_30_min = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-

-feature_path_no_track = Path("/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr")

-

-features_path_any_time = Path("/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_difcell_randomtime_sampling/Ver2_updateTracking_refineModel/predictions/Feb_2chan_128patch_32projDim/2chan_128patch_56ckpt_FebTest.zarr")

-

-data_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr"

-)

-

-tracks_path = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/track_test.zarr"

-)

-

-# %% Load embedding datasets for all three sampling

-fov_name = '/B/4/6'

-track_id = 52

-

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-embedding_dataset_any_time = read_embedding_dataset(features_path_any_time)

-

-#%%

-# Calculate displacement for each sampling

-time_points_30_min, cosine_similarities_30_min = calculate_normalized_euclidean_distance_cell(embedding_dataset_30_min, fov_name, track_id)

-time_points_no_track, cosine_similarities_no_track = calculate_normalized_euclidean_distance_cell(embedding_dataset_no_track, fov_name, track_id)

-time_points_any_time, cosine_similarities_any_time = calculate_normalized_euclidean_distance_cell(embedding_dataset_any_time, fov_name, track_id)

-

-# %% Plot displacement over time for all three conditions

-

-plt.figure(figsize=(10, 6))

-

-plt.plot(time_points_no_track, cosine_similarities_no_track, marker='o', label='classical contrastive (no tracking)')

-plt.plot(time_points_any_time, cosine_similarities_any_time, marker='o', label='cell aware')

-plt.plot(time_points_30_min, cosine_similarities_30_min, marker='o', label='cell & time aware (interval 30 min)')

-

-plt.xlabel("Time Delay (t)", fontsize=10)

-plt.ylabel("Normalized Euclidean Distance with First Time Point", fontsize=10)

-plt.title("Normalized Euclidean Distance (Features) Over Time for Infected Cell", fontsize=12)

-

-plt.grid(True)

-plt.legend(fontsize=10)

-

-#plt.savefig('4_euc_dist_full.svg', format='svg')

-plt.show()

-

-

-# %% Paths to datasets

-features_path_30_min = Path("/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr")

-feature_path_no_track = Path("/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/negpair_random_sampling2/feb_fixed_test_predict.zarr")

-

-embedding_dataset_30_min = read_embedding_dataset(features_path_30_min)

-embedding_dataset_no_track = read_embedding_dataset(feature_path_no_track)

-

-

-# %%

-max_tau = 10

-

-mean_displacement_30_min_euc, std_displacement_30_min_euc = compute_displacement_mean_std_full(embedding_dataset_30_min, max_tau)

-mean_displacement_no_track_euc, std_displacement_no_track_euc = compute_displacement_mean_std_full(embedding_dataset_no_track, max_tau)

-

-# %% Plot 2: Euclidean Displacements

-plt.figure(figsize=(10, 6))

-

-taus = list(mean_displacement_30_min_euc.keys())

-

-mean_values_30_min_euc = list(mean_displacement_30_min_euc.values())

-std_values_30_min_euc = list(std_displacement_30_min_euc.values())

-

-plt.plot(taus, mean_values_30_min_euc, marker='o', label='Cell & Time Aware (30 min interval)', color='green')

-plt.fill_between(taus,

- np.array(mean_values_30_min_euc) - np.array(std_values_30_min_euc),

- np.array(mean_values_30_min_euc) + np.array(std_values_30_min_euc),

- color='green', alpha=0.3, label='Std Dev (30 min interval)')

-

-mean_values_no_track_euc = list(mean_displacement_no_track_euc.values())

-std_values_no_track_euc = list(std_displacement_no_track_euc.values())

-

-plt.plot(taus, mean_values_no_track_euc, marker='o', label='Classical Contrastive (No Tracking)', color='blue')

-plt.fill_between(taus,

- np.array(mean_values_no_track_euc) - np.array(std_values_no_track_euc),

- np.array(mean_values_no_track_euc) + np.array(std_values_no_track_euc),

- color='blue', alpha=0.3, label='Std Dev (No Tracking)')

-

-plt.xlabel('Time Shift (τ)')

-plt.ylabel('Euclidean Distance')

-plt.title('Embedding Displacement Over Time (Features)')

-

-plt.grid(True)

-plt.legend()

-

-plt.show()

diff --git a/applications/contrastive_phenotyping/evaluation/figure.mplstyle b/applications/contrastive_phenotyping/evaluation/figure.mplstyle

deleted file mode 100644

index 7e609568..00000000

--- a/applications/contrastive_phenotyping/evaluation/figure.mplstyle

+++ /dev/null

@@ -1,8 +0,0 @@

-font.family: sans-serif

-font.sans-serif: Arial

-font.size: 10

-figure.titlesize: 12

-axes.titlesize: 10

-xtick.labelsize: 8

-ytick.labelsize: 8

-text.usetex: True

diff --git a/applications/contrastive_phenotyping/evaluation/grad_attr.py b/applications/contrastive_phenotyping/evaluation/grad_attr.py

deleted file mode 100644

index 321a7a00..00000000

--- a/applications/contrastive_phenotyping/evaluation/grad_attr.py

+++ /dev/null

@@ -1,265 +0,0 @@

-# %%

-from pathlib import Path

-

-import matplotlib as mpl

-import matplotlib.pyplot as plt

-import numpy as np

-import pandas as pd

-import torch

-from cmap import Colormap

-from lightning.pytorch import seed_everything

-from skimage.exposure import rescale_intensity

-

-from viscy.data.triplet import TripletDataModule

-from viscy.representation.embedding_writer import read_embedding_dataset

-from viscy.representation.engine import ContrastiveEncoder, ContrastiveModule

-from viscy.representation.evaluation import load_annotation

-from viscy.representation.lca import (

- AssembledClassifier,

- fit_logistic_regression,

- linear_from_binary_logistic_regression,

-)

-from viscy.transforms import NormalizeSampled, ScaleIntensityRangePercentilesd

-

-# %%

-seed_everything(42, workers=True)

-

-fov = "/B/4/8"

-track = 44

-

-# %%

-dm = TripletDataModule(

- data_path="/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/registered_test.zarr",

- tracks_path="/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/track_test.zarr",

- source_channel=["Phase3D", "RFP"],

- z_range=[25, 40],

- batch_size=48,

- num_workers=0,

- initial_yx_patch_size=(128, 128),

- final_yx_patch_size=(128, 128),

- normalizations=[

- NormalizeSampled(

- keys=["Phase3D"], level="fov_statistics", subtrahend="mean", divisor="std"

- ),

- ScaleIntensityRangePercentilesd(

- keys=["RFP"], lower=50, upper=99, b_min=0.0, b_max=1.0

- ),

- ],

- predict_cells=True,

- include_fov_names=[fov],

- include_track_ids=[track],

-)

-dm.setup("predict")

-len(dm.predict_dataset)

-

-# %%

-# load model

-model = ContrastiveModule.load_from_checkpoint(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/epoch=178-step=16826.ckpt",

- encoder=ContrastiveEncoder(

- backbone="convnext_tiny",

- in_channels=2,

- in_stack_depth=15,

- stem_kernel_size=(5, 4, 4),

- stem_stride=(5, 4, 4),

- embedding_dim=768,

- projection_dim=32,

- ),

-).eval()

-

-# %%

-# train linear classifier

-path_infection_embedding = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178.zarr"

-)

-path_division_embedding = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/infection_classification/models/time_sampling_strategies/time_interval/predict/feb_test_time_interval_1_epoch_178_gt_tracks.zarr"

-)

-path_annotations_infection = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/8-train-test-split/supervised_inf_pred/extracted_inf_state.csv"

-)

-path_annotations_division = Path(

- "/hpc/projects/intracellular_dashboard/viral-sensor/2024_02_04_A549_DENV_ZIKV_timelapse/9-lineage-cell-division/lineages_gt/cell_division_state_test_set.csv"

-)

-

-infection_dataset = read_embedding_dataset(path_infection_embedding)

-infection_features = infection_dataset["features"]

-infection = load_annotation(

- infection_dataset,

- path_annotations_infection,

- "infection_state",

- {0.0: "background", 1.0: "uninfected", 2.0: "infected"},

-)

-

-division_dataset = read_embedding_dataset(path_division_embedding)

-division_features = division_dataset["features"]

-division = load_annotation(division_dataset, path_annotations_division, "division")

-# move the unknown class to the 0 label

-division[division == 1] = -2

-division += 2

-division /= 2

-division = division.astype("category")

-

-# %%

-train_fovs = ["/A/3/7", "/A/3/8", "/A/3/9", "/B/4/6", "/B/4/7"]

-

-# %%

-logistic_regression_infection, _ = fit_logistic_regression(

- infection_features.copy(),

- infection.copy(),

- train_fovs,

- remove_background_class=True,

- scale_features=False,

- class_weight="balanced",

- solver="liblinear",

-)

-# %%

-logistic_regression_division, _ = fit_logistic_regression(

- division_features.copy(),

- division.copy(),

- train_fovs,

- remove_background_class=True,

- scale_features=False,

- class_weight="balanced",