-

Notifications

You must be signed in to change notification settings - Fork 896

What's New

This page only shows a few highlights or important changes for each release, but covers them in more detail and sometimes with code samples. For a full listing of all changes, see our release notes directly in the repository instead.

We now provide basic root finding algorithms. A root of a function x -> f(x) is a solution of the equation f(x)=0. Root-finding algorithms can thus help finding numerical real solutions of arbitrary equations, provided f is reasonably well-behaved and we already have an idea about an interval [a,b] where we expect a root. As usual, there is a facade class RealRoots for simple scenarios, but you can also directly use one of the algorithms in the Algorithms sub-namespace.

RealRoots.OfFunction(x => x*x - 4, -5, 5) // -2.00000000046908

RealRoots.OfFunction(x => x*x - 4, -5, 5, accuracy: 1e-14) // -2 (exact)

RealRoots.OfFunctionAndDerivative(x => x*x - 4, x => 2*x, -5, 5) // -2 (exact)

We use Brent's method as default algorithm, implemented in the Brent class. When directly using the algorithm you can specify more parameters, like the maximum number of iterations.

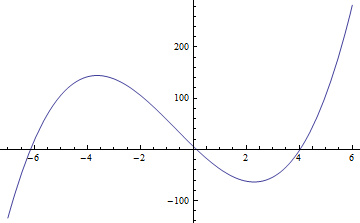

Example: Find the real roots of the cubic polynomial 2x^3 + 4x^2 - 50x + 6:

Func<double, double> f = x => Evaluate.Polynomial(x, 6, -50, 4, 2);

Brent.FindRoot(f, -6.5, -5.5, 1e-8, 100); // -6.14665621970684

Brent.FindRoot(f, -0.5, 0.5, 1e-8, 100); // 0.121247371972135

Brent.FindRoot(f, 3.5, 4.5, 1e-8, 100); // 4.02540884774855

Note that there are better algorithms for finding (all) roots of a polynomial. We plan to add specific polynomial root finding algorithms later on.

Each algorithm provides a FindRoot method as above which throws a NonConvergenceException if it fails. However, since not finding a root is not exactly exceptional, most algorithms also provide an exception-free TryFindRoot code path with the common Try-pattern as in TryParse. This also works with F# pattern matching:

match Brent.TryFindRoot((fun x -> Evaluate.Polynomial(x, 6., -50., 4., 2.)), -6.5, -5.5, 1.e-8, 100) with

| true, root -> sprintf "Root at %f" root

| false, _ -> "No root found"

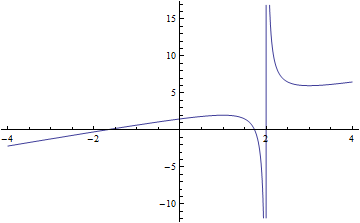

If you also know the function's first derivative you may want to use Newton-Raphson instead. It has been modified slightly to try to recover (instead of just failing) when overshooting, converging too slow or unexpected bracketing (e.g. in the presence of poles).

Example: Assume we want to find solutions of x+1/(x-2) == -2, hence x -> f(x) = 1/(x-2)+x+2 with a pole at x==2:

Func<double, double> f = x => 1/(x - 2) + x + 2;

Func<double, double> df = x => -1/(x*x - 4*x + 4) + 1;

RobustNewtonRaphson.FindRoot(f, df, -2, -1, 1e-14, 100, 20); // -1.73205080756888

RobustNewtonRaphson.FindRoot(f, df, 1, 1.99, 1e-14, 100, 20); // 1.73205080756888

RobustNewtonRaphson.FindRoot(f, df, -1.5, 1.99, 1e-14, 100, 20); // 1.73205080756888

RobustNewtonRaphson.FindRoot(f, df, 1, 6, 1e-14, 100, 20); // 1.73205080756888

Previously our order statistics and quantile functions were quite limited. With this release we finally bring relatively complete quantile support:

- OrderStatistic

- Median

- LowerQuartile

- UpperQuartile

- InterquartileRange

- FiveNumberSummary

- Percentile

- Quantile

- QuantileCustom

All of them are implemented on top of the quantile function. We always default to approximately median-unbiased quantiles, usually denoted as type R-8, which does not assume samples to be normally distributed. If you need compatibility with another implementation, you can use QuantileCustom which accepts either a QuantileDefinition enum (we support all 9 R-types, SAS 1-5, Excel, Nist, Hydrology, etc.) or a 4-parameter definition as in Mathematica.

For the empirical inverse cummulative distribution, which is essentially an R1-type quantile, you can use the new Statistics.InverseCDF function.

Previously there were two ways to estimate some statistics from a sample set: The Statistics class provided static extension methods to evaluate a single statistic from an enumerable, and DescriptiveStatistics to compute a whole set of standard statistics at once. This was unsatisfactory since it was not very efficient: the DescriptiveStatistics way actually required more than one pass internally (mostly because of the median) and it was not leveraging the fact that the sample set may already be sorted.

To fix the first issue, we've marked DescriptiveStatistics.Median as obsolete and will remove it in v3. Until then, the median computation is delayed until requested the first time. In normal cases where Median is not used therefore now only requires a single pass.

The second issue we attacked by introducing three new classes to compute a single statistic directly from the best fitting sample data format:

-

ArrayStatistics: operates on arrays which are not assumed to be sorted (but doesn't hurt if they are). -

SortedArrayStatistics: operates on arrays which must be sorted in ascending order. -

StreamingStatistics: operates on a stream in a single pass, without keeping the full data in memory at any time. Can thus be used to stream over data larger than system memory.

ArrayStatistics implements Minimum, Maximum, Mean, Variance, StandardDeviation, PopulationVariance, PopulationStandardDeviation. In addition it implements all the order statistics/quantile functions mentioned above, but in an inplace way that reorder the data array (partial sorting) and therefore have the Inplace-suffix to indicate the side effect. They get slightly faster when calling them repeatedly, yet are usually still faster with up to 5 calls than a full sorting.

Example: We want to compute the IQR of {3,1,2,4}.

var data = new double[] { 3.0, 1.0, 2.0, 4.0 };

ArrayStatistics.InterquartileRangeInplace(data); // iqr = 2.16666666666667

This is equivalent to executing IQR(c(3,1,2,4), type=8) in R, with quantile definition type R-8.

SortedArrayStatistics expects data to be sorted in ascending order and implements Minimum, Maximum, and all the order statistics/quantile functions mentioned above. It leverages the ordering for very fast (constant time) order statistics. There's also no need to reorder the data, so other than ArrayStatistics, this class never modifies the provided array and has no side effect. It does not re-implement any operations that cannot leverage the ordering, like Mean or Variance, so use the implementation from ArrayStatistics instead if needed.

var data = new[] { 1.0, 2.0, 3.0, 4.0 };

var iqr = SortedArrayStatistics.InterquartileRange(data); // iqr = 2.16666666666667

StreamingStatistics estimates statistics in a single pass without memorization and implements Minimum, Maximum, Mean, Variance, StandardDeviation, PopulationVariance, PopulationStandardDeviation. It does not implement any order statistics, since they require sorting and are thus not computable in a single pass without keeping the data in memory. No function of this class has any side effects on the data.

The Statistics class has been updated to leverage these new implementations internally, and implements all of the statistics mentioned above as extension methods on enumerables. No function of this class has any side effects on the data.

var iqr = new[] { 3.0, 1.0, 2.0, 4.0 }.InterquartileRange(); // iqr = 2.16666666666667

Note that this is generally slower than ArrayStatistis because it requires to copy the array to make sure there are no side effects, and much slower than SortedArrayStatistics which would have constant time (assuming we sorted it manually first).

Most of the quantile functions accept a tau-parameter. Often you need to evaluate that function with not one but a whole range of values for that parameter, say for plotting. In such scenarios it is advantageous to first sort the data and then use the SortedArrayStatistics functions with constant time complexity. For convenience we also provide alternative implementations with the Func-suffix in the Statistics class that do exactly that: instead of accepting a tau-parameter themselves, they return a function that accepts tau:

var icdf = new[] { 3.0, 1.0, 2.0, 4.0 }.InverseCDFFunc();

var a = icdf(0.3); // 2

var b = new[] { 0.0, 0.1, 0.5, 0.9, 1.0 }.Select(icdf).ToArray(); // 1,1,2,4,4

TODO