
Architecture

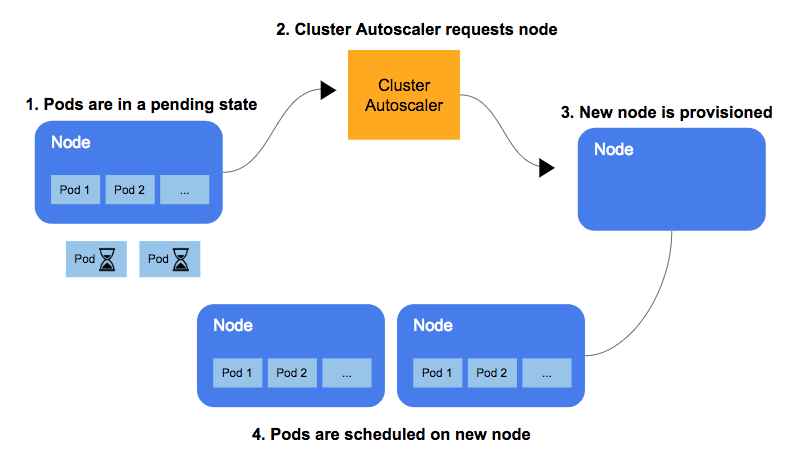

Cluster Autoscaler (CA) adjusts the number of nodes in the cluster when pods fail to schedule or when nodes are underutilized. In other words, it performs node-based scaling.

Cluster Autoscaler looks for unschedulable pods and tries to consolidate pods running on underutilized nodes onto fewer nodes. It repeats these two tasks in a continuous loop.

Pods become unschedulable when no node has enough free CPU or memory, or when no existing node satisfies the pod's constraints: node taints the pod does not tolerate (a taint keeps pods off a node unless they carry a matching toleration), affinity rules (rules attracting a pod to specific nodes), or nodeSelector labels. If the cluster contains unschedulable pods, the autoscaler checks its managed node groups to see whether adding a node would let the pod schedule. If so, and the node group has not reached its maximum size, it adds a node to that group.
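A minimal sketch of the scale-up check, assuming a hypothetical `NodeGroup` that describes the shape of a new node in that group. It models only CPU and memory requests, nodeSelector labels, and simplified taint/toleration matching; affinity rules, pod priority, and the real scheduler predicates are omitted. When several groups qualify, the real autoscaler lets a configurable expander choose between them, whereas this sketch simply takes the first.

```go
package main

import "fmt"

// Taint and Toleration are simplified versions of the Kubernetes types.
type Taint struct{ Key, Value, Effect string }

// An empty Value or Effect in a Toleration matches any value or effect.
type Toleration struct{ Key, Value, Effect string }

type Pod struct {
	CPUMilli, MemMiB int64
	NodeSelector     map[string]string
	Tolerations      []Toleration
}

// NodeGroup is hypothetical: the allocatable resources, labels, and taints a
// freshly added node would have, plus the group's current and maximum size.
type NodeGroup struct {
	Name             string
	CPUMilli, MemMiB int64
	Labels           map[string]string
	Taints           []Taint
	Size, MaxSize    int
}

func tolerates(p Pod, t Taint) bool {
	for _, tol := range p.Tolerations {
		if tol.Key == t.Key && (tol.Value == "" || tol.Value == t.Value) &&
			(tol.Effect == "" || tol.Effect == t.Effect) {
			return true
		}
	}
	return false
}

// fitsNewNode reports whether a new node from g could host p.
func fitsNewNode(p Pod, g NodeGroup) bool {
	if p.CPUMilli > g.CPUMilli || p.MemMiB > g.MemMiB {
		return false
	}
	for k, v := range p.NodeSelector {
		if g.Labels[k] != v {
			return false
		}
	}
	for _, t := range g.Taints {
		// PreferNoSchedule is only a soft preference; other effects block.
		if t.Effect != "PreferNoSchedule" && !tolerates(p, t) {
			return false
		}
	}
	return true
}

// pickGroup returns the first group that can still grow and would fit p.
func pickGroup(p Pod, groups []NodeGroup) (string, bool) {
	for _, g := range groups {
		if g.Size < g.MaxSize && fitsNewNode(p, g) {
			return g.Name, true
		}
	}
	return "", false
}

func main() {
	groups := []NodeGroup{
		{Name: "small", CPUMilli: 2000, MemMiB: 4096, Size: 3, MaxSize: 5},
		{Name: "gpu", CPUMilli: 8000, MemMiB: 32768, Size: 0, MaxSize: 2,
			Labels: map[string]string{"accelerator": "gpu"},
			Taints: []Taint{{Key: "gpu", Value: "true", Effect: "NoSchedule"}}},
	}
	pod := Pod{CPUMilli: 4000, MemMiB: 8192,
		NodeSelector: map[string]string{"accelerator": "gpu"},
		Tolerations:  []Toleration{{Key: "gpu", Effect: "NoSchedule"}}}
	fmt.Println(pickGroup(pod, groups))
}
```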

The autoscaler also checks the nodes of each managed node group to see whether their pods could be rescheduled onto other available nodes. If all of a node's pods can be moved, it evicts them and removes the node. When moving pods, the autoscaler takes pod priority and PodDisruptionBudgets into account.
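The scale-down check amounts to a simulation: pretend the node is gone and see whether every pod still fits somewhere else. In this sketch `canRemove` is a hypothetical helper that uses first-fit placement on CPU alone, and a plain map of remaining allowed disruptions per app label stands in for PodDisruptionBudgets; pod priority is not modeled.

```go
package main

import "fmt"

type Pod struct {
	Name     string
	App      string // label used to look up a disruption budget
	CPUMilli int64
}

type Node struct {
	Name         string
	FreeCPUMilli int64
	Pods         []Pod
}

// canRemove simulates evicting every pod on candidate and placing it on the
// other nodes (first fit, CPU only). budgets maps an app label to how many of
// its pods may still be disrupted, a stand-in for a PodDisruptionBudget.
// Neither others nor budgets is modified.
func canRemove(candidate Node, others []Node, budgets map[string]int) bool {
	free := make([]int64, len(others))
	for i, n := range others {
		free[i] = n.FreeCPUMilli
	}
	remaining := make(map[string]int, len(budgets))
	for k, v := range budgets {
		remaining[k] = v
	}
	for _, p := range candidate.Pods {
		if b, ok := remaining[p.App]; ok {
			if b == 0 {
				return false // evicting this pod would violate its budget
			}
			remaining[p.App] = b - 1
		}
		placed := false
		for i := range free {
			if free[i] >= p.CPUMilli {
				free[i] -= p.CPUMilli
				placed = true
				break
			}
		}
		if !placed {
			return false // no room elsewhere, so the node must stay
		}
	}
	return true
}

func main() {
	a := Node{Name: "a", Pods: []Pod{{Name: "web-1", App: "web", CPUMilli: 300}}}
	b := Node{Name: "b", FreeCPUMilli: 1000}
	fmt.Println(canRemove(a, []Node{b}, map[string]int{"web": 1}))
	fmt.Println(canRemove(a, []Node{b}, map[string]int{"web": 0}))
}
```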

When Cluster Autoscaler identifies pending pods that cannot be scheduled because of insufficient resources, it adds nodes to the cluster. Conversely, when a node's utilization falls below a threshold set by the cluster administrator, it removes that node. The result is that every pod has a place to run while no unnecessary nodes are kept.
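Cluster Autoscaler's FAQ describes a node as a scale-down candidate when both the summed CPU requests and the summed memory requests of its pods are below a fraction of the node's allocatable resources (the `--scale-down-utilization-threshold` flag, 0.5 by default). Equivalently, the larger of the two ratios must fall below the threshold. The sketch below computes that ratio; the type and function names are hypothetical, and DaemonSet/mirror-pod handling and GPU nodes are ignored.

```go
package main

import "fmt"

// NodeUsage holds summed pod requests and the node's allocatable resources.
type NodeUsage struct {
	CPURequestedMilli, CPUAllocatableMilli int64
	MemRequestedMiB, MemAllocatableMiB     int64
}

func ratio(requested, allocatable int64) float64 {
	if allocatable <= 0 {
		return 1 // treat a node with no allocatable capacity as full
	}
	return float64(requested) / float64(allocatable)
}

// utilization returns the larger of the CPU and memory request ratios.
func utilization(u NodeUsage) float64 {
	cpu := ratio(u.CPURequestedMilli, u.CPUAllocatableMilli)
	mem := ratio(u.MemRequestedMiB, u.MemAllocatableMiB)
	if cpu > mem {
		return cpu
	}
	return mem
}

// underutilized reports whether the node is below the scale-down threshold.
func underutilized(u NodeUsage, threshold float64) bool {
	return utilization(u) < threshold
}

func main() {
	n := NodeUsage{CPURequestedMilli: 800, CPUAllocatableMilli: 4000,
		MemRequestedMiB: 6144, MemAllocatableMiB: 16384}
	fmt.Printf("%.3f %v\n", utilization(n), underutilized(n, 0.5)) // prints "0.375 true"
}
```

Taking the maximum means a node stays as long as either resource is busy: a node at 20% CPU but 75% memory is not removed.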

When scaling down, Cluster Autoscaler gives pods up to 10 minutes of graceful termination before forcing the node to terminate. This gives the node's pods time to be rescheduled onto other nodes.

- The `main` function of `cluster-autoscaler` performs a sequence of initializations: flag initialization, snapshot debugger initialization, and setup of the metrics and health-check endpoints. It then performs leader election using the standard Kubernetes client-go `leaderelection.RunOrDie`.
- On successfully electing a leader, the `run` function is executed.
- The `run` function:

  ```go
  func run(healthCheck *metrics.HealthCheck, debuggingSnapshotter debuggingsnapshot.DebuggingSnapshotter)
  ```