
Normalisation issues when extracting SDF values with ShapeNetCore V2 #53

Comments

Good catch! I didn't realize that not all the ShapeNet models were normalized to [-0.5, 0.5]^3. If you have the dataset downloaded, you could find the actual bounding box by taking the max/min over each shape's bounding box. If you do this, I can update the code. Something like:

    import os

    import numpy as np
    import point_cloud_utils as pcu

    shapenet_root = "path/to/shapenet"

    # Running bounds over the whole dataset
    bbox_min = np.array([np.inf] * 3)
    bbox_max = np.array([-np.inf] * 3)

    for cat_name in os.listdir(shapenet_root):
        for shape_name in os.listdir(os.path.join(shapenet_root, cat_name)):
            v, f = pcu.load_mesh_vf(os.path.join(shapenet_root, cat_name, shape_name, "model.obj"))
            # Grow the running bounds to include this shape's vertices
            bbox_min = np.minimum(v.min(0), bbox_min)
            bbox_max = np.maximum(v.max(0), bbox_max)

    print(f"ShapeNet range is {bbox_min} to {bbox_max}")

Thank you for providing the code! I had to use my own library because of the compilation errors on my Mac that we discussed in other issues, but I used a script similar to the one you suggested. The bounding box is the following:

It seems like a volume defined within [-1, 1]^3 should cover all the shapes.

Following up on my previous comment: once we solve #50, it could be useful to think through this normalisation task. I would change the bounding box definition as:

This way the bounding box correctly wraps all the objects in the ShapeNetCore dataset. But (please correct me if I am wrong) reconstruction models based on signed distances, like DeepSDF, could suffer in performance because the object occupies only a very limited portion of the volume. I think this is why papers based on DeepSDF always normalise the objects so that they fit a unit sphere.

However, normalising objects to the range [-1, +1] makes reconstruction in the real world much harder, e.g. in robotic perception tasks. By normalising individual objects to a [-1, +1] range we are effectively scaling each object by a different value. In real life, at inference, we collect a partial point cloud on the real object's surface to infer a latent vector for the corresponding shape. This step is not possible if the DeepSDF model is trained on normalised objects: we wouldn't know the scaling value to apply to the point cloud collected in the real world, because we don't know in advance what object we are trying to reconstruct.

I hope this makes sense, and thank you for reading. I'd love to hear your opinion on this!

You are right that normalizing will destroy object scale, which is not what you want. One strategy is to sample points within [-1, 1]^3 but at different densities. For example, one could sample the first N points by perturbing points on the surface with Gaussian noise whose variance is proportional to a fraction of the bounding sphere, then sample the next N by adding Gaussian noise with 2x the previous variance, and so on. In this case, the shapes are still normalized in [-1, 1]^3 but the sampling adapts to the shape. @maurock could you give this a shot and see if it helps you?
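The multi-scale sampling idea described above could be sketched roughly as follows. This is only an illustration, not code from the library: the function name `sample_multiscale`, its parameters, the choice of `(base_fraction * radius) ** 2` as the starting variance, and the centroid-based bounding sphere are all assumptions made for the example.

```python
import numpy as np


def sample_multiscale(surface_points, n_per_level=1000, n_levels=3,
                      base_fraction=0.01, seed=0):
    """Perturb surface points with Gaussian noise whose variance doubles per level.

    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    surface_points = np.asarray(surface_points, dtype=float)

    # Radius of a (non-minimal) bounding sphere centred on the centroid.
    centre = surface_points.mean(axis=0)
    radius = np.linalg.norm(surface_points - centre, axis=1).max()

    # Assumption: the initial standard deviation is a fraction of the radius.
    variance = (base_fraction * radius) ** 2

    levels = []
    for _ in range(n_levels):
        idx = rng.integers(0, len(surface_points), size=n_per_level)
        noise = rng.normal(scale=np.sqrt(variance), size=(n_per_level, 3))
        levels.append(surface_points[idx] + noise)
        variance *= 2.0  # the next level uses 2x the previous variance

    points = np.concatenate(levels)
    # Keep only the samples that stay inside the [-1, 1]^3 volume.
    inside = np.all(np.abs(points) <= 1.0, axis=1)
    return points[inside]


# Toy usage: points on a sphere of radius 0.3 stand in for a mesh surface.
rng = np.random.default_rng(1)
directions = rng.normal(size=(500, 3))
surface = 0.3 * directions / np.linalg.norm(directions, axis=1, keepdims=True)
samples = sample_multiscale(surface, n_per_level=100, n_levels=3)
```

Because the noise scale is tied to each shape's own bounding sphere, small objects get samples concentrated near their surface while the shape itself keeps its original scale inside the volume.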

Thank you very much, I will give it a try and close this issue. Also, I have just sent you an email, I'm looking forward to hearing from you!

Hi, thank you for this awesome library! I have a question regarding the extraction of SDF values. From the example file you provide:

I checked a few objects from the ShapeNetCore V2 dataset and noticed that they are not always bounded within [-0.5, 0.5] ^ 3. For example, the object

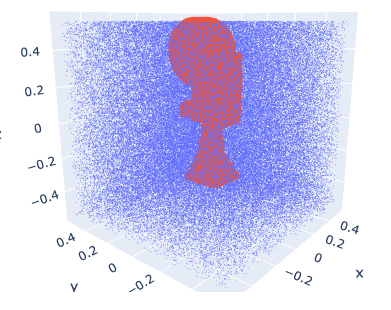

02942699/1ab3abb5c090d9b68e940c4e64a94e1eis within [0.115, 0.229, 0.56] and [-0.115, -0.171, -0.326]. Here's a picture of it:It's hard to see, but the object slightly exceeds the upper face of the sampling volume. Also, it is not centred at 0.
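As a quick numeric check of the corners quoted above, the following sketch computes the bounding-box centre and flags which coordinates leave the [-0.5, 0.5]^3 volume. The variable names are illustrative; only the two corner vectors come from the report.

```python
import numpy as np

# Corners reported for 02942699/1ab3abb5c090d9b68e940c4e64a94e1e.
bbox_min = np.array([-0.115, -0.171, -0.326])
bbox_max = np.array([0.115, 0.229, 0.56])

half_extent = 0.5  # the sampling volume is [-0.5, 0.5]^3

# Midpoint of the bounding box; a centred object would give [0, 0, 0].
centre = (bbox_min + bbox_max) / 2

# True for every coordinate where the box sticks out of the volume.
exceeds = (bbox_min < -half_extent) | (bbox_max > half_extent)

print("bounding-box centre:", centre)
print("coordinates leaving the volume:", exceeds)
```

With these numbers only the third coordinate exceeds the volume (0.56 > 0.5), and the centre is offset from the origin in the second and third coordinates.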

Since papers that use SDF values for 3D reconstruction, such as DeepSDF, suggest normalising the object so that it fits within a unit sphere, would it make sense to change the normalisation strategy to reflect that? Thank you.
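For reference, a minimal sketch of what a unit-sphere normalisation might look like is given here. It assumes centring on the bounding-box midpoint and scaling by the farthest vertex; the exact preprocessing used by DeepSDF may differ (for instance by adding a margin), and `normalise_to_unit_sphere` is a hypothetical helper, not part of any library.

```python
import numpy as np


def normalise_to_unit_sphere(vertices):
    """Centre vertices on the bounding-box midpoint and scale them into the unit sphere.

    Returns the normalised vertices plus the centre and scale needed to undo it.
    """
    vertices = np.asarray(vertices, dtype=float)
    centre = (vertices.min(axis=0) + vertices.max(axis=0)) / 2
    centred = vertices - centre
    # Distance of the farthest vertex from the centre.
    scale = np.linalg.norm(centred, axis=1).max()
    return centred / scale, centre, scale


# Toy usage: the two reported corners stand in for a full vertex array.
verts = np.array([[-0.115, -0.171, -0.326],
                  [0.115, 0.229, 0.56]])
normalised, centre, scale = normalise_to_unit_sphere(verts)

# The original coordinates can be recovered only if centre and scale are kept.
restored = normalised * scale + centre
```

The returned `scale` is different for every object, so it has to be stored alongside the normalised shape if the original size is needed later.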
