
Running OKVIS with zr300 #19


Hi,

I'm trying to run OKVIS on a dataset captured by a ZR300. The dataset also contains additional data (such as joint odometry and lidar scans) from the robot the camera is mounted on. I'm currently using the calibration from [1], because my own calibration gives implausible values. The config file therefore looks like this:

cameras:

- {T_SC: # fisheye

[ 1.0, 0.0, 0.0, 0.0067,

0.0, 1.0, 0.0, 0.0015,

0.0, 0.0, 1.0, -0.0073,

0.0, 0.0, 0.0, 1.0],

image_dimension: [640, 480],

distortion_coefficients: [0.0048721263900003645, 0.013074922065542347, -0.004719549370669489, 0.0005900323796213622],

distortion_type: radialtangential,

focal_length: [768.6436773886262, 765.9343209704857],

principal_point: [317.91945463536155, 241.50294129099476]}

camera_params:

camera_rate: 30 # just to manage the expectations of when there should be frames arriving

sigma_absolute_translation: 0.0 # The standard deviation of the camera extrinsics translation, e.g. 1.0e-10 for online-calib [m].

sigma_absolute_orientation: 0.0 # The standard deviation of the camera extrinsics orientation, e.g. 1.0e-3 for online-calib [rad].

sigma_c_relative_translation: 0.0 # The std. dev. of the cam. extr. transl. change between frames, e.g. 1.0e-6 for adaptive online calib (not less for numerics) [m].

sigma_c_relative_orientation: 0.0 # The std. dev. of the cam. extr. orient. change between frames, e.g. 1.0e-6 for adaptive online calib (not less for numerics) [rad].

timestamp_tolerance: 0.005 # [s] stereo frame out-of-sync tolerance

imu_params:

a_max: 176.0 # acceleration saturation [m/s^2]

g_max: 7.8 # gyro saturation [rad/s]

sigma_g_c: 4.2e-2 # gyro noise density [rad/s/sqrt(Hz)]

sigma_a_c: 4.5e-4 # accelerometer noise density [m/s^2/sqrt(Hz)]

sigma_bg: 0.03 # gyro bias prior [rad/s]

sigma_ba: 0.1 # accelerometer bias prior [m/s^2]

sigma_gw_c: 1.4e-2 # gyro drift noise density [rad/s^2/sqrt(Hz)]

sigma_aw_c: 1.5e-4 # accelerometer drift noise density [m/s^2/sqrt(Hz)]

tau: 3600.0 # reversion time constant, currently not in use [s]

g: 9.81007 # Earth's acceleration due to gravity [m/s^2]

a0: [ 0.0, 0.0, 0.0 ] # Accelerometer bias [m/s^2]

imu_rate: 200

# transform Body-Sensor (IMU)

T_BS:

[1.0000, 0.0000, 0.0000, 0.0000,

0.0000, 1.0000, 0.0000, 0.0000,

0.0000, 0.0000, 1.0000, 0.0000,

0.0000, 0.0000, 0.0000, 1.0000]

The rest of the file is unchanged from the version committed in this repository. (If the error lies in another part of the file, I would be glad for a hint about that too.)
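As a quick sanity check on the extrinsics above, here is a minimal sketch in plain Python that tests whether a row-major 4x4 matrix such as `T_SC` is a well-formed rigid transform (orthonormal rotation, determinant +1, bottom row `[0, 0, 0, 1]`). The helper name `check_rigid_transform` and the tolerance are my own assumptions, not part of OKVIS. It only tells whether the matrix is well-formed, not whether the values are correct for the ZR300.

```python
import math


def check_rigid_transform(T, tol=1e-6):
    """Return a list of problems found in a row-major 4x4 transform (16 floats).

    Hypothetical helper for illustration; an empty list means the matrix
    is a well-formed rigid transform within the given tolerance.
    """
    if len(T) != 16:
        return ["expected 16 values, got %d" % len(T)]
    problems = []
    rows = [T[4 * i:4 * i + 4] for i in range(4)]
    R = [row[:3] for row in rows[:3]]

    # The last row of a homogeneous transform must be [0, 0, 0, 1].
    if any(abs(a - b) > tol for a, b in zip(rows[3], [0.0, 0.0, 0.0, 1.0])):
        problems.append("bottom row is not [0, 0, 0, 1]")

    # Rows of the rotation block must be orthonormal (R * R^T = I).
    for i in range(3):
        for j in range(i, 3):
            dot = sum(R[i][k] * R[j][k] for k in range(3))
            expected = 1.0 if i == j else 0.0
            if abs(dot - expected) > tol:
                problems.append("rotation rows %d and %d are not orthonormal" % (i, j))

    # A proper rotation has determinant +1 (a value of -1 would be a reflection).
    det = (R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
           - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
           + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]))
    if abs(det - 1.0) > tol:
        problems.append("rotation determinant is %.6f, expected 1" % det)
    return problems


# T_SC of the fisheye camera, copied from the config above.
T_SC = [1.0, 0.0, 0.0, 0.0067,
        0.0, 1.0, 0.0, 0.0015,
        0.0, 0.0, 1.0, -0.0073,
        0.0, 0.0, 0.0, 1.0]

problems = check_rigid_transform(T_SC)
# Length of the translation part, i.e. the camera-IMU offset in metres.
translation_norm = math.sqrt(sum(T_SC[4 * i + 3] ** 2 for i in range(3)))
print(problems, translation_norm)
```

Note that the check passes for any identity rotation, so it cannot tell whether the camera and IMU axes of the ZR300 are really aligned as this `T_SC` assumes.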

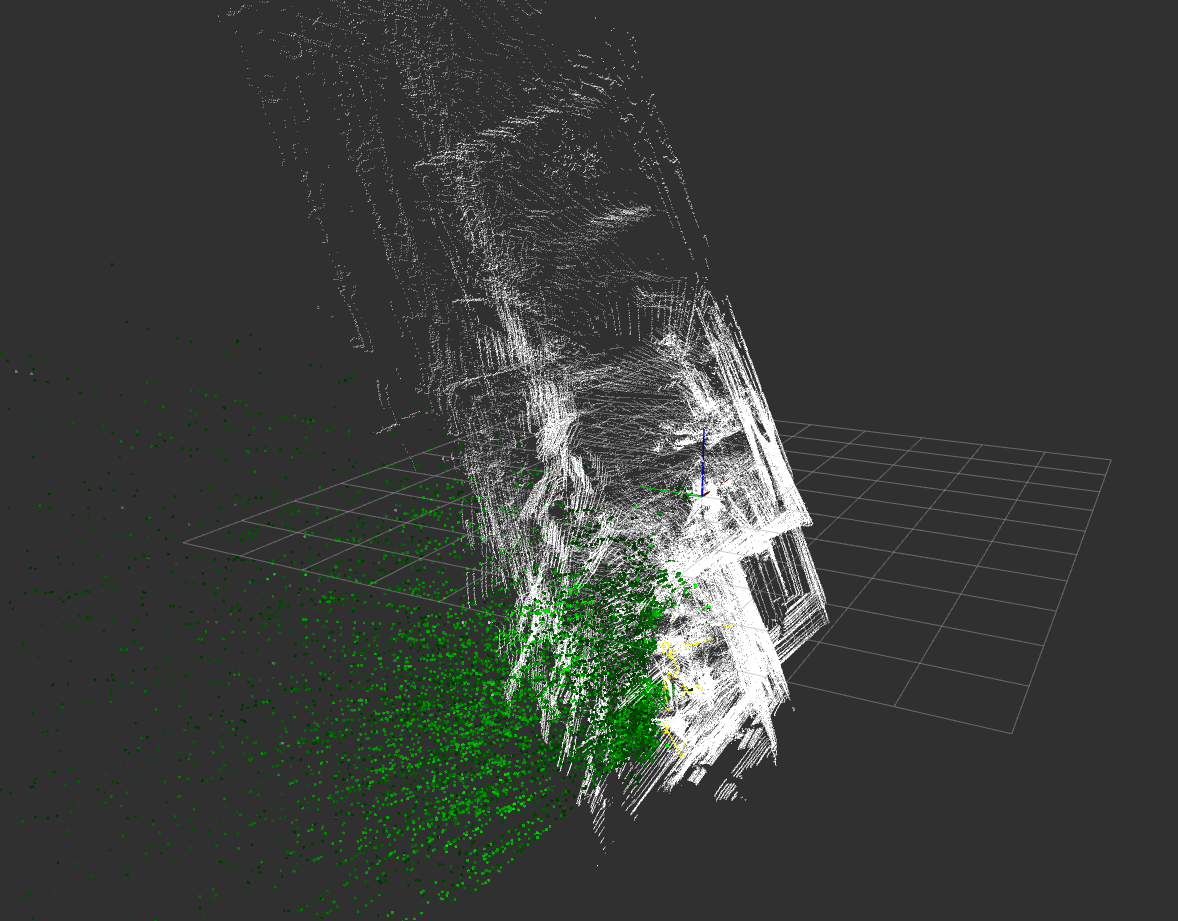

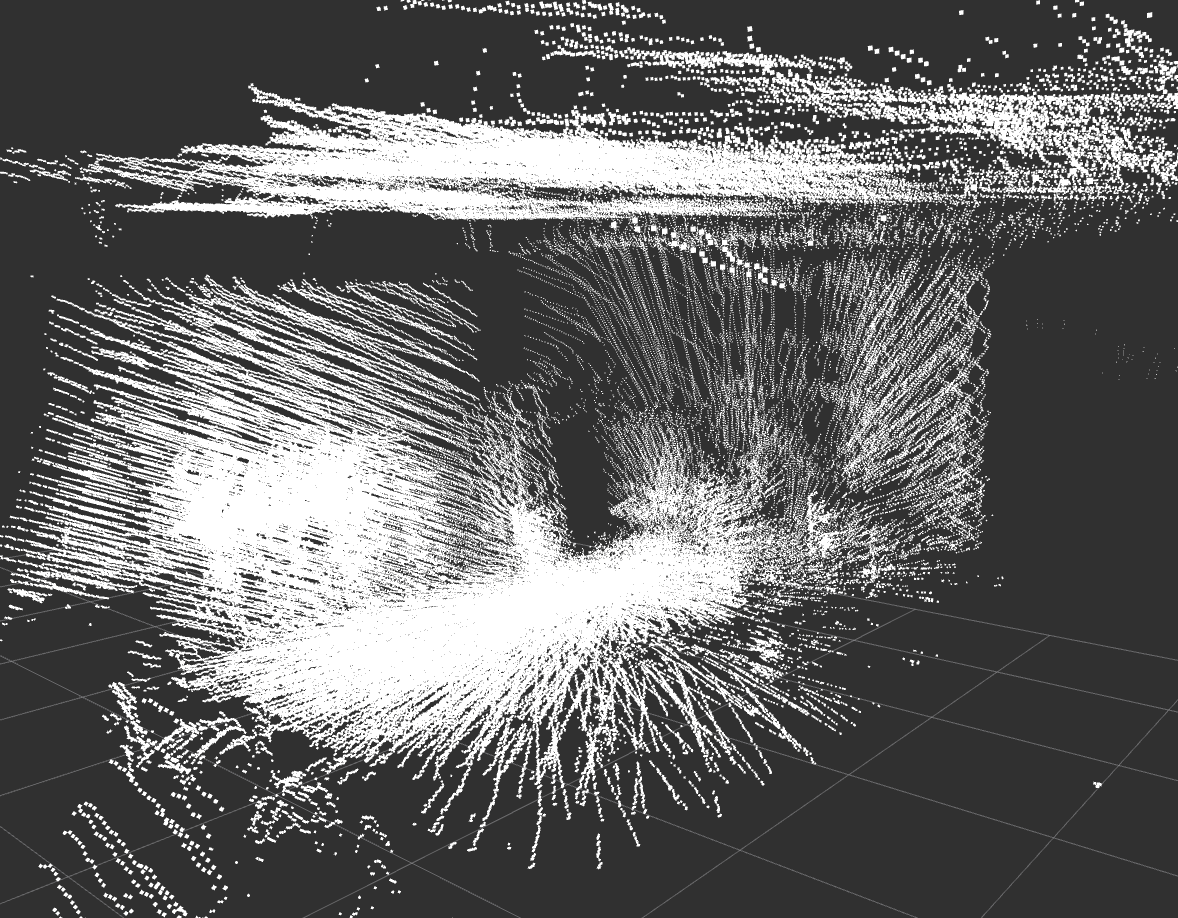

I added the lidar scans at the positions calculated by XXX, and this is the resulting picture from rviz.

However, this result is quite far from the environment map generated from the joint odometry.

The viewing angle is different, but I hope you get the point.
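To put a number on the difference instead of comparing screenshots, one option is a rough position error between the two trajectories. The sketch below is only an illustration under several assumptions: both trajectories are available as time-sorted lists of `(t, x, y, z)` tuples, both are expressed in frames with the same axis orientation, and samples are matched by nearest timestamp. The function name `position_rmse` and the `max_dt` tolerance are hypothetical. It removes only the offset at the first matched sample and does no rotational alignment.

```python
import bisect
import math


def position_rmse(estimate, reference, max_dt=0.02):
    """Return (rmse, n_matched) between two time-sorted (t, x, y, z) lists.

    Hypothetical helper for illustration: each estimate sample is paired
    with the reference sample nearest in time, and pairs further apart
    than max_dt seconds are skipped.
    """
    ref_times = [r[0] for r in reference]
    sq_sum = 0.0
    matched = 0
    est_origin = ref_origin = None
    for t, x, y, z in estimate:
        # Candidates are the reference samples just before and after t.
        i = bisect.bisect_left(ref_times, t)
        candidates = [j for j in (i - 1, i) if 0 <= j < len(reference)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(ref_times[k] - t))
        if abs(ref_times[j] - t) > max_dt:
            continue
        ref = reference[j][1:]
        # Use the first matched pair as the common origin of both tracks.
        if est_origin is None:
            est_origin, ref_origin = (x, y, z), ref
        diff = [(e - eo) - (r - ro)
                for e, eo, r, ro in zip((x, y, z), est_origin, ref, ref_origin)]
        sq_sum += sum(v * v for v in diff)
        matched += 1
    if matched == 0:
        return float("nan"), 0
    return math.sqrt(sq_sum / matched), matched


# Made-up sample data, only to show the call.
odometry = [(0.0, 0.0, 0.0, 0.0), (1.0, 1.0, 0.0, 0.0), (2.0, 2.0, 0.0, 0.0)]
vio = [(0.0, 5.0, 5.0, 5.0), (1.0, 6.0, 5.0, 5.0), (2.0, 7.0, 5.0, 6.0)]
rmse, n_matched = position_rmse(vio, odometry)
print(rmse, n_matched)
```

If the two trajectories are rotated relative to each other, this number will overstate the error, so it is a first indication rather than a proper evaluation.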

Does anybody have a clue what I am still doing wrong, or is the camera hardware so poor that the results cannot be any better?

Thank you in advance.

Christian

[1] ethz-asl/rovio#192
