diff --git a/README.md b/README.md

index 9e7dd01..9367555 100644

--- a/README.md

+++ b/README.md

@@ -4,10 +4,28 @@

# Movement Primitives

+> Dynamical movement primitives (DMPs), probabilistic movement primitives

+> (ProMPs), and spatially coupled bimanual DMPs for imitation learning.

+

Movement primitives are a common group of policy representations in robotics.

There are many types and variations. This repository focuses mainly on

-imitation learning, generalization, and adaptation of movement primitives.

-It provides implementations in Python and Cython.

+imitation learning, generalization, and adaptation of movement primitives for

+Cartesian motions of robots. It provides implementations in Python and Cython

+and can be installed directly from

+[PyPI](https://pypi.org/project/movement-primitives/).

+

+## Content

+

+* [Features](#features)

+* [API Documentation](#api-documentation)

+* [Install Library](#install-library)

+* [Examples](#examples)

+* [Build API Documentation](#build-api-documentation)

+* [Test](#test)

+* [Contributing](#contributing)

+* [Non-public Extensions](#non-public-extensions)

+* [Related Publications](#related-publications)

+* [Funding](#funding)

## Features

@@ -19,14 +37,10 @@ It provides implementations in Python and Cython.

* Propagation of DMP weight distribution to state space distribution

* Probabilistic Movement Primitives (ProMPs)

-Example of dual Cartesian DMP with

-[RH5 Manus](https://robotik.dfki-bremen.de/en/research/robot-systems/rh5-manus/):

-

-Example of joint space DMP with UR5:

-

+Left: Example of dual Cartesian DMP with [RH5 Manus](https://robotik.dfki-bremen.de/en/research/robot-systems/rh5-manus/).

+Right: Example of joint space DMP with UR5.

## API Documentation

@@ -71,83 +85,12 @@ or install the library with

python setup.py install

```

-## Non-public Extensions

-

-Scripts from the subfolder `examples/external_dependencies/` require access to

-git repositories (URDF files or optional dependencies) and datasets that are

-not publicly available. They are available on request (email

-alexander.fabisch@dfki.de).

-

-Note that the library does not have any non-public dependencies! They are only

-required to run all examples.

-

-### MoCap Library

-

-```bash

-# untested: pip install git+https://git.hb.dfki.de/dfki-interaction/mocap.git

-git clone git@git.hb.dfki.de:dfki-interaction/mocap.git

-cd mocap

-python -m pip install -e .

-cd ..

-```

-

-### Get URDFs

-

-```bash

-# RH5

-git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive

-# RH5v2

-git clone git@git.hb.dfki.de:models-robots/rh5v2_models/pybullet-urdf.git --recursive

-# Kuka

-git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git

-# Solar panel

-git clone git@git.hb.dfki.de:models-objects/solar_panels.git

-# RH5 Gripper

-git clone git@git.hb.dfki.de:motto/abstract-urdf-gripper.git --recursive

-```

-

-### Data

-

-I assume that your data is located in the folder `data/` in most scripts.

-You should put a symlink there to point to your actual data folder.

-

-## Build API Documentation

-

-You can build an API documentation with [pdoc3](https://pdoc3.github.io/pdoc/).

-You can install pdoc3 with

-

-```bash

-pip install pdoc3

-```

-

-... and build the documentation from the main folder with

-

-```bash

-pdoc movement_primitives --html

-```

-

-It will be located at `html/movement_primitives/index.html`.

-

-## Test

-

-To run the tests some python libraries are required:

-

-```bash

-python -m pip install -e .[test]

-```

-

-The tests are located in the folder `test/` and can be executed with:

-`python -m nose test`

-

-This command searches for all files with `test` and executes the functions with `test_*`.

-

-## Contributing

-

-To add new features, documentation, or fix bugs you can open a pull request.

-Directly pushing to the main branch is not allowed.

-

## Examples

+You will find a lot of examples in the subfolder

+[`examples/`](https://github.com/dfki-ric/movement_primitives/tree/main/examples).

+Here are just some highlights to showcase the library.

+

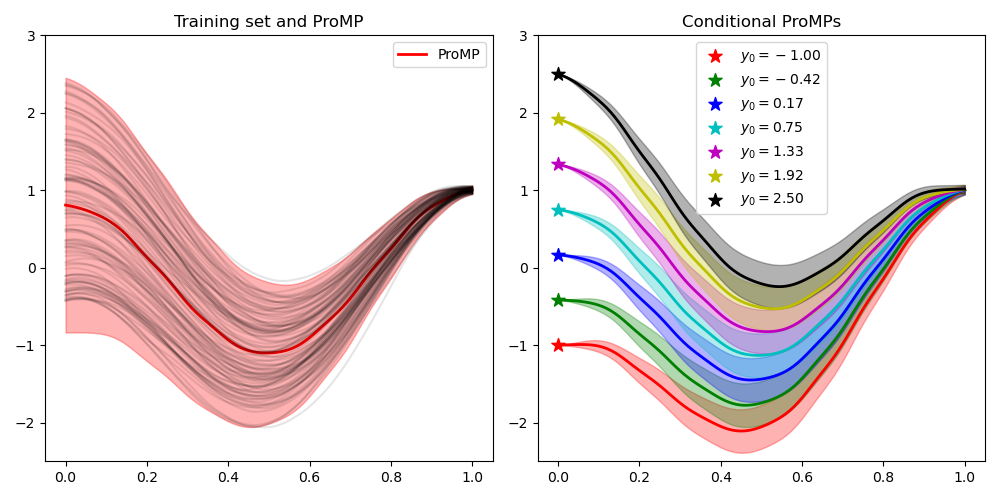

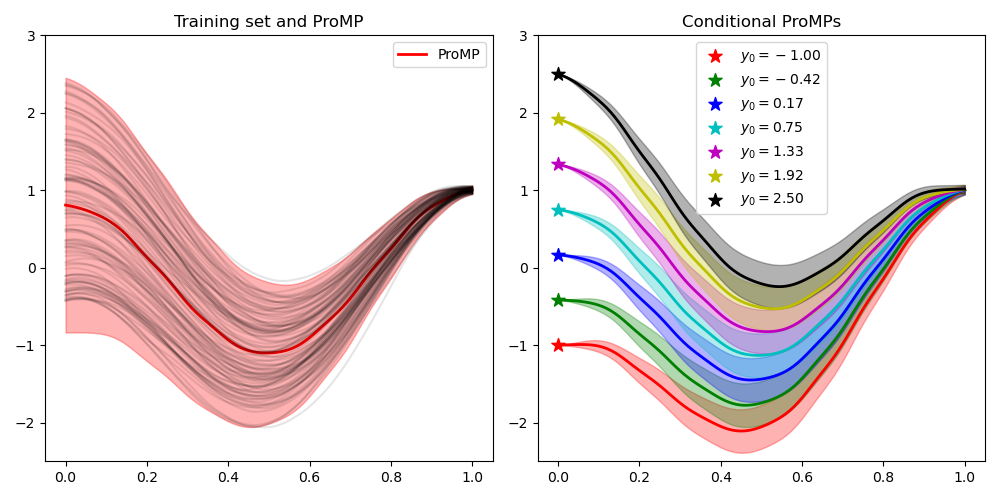

### Conditional ProMPs

+Left: Example of dual Cartesian DMP with [RH5 Manus](https://robotik.dfki-bremen.de/en/research/robot-systems/rh5-manus/).

+Right: Example of joint space DMP with UR5.

## API Documentation

@@ -71,83 +85,12 @@ or install the library with

python setup.py install

```

-## Non-public Extensions

-

-Scripts from the subfolder `examples/external_dependencies/` require access to

-git repositories (URDF files or optional dependencies) and datasets that are

-not publicly available. They are available on request (email

-alexander.fabisch@dfki.de).

-

-Note that the library does not have any non-public dependencies! They are only

-required to run all examples.

-

-### MoCap Library

-

-```bash

-# untested: pip install git+https://git.hb.dfki.de/dfki-interaction/mocap.git

-git clone git@git.hb.dfki.de:dfki-interaction/mocap.git

-cd mocap

-python -m pip install -e .

-cd ..

-```

-

-### Get URDFs

-

-```bash

-# RH5

-git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive

-# RH5v2

-git clone git@git.hb.dfki.de:models-robots/rh5v2_models/pybullet-urdf.git --recursive

-# Kuka

-git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git

-# Solar panel

-git clone git@git.hb.dfki.de:models-objects/solar_panels.git

-# RH5 Gripper

-git clone git@git.hb.dfki.de:motto/abstract-urdf-gripper.git --recursive

-```

-

-### Data

-

-I assume that your data is located in the folder `data/` in most scripts.

-You should put a symlink there to point to your actual data folder.

-

-## Build API Documentation

-

-You can build an API documentation with [pdoc3](https://pdoc3.github.io/pdoc/).

-You can install pdoc3 with

-

-```bash

-pip install pdoc3

-```

-

-... and build the documentation from the main folder with

-

-```bash

-pdoc movement_primitives --html

-```

-

-It will be located at `html/movement_primitives/index.html`.

-

-## Test

-

-To run the tests some python libraries are required:

-

-```bash

-python -m pip install -e .[test]

-```

-

-The tests are located in the folder `test/` and can be executed with:

-`python -m nose test`

-

-This command searches for all files with `test` and executes the functions with `test_*`.

-

-## Contributing

-

-To add new features, documentation, or fix bugs you can open a pull request.

-Directly pushing to the main branch is not allowed.

-

## Examples

+You will find a lot of examples in the subfolder

+[`examples/`](https://github.com/dfki-ric/movement_primitives/tree/main/examples).

+Here are just some highlights to showcase the library.

+

### Conditional ProMPs

@@ -157,7 +100,7 @@ trajectories that can be conditioned on viapoints. In this example, we

plot the resulting posterior distribution after conditioning on varying

start positions.

-[Script](examples/plot_conditional_promp.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_conditional_promp.py)
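The conditioning step can be illustrated with a minimal pure-Python sketch for a single output dimension. It assumes a ProMP whose trajectory is `y(t) = phi(t)^T w` with normalized Gaussian basis functions `phi` and weights `w ~ N(mu, Sigma)`, and applies standard Gaussian conditioning on one via-point. The helper names (`rbf_features`, `condition_on_viapoint`, `trajectory_stats`), the basis width, and the observation noise are assumptions for illustration, not the library's API.

```python
import math


def rbf_features(t, n_weights, width=0.02):
    """Normalized Gaussian basis functions evaluated at phase t in [0, 1]."""
    centers = [i / (n_weights - 1) for i in range(n_weights)]
    activations = [math.exp(-(t - c) ** 2 / (2.0 * width)) for c in centers]
    total = sum(activations)
    return [a / total for a in activations]


def condition_on_viapoint(mean, cov, t, y, y_var=1e-6):
    """Condition a 1D ProMP weight distribution N(mean, cov) on y(t) = y."""
    n = len(mean)
    phi = rbf_features(t, n)
    # Sigma @ phi (cov is assumed to be symmetric)
    cov_phi = [sum(cov[i][j] * phi[j] for j in range(n)) for i in range(n)]
    # Scalar innovation variance: phi^T Sigma phi + observation noise
    s = sum(p * c for p, c in zip(phi, cov_phi)) + y_var
    gain = [c / s for c in cov_phi]
    predicted = sum(p * m for p, m in zip(phi, mean))
    new_mean = [m + k * (y - predicted) for m, k in zip(mean, gain)]
    new_cov = [[cov[i][j] - gain[i] * cov_phi[j] for j in range(n)]
               for i in range(n)]
    return new_mean, new_cov


def trajectory_stats(mean, cov, t):
    """Mean and variance of y(t) = phi(t)^T w under w ~ N(mean, cov)."""
    n = len(mean)
    phi = rbf_features(t, n)
    mu = sum(p * m for p, m in zip(phi, mean))
    var = sum(phi[i] * cov[i][j] * phi[j] for i in range(n) for j in range(n))
    return mu, var


# Standard normal prior over 5 weights, conditioned on starting at y(0) = 1.
prior_mean = [0.0] * 5
prior_cov = [[1.0 if i == j else 0.0 for j in range(5)] for i in range(5)]
post_mean, post_cov = condition_on_viapoint(prior_mean, prior_cov, t=0.0, y=1.0)
```

After conditioning, the distribution is pinned close to `y = 1` at `t = 0`, while the variance far from the via-point (e.g. at `t = 1`) is almost unchanged.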

### Potential Field of 2D DMP

@@ -167,7 +110,7 @@ A Dynamical Movement Primitive defines a potential field that superimposes

several components: transformation system (goal-directed movement), forcing

term (learned shape), and coupling terms (e.g., obstacle avoidance).

-[Script](examples/plot_dmp_potential_field.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_dmp_potential_field.py)
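How these components superimpose can be sketched in a few lines. The snippet below is a minimal 1D transformation system in the form of Ijspeert et al. [1], `tau^2 * ydd = alpha * (beta * (g - y) - tau * yd) + f + C_t`, with the forcing term set to zero and semi-implicit Euler integration. The gains `alpha = 25`, `beta = alpha / 4` and all function names are assumptions for illustration; the DMP variants in this library may use a slightly different formulation.

```python
def dmp_acceleration(y, yd, goal, tau, forcing=0.0, coupling=0.0,
                     alpha=25.0, beta=25.0 / 4.0):
    """tau^2 * ydd = alpha * (beta * (goal - y) - tau * yd) + forcing + coupling."""
    return (alpha * (beta * (goal - y) - tau * yd) + forcing + coupling) / tau ** 2


def rollout(y0, goal, tau=1.0, dt=0.001, duration=2.0, coupling=None):
    """Integrate a 1D transformation system (forcing term set to zero)."""
    y, yd = y0, 0.0
    for _ in range(int(round(duration / dt))):
        c_t = coupling(y, yd) if coupling is not None else 0.0
        ydd = dmp_acceleration(y, yd, goal, tau, coupling=c_t)
        yd += dt * ydd  # semi-implicit Euler: update the velocity first,
        y += dt * yd    # then the position with the new velocity
    return y, yd


# Without coupling, the system settles at the goal ...
y_free, yd_free = rollout(0.0, 1.0)
# ... while a constant coupling term shifts the equilibrium to goal + C / (alpha * beta).
y_shifted, _ = rollout(0.0, 1.0, coupling=lambda y, yd: 15.625)
```

Because the terms are simply added, a constant coupling term moves the equilibrium to `g + C_t / (alpha * beta)`, which is a compact way to see how additional terms reshape the potential field.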

### DMP with Final Velocity

@@ -177,7 +120,7 @@ Not all DMPs allow a final velocity > 0. In this case we analyze the effect

of changing final velocities in an appropriate variation of the DMP

formulation that allows to set the final velocity.

-[Script](examples/plot_dmp_with_final_velocity.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_dmp_with_final_velocity.py)
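One way to reach a non-zero final velocity is to let the attractor itself move. The 1D sketch below (no forcing term) drives the system toward a moving goal `g_m(t) = g + gd * (t - T)` that passes through `g` at `t = T` with velocity `gd`, similar in spirit to the moving-target formulation of Muelling et al. [3]. It is an illustrative assumption, not necessarily the exact variant implemented in `DMPWithFinalVelocity`; the gains and function name are hypothetical.

```python
def final_velocity_rollout(y0, goal, goal_yd, execution_time=1.0, dt=0.001,
                           alpha=25.0, beta=25.0 / 4.0):
    """1D sketch without forcing term: track g_m(t) = goal + goal_yd * (t - T)."""
    tau = execution_time
    y, yd = y0, 0.0
    for i in range(int(round(execution_time / dt))):
        t = i * dt
        g_m = goal + goal_yd * (t - execution_time)  # moving goal, equals goal at t = T
        gd_m = goal_yd                               # its constant velocity
        # tau^2 * ydd = alpha * (beta * (g_m - y) + tau * (gd_m - yd)); g_m has zero acceleration
        ydd = alpha * (beta * (g_m - y) + tau * (gd_m - yd)) / tau ** 2
        yd += dt * ydd
        y += dt * yd
    return y, yd


y_T, yd_T = final_velocity_rollout(0.0, 1.0, goal_yd=0.5)
```

A standard DMP has a fixed point with zero velocity, so it cannot end in motion; feeding the goal velocity into the damping term makes `yd = gd` part of the tracked reference instead.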

### ProMPs

@@ -188,7 +131,7 @@ The LASA Handwriting dataset learned with ProMPs. The dataset consists of

demonstrations and the second and fourth column show the imitated ProMPs

with 1-sigma interval.

-[Script](examples/plot_promp_lasa.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_promp_lasa.py)
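Learning a ProMP of this kind can be sketched roughly as follows: fit one weight vector per demonstration by ridge regression onto normalized Gaussian basis functions, estimate the mean and covariance of these weight vectors, and read off the 1-sigma interval as `phi(t)^T mu +/- sqrt(phi(t)^T Sigma phi(t))`. This pure-Python sketch handles one dimension and uses synthetic demonstrations instead of the LASA data; the number of basis functions, their width, the regularization, and all function names are assumptions.

```python
import math


def rbf_features(t, n_weights, width=0.02):
    """Normalized Gaussian basis functions evaluated at t in [0, 1]."""
    centers = [i / (n_weights - 1) for i in range(n_weights)]
    activations = [math.exp(-(t - c) ** 2 / (2.0 * width)) for c in centers]
    total = sum(activations)
    return [a / total for a in activations]


def solve(A, b):
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_weights(ts, ys, n_weights, reg=1e-6):
    """Ridge regression: (Phi^T Phi + reg * I) w = Phi^T y."""
    Phi = [rbf_features(t, n_weights) for t in ts]
    A = [[sum(p[i] * p[j] for p in Phi) + (reg if i == j else 0.0)
          for j in range(n_weights)] for i in range(n_weights)]
    b = [sum(p[i] * y for p, y in zip(Phi, ys)) for i in range(n_weights)]
    return solve(A, b)


def weight_distribution(ts, demos, n_weights=10):
    """Sample mean and covariance of the per-demonstration weight vectors."""
    W = [fit_weights(ts, ys, n_weights) for ys in demos]
    n = len(W)
    mean = [sum(w[i] for w in W) / n for i in range(n_weights)]
    cov = [[sum((w[i] - mean[i]) * (w[j] - mean[j]) for w in W) / (n - 1)
            for j in range(n_weights)] for i in range(n_weights)]
    return mean, cov


def one_sigma_interval(mean, cov, t):
    """Lower and upper bound of mean +/- one standard deviation at time t."""
    phi = rbf_features(t, len(mean))
    mu = sum(p * m for p, m in zip(phi, mean))
    var = sum(phi[i] * cov[i][j] * phi[j]
              for i in range(len(mean)) for j in range(len(mean)))
    std = math.sqrt(max(var, 0.0))
    return mu - std, mu + std


# Three synthetic demonstrations with amplitudes 0.8, 1.0, and 1.2.
ts = [i / 100 for i in range(101)]
demos = [[a * math.sin(math.pi * t) for t in ts] for a in (0.8, 1.0, 1.2)]
mean, cov = weight_distribution(ts, demos)
lower, upper = one_sigma_interval(mean, cov, 0.5)
```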

### Cartesian DMPs

@@ -197,7 +140,7 @@ with 1-sigma interval.

A trajectory is created manually, imitated with a Cartesian DMP, converted

to a joint trajectory by inverse kinematics, and executed with a UR5.

-[Script](examples/vis_cartesian_dmp.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/vis_cartesian_dmp.py)

### Contextual ProMPs

@@ -209,7 +152,7 @@ kinesthetic teaching. The panel width is considered to be the context over

which we generalize with contextual ProMPs. Each color in the above

visualizations corresponds to a ProMP for a different context.

-[Script](examples/external_dependencies/vis_contextual_promp_distribution.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_contextual_promp_distribution.py)

**Dependencies that are not publicly available:**

@@ -229,7 +172,7 @@ visualizations corresponds to a ProMP for a different context.

We offer specific dual Cartesian DMPs to control dual-arm robotic systems like

humanoid robots.

-Scripts: [Open3D](examples/external_dependencies/vis_solar_panel.py), [PyBullet](examples/external_dependencies/sim_solar_panel.py)

+Scripts: [Open3D](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_solar_panel.py), [PyBullet](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/sim_solar_panel.py)

**Dependencies that are not publicly available:**

@@ -251,7 +194,7 @@ We can introduce a coupling term in a dual Cartesian DMP to constrain the

relative position, orientation, or pose of two end-effectors of a dual-arm

robot.

-Scripts: [Open3D](examples/external_dependencies/vis_cartesian_dual_dmp.py), [PyBullet](examples/external_dependencies/sim_cartesian_dual_dmp.py)

+Scripts: [Open3D](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_cartesian_dual_dmp.py), [PyBullet](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/sim_cartesian_dual_dmp.py)
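For the relative-position case, such a coupling term can be sketched as a spring-damper on the offset between the two end-effectors: with the deviation `e = (p2 - p1) - d` from a desired offset `d`, the first arm receives `C1 = k * e + c * de/dt` and the second `C2 = -C1`. The snippet below simulates only these coupling accelerations (no DMP attractors) to show that the offset converges while the midpoint stays fixed. Gains, function names, and this exact form are assumptions for illustration, not the implementation of the coupling-term classes in `movement_primitives.dmp`.

```python
def relative_position_coupling(p1, v1, p2, v2, desired_offset,
                               stiffness=100.0, damping=20.0):
    """Equal and opposite accelerations that pull p2 - p1 toward desired_offset."""
    error = [b - a - d for a, b, d in zip(p1, p2, desired_offset)]
    error_rate = [vb - va for va, vb in zip(v1, v2)]
    c1 = [stiffness * e + damping * ed for e, ed in zip(error, error_rate)]
    c2 = [-x for x in c1]
    return c1, c2


def simulate_coupling_only(p1, p2, desired_offset, dt=0.001, duration=2.0):
    """Integrate two 3D points that are driven only by the coupling term."""
    v1, v2 = [0.0] * 3, [0.0] * 3
    for _ in range(int(round(duration / dt))):
        a1, a2 = relative_position_coupling(p1, v1, p2, v2, desired_offset)
        v1 = [v + dt * a for v, a in zip(v1, a1)]
        v2 = [v + dt * a for v, a in zip(v2, a2)]
        p1 = [p + dt * v for p, v in zip(p1, v1)]
        p2 = [p + dt * v for p, v in zip(p2, v2)]
    return p1, p2


left, right = simulate_coupling_only([0.0, 0.0, 0.0], [3.0, 0.0, 0.0],
                                     desired_offset=[1.0, 0.0, 0.0])
```

In a dual-arm DMP, these accelerations would be added to each arm's transformation system, so the DMPs keep tracking their goals while the coupling enforces the relative constraint.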

**Dependencies that are not publicly available:**

@@ -271,7 +214,7 @@ Scripts: [Open3D](examples/external_dependencies/vis_cartesian_dual_dmp.py), [Py

If we have a distribution over DMP parameters, we can propagate them to state

space through an unscented transform.

-[Script](examples/external_dependencies/vis_dmp_to_state_variance.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_dmp_to_state_variance.py)

**Dependencies that are not publicly available:**

@@ -286,6 +229,165 @@ space through an unscented transform.

git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git

```
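The unscented transform itself is compact. The sketch below propagates a Gaussian `N(mean, cov)` through an arbitrary function `f` using `2n + 1` sigma points built from a Cholesky factor of the covariance, with the classic `kappa` weighting. In the use case above, `f` would map DMP weights to a state along the trajectory. All names and the choice `kappa = 1` are assumptions; the library may use a different sigma point scheme.

```python
import math


def cholesky(P):
    """Lower-triangular L with P = L L^T (P symmetric positive definite)."""
    n = len(P)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(P[i][i] - s)
            else:
                L[i][j] = (P[i][j] - s) / L[j][j]
    return L


def unscented_transform(f, mean, cov, kappa=1.0):
    """Approximate mean and covariance of f(x) for x ~ N(mean, cov)."""
    n = len(mean)
    L = cholesky(cov)
    scale = math.sqrt(n + kappa)
    points = [list(mean)]
    for i in range(n):
        column = [L[r][i] for r in range(n)]
        points.append([m + scale * c for m, c in zip(mean, column)])
        points.append([m - scale * c for m, c in zip(mean, column)])
    weights = [kappa / (n + kappa)] + [1.0 / (2.0 * (n + kappa))] * (2 * n)
    outputs = [f(p) for p in points]
    m = len(outputs[0])
    y_mean = [sum(w * y[k] for w, y in zip(weights, outputs)) for k in range(m)]
    y_cov = [[sum(w * (y[a] - y_mean[a]) * (y[b] - y_mean[b])
                  for w, y in zip(weights, outputs))
              for b in range(m)] for a in range(m)]
    return y_mean, y_cov


def f(x):
    """A linear map, for which the unscented transform is exact."""
    return [2.0 * x[0] + x[1] + 1.0, x[1] - 3.0]


y_mean, y_cov = unscented_transform(f, [1.0, 2.0], [[1.0, 0.5], [0.5, 2.0]])
```

For a linear `f` the result equals `A mean + b` and `A cov A^T`, which makes a convenient sanity check; for nonlinear maps it is an approximation.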

+## Build API Documentation

+

+You can build the API documentation with [Sphinx](https://www.sphinx-doc.org/).

+You can install all dependencies with

+

+```bash

+python -m pip install movement_primitives[doc]

+```

+

+... and build the documentation from the folder `doc/` with

+

+```bash

+make html

+```

+

+It will be located at `doc/build/html/index.html`.

+

+## Test

+

+To run the tests, some Python libraries are required:

+

+```bash

+python -m pip install -e .[test]

+```

+

+The tests are located in the folder `test/` and can be executed with:

+`python -m nose test`

+

+This command searches for all files whose names contain `test` and executes the functions whose names start with `test_`.

+

+## Contributing

+

+You can report bugs in the [issue tracker](https://github.com/dfki-ric/movement_primitives/issues).

+If you have questions about the software, please use the [discussions

+section](https://github.com/dfki-ric/movement_primitives/discussions).

+To add new features or documentation, or to fix bugs, you can open a pull

+request on [GitHub](https://github.com/dfki-ric/movement_primitives). Directly

+pushing to the main branch is not allowed.

+

+The recommended workflow to add a new feature, add documentation, or fix a bug

+is the following:

+

+* Push your changes to a branch (e.g., feature/x, doc/y, or fix/z) of your fork

+ of the repository.

+* Open a pull request to the main branch of the main repository.

+

+This is a checklist for new features:

+

+- are there unit tests?

+- does it have docstrings?

+- is it included in the API documentation?

+- did you run flake8 and pylint?

+- should it be part of the readme?

+- should it be included in any example script?

+

+## Non-public Extensions

+

+Scripts from the subfolder `examples/external_dependencies/` require access to

+git repositories (URDF files or optional dependencies) and datasets that are

+not publicly available. They are available on request (email

+alexander.fabisch@dfki.de).

+

+Note that the library itself does not have any non-public dependencies! These

+repositories and datasets are only required to run all examples.

+

+### MoCap Library

+

+```bash

+# untested: pip install git+https://git.hb.dfki.de/dfki-interaction/mocap.git

+git clone git@git.hb.dfki.de:dfki-interaction/mocap.git

+cd mocap

+python -m pip install -e .

+cd ..

+```

+

+### Get URDFs

+

+```bash

+# RH5

+git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive

+# RH5v2

+git clone git@git.hb.dfki.de:models-robots/rh5v2_models/pybullet-urdf.git --recursive

+# Kuka

+git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git

+# Solar panel

+git clone git@git.hb.dfki.de:models-objects/solar_panels.git

+# RH5 Gripper

+git clone git@git.hb.dfki.de:motto/abstract-urdf-gripper.git --recursive

+```

+

+### Data

+

+Most scripts assume that your data is located in the folder `data/`.

+You should put a symlink there that points to your actual data folder.

+

+## Related Publications

+

+This library implements several types of dynamical movement primitives and

+probabilistic movement primitives. These are described in detail in the

+following papers.

+

+[1] Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., Schaal, S. (2013).

+ Dynamical Movement Primitives: Learning Attractor Models for Motor

+ Behaviors, Neural Computation 25 (2), 328-373. DOI: 10.1162/NECO_a_00393,

+ https://homes.cs.washington.edu/~todorov/courses/amath579/reading/DynamicPrimitives.pdf

+

+[2] Pastor, P., Hoffmann, H., Asfour, T., Schaal, S. (2009).

+ Learning and Generalization of Motor Skills by Learning from Demonstration.

+ In 2009 IEEE International Conference on Robotics and Automation,

+ (pp. 763-768). DOI: 10.1109/ROBOT.2009.5152385,

+ https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

+

+[3] Muelling, K., Kober, J., Kroemer, O., Peters, J. (2013).

+ Learning to Select and Generalize Striking Movements in Robot Table Tennis.

+ International Journal of Robotics Research 32 (3), 263-279.

+ https://www.ias.informatik.tu-darmstadt.de/uploads/Publications/Muelling_IJRR_2013.pdf

+

+[4] Ude, A., Nemec, B., Petric, T., Morimoto, J. (2014).

+ Orientation in Cartesian space dynamic movement primitives.

+ In IEEE International Conference on Robotics and Automation (ICRA)

+ (pp. 2997-3004). DOI: 10.1109/ICRA.2014.6907291,

+ https://acat-project.eu/modules/BibtexModule/uploads/PDF/udenemecpetric2014.pdf

+

+[5] Gams, A., Nemec, B., Zlajpah, L., Wächter, M., Asfour, T., Ude, A. (2013).

+ Modulation of Motor Primitives using Force Feedback: Interaction with

+ the Environment and Bimanual Tasks. In 2013 IEEE/RSJ International

+ Conference on Intelligent Robots and Systems (pp. 5629-5635). DOI:

+ 10.1109/IROS.2013.6697172,

+ https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

+

+[6] Vidakovic, J., Jerbic, B., Sekoranja, B., Svaco, M., Suligoj, F. (2019).

+ Task Dependent Trajectory Learning from Multiple Demonstrations Using

+ Movement Primitives.

+ In International Conference on Robotics in Alpe-Adria Danube Region (RAAD)

+ (pp. 275-282). DOI: 10.1007/978-3-030-19648-6_32,

+ https://link.springer.com/chapter/10.1007/978-3-030-19648-6_32

+

+[7] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2013).

+ Probabilistic movement primitives. In C. J. Burges, L. Bottou, M.

+ Welling, Z. Ghahramani, and K. Q. Weinberger (Eds.), Advances in Neural

+ Information Processing Systems, 26,

+ https://papers.nips.cc/paper/2013/file/e53a0a2978c28872a4505bdb51db06dc-Paper.pdf

+

+[8] Maeda, G. J., Neumann, G., Ewerton, M., Lioutikov, R., Kroemer, O.,

+ Peters, J. (2017). Probabilistic movement primitives for coordination of

+ multiple human–robot collaborative tasks. Autonomous Robots, 41, 593-612.

+ DOI: 10.1007/s10514-016-9556-2,

+ https://link.springer.com/article/10.1007/s10514-016-9556-2

+

+[9] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2018).

+ Using probabilistic movement primitives in robotics. Autonomous Robots, 42,

+ 529-551. DOI: 10.1007/s10514-017-9648-7,

+ https://www.ias.informatik.tu-darmstadt.de/uploads/Team/AlexandrosParaschos/promps_auro.pdf

+

+[10] Lazaric, A., Ghavamzadeh, M. (2010).

+ Bayesian Multi-Task Reinforcement Learning. In Proceedings of the 27th

+ International Conference on Machine Learning (ICML'10) (pp. 599-606).

+ https://hal.inria.fr/inria-00475214/document

+

## Funding

This library has been developed initially at the

diff --git a/doc/Makefile b/doc/Makefile

new file mode 100644

index 0000000..d0c3cbf

--- /dev/null

+++ b/doc/Makefile

@@ -0,0 +1,20 @@

+# Minimal makefile for Sphinx documentation

+#

+

+# You can set these variables from the command line, and also

+# from the environment for the first two.

+SPHINXOPTS ?=

+SPHINXBUILD ?= sphinx-build

+SOURCEDIR = source

+BUILDDIR = build

+

+# Put it first so that "make" without argument is like "make help".

+help:

+ @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

+

+.PHONY: help Makefile

+

+# Catch-all target: route all unknown targets to Sphinx using the new

+# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

+%: Makefile

+ @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

diff --git a/doc/make.bat b/doc/make.bat

new file mode 100644

index 0000000..747ffb7

--- /dev/null

+++ b/doc/make.bat

@@ -0,0 +1,35 @@

+@ECHO OFF

+

+pushd %~dp0

+

+REM Command file for Sphinx documentation

+

+if "%SPHINXBUILD%" == "" (

+ set SPHINXBUILD=sphinx-build

+)

+set SOURCEDIR=source

+set BUILDDIR=build

+

+%SPHINXBUILD% >NUL 2>NUL

+if errorlevel 9009 (

+ echo.

+ echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

+ echo.installed, then set the SPHINXBUILD environment variable to point

+ echo.to the full path of the 'sphinx-build' executable. Alternatively you

+ echo.may add the Sphinx directory to PATH.

+ echo.

+ echo.If you don't have Sphinx installed, grab it from

+ echo.https://www.sphinx-doc.org/

+ exit /b 1

+)

+

+if "%1" == "" goto help

+

+%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

+goto end

+

+:help

+%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

+

+:end

+popd

diff --git a/doc/source/_templates/class.rst b/doc/source/_templates/class.rst

new file mode 100644

index 0000000..b29757c

--- /dev/null

+++ b/doc/source/_templates/class.rst

@@ -0,0 +1,32 @@

+{{ fullname | escape | underline}}

+

+.. currentmodule:: {{ module }}

+

+.. autoclass:: {{ objname }}

+ :members:

+ :show-inheritance:

+ :inherited-members:

+

+ {% block methods %}

+ .. automethod:: __init__

+

+ {% if methods %}

+ .. rubric:: {{ _('Methods') }}

+

+ .. autosummary::

+ {% for item in methods %}

+ ~{{ name }}.{{ item }}

+ {%- endfor %}

+ {% endif %}

+ {% endblock %}

+

+ {% block attributes %}

+ {% if attributes %}

+ .. rubric:: {{ _('Attributes') }}

+

+ .. autosummary::

+ {% for item in attributes %}

+ ~{{ name }}.{{ item }}

+ {%- endfor %}

+ {% endif %}

+ {% endblock %}

diff --git a/doc/source/api.rst b/doc/source/api.rst

new file mode 100644

index 0000000..2bf4443

--- /dev/null

+++ b/doc/source/api.rst

@@ -0,0 +1,160 @@

+.. _api:

+

+=================

+API Documentation

+=================

+

+This is the detailed documentation of all public classes and functions.

+You can also search for specific modules, classes, or functions in the

+:ref:`genindex`.

+

+.. contents:: :local:

+ :depth: 1

+

+

+:mod:`movement_primitives.dmp`

+==============================

+

+.. automodule:: movement_primitives.dmp

+

+.. autosummary::

+ :toctree: _apidoc/

+ :template: class.rst

+

+ ~movement_primitives.dmp.DMP

+ ~movement_primitives.dmp.DMPWithFinalVelocity

+ ~movement_primitives.dmp.CartesianDMP

+ ~movement_primitives.dmp.DualCartesianDMP

+ ~movement_primitives.dmp.DMPBase

+ ~movement_primitives.dmp.WeightParametersMixin

+ ~movement_primitives.dmp.CouplingTermPos1DToPos1D

+ ~movement_primitives.dmp.CouplingTermObstacleAvoidance2D

+ ~movement_primitives.dmp.CouplingTermObstacleAvoidance3D

+ ~movement_primitives.dmp.CouplingTermPos3DToPos3D

+ ~movement_primitives.dmp.CouplingTermDualCartesianPose

+ ~movement_primitives.dmp.CouplingTermDualCartesianDistance

+ ~movement_primitives.dmp.CouplingTermDualCartesianTrajectory

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.dmp.dmp_transformation_system

+ ~movement_primitives.dmp.canonical_system_alpha

+ ~movement_primitives.dmp.phase

+ ~movement_primitives.dmp.obstacle_avoidance_acceleration_2d

+ ~movement_primitives.dmp.obstacle_avoidance_acceleration_3d

+

+

+:mod:`movement_primitives.promp`

+================================

+

+.. automodule:: movement_primitives.promp

+

+.. autosummary::

+ :toctree: _apidoc/

+ :template: class.rst

+

+ ~movement_primitives.promp.ProMP

+

+

+:mod:`movement_primitives.io`

+=============================

+

+.. automodule:: movement_primitives.io

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.io.write_pickle

+ ~movement_primitives.io.read_pickle

+ ~movement_primitives.io.write_yaml

+ ~movement_primitives.io.read_yaml

+ ~movement_primitives.io.write_json

+ ~movement_primitives.io.read_json

+

+

+:mod:`movement_primitives.base`

+===============================

+

+.. automodule:: movement_primitives.base

+

+.. autosummary::

+ :toctree: _apidoc/

+ :template: class.rst

+

+ ~movement_primitives.base.PointToPointMovement

+

+

+:mod:`movement_primitives.data`

+===============================

+

+.. automodule:: movement_primitives.data

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.data.load_lasa

+ ~movement_primitives.data.generate_minimum_jerk

+ ~movement_primitives.data.generate_1d_trajectory_distribution

+

+

+:mod:`movement_primitives.kinematics`

+=====================================

+

+.. automodule:: movement_primitives.kinematics

+

+.. autosummary::

+ :toctree: _apidoc/

+ :template: class.rst

+

+ ~movement_primitives.kinematics.Kinematics

+ ~movement_primitives.kinematics.Chain

+

+

+:mod:`movement_primitives.plot`

+===============================

+

+.. automodule:: movement_primitives.plot

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.plot.plot_trajectory_in_rows

+ ~movement_primitives.plot.plot_distribution_in_rows

+

+

+:mod:`movement_primitives.visualization`

+========================================

+

+.. automodule:: movement_primitives.visualization

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.visualization.plot_pointcloud

+ ~movement_primitives.visualization.to_ellipsoid

+

+

+:mod:`movement_primitives.dmp_potential_field`

+==============================================

+

+.. automodule:: movement_primitives.dmp_potential_field

+

+.. autosummary::

+ :toctree: _apidoc/

+

+ ~movement_primitives.dmp_potential_field.plot_potential_field_2d

+

+

+:mod:`movement_primitives.spring_damper`

+========================================

+

+.. automodule:: movement_primitives.spring_damper

+

+.. autosummary::

+ :toctree: _apidoc/

+ :template: class.rst

+

+ ~movement_primitives.spring_damper.SpringDamper

+ ~movement_primitives.spring_damper.SpringDamperOrientation

diff --git a/doc/source/conf.py b/doc/source/conf.py

new file mode 100644

index 0000000..8d7930d

--- /dev/null

+++ b/doc/source/conf.py

@@ -0,0 +1,93 @@

+# Configuration file for the Sphinx documentation builder.

+#

+# This file only contains a selection of the most common options. For a full

+# list see the documentation:

+# https://www.sphinx-doc.org/en/master/usage/configuration.html

+

+# -- Path setup --------------------------------------------------------------

+

+# If extensions (or modules to document with autodoc) are in another directory,

+# add these directories to sys.path here. If the directory is relative to the

+# documentation root, use os.path.abspath to make it absolute, like shown here.

+#

+import os

+import sys

+import time

+import sphinx_bootstrap_theme

+

+

+sys.path.insert(0, os.path.abspath('.'))

+sys.path.insert(0, os.path.abspath('../..'))

+

+

+# -- Project information -----------------------------------------------------

+

+project = "movement_primitives"

+copyright = "2020-{}, Alexander Fabisch, DFKI GmbH, Robotics Innovation Center".format(time.strftime("%Y"))

+author = "Alexander Fabisch"

+

+# The full version, including alpha/beta/rc tags

+release = __import__(project).__version__

+

+

+# -- General configuration ---------------------------------------------------

+

+# Add any Sphinx extension module names here, as strings. They can be

+# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

+# ones.

+extensions = [

+ "numpydoc",

+ "myst_parser",

+ "sphinx.ext.autodoc",

+ "sphinx.ext.autosummary",

+ "sphinx.ext.intersphinx",

+]

+

+# Add any paths that contain templates here, relative to this directory.

+templates_path = ['_templates']

+

+# List of patterns, relative to source directory, that match files and

+# directories to ignore when looking for source files.

+# This pattern also affects html_static_path and html_extra_path.

+exclude_patterns = []

+

+autosummary_generate = True

+

+

+# -- Options for HTML output -------------------------------------------------

+

+# The theme to use for HTML and HTML Help pages. See the documentation for

+# a list of builtin themes.

+#

+html_theme = "bootstrap"

+html_theme_path = sphinx_bootstrap_theme.get_html_theme_path()

+html_theme_options = {

+ "bootswatch_theme": "readable",

+ "navbar_sidebarrel": False,

+ "bootstrap_version": "3",

+ "nosidebar": True,

+ "body_max_width": "90%",

+ "navbar_links": [

+ ("Home", "index"),

+ ("API", "api"),

+ ],

+}

+

+root_doc = "index"

+source_suffix = {

+ ".rst": "restructuredtext",

+ ".md": "markdown",

+}

+

+# Add any paths that contain custom static files (such as style sheets) here,

+# relative to this directory. They are copied after the builtin static files,

+# so a file named "default.css" will overwrite the builtin "default.css".

+html_static_path = ["_static"]

+html_show_sourcelink = False

+html_show_sphinx = False

+

+intersphinx_mapping = {

+ 'python': ('https://docs.python.org/{.major}'.format(sys.version_info), None),

+ 'numpy': ('https://numpy.org/doc/stable', None),

+ 'scipy': ('https://docs.scipy.org/doc/scipy/reference', None),

+ 'matplotlib': ('https://matplotlib.org/', None)

+}

diff --git a/doc/source/index.md b/doc/source/index.md

new file mode 120000

index 0000000..fe84005

--- /dev/null

+++ b/doc/source/index.md

@@ -0,0 +1 @@

+../../README.md

\ No newline at end of file

diff --git a/movement_primitives/base.py b/movement_primitives/base.py

index ec3070e..17c4e73 100644

--- a/movement_primitives/base.py

+++ b/movement_primitives/base.py

@@ -1,4 +1,7 @@

-"""Base classes of movement primitives."""

+"""Base Classes

+============

+

+Base classes of movement primitives."""

import abc

import numpy as np

@@ -13,6 +16,44 @@ class PointToPointMovement(abc.ABC):

n_vel_dims : int

Number of dimensions of the velocity that will be controlled.

+

+ Attributes

+ ----------

+ n_dims : int

+ Number of state space dimensions.

+

+ n_vel_dims : int

+ Number of velocity dimensions.

+

+ t : float

+ Current time.

+

+ last_t : float

+ Time during last step.

+

+ start_y : array, shape (n_dims,)

+ Initial state.

+

+ start_yd : array, shape (n_vel_dims,)

+ Initial velocity.

+

+ start_ydd : array, shape (n_vel_dims,)

+ Initial acceleration.

+

+ goal_y : array, shape (n_dims,)

+ Goal state.

+

+ goal_yd : array, shape (n_vel_dims,)

+ Goal velocity.

+

+ goal_ydd : array, shape (n_vel_dims,)

+ Goal acceleration.

+

+ current_y : array, shape (n_dims,)

+ Current state.

+

+ current_yd : array, shape (n_vel_dims,)

+ Current velocity.

"""

def __init__(self, n_pos_dims, n_vel_dims):

self.n_dims = n_pos_dims

@@ -40,25 +81,25 @@ def configure(

Parameters

----------

t : float, optional

- Time at current step

+ Time at current step.

start_y : array, shape (n_dims,)

- Initial state

+ Initial state.

start_yd : array, shape (n_vel_dims,)

- Initial velocity

+ Initial velocity.

start_ydd : array, shape (n_vel_dims,)

- Initial acceleration

+ Initial acceleration.

goal_y : array, shape (n_dims,)

- Goal state

+ Goal state.

goal_yd : array, shape (n_vel_dims,)

- Goal velocity

+ Goal velocity.

goal_ydd : array, shape (n_vel_dims,)

- Goal acceleration

+ Goal acceleration.

"""

if t is not None:

self.t = t

diff --git a/movement_primitives/data/__init__.py b/movement_primitives/data/__init__.py

index 6d84bdb..ffcc7f2 100644

--- a/movement_primitives/data/__init__.py

+++ b/movement_primitives/data/__init__.py

@@ -1,4 +1,8 @@

-"""Tools for loading datasets."""

+"""Data

+====

+

+Tools for loading datasets.

+"""

from ._lasa import load_lasa

from ._minimum_jerk import generate_minimum_jerk

from ._toy_1d import generate_1d_trajectory_distribution

diff --git a/movement_primitives/dmp/__init__.py b/movement_primitives/dmp/__init__.py

index 2d7a3d7..12c58bd 100644

--- a/movement_primitives/dmp/__init__.py

+++ b/movement_primitives/dmp/__init__.py

@@ -1,4 +1,25 @@

-"""Dynamical movement primitive variants."""

+r"""Dynamical Movement Primitive (DMP)

+==================================

+

+This module provides implementations of various DMP types. DMPs consist of

+a goal-directed movement generated by the transformation system and a forcing

+term that defines the shape of the trajectory. They are time-dependent and

+usually converge to the goal after a constant execution time.

+

+Every implementation is slightly different, but we use a similar notation for

+all of them:

+

+* :math:`y,\dot{y},\ddot{y}` - position, velocity, and acceleration; these

+ might be translation components only, orientation, or both

+* :math:`y_0` - start position

+* :math:`g` - goal of the movement (attractor of the DMP)

+* :math:`\tau` - execution time

+* :math:`z` - phase variable, starts at 1 and converges to 0

+* :math:`f(z)` - forcing term, learned component that defines the shape of the

+ movement

+* :math:`C_t` - coupling term that is added to the acceleration of the DMP

+"""

+from ._base import DMPBase, WeightParametersMixin

from ._dmp import DMP, dmp_transformation_system

from ._dmp_with_final_velocity import DMPWithFinalVelocity

from ._cartesian_dmp import CartesianDMP
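The phase variable and forcing term from the notation in the module docstring above can be sketched as follows. Assuming the common exponential canonical system `z(t) = exp(-alpha_z * t / tau)` of the classic formulation by Ijspeert et al., choosing `alpha_z = -ln(z_goal)` makes the phase reach `z_goal` exactly at `t = tau`. A forcing term that is a normalized mixture of Gaussian basis functions scaled by `z * (g - y_0)` then fades out as `z` approaches 0, so the transformation system alone pulls the movement to `g`. The function names, basis placement, and width are illustrative assumptions, not the module's `canonical_system_alpha` or `phase` functions.

```python
import math


def canonical_alpha(goal_z):
    """Decay constant so that z(t) = exp(-alpha * t / tau) reaches goal_z at t = tau."""
    if not 0.0 < goal_z < 1.0:
        raise ValueError("goal_z must lie in (0, 1)")
    return -math.log(goal_z)


def phase(t, alpha, tau):
    """Phase variable: 1 at t = 0, decaying monotonically toward 0."""
    return math.exp(-alpha * t / tau)


def forcing_term(z, weights, alpha, y0, goal, width=100.0):
    """Normalized RBF mixture in phase space, scaled by z * (goal - y0).

    Basis centers are placed at the phase values of equally spaced times.
    """
    n = len(weights)
    centers = [math.exp(-alpha * i / (n - 1)) for i in range(n)]
    psi = [math.exp(-width * (z - c) ** 2) for c in centers]
    return z * (goal - y0) * sum(p * w for p, w in zip(psi, weights)) / sum(psi)


alpha = canonical_alpha(0.01)
tau = 2.0
for t in (0.0, 1.0, 2.0):
    z = phase(t, alpha, tau)
    print(t, round(z, 4), forcing_term(z, [5.0, -3.0, 8.0, 1.0], alpha, 0.0, 1.0))
```

Since the normalized mixture is a weighted average of the weights, the forcing term is bounded by `z * |g - y_0| * max|w_i|`, which shrinks with the phase.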

@@ -14,6 +35,7 @@

__all__ = [

+ "DMPBase", "WeightParametersMixin",

"DMP", "dmp_transformation_system", "DMPWithFinalVelocity", "CartesianDMP",

"DualCartesianDMP", "CouplingTermPos1DToPos1D",

"CouplingTermObstacleAvoidance2D", "CouplingTermObstacleAvoidance3D",

diff --git a/movement_primitives/dmp/_base.py b/movement_primitives/dmp/_base.py

index a9eed67..da883af 100644

--- a/movement_primitives/dmp/_base.py

+++ b/movement_primitives/dmp/_base.py

@@ -3,7 +3,16 @@

class DMPBase(PointToPointMovement):

- """Base class of Dynamical Movement Primitives (DMPs)."""

+ """Base class of Dynamical Movement Primitives (DMPs).

+

+ Parameters

+ ----------

+ n_pos_dims : int

+ Number of dimensions of the position that will be controlled.

+

+ n_vel_dims : int

+ Number of dimensions of the velocity that will be controlled.

+ """

def __init__(self, n_pos_dims, n_vel_dims):

super(DMPBase, self).__init__(n_pos_dims, n_vel_dims)

@@ -18,7 +27,12 @@ def reset(self):

class WeightParametersMixin:

- """Mixin class providing common access methods to forcing term weights."""

+ """Mixin class providing common access methods to forcing term weights.

+

+ This can be used, for instance, for black-box optimization of the weights

+ with respect to some cost / objective function in a reinforcement learning

+ setting.

+ """

def get_weights(self):

"""Get weight vector of DMP.

diff --git a/movement_primitives/dmp/_cartesian_dmp.py b/movement_primitives/dmp/_cartesian_dmp.py

index 701badf..20dd73f 100644

--- a/movement_primitives/dmp/_cartesian_dmp.py

+++ b/movement_primitives/dmp/_cartesian_dmp.py

@@ -151,29 +151,35 @@ def dmp_step_quaternion_python(

class CartesianDMP(DMPBase):

- """Cartesian dynamical movement primitive.

+ r"""Cartesian dynamical movement primitive.

The Cartesian DMP handles orientation and position separately. The

- orientation is represented by a quaternion. The quaternion DMP is

- implemented according to

+ orientation is represented by a quaternion.

- A. Ude, B. Nemec, T. Petric, J. Murimoto:

- Orientation in Cartesian space dynamic movement primitives (2014),

- IEEE International Conference on Robotics and Automation (ICRA),

- pp. 2997-3004, doi: 10.1109/ICRA.2014.6907291,

- https://ieeexplore.ieee.org/document/6907291,

- https://acat-project.eu/modules/BibtexModule/uploads/PDF/udenemecpetric2014.pdf

+ The state space is 7-dimensional (3D position and unit quaternion), while

+ the velocity, acceleration, and forcing term are 6-dimensional (3D linear

+ and 3D angular components).

- (if smooth scaling is activated) with modification of scaling proposed by

+ Equation of transformation system for the orientation (according to [1]_,

+ Eq. 16):

- P. Pastor, H. Hoffmann, T. Asfour, S. Schaal:

- Learning and Generalization of Motor Skills by Learning from Demonstration,

- 2009 IEEE International Conference on Robotics and Automation,

- Kobe, Japan, 2009, pp. 763-768, doi: 10.1109/ROBOT.2009.5152385,

- https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

+ .. math::

- While the dimension of the state space is 7, the dimension of the

- velocity, acceleration, and forcing term is 6.

+ \ddot{y} = (\alpha_y (\beta_y (g - y) - \tau \dot{y}) + f(z) + C_t) / \tau^2

+

+ Note that in this case :math:`y` is a quaternion,

+ :math:`g - y` is the quaternion difference (expressed as a rotation vector),

+ :math:`\dot{y}` is the angular velocity, and :math:`\ddot{y}` the

+ angular acceleration.

+

+ With smooth scaling (according to [2]_):

+

+ .. math::

+

+ \ddot{y} = (\alpha_y (\beta_y (g - y) - \tau \dot{y}

+ - \underline{\beta_y (g - y_0) z}) + f(z) + C_t) / \tau^2

+

+ The position is handled just like in the original :class:`DMP`.

Parameters

----------

@@ -202,6 +208,20 @@ class CartesianDMP(DMPBase):

dt_ : float

Time difference between DMP steps. This value can be changed to adapt

the frequency.

+

+ References

+ ----------

+ .. [1] Ude, A., Nemec, B., Petric, T., Morimoto, J. (2014).

+ Orientation in Cartesian space dynamic movement primitives.

+ In IEEE International Conference on Robotics and Automation (ICRA)

+ (pp. 2997-3004). DOI: 10.1109/ICRA.2014.6907291,

+ https://acat-project.eu/modules/BibtexModule/uploads/PDF/udenemecpetric2014.pdf

+

+ .. [2] Pastor, P., Hoffmann, H., Asfour, T., Schaal, S. (2009). Learning

+ and Generalization of Motor Skills by Learning from Demonstration.

+ In 2009 IEEE International Conference on Robotics and Automation

+ (pp. 763-768). DOI: 10.1109/ROBOT.2009.5152385,

+ https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

"""

def __init__(

self, execution_time=1.0, dt=0.01, n_weights_per_dim=10,
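The orientation part of the CartesianDMP transformation system can be illustrated with a small NumPy sketch. This is our own illustration, not this library's implementation: it assumes quaternions in (w, x, y, z) order, computes "g - y" as the rotation vector of g * conj(y), sets f(z) and C_t to zero, and integrates the angular velocity with Euler steps through the quaternion exponential. The helper names and the gains alpha_y = 25, beta_y = 6.25 are hypothetical choices.

```python
import numpy as np


def quat_mul(p, q):
    """Hamilton product of two quaternions in (w, x, y, z) order."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def quat_diff_rotvec(g, y):
    """Rotation vector of g * conj(y), used here as 'g - y'."""
    d = quat_mul(g, y * np.array([1.0, -1.0, -1.0, -1.0]))
    if d[0] < 0.0:  # q and -q are the same rotation; take the shorter one
        d = -d
    v_norm = np.linalg.norm(d[1:])
    if v_norm < 1e-12:
        return np.zeros(3)
    angle = 2.0 * np.arctan2(v_norm, d[0])
    return angle * d[1:] / v_norm


def rotvec_to_quat(r):
    """Unit quaternion for a rotation of |r| radians about r / |r|."""
    angle = np.linalg.norm(r)
    if angle < 1e-12:
        return np.array([1.0, 0.0, 0.0, 0.0])
    return np.concatenate(([np.cos(0.5 * angle)],
                           np.sin(0.5 * angle) * r / angle))


def integrate_orientation(q0, g, tau=1.0, dt=0.001, alpha_y=25.0, beta_y=6.25):
    """Euler integration of the orientation system with f(z) = 0, C_t = 0."""
    q = np.asarray(q0, dtype=float)
    omega = np.zeros(3)  # angular velocity (world frame)
    for _ in range(int(round(tau / dt))):
        omega_dot = alpha_y * (beta_y * quat_diff_rotvec(g, q)
                               - tau * omega) / tau ** 2
        omega = omega + dt * omega_dot
        # Apply the incremental rotation omega * dt from the left (world frame)
        q = quat_mul(rotvec_to_quat(dt * omega), q)
        q = q / np.linalg.norm(q)  # counter numerical drift
    return q


q_start = np.array([1.0, 0.0, 0.0, 0.0])
q_goal = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])  # 90 deg about z
q_end = integrate_orientation(q_start, q_goal)
residual_angle = np.linalg.norm(quat_diff_rotvec(q_goal, q_end))
```

Left-multiplying by exp(omega * dt) treats omega as a world-frame angular velocity, which matches computing the difference as g * conj(y); with body-frame angular velocities, both products would have to be flipped.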

diff --git a/movement_primitives/dmp/_coupling_terms.py b/movement_primitives/dmp/_coupling_terms.py

index 1dd4d40..cdf5085 100644

--- a/movement_primitives/dmp/_coupling_terms.py

+++ b/movement_primitives/dmp/_coupling_terms.py

@@ -65,8 +65,25 @@ def obstacle_avoidance_acceleration_2d(

return np.squeeze(cdd)

-class CouplingTermObstacleAvoidance2D: # for DMP

- """Coupling term for obstacle avoidance in 2D."""

+class CouplingTermObstacleAvoidance2D:

+ """Coupling term for obstacle avoidance in 2D.

+

+ For :class:`DMP` and :class:`DMPWithFinalVelocity`.

+

+ This is the simplified 2D implementation of

+ :class:`CouplingTermObstacleAvoidance3D`.

+

+ Parameters

+ ----------

+ obstacle_position : array, shape (2,)

+ Position of the point obstacle.

+

+ gamma : float, optional (default: 1000)

+ Gain of the coupling term.

+

+ beta : float, optional (default: 20 / pi)

+ Decay rate of the coupling term with respect to the angle between the

+ current velocity and the direction to the obstacle.

+ """

def __init__(self, obstacle_position, gamma=1000.0, beta=20.0 / math.pi,

fast=False):

self.obstacle_position = obstacle_position

@@ -78,19 +95,101 @@ def __init__(self, obstacle_position, gamma=1000.0, beta=20.0 / math.pi,

self.step_function = obstacle_avoidance_acceleration_2d

def coupling(self, y, yd):

+ """Computes coupling term based on current state.

+

+ Parameters

+ ----------

+ y : array, shape (n_dims,)

+ Current position.

+

+ yd : array, shape (n_dims,)

+ Current velocity.

+

+ Returns

+ -------

+ cd : array, shape (n_dims,)

+ Velocity. 0 for this coupling term.

+

+ cdd : array, shape (n_dims,)

+ Acceleration.

+ """

cdd = self.step_function(

y, yd, self.obstacle_position, self.gamma, self.beta)

return np.zeros_like(cdd), cdd

class CouplingTermObstacleAvoidance3D: # for DMP

- """Coupling term for obstacle avoidance in 3D."""

+ r"""Coupling term for obstacle avoidance in 3D.

+

+ For :class:`DMP` and :class:`DMPWithFinalVelocity`.

+

+ Following [1]_, this coupling term adds an acceleration

+

+ .. math::

+

+ \boldsymbol{C}_t = \gamma \boldsymbol{R} \dot{\boldsymbol{y}}

+ \theta \exp(-\beta \theta),

+

+ where

+

+ .. math::

+

+ \theta = \arccos\left( \frac{(\boldsymbol{o} - \boldsymbol{y})^T

+ \dot{\boldsymbol{y}}}{|\boldsymbol{o} - \boldsymbol{y}|

+ |\dot{\boldsymbol{y}}|} \right)

+

+ :math:`\boldsymbol{o}` is the position of the obstacle, and

+ :math:`\boldsymbol{R}` is the rotation matrix that rotates by 90 degrees

+ about the axis :math:`\boldsymbol{r} =

+ (\boldsymbol{o} - \boldsymbol{y}) \times \dot{\boldsymbol{y}}`.

+

+ Intuitively, this coupling term adds a movement perpendicular to the

+ current velocity in the plane defined by the direction to the obstacle and

+ the current movement direction.

+

+ Parameters

+ ----------

+ obstacle_position : array, shape (3,)

+ Position of the point obstacle.

+

+ gamma : float, optional (default: 1000)

+ Gain :math:`\gamma` of the coupling term.

+

+ beta : float, optional (default: 20 / pi)

+ Rate :math:`\beta` at which the coupling term decays with :math:`\theta`.

+

+ References

+ ----------

+ .. [1] Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., Schaal, S.

+ (2013). Dynamical Movement Primitives: Learning Attractor Models for

+ Motor Behaviors. Neural Computation 25 (2), 328-373. DOI:

+ 10.1162/NECO_a_00393,

+ https://homes.cs.washington.edu/~todorov/courses/amath579/reading/DynamicPrimitives.pdf

+ """

def __init__(self, obstacle_position, gamma=1000.0, beta=20.0 / math.pi):

self.obstacle_position = obstacle_position

self.gamma = gamma

self.beta = beta

def coupling(self, y, yd):

+ """Computes coupling term based on current state.

+

+ Parameters

+ ----------

+ y : array, shape (n_dims,)

+ Current position.

+

+ yd : array, shape (n_dims,)

+ Current velocity.

+

+ Returns

+ -------

+ cd : array, shape (n_dims,)

+ Velocity. 0 for this coupling term.

+

+ cdd : array, shape (n_dims,)

+ Acceleration.

+ """

cdd = obstacle_avoidance_acceleration_3d(

y, yd, self.obstacle_position, self.gamma, self.beta)

return np.zeros_like(cdd), cdd
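The 3D obstacle avoidance coupling term can be evaluated directly from the formulas in the docstring above. This NumPy function is our own sketch, not the library's `obstacle_avoidance_acceleration_3d`: it builds R with Rodrigues' formula for a 90 degree rotation about the normalized axis r, and it returns zero when r vanishes (moving straight at or away from the obstacle), which is an assumption about how to treat that degenerate case.

```python
import numpy as np


def obstacle_avoidance_acceleration(y, yd, obstacle_position, gamma=1000.0,
                                    beta=20.0 / np.pi):
    """C_t = gamma * R @ yd * theta * exp(-beta * theta) for a point obstacle."""
    y = np.asarray(y, dtype=float)
    yd = np.asarray(yd, dtype=float)
    to_obstacle = np.asarray(obstacle_position, dtype=float) - y  # o - y
    denom = np.linalg.norm(to_obstacle) * np.linalg.norm(yd)
    r = np.cross(to_obstacle, yd)  # rotation axis (o - y) x yd
    r_norm = np.linalg.norm(r)
    if denom < 1e-12 or r_norm < 1e-12:
        # Not moving, at the obstacle, or moving exactly towards / away from
        # it: the axis is undefined, so this sketch adds no acceleration.
        return np.zeros(3)
    # Angle between the direction to the obstacle and the current velocity
    theta = np.arccos(np.clip(to_obstacle @ yd / denom, -1.0, 1.0))
    u = r / r_norm
    K = np.array([[0.0, -u[2], u[1]],
                  [u[2], 0.0, -u[0]],
                  [-u[1], u[0], 0.0]])
    R = np.eye(3) + K + K @ K  # Rodrigues' formula with sin = 1, cos = 0
    return gamma * (R @ yd) * theta * np.exp(-beta * theta)


# Moving along +x with the obstacle slightly to the +y side
cdd = obstacle_avoidance_acceleration([0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                                      [1.0, 0.1, 0.0])
```

In this example the acceleration points towards -y, perpendicular to the velocity and away from the obstacle, in line with the intuition given in the docstring.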

@@ -135,10 +234,7 @@ def obstacle_avoidance_acceleration_3d(

class CouplingTermPos1DToPos1D:

"""Couples position components of a 2D DMP with a virtual spring.

- A. Gams, B. Nemec, L. Zlajpah, M. Wächter, T. Asfour, A. Ude:

- Modulation of Motor Primitives using Force Feedback: Interaction with

- the Environment and Bimanual Tasks (2013), IROS,

- https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

+ For :class:`DMP` and :class:`DMPWithFinalVelocity`.

Parameters

----------

@@ -164,6 +260,15 @@ class CouplingTermPos1DToPos1D:

c2 : float, optional (default: 30)

Scaling factor for spring forces in the acceleration component.

+

+ References

+ ----------

+ .. [1] Gams, A., Nemec, B., Zlajpah, L., Wächter, M., Asfour, T., Ude, A.

+ (2013). Modulation of Motor Primitives using Force Feedback: Interaction

+ with the Environment and Bimanual Tasks. In 2013 IEEE/RSJ

+ International Conference on Intelligent Robots and Systems (pp.

+ 5629-5635). DOI: 10.1109/IROS.2013.6697172,

+ https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

"""

def __init__(self, desired_distance, lf, k=1.0, c1=100.0, c2=30.0):

self.desired_distance = desired_distance

@@ -183,13 +288,10 @@ def coupling(self, y, yd=None):

return np.array([C12, C21]), np.array([C12dot, C21dot])

-class CouplingTermPos3DToPos3D: # for DMP

+class CouplingTermPos3DToPos3D:

"""Couples position components of a 6D DMP with a virtual spring in 3D.

- A. Gams, B. Nemec, L. Zlajpah, M. Wächter, T. Asfour, A. Ude:

- Modulation of Motor Primitives using Force Feedback: Interaction with

- the Environment and Bimanual Tasks (2013), IROS,

- https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

+ For :class:`DMP` and :class:`DMPWithFinalVelocity`.

Parameters

----------

@@ -215,6 +317,15 @@ class CouplingTermPos3DToPos3D: # for DMP

c2 : float, optional (default: 30)

Scaling factor for spring forces in the acceleration component.

+

+ References

+ ----------

+ .. [1] Gams, A., Nemec, B., Zlajpah, L., Wächter, M., Asfour, T., Ude, A.

+ (2013). Modulation of Motor Primitives using Force Feedback: Interaction

+ with the Environment and Bimanual Tasks. In 2013 IEEE/RSJ

+ International Conference on Intelligent Robots and Systems (pp.

+ 5629-5635). DOI: 10.1109/IROS.2013.6697172,

+ https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

"""

def __init__(self, desired_distance, lf, k=1.0, c1=1.0, c2=30.0):

self.desired_distance = desired_distance

@@ -237,8 +348,36 @@ def coupling(self, y, yd=None):

return np.hstack([C12, C21]), np.hstack([C12dot, C21dot])

-class CouplingTermDualCartesianDistance: # for DualCartesianDMP

- """Couples distance between 3D positions of a dual Cartesian DMP."""

+class CouplingTermDualCartesianDistance:

+ """Couples distance between 3D positions of a dual Cartesian DMP.

+

+ For :class:`DualCartesianDMP`.

+

+ Parameters

+ ----------

+ desired_distance : float

+ Desired distance between components.

+

+ lf : array-like, shape (2,)

+ Binary values that indicate which DMP(s) will be adapted.

+ The variable lf defines the relation leader-follower. If lf[0] = lf[1],

+ then both robots will adapt their trajectories to follow average

+ trajectories at the defined distance dd between them [..]. On the other

+ hand, if lf[0] = 0 and lf[1] = 1, only DMP1 will change the trajectory

+ to match the trajectory of DMP0, again at the distance dd and again

+ only after learning. Vice versa applies as well. Leader-follower

+ relation can be determined by a higher-level planner [..].

+

+ k : float, optional (default: 1)

+ Virtual spring constant that couples the positions.

+

+ c1 : float, optional (default: 1)

+ Scaling factor for spring forces in the velocity component and

+ acceleration component.

+

+ c2 : float, optional (default: 30)

+ Scaling factor for spring forces in the acceleration component.

+ """

def __init__(self, desired_distance, lf, k=1.0, c1=1.0, c2=30.0):

self.desired_distance = desired_distance

self.lf = lf

@@ -260,8 +399,42 @@ def coupling(self, y, yd=None):

np.hstack([C12dot, np.zeros(3), C21dot, np.zeros(3)]))

-class CouplingTermDualCartesianPose: # for DualCartesianDMP

- """Couples relative poses of dual Cartesian DMP."""

+class CouplingTermDualCartesianPose:

+ """Couples relative poses of dual Cartesian DMP.

+

+ For :class:`DualCartesianDMP`.

+

+ Parameters

+ ----------

+ desired_distance : array, shape (4, 4)

+ Desired relative pose between components as a homogeneous transformation matrix.

+

+ lf : array-like, shape (2,)

+ Binary values that indicate which DMP(s) will be adapted.

+ The variable lf defines the relation leader-follower. If lf[0] = lf[1],

+ then both robots will adapt their trajectories to follow average

+ trajectories at the defined distance dd between them [..]. On the other

+ hand, if lf[0] = 0 and lf[1] = 1, only DMP1 will change the trajectory

+ to match the trajectory of DMP0, again at the distance dd and again

+ only after learning. Vice versa applies as well. Leader-follower

+ relation can be determined by a higher-level planner [..].

+

+ couple_position : bool, optional (default: True)

+ Couple position between components.

+

+ couple_orientation : bool, optional (default: True)

+ Couple orientation between components.

+

+ k : float, optional (default: 1)

+ Virtual spring constant that couples the positions.

+

+ c1 : float, optional (default: 1)

+ Scaling factor for spring forces in the velocity component and

+ acceleration component.

+

+ c2 : float, optional (default: 30)

+ Scaling factor for spring forces in the acceleration component.

+ """

def __init__(self, desired_distance, lf, couple_position=True,

couple_orientation=True, k=1.0, c1=1.0, c2=30.0, verbose=0):

self.desired_distance = desired_distance

@@ -352,8 +525,43 @@ def _right2left_pq(self, y):

return right2left_pq

-class CouplingTermDualCartesianTrajectory(CouplingTermDualCartesianPose): # for DualCartesianDMP

- """Couples relative pose in dual Cartesian DMP with a given trajectory."""

+class CouplingTermDualCartesianTrajectory(CouplingTermDualCartesianPose):

+ """Couples relative pose in dual Cartesian DMP with a given trajectory.

+

+ For :class:`DualCartesianDMP`.

+

+ Parameters

+ ----------

+ offset : array, shape (7,)

+ Offset for desired distance between components as position and

+ quaternion.

+

+ lf : array-like, shape (2,)

+ Binary values that indicate which DMP(s) will be adapted.

+ The variable lf defines the relation leader-follower. If lf[0] = lf[1],

+ then both robots will adapt their trajectories to follow average

+ trajectories at the defined distance dd between them [..]. On the other

+ hand, if lf[0] = 0 and lf[1] = 1, only DMP1 will change the trajectory

+ to match the trajectory of DMP0, again at the distance dd and again

+ only after learning. Vice versa applies as well. Leader-follower

+ relation can be determined by a higher-level planner [..].

+

+ couple_position : bool, optional (default: True)

+ Couple position between components.

+

+ couple_orientation : bool, optional (default: True)

+ Couple orientation between components.

+

+ k : float, optional (default: 1)

+ Virtual spring constant that couples the positions.

+

+ c1 : float, optional (default: 1)

+ Scaling factor for spring forces in the velocity component and

+ acceleration component.

+

+ c2 : float, optional (default: 30)

+ Scaling factor for spring forces in the acceleration component.

+ """

def __init__(self, offset, lf, dt, couple_position=True,

couple_orientation=True, k=1.0, c1=1.0, c2=30.0, verbose=1):

self.offset = offset

diff --git a/movement_primitives/dmp/_dmp.py b/movement_primitives/dmp/_dmp.py

index 22697ea..3288d3a 100644

--- a/movement_primitives/dmp/_dmp.py

+++ b/movement_primitives/dmp/_dmp.py

@@ -340,23 +340,19 @@ def dmp_step_euler(

class DMP(WeightParametersMixin, DMPBase):

- """Dynamical movement primitive (DMP).

+ r"""Dynamical movement primitive (DMP).

- Implementation according to

+ Equation of transformation system (according to [1]_, Eq. 2.1):

- A.J. Ijspeert, J. Nakanishi, H. Hoffmann, P. Pastor, S. Schaal:

- Dynamical Movement Primitives: Learning Attractor Models for Motor

- Behaviors (2013), Neural Computation 25(2), pp. 328-373, doi:

- 10.1162/NECO_a_00393, https://ieeexplore.ieee.org/document/6797340,

- https://homes.cs.washington.edu/~todorov/courses/amath579/reading/DynamicPrimitives.pdf

+ .. math::

- (if smooth scaling is activated) with modification of scaling proposed by

+ \ddot{y} = (\alpha_y (\beta_y (g - y) - \tau \dot{y}) + f(z) + C_t) / \tau^2

- P. Pastor, H. Hoffmann, T. Asfour, S. Schaal:

- Learning and Generalization of Motor Skills by Learning from Demonstration,

- 2009 IEEE International Conference on Robotics and Automation,

- Kobe, Japan, 2009, pp. 763-768, doi: 10.1109/ROBOT.2009.5152385,

- https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

+ and if smooth scaling is activated (according to [2]_):

+

+ .. math::

+

+ \ddot{y} = (\alpha_y (\beta_y (g - y) - \tau \dot{y} - \underline{\beta_y (g - y_0) z}) + f(z) + C_t) / \tau^2

Parameters

----------

@@ -364,16 +360,16 @@ class DMP(WeightParametersMixin, DMPBase):

State space dimensions.

execution_time : float, optional (default: 1)

- Execution time of the DMP.

+ Execution time of the DMP: :math:`\tau`.

dt : float, optional (default: 0.01)

- Time difference between DMP steps.

+ Time difference between DMP steps: :math:`\Delta t`.

n_weights_per_dim : int, optional (default: 10)

Number of weights of the function approximator per dimension.

int_dt : float, optional (default: 0.001)

- Time difference for Euler integration.

+ Time difference for Euler integration of transformation system.

p_gain : float, optional (default: 0)

Gain for proportional controller of DMP tracking error.

@@ -392,6 +388,20 @@ class DMP(WeightParametersMixin, DMPBase):

dt_ : float

Time difference between DMP steps. This value can be changed to adapt

the frequency.

+

+ References

+ ----------

+ .. [1] Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., Schaal, S.

+ (2013). Dynamical Movement Primitives: Learning Attractor Models for

+ Motor Behaviors. Neural Computation 25 (2), 328-373. DOI:

+ 10.1162/NECO_a_00393,

+ https://homes.cs.washington.edu/~todorov/courses/amath579/reading/DynamicPrimitives.pdf

+

+ .. [2] Pastor, P., Hoffmann, H., Asfour, T., Schaal, S. (2009). Learning

+ and Generalization of Motor Skills by Learning from Demonstration.

+ In 2009 IEEE International Conference on Robotics and Automation

+ (pp. 763-768). DOI: 10.1109/ROBOT.2009.5152385,

+ https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

"""

def __init__(self, n_dims, execution_time=1.0, dt=0.01,

n_weights_per_dim=10, int_dt=0.001, p_gain=0.0,
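A minimal 1D sketch of the DMP transformation system with and without the smooth scaling term, integrated with semi-implicit Euler steps. It assumes f(z) = 0, C_t = 0, an exponentially decaying phase z = exp(-alpha_z t / tau), and the hypothetical constants alpha_y = 25, beta_y = 6.25 (so alpha_y = 4 beta_y, i.e., critical damping) and alpha_z = 4.6; the library's own defaults and integration scheme may differ.

```python
import numpy as np


def integrate_dmp_1d(y0, g, tau=1.0, dt=0.001, alpha_y=25.0, beta_y=6.25,
                     alpha_z=4.6, smooth_scaling=False):
    """Return positions (incl. final one) and accelerations of each step."""
    y0, g = float(y0), float(g)
    y, yd = y0, 0.0
    positions, accelerations = [y], []
    for i in range(int(round(tau / dt))):
        # Phase: 1 at t = 0, decays to about 0.01 at t = tau
        z = np.exp(-alpha_z * i * dt / tau)
        # Spring-damper part; forcing term f(z) and coupling term C_t are 0
        inner = beta_y * (g - y) - tau * yd
        if smooth_scaling:
            inner -= beta_y * (g - y0) * z  # the underlined term
        ydd = alpha_y * inner / tau ** 2
        yd += dt * ydd  # semi-implicit Euler step
        y += dt * yd
        positions.append(y)
        accelerations.append(ydd)
    return np.array(positions), np.array(accelerations)


y_plain, ydd_plain = integrate_dmp_1d(0.0, 1.0)
y_smooth, ydd_smooth = integrate_dmp_1d(0.0, 1.0, smooth_scaling=True)
print(ydd_plain[0], ydd_smooth[0])  # 156.25 0.0
```

The first value is alpha_y * beta_y * (g - y0) / tau^2; the underlined term cancels it at t = 0, so the smooth-scaling variant starts without an acceleration jump. It lags slightly behind at t = tau because the extra term only vanishes as z approaches 0.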

diff --git a/movement_primitives/dmp/_dmp_with_final_velocity.py b/movement_primitives/dmp/_dmp_with_final_velocity.py

index 3803188..b7f34d0 100644

--- a/movement_primitives/dmp/_dmp_with_final_velocity.py

+++ b/movement_primitives/dmp/_dmp_with_final_velocity.py

@@ -6,14 +6,13 @@

class DMPWithFinalVelocity(WeightParametersMixin, DMPBase):

- """Dynamical movement primitive (DMP) with final velocity.

+ r"""Dynamical movement primitive (DMP) with final velocity.

- Implementation according to

+ Equation of transformation system (according to [1]_, Eq. 6):

- K. Muelling, J. Kober, O. Kroemer, J. Peters:

- Learning to Select and Generalize Striking Movements in Robot Table Tennis

- (2013), International Journal of Robotics Research 32(3), pp. 263-279,

- https://www.ias.informatik.tu-darmstadt.de/uploads/Publications/Muelling_IJRR_2013.pdf

+ .. math::

+

+ \ddot{y} = (\alpha_y (\beta_y (g - y) + \tau\dot{g} - \tau \dot{y}) + f(z))/\tau^2

Parameters

----------

@@ -21,10 +20,10 @@ class DMPWithFinalVelocity(WeightParametersMixin, DMPBase):

State space dimensions.

execution_time : float, optional (default: 1)

- Execution time of the DMP.

+ Execution time of the DMP: :math:`\tau`.

dt : float, optional (default: 0.01)

- Time difference between DMP steps.

+ Time difference between DMP steps: :math:`\Delta t`.

n_weights_per_dim : int, optional (default: 10)

Number of weights of the function approximator per dimension.

@@ -44,6 +43,13 @@ class DMPWithFinalVelocity(WeightParametersMixin, DMPBase):

dt_ : float

Time difference between DMP steps. This value can be changed to adapt

the frequency.

+

+ References

+ ----------

+ .. [1] Muelling, K., Kober, J., Kroemer, O., Peters, J. (2013). Learning to

+ Select and Generalize Striking Movements in Robot Table Tennis.

+ International Journal of Robotics Research 32 (3), 263-279.

+ https://www.ias.informatik.tu-darmstadt.de/uploads/Publications/Muelling_IJRR_2013.pdf

"""

def __init__(self, n_dims, execution_time=1.0, dt=0.01,

n_weights_per_dim=10, int_dt=0.001, p_gain=0.0):
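The same kind of sketch for the final-velocity formulation. Here we assume, as one simple choice, that the goal moves with constant velocity gd_final so that it reaches g_final exactly at t = tau; f(z) is zero and the gains are hypothetical, so this is not the library's `DMPWithFinalVelocity` implementation.

```python
def integrate_dmp_with_final_velocity(y0, g_final, gd_final, tau=1.0,
                                      dt=0.001, alpha_y=25.0, beta_y=6.25):
    """Return final position and velocity; f(z) = 0 in this sketch."""
    y, yd = float(y0), 0.0
    for i in range(int(round(tau / dt))):
        t = i * dt
        # Goal moving with constant velocity, reaching g_final at t = tau
        g = g_final - gd_final * (tau - t)
        ydd = alpha_y * (beta_y * (g - y) + tau * gd_final - tau * yd) / tau ** 2
        yd += dt * ydd  # semi-implicit Euler step
        y += dt * yd
    return y, yd


y_end, yd_end = integrate_dmp_with_final_velocity(0.0, 1.0, 0.5)
```

Because tau * gd feeds the goal velocity forward, the tracking error e = g - y follows the homogeneous dynamics tau^2 e'' = -alpha_y (beta_y e + tau e'), so y ends close to g_final and its velocity close to gd_final.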

diff --git a/movement_primitives/dmp/_dual_cartesian_dmp.py b/movement_primitives/dmp/_dual_cartesian_dmp.py

index 8089839..b948718 100644

--- a/movement_primitives/dmp/_dual_cartesian_dmp.py

+++ b/movement_primitives/dmp/_dual_cartesian_dmp.py

@@ -174,22 +174,9 @@ class DualCartesianDMP(WeightParametersMixin, DMPBase):

"""Dual cartesian dynamical movement primitive.

Each of the two Cartesian DMPs handles orientation and position separately.

- The orientation is represented by a quaternion. The quaternion DMP is

- implemented according to

-

- A. Ude, B. Nemec, T. Petric, J. Murimoto:

- Orientation in Cartesian space dynamic movement primitives (2014),

- IEEE International Conference on Robotics and Automation (ICRA),

- pp. 2997-3004, doi: 10.1109/ICRA.2014.6907291,

- https://ieeexplore.ieee.org/document/6907291

-

- (if smooth scaling is activated) with modification of scaling proposed by

-

- P. Pastor, H. Hoffmann, T. Asfour, S. Schaal:

- Learning and Generalization of Motor Skills by Learning from Demonstration,

- 2009 IEEE International Conference on Robotics and Automation,

- Kobe, Japan, 2009, pp. 763-768, doi: 10.1109/ROBOT.2009.5152385,

- https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

+ The orientation is represented by a quaternion [1]_.

+ See :class:`CartesianDMP` for details about the equation of the

+ transformation system and about smooth scaling [2]_.

While the dimension of the state space is 14, the dimension of the

velocity, acceleration, and forcing term is 12.

@@ -225,6 +212,20 @@ class DualCartesianDMP(WeightParametersMixin, DMPBase):

dt_ : float

Time difference between DMP steps. This value can be changed to adapt

the frequency.

+

+ References

+ ----------

+ .. [1] Ude, A., Nemec, B., Petric, T., Morimoto, J. (2014).

+ Orientation in Cartesian space dynamic movement primitives.

+ In IEEE International Conference on Robotics and Automation (ICRA)

+ (pp. 2997-3004). DOI: 10.1109/ICRA.2014.6907291,

+ https://acat-project.eu/modules/BibtexModule/uploads/PDF/udenemecpetric2014.pdf

+

+ .. [2] Pastor, P., Hoffmann, H., Asfour, T., Schaal, S. (2009). Learning

+ and Generalization of Motor Skills by Learning from Demonstration.

+ In 2009 IEEE International Conference on Robotics and Automation

+ (pp. 763-768). DOI: 10.1109/ROBOT.2009.5152385,

+ https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

"""

def __init__(self, execution_time=1.0, dt=0.01, n_weights_per_dim=10,

int_dt=0.001, p_gain=0.0, smooth_scaling=False):

diff --git a/movement_primitives/dmp/_state_following_dmp.py b/movement_primitives/dmp/_state_following_dmp.py

index 288c617..1ff4c27 100644

--- a/movement_primitives/dmp/_state_following_dmp.py

+++ b/movement_primitives/dmp/_state_following_dmp.py

@@ -7,20 +7,22 @@

class StateFollowingDMP(WeightParametersMixin, DMPBase):

"""State following DMP (highly experimental).

- The DMP variant that is implemented here is described in

-

- J. Vidakovic, B. Jerbic, B. Sekoranja, M. Svaco, F. Suligoj:

- Task Dependent Trajectory Learning from Multiple Demonstrations Using

- Movement Primitives (2019),

- International Conference on Robotics in Alpe-Adria Danube Region (RAAD),

- pp. 275-282, doi: 10.1007/978-3-030-19648-6_32,

- https://link.springer.com/chapter/10.1007/978-3-030-19648-6_32

+ The DMP variant that is implemented here is described in [1]_.

Attributes

----------

dt_ : float

Time difference between DMP steps. This value can be changed to adapt

the frequency.

+

+ References

+ ----------

+ .. [1] Vidakovic, J., Jerbic, B., Sekoranja, B., Svaco, M., Suligoj, F.

+ (2019). Task Dependent Trajectory Learning from Multiple Demonstrations

+ Using Movement Primitives. In International Conference on Robotics in

+ Alpe-Adria Danube Region (RAAD) (pp. 275-282). DOI:

+ 10.1007/978-3-030-19648-6_32,

+ https://link.springer.com/chapter/10.1007/978-3-030-19648-6_32

"""

def __init__(self, n_dims, execution_time, dt=0.01, n_viapoints=10,

int_dt=0.001):

diff --git a/movement_primitives/dmp_potential_field.py b/movement_primitives/dmp_potential_field.py

index 4c9aa29..023ad9e 100644

--- a/movement_primitives/dmp_potential_field.py

+++ b/movement_primitives/dmp_potential_field.py

@@ -1,4 +1,7 @@

-"""Visualization of DMP as potential field."""

+"""Potential Field of DMP

+======================

+

+Visualization of DMP as potential field."""

import numpy as np

from .dmp import (

dmp_transformation_system, obstacle_avoidance_acceleration_2d, phase)

diff --git a/movement_primitives/io.py b/movement_primitives/io.py

index dfe8c98..b66f1d9 100644

--- a/movement_primitives/io.py

+++ b/movement_primitives/io.py

@@ -1,4 +1,8 @@

-"""Input and output from and to files of movement primitives."""

+"""Input / Output

+==============

+

+Input and output from and to files of movement primitives.

+"""

import inspect

import json

import pickle

diff --git a/movement_primitives/kinematics.py b/movement_primitives/kinematics.py

index 8a99677..a96015d 100644

--- a/movement_primitives/kinematics.py

+++ b/movement_primitives/kinematics.py

@@ -1,4 +1,7 @@

-"""Forward kinematics and a simple implementation of inverse kinematics."""

+"""Kinematics

+==========

+

+Forward kinematics and a simple implementation of inverse kinematics."""

import math

import numpy as np

import numba

@@ -133,6 +136,9 @@ def create_chain(self, joint_names, base_frame, ee_frame, verbose=0):

class Chain:

"""Kinematic chain.

+ You should not call this constructor manually. Use :class:`Kinematics` to

+ create a kinematic chain.

+

Parameters

----------

tm : FastUrdfTransformManager

diff --git a/movement_primitives/plot.py b/movement_primitives/plot.py

index 7c1c2c0..465e50e 100644

--- a/movement_primitives/plot.py

+++ b/movement_primitives/plot.py

@@ -1,4 +1,8 @@

-"""Tools for plotting multi-dimensional trajectories."""

+"""Plotting Tools

+==============

+

+Tools for plotting multi-dimensional trajectories.

+"""

import matplotlib.pyplot as plt

diff --git a/movement_primitives/promp.py b/movement_primitives/promp.py

index 6ae564a..f25baae 100644

--- a/movement_primitives/promp.py

+++ b/movement_primitives/promp.py

@@ -1,12 +1,17 @@

-"""Probabilistic movement primitive."""

+"""Probabilistic Movement Primitive (ProMP)

+========================================

+

+ProMPs represent distributions of trajectories.

+"""

import numpy as np

class ProMP:

"""Probabilistic Movement Primitive (ProMP).

- ProMPs have been proposed first in [1] and have been used later in [2,3].

- The learning algorithm is a specialized form of the one presented in [4].

+ ProMPs were first proposed in [1]_ and later used in [2]_ and [3]_. The

+ learning algorithm is a specialized form of the one presented in [4]_.

Note that internally we represented trajectories with the task space

dimension as the first axis and the time step as the second axis while

@@ -24,19 +29,27 @@ class ProMP:

References

----------

- [1] Paraschos et al.: Probabilistic movement primitives, NeurIPS (2013),

- https://papers.nips.cc/paper/2013/file/e53a0a2978c28872a4505bdb51db06dc-Paper.pdf

-

- [3] Maeda et al.: Probabilistic movement primitives for coordination of

- multiple human–robot collaborative tasks, AuRo 2017,

- https://link.springer.com/article/10.1007/s10514-016-9556-2

-

- [2] Paraschos et al.: Using probabilistic movement primitives in robotics, AuRo (2018),

- https://www.ias.informatik.tu-darmstadt.de/uploads/Team/AlexandrosParaschos/promps_auro.pdf,

- https://link.springer.com/article/10.1007/s10514-017-9648-7

-

- [4] Lazaric et al.: Bayesian Multi-Task Reinforcement Learning, ICML (2010),

- https://hal.inria.fr/inria-00475214/document

+ .. [1] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2013).

+ Probabilistic movement primitives. In C.J. Burges, L. Bottou, M. Welling,

+ Z. Ghahramani, K.Q. Weinberger (Eds.), Advances in Neural Information

+ Processing Systems 26,

+ https://papers.nips.cc/paper/2013/file/e53a0a2978c28872a4505bdb51db06dc-Paper.pdf

+

+ .. [2] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2018).

+ Using probabilistic movement primitives in robotics. Autonomous Robots,

+ 42, 529-551. DOI: 10.1007/s10514-017-9648-7,

+ https://www.ias.informatik.tu-darmstadt.de/uploads/Team/AlexandrosParaschos/promps_auro.pdf

+

+ .. [3] Maeda, G. J., Neumann, G., Ewerton, M., Lioutikov, R., Kroemer, O.,

+ Peters, J. (2017). Probabilistic movement primitives for coordination of

+ multiple human–robot collaborative tasks. Autonomous Robots, 41, 593-612.

+ DOI: 10.1007/s10514-016-9556-2,

+ https://link.springer.com/article/10.1007/s10514-016-9556-2

+

+ .. [4] Lazaric, A., Ghavamzadeh, M. (2010).

+ Bayesian Multi-Task Reinforcement Learning. In Proceedings of the 27th

+ International Conference on Machine Learning

+ (ICML'10) (pp. 599-606). https://hal.inria.fr/inria-00475214/document

"""

def __init__(self, n_dims, n_weights_per_dim=10):

self.n_dims = n_dims
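Since ProMPs represent distributions of trajectories through a Gaussian distribution over basis function weights, the trajectory mean and covariance follow from a linear transformation: mean Phi mu_w and covariance Phi Sigma_w Phi^T. Below is a minimal 1D NumPy sketch of that relation; the normalized Gaussian basis, its width heuristic, and the weight distribution are hypothetical choices, and this is not the `ProMP` class API.

```python
import numpy as np


def normalized_rbf(t, n_weights=10, overlap=0.7):
    """Normalized Gaussian basis functions, shape (len(t), n_weights)."""
    centers = np.linspace(0.0, 1.0, n_weights)
    width = overlap * (centers[1] - centers[0])  # hypothetical width heuristic
    activations = np.exp(
        -0.5 * ((t[:, np.newaxis] - centers[np.newaxis]) / width) ** 2)
    return activations / activations.sum(axis=1, keepdims=True)


t = np.linspace(0.0, 1.0, 101)
Phi = normalized_rbf(t)
n_weights = Phi.shape[1]

# Hypothetical weight distribution N(mean_w, cov_w)
mean_w = np.full(n_weights, 0.5)
cov_w = 0.01 * np.eye(n_weights)

# Trajectory distribution induced by the linear model y(t) = Phi(t) w
mean_traj = Phi @ mean_w
cov_traj = Phi @ cov_w @ Phi.T
std_traj = np.sqrt(np.diag(cov_traj))

# Sample five trajectories by sampling weights
rng = np.random.default_rng(0)
samples = rng.multivariate_normal(mean_w, cov_w, size=5) @ Phi.T
```

Because the basis is normalized, a constant weight vector yields a constant mean trajectory; conditioning on viapoints and learning from demonstrations both operate on this weight distribution.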

diff --git a/movement_primitives/spring_damper.py b/movement_primitives/spring_damper.py

index 507834a..66f9869 100644

--- a/movement_primitives/spring_damper.py

+++ b/movement_primitives/spring_damper.py

@@ -1,4 +1,8 @@

-"""Spring-damper based attractors."""

+"""Spring-Damper Based Attractors

+==============================

+

+Spring-damper based attractors are the basis of a DMP's transformation system.

+"""

import numpy as np

import pytransform3d.rotations as pr

from .base import PointToPointMovement

diff --git a/movement_primitives/visualization.py b/movement_primitives/visualization.py

index 7a946c8..abd4375 100644

--- a/movement_primitives/visualization.py

+++ b/movement_primitives/visualization.py

@@ -1,4 +1,8 @@

-"""3D visualization tools for movement primitives."""

+"""Visualization Tools

+===================

+

+3D visualization tools for movement primitives.

+"""

import numpy as np

import open3d as o3d

import scipy as sp

diff --git a/setup.py b/setup.py

index 149e8f3..b46ccaa 100644

--- a/setup.py

+++ b/setup.py

@@ -25,7 +25,8 @@

extras_require={

"all": ["pytransform3d", "cython", "numpy", "scipy", "matplotlib",

"open3d", "tqdm", "gmr", "PyYAML", "numba", "pybullet"],

- "doc": ["pdoc3"],

+ "doc": ["sphinx", "sphinx-bootstrap-theme", "numpydoc",

+ "myst-parser"],

"test": ["nose", "coverage"]

}

)

@@ -157,7 +100,7 @@ trajectories that can be conditioned on viapoints. In this example, we

plot the resulting posterior distribution after conditioning on varying

start positions.

-[Script](examples/plot_conditional_promp.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_conditional_promp.py)

### Potential Field of 2D DMP

@@ -167,7 +110,7 @@ A Dynamical Movement Primitive defines a potential field that superimposes

several components: transformation system (goal-directed movement), forcing

term (learned shape), and coupling terms (e.g., obstacle avoidance).

-[Script](examples/plot_dmp_potential_field.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_dmp_potential_field.py)

### DMP with Final Velocity

@@ -177,7 +120,7 @@ Not all DMPs allow a final velocity > 0. In this case we analyze the effect

of changing final velocities in an appropriate variation of the DMP

formulation that allows to set the final velocity.

-[Script](examples/plot_dmp_with_final_velocity.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_dmp_with_final_velocity.py)

### ProMPs

@@ -188,7 +131,7 @@ The LASA Handwriting dataset learned with ProMPs. The dataset consists of

demonstrations and the second and fourth column show the imitated ProMPs

with 1-sigma interval.

-[Script](examples/plot_promp_lasa.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/plot_promp_lasa.py)

### Cartesian DMPs

@@ -197,7 +140,7 @@ with 1-sigma interval.

A trajectory is created manually, imitated with a Cartesian DMP, converted

to a joint trajectory by inverse kinematics, and executed with a UR5.

-[Script](examples/vis_cartesian_dmp.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/vis_cartesian_dmp.py)

### Contextual ProMPs

@@ -209,7 +152,7 @@ kinesthetic teaching. The panel width is considered to be the context over

which we generalize with contextual ProMPs. Each color in the above

visualizations corresponds to a ProMP for a different context.

-[Script](examples/external_dependencies/vis_contextual_promp_distribution.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_contextual_promp_distribution.py)

**Dependencies that are not publicly available:**

@@ -229,7 +172,7 @@ visualizations corresponds to a ProMP for a different context.

We offer specific dual Cartesian DMPs to control dual-arm robotic systems like

humanoid robots.

-Scripts: [Open3D](examples/external_dependencies/vis_solar_panel.py), [PyBullet](examples/external_dependencies/sim_solar_panel.py)

+Scripts: [Open3D](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_solar_panel.py), [PyBullet](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/sim_solar_panel.py)

**Dependencies that are not publicly available:**

@@ -251,7 +194,7 @@ We can introduce a coupling term in a dual Cartesian DMP to constrain the

relative position, orientation, or pose of two end-effectors of a dual-arm

robot.

-Scripts: [Open3D](examples/external_dependencies/vis_cartesian_dual_dmp.py), [PyBullet](examples/external_dependencies/sim_cartesian_dual_dmp.py)

+Scripts: [Open3D](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_cartesian_dual_dmp.py), [PyBullet](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/sim_cartesian_dual_dmp.py)

**Dependencies that are not publicly available:**

@@ -271,7 +214,7 @@ Scripts: [Open3D](examples/external_dependencies/vis_cartesian_dual_dmp.py), [Py

If we have a distribution over DMP parameters, we can propagate them to state

space through an unscented transform.

-[Script](examples/external_dependencies/vis_dmp_to_state_variance.py)

+[Script](https://github.com/dfki-ric/movement_primitives/blob/main/examples/external_dependencies/vis_dmp_to_state_variance.py)

**Dependencies that are not publicly available:**

@@ -286,6 +229,165 @@ space through an unscented transform.

git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git

```

+## Build API Documentation

+

+You can build the API documentation with Sphinx.

+You can install all dependencies with

+

+```bash

+python -m pip install movement_primitives[doc]

+```

+

+Then build the documentation from the folder `doc/` with

+

+```bash

+make html

+```

+

+It will be located at `doc/build/html/index.html`.

+

+## Test

+

+To run the tests, some Python libraries are required:

+

+```bash

+python -m pip install -e .[test]

+```

+

+The tests are located in the folder `test/` and can be executed with:

+`python -m nose test`

+

+This command searches for all files whose names contain `test` and executes the functions named `test_*`.

+

+## Contributing

+

+You can report bugs in the [issue tracker](https://github.com/dfki-ric/movement_primitives/issues).

+If you have questions about the software, please use the [discussions

+section](https://github.com/dfki-ric/movement_primitives/discussions).

+To add new features or documentation, or to fix bugs, you can open a pull request

+on [GitHub](https://github.com/dfki-ric/movement_primitives). Directly pushing

+to the main branch is not allowed.

+

+The recommended workflow to add a new feature, add documentation, or fix a bug

+is the following:

+

+* Push your changes to a branch (e.g., feature/x, doc/y, or fix/z) of your fork

+ of the repository.

+* Open a pull request to the main branch of the main repository.

+

+This is a checklist for new features:

+

+- are there unit tests?

+- does it have docstrings?

+- is it included in the API documentation?

+- does it pass flake8 and pylint?

+- should it be part of the README?

+- should it be included in any example script?

+

+## Non-public Extensions

+

+Scripts from the subfolder `examples/external_dependencies/` require access to

+git repositories (URDF files or optional dependencies) and datasets that are

+not publicly available. They are available on request (email

+alexander.fabisch@dfki.de).

+

+Note that the library does not have any non-public dependencies! They are only

+required to run all examples.

+

+### MoCap Library

+

+```bash