diff --git a/.gitmodules b/.gitmodules

index 29623c5ae..e6612923a 100644

--- a/.gitmodules

+++ b/.gitmodules

@@ -1,6 +1,7 @@

[submodule "galaxy/roles/galaxyproject.galaxyextras"]

path = galaxy/roles/galaxyprojectdotorg.galaxyextras

url = https://github.com/galaxyproject/ansible-galaxy-extras

+ branch = 18.01

[submodule "galaxy/roles/galaxy-postgresql"]

path = galaxy/roles/galaxy-postgresql

url = https://github.com/galaxyproject/ansible-postgresql

diff --git a/.travis.yml b/.travis.yml

index df8283fa6..b263b1a4e 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -267,15 +267,22 @@ script:

# Run a ton of BioBlend test against our servers.

- cd $TRAVIS_BUILD_DIR/test/bioblend/ && . ./test.sh && cd $WORKING_DIR/

- # Test the 'old' tool installation script

- - docker_exec_run bash -c "install-repository $INSTALL_REPO_ARG '--url https://toolshed.g2.bx.psu.edu -o iuc --name samtools_sort --panel-section-name SAMtools'"

- - |

- if [ "${COMPOSE_SLURM}" ] || [ "${KUBE}" ] || [ "${COMPOSE_CONDOR_DOCKER}" ] || [ "${COMPOSE_SLURM_SINGULARITY}" ]

- then

- # Test without install-repository wrapper

- sleep 10

- docker_exec_run bash -c 'cd $GALAXY_ROOT && python ./scripts/api/install_tool_shed_repositories.py --api admin -l http://localhost:80 --url https://toolshed.g2.bx.psu.edu -o devteam --name cut_columns --panel-section-name BEDTools'

- fi

+ # not working anymore in 18.01

+ # executing: /galaxy_venv/bin/uwsgi --yaml /etc/galaxy/galaxy.yml --master --daemonize2 galaxy.log --pidfile2 galaxy.pid --log-file=galaxy_install.log --pid-file=galaxy_install.pid

+ # [uWSGI] getting YAML configuration from /etc/galaxy/galaxy.yml

+ # /galaxy_venv/bin/python: unrecognized option '--log-file=galaxy_install.log'

+ # getopt_long() error

+ # cat: galaxy_install.pid: No such file or directory

+ # tail: cannot open ‘galaxy_install.log’ for reading: No such file or directory

+ #- |

+ # if [ "${COMPOSE_SLURM}" ] || [ "${KUBE}" ] || [ "${COMPOSE_CONDOR_DOCKER}" ] || [ "${COMPOSE_SLURM_SINGULARITY}" ]

+ # then

+ # # Test without install-repository wrapper

+ # sleep 10

+ # docker_exec_run bash -c 'cd $GALAXY_ROOT && python ./scripts/api/install_tool_shed_repositories.py --api admin -l http://localhost:80 --url https://toolshed.g2.bx.psu.edu -o devteam --name cut_columns --panel-section-name BEDTools'

+ # fi

+

+

# Test the 'new' tool installation script

# - docker_exec bash -c "install-tools $GALAXY_HOME/ephemeris/sample_tool_list.yaml"

- |

@@ -283,7 +290,7 @@ script:

then

# Compose uses the online installer (uses the running instance)

sleep 10

- docker_exec_run shed-install -g "http://localhost:80" -a admin -t "$SAMPLE_TOOLS"

+ docker_exec_run shed-tools install -g "http://localhost:80" -a admin -t "$SAMPLE_TOOLS"

else

docker_exec_run install-tools "$SAMPLE_TOOLS"

fi

diff --git a/README.md b/README.md

index b9c2c806d..bf4bf75f2 100644

--- a/README.md

+++ b/README.md

@@ -8,7 +8,7 @@

Galaxy Docker Image

===================

-The [Galaxy](http://www.galaxyproject.org) [Docker](http://www.docker.io) Image is an easy distributable full-fledged Galaxy installation, that can be used for testing, teaching and presenting new tools and features.

+The [Galaxy](http://www.galaxyproject.org) [Docker](http://www.docker.io) Image is an easy distributable full-fledged Galaxy installation, that can be used for testing, teaching and presenting new tools and features.

One of the main goals is to make the access to entire tool suites as easy as possible. Usually,

this includes the setup of a public available web-service that needs to be maintained, or that the Tool-user needs to either setup a Galaxy Server by its own or to have Admin access to a local Galaxy server.

@@ -18,7 +18,6 @@ The Image is based on [Ubuntu 14.04 LTS](http://releases.ubuntu.com/14.04/) and

-

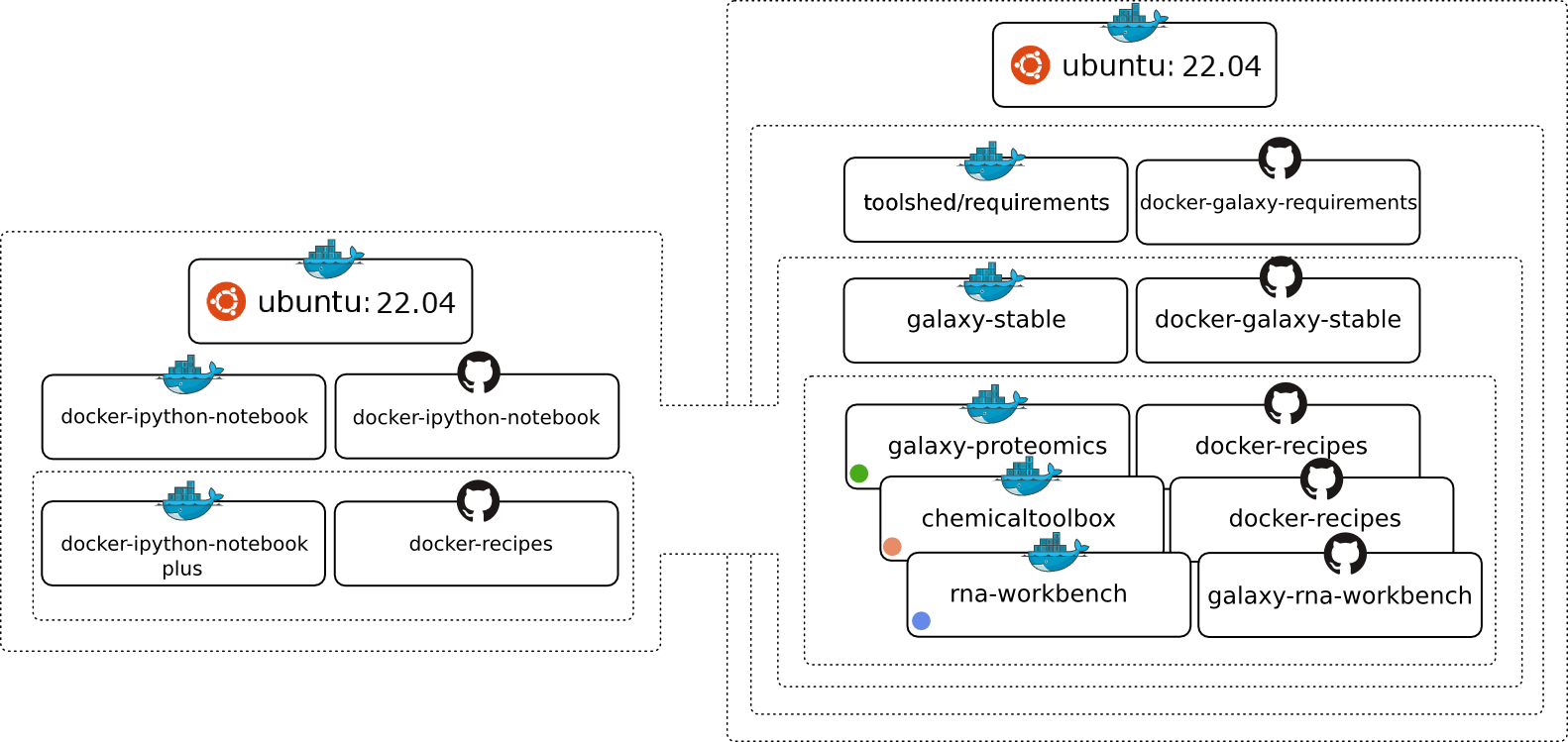

# Table of Contents ▲ back to top

+# Architectural Overview

+

+

+

+

# Usage

+[Figure: architectural overview diagram (image/svg+xml). Group labels: Cluster, Database, Galaxy, network.]

+[Images shown: galaxy-slurm, galaxy-htcondor, galaxy-htcondor-executor, galaxy-htcondor-base (build base), rabbitmq, galaxy-proftpd, galaxy-postgres, pgadmin4-docker, galaxy-web, galaxy-init, galaxy-base (build base).]

+[Shared paths shown: /export/ftp/, /export/rabbitmq/, /export/postgres/, /export/*, /export/slurm.conf, /export/munge.key, /export/.initdone (lock file, port open).]

diff --git a/compose/galaxy-grafana/Dockerfile b/compose/galaxy-grafana/Dockerfile

new file mode 100644

index 000000000..6ca89d6c0

--- /dev/null

+++ b/compose/galaxy-grafana/Dockerfile

@@ -0,0 +1,2 @@

+FROM grafana/grafana:4.6.3

+ADD grafana.db /var/lib/grafana/grafana.db

diff --git a/compose/galaxy-grafana/configure_slurm.py b/compose/galaxy-grafana/configure_slurm.py

new file mode 100644

index 000000000..9e389496d

--- /dev/null

+++ b/compose/galaxy-grafana/configure_slurm.py

@@ -0,0 +1,114 @@

+from socket import gethostname

+from string import Template

+from os import environ

+import psutil

+

+

+SLURM_CONFIG_TEMPLATE = '''

+# slurm.conf file generated by configurator.html.

+# Put this file on all nodes of your cluster.

+# See the slurm.conf man page for more information.

+#

+ControlMachine=$control_machine

+#ControlAddr=

+#BackupController=

+#BackupAddr=

+#

+AuthType=auth/munge

+CacheGroups=0

+#CheckpointType=checkpoint/none

+CryptoType=crypto/munge

+MpiDefault=none

+#PluginDir=

+#PlugStackConfig=

+#PrivateData=jobs

+ProctrackType=proctrack/pgid

+#Prolog=

+#PrologSlurmctld=

+#PropagatePrioProcess=0

+#PropagateResourceLimits=

+#PropagateResourceLimitsExcept=

+ReturnToService=1

+#SallocDefaultCommand=

+SlurmctldPidFile=/var/run/slurmctld.pid

+SlurmctldPort=6817

+SlurmdPidFile=/var/run/slurmd.pid

+SlurmdPort=6818

+SlurmdSpoolDir=/tmp/slurmd

+SlurmUser=$user

+#SlurmdUser=root

+#SrunEpilog=

+#SrunProlog=

+StateSaveLocation=/tmp/slurm

+SwitchType=switch/none

+#TaskEpilog=

+TaskPlugin=task/none

+#TaskPluginParam=

+#TaskProlog=

+InactiveLimit=0

+KillWait=30

+MinJobAge=300

+#OverTimeLimit=0

+SlurmctldTimeout=120

+SlurmdTimeout=300

+#UnkillableStepTimeout=60

+#VSizeFactor=0

+Waittime=0

+FastSchedule=1

+SchedulerType=sched/backfill

+SchedulerPort=7321

+SelectType=select/cons_res

+SelectTypeParameters=CR_Core_Memory

+AccountingStorageType=accounting_storage/none

+#AccountingStorageUser=

+AccountingStoreJobComment=YES

+ClusterName=$cluster_name

+#DebugFlags=

+#JobCompHost=

+#JobCompLoc=

+#JobCompPass=

+#JobCompPort=

+JobCompType=jobcomp/none

+#JobCompUser=

+JobAcctGatherFrequency=30

+JobAcctGatherType=jobacct_gather/none

+SlurmctldDebug=3

+#SlurmctldLogFile=

+SlurmdDebug=3

+#SlurmdLogFile=

+NodeName=$node_name NodeAddr=$hostname NodeHostname=$hostname CPUs=$cpus RealMemory=$memory State=UNKNOWN

+PartitionName=$partition_name Nodes=$nodes Default=YES MaxTime=INFINITE State=UP Shared=YES

+'''

+

+try:

+    # Legacy psutil API: module-level constants

+    cpus = psutil.NUM_CPUS

+    mem = psutil.TOTAL_PHYMEM

+except AttributeError:

+    # Current psutil API: functions replace the removed constants

+    cpus = psutil.cpu_count()

+    mem = psutil.virtual_memory().total

+

+def main():

+    hostname = gethostname()

+    node_name = environ.get('SLURM_NODE_NAME', hostname)

+    template_params = {

+        "hostname": hostname,

+        "node_name": node_name,

+        "nodes": ",".join(environ.get('SLURM_NODES', node_name).split(',')),

+        "cluster_name": environ.get('SLURM_CLUSTER_NAME', 'Cluster'),

+        "control_machine": environ.get('SLURM_CONTROL_MACHINE', hostname),

+        "user": environ.get('SLURM_USER_NAME', '{{ galaxy_user_name }}'),

+        "cpus": environ.get("SLURM_CPUS", cpus),

+        "partition_name": environ.get('SLURM_PARTITION_NAME', 'debug'),

+        "memory": environ.get("SLURM_MEMORY", int(mem / (1024 * 1024)))

+    }

+    config_contents = Template(SLURM_CONFIG_TEMPLATE).substitute(template_params)

+    control_addr = environ.get('SLURM_CONTROL_ADDR', None)

+    if control_addr:

+        config_contents = config_contents.replace("#ControlAddr=", "ControlAddr=%s" % control_addr)

+    # TODO: NodeAddr should probably be galaxy-slurm in the Kubernetes case.

+    open("/etc/slurm-llnl/slurm.conf", "w").write(config_contents)

+

+if __name__ == "__main__":

+    main()

diff --git a/compose/galaxy-grafana/grafana.db b/compose/galaxy-grafana/grafana.db

new file mode 100644

index 000000000..07c3a31d8

Binary files /dev/null and b/compose/galaxy-grafana/grafana.db differ

diff --git a/compose/galaxy-grafana/munge.conf b/compose/galaxy-grafana/munge.conf

new file mode 100644

index 000000000..8d0046893

--- /dev/null

+++ b/compose/galaxy-grafana/munge.conf

@@ -0,0 +1,13 @@

+###############################################################################

+# $Id: munge.sysconfig 507 2006-05-11 20:28:55Z dun $

+###############################################################################

+

+##

+# Pass additional command-line options to the daemon.

+##

+OPTIONS="--force --key-file /etc/munge/munge.key --num-threads 1"

+

+##

+# Adjust the scheduling priority of the daemon.

+##

+# NICE=

diff --git a/compose/galaxy-grafana/requirements.txt b/compose/galaxy-grafana/requirements.txt

new file mode 100644

index 000000000..3f79978d5

--- /dev/null

+++ b/compose/galaxy-grafana/requirements.txt

@@ -0,0 +1,71 @@

+# packages with C extensions

+bx-python==0.7.3

+MarkupSafe==0.23

+PyYAML==3.11

+SQLAlchemy==1.0.15

+mercurial==3.7.3

+numpy==1.9.2

+pycrypto==2.6.1

+

+# Install python_lzo if you want to support indexed access to lzo-compressed

+# locally cached maf files via bx-python

+#python_lzo==1.8

+

+# pure Python packages

+Paste==2.0.2

+PasteDeploy==1.5.2

+docutils==0.12

+wchartype==0.1

+repoze.lru==0.6

+Routes==2.2

+WebOb==1.4.1

+WebHelpers==1.3

+Mako==1.0.2

+pytz==2015.4

+Babel==2.0

+Beaker==1.7.0

+dictobj==0.3.1

+nose==1.3.7

+Parsley==1.3

+six==1.9.0

+Whoosh==2.7.4

+testfixtures==4.10.0

+

+# Cheetah and dependencies

+Cheetah==2.4.4

+Markdown==2.6.3

+

+# BioBlend and dependencies

+bioblend==0.7.0

+boto==2.38.0

+requests==2.8.1

+requests-toolbelt==0.4.0

+

+# kombu and dependencies

+kombu==3.0.30

+amqp==1.4.8

+anyjson==0.3.3

+

+# Pulsar requirements

+psutil==4.1.0

+pulsar-galaxy-lib==0.7.0.dev5

+

+# sqlalchemy-migrate and dependencies

+sqlalchemy-migrate==0.10.0

+decorator==4.0.2

+Tempita==0.5.3dev

+sqlparse==0.1.16

+pbr==1.8.0

+

+# svgwrite and dependencies

+svgwrite==1.1.6

+pyparsing==2.1.1

+

+# Fabric and dependencies

+Fabric==1.10.2

+paramiko==1.15.2

+ecdsa==0.13

+

+# Flexible BAM index naming

+pysam==0.8.4+gx5

+

diff --git a/compose/galaxy-grafana/startup.sh b/compose/galaxy-grafana/startup.sh

new file mode 100644

index 000000000..e6ebf8994

--- /dev/null

+++ b/compose/galaxy-grafana/startup.sh

@@ -0,0 +1,26 @@

+#!/usr/bin/env bash

+

+# Set up the galaxy user UID/GID and pass control on to supervisor

+if id "$SLURM_USER_NAME" >/dev/null 2>&1; then

+ echo "user exists"

+else

+ echo "user does not exist, creating"

+ useradd -m -d /var/"$SLURM_USER_NAME" "$SLURM_USER_NAME"

+fi

+usermod -u "$SLURM_UID" "$SLURM_USER_NAME"

+groupmod -g "$SLURM_GID" "$SLURM_USER_NAME"

+if [ ! -f "$MUNGE_KEY_PATH" ]

+ then

+ cp /etc/munge/munge.key "$MUNGE_KEY_PATH"

+fi

+

+if [ ! -f "$SLURM_CONF_PATH" ]

+ then

+ python /usr/local/bin/configure_slurm.py

+ cp /etc/slurm-llnl/slurm.conf "$SLURM_CONF_PATH"

+fi

+mkdir -p /tmp/slurm

+chown "$SLURM_USER_NAME" /tmp/slurm

+ln -sf "$GALAXY_DIR" "$SYMLINK_TARGET"

+ln -sf "$SLURM_CONF_PATH" /etc/slurm-llnl/slurm.conf

+exec /usr/local/bin/supervisord -n -c /etc/supervisor/supervisord.conf

diff --git a/compose/galaxy-grafana/supervisor_slurm.conf b/compose/galaxy-grafana/supervisor_slurm.conf

new file mode 100644

index 000000000..06482a1c5

--- /dev/null

+++ b/compose/galaxy-grafana/supervisor_slurm.conf

@@ -0,0 +1,18 @@

+[program:munge]

+user=root

+command=/usr/sbin/munged --key-file=%(ENV_MUNGE_KEY_PATH)s -F --force

+

+[program:slurmctld]

+user=root

+command=/usr/sbin/slurmctld -D -L /var/log/slurm-llnl/slurmctld.log -f %(ENV_SLURM_CONF_PATH)s

+autostart = %(ENV_SLURMCTLD_AUTOSTART)s

+autorestart = true

+priority = 200

+

+[program:slurmd]

+user=root

+command=/usr/sbin/slurmd -f %(ENV_SLURM_CONF_PATH)s -D -L /var/log/slurm-llnl/slurmd.log

+autostart = %(ENV_SLURMD_AUTOSTART)s

+autorestart = true

+priority = 300

+

diff --git a/compose/galaxy-htcondor-base/Dockerfile b/compose/galaxy-htcondor-base/Dockerfile

index c99a9a4c3..2469c4434 100644

--- a/compose/galaxy-htcondor-base/Dockerfile

+++ b/compose/galaxy-htcondor-base/Dockerfile

@@ -7,10 +7,18 @@ RUN echo "force-unsafe-io" > /etc/dpkg/dpkg.cfg.d/02apt-speedup && \

echo 'Acquire::Retries "5";' > /etc/apt/apt.conf.d/99AcquireRetries && \

locale-gen en_US.UTF-8 && dpkg-reconfigure locales && \

apt-get update -qq && apt-get install -y --no-install-recommends \

- apt-transport-https wget supervisor unattended-upgrades && \

+ apt-transport-https wget supervisor unattended-upgrades ca-certificates && \

# Condor mirror is really unreliable and times out too often, using a copy of the deb from cargo port

#echo "deb [arch=amd64] http://research.cs.wisc.edu/htcondor/ubuntu/stable/ trusty contrib" > /etc/apt/sources.list.d/htcondor.list

- wget -q --no-check-certificate https://depot.galaxyproject.org/software/condor/condor_8.6.3_linux_all.deb && \

+ wget -q https://depot.galaxyproject.org/software/condor/condor_8.6.3_linux_all.deb && \

dpkg -i condor_8.6.3_linux_all.deb ; apt-get install -f -y && \

rm condor_8.6.3_linux_all.deb && \

rm -rf /var/lib/apt/lists/*

+

+RUN wget https://dl.influxdata.com/telegraf/releases/telegraf-1.5.0_linux_amd64.tar.gz && \

+ cd / && tar xvfz telegraf-1.5.0_linux_amd64.tar.gz && \

+ cp -Rv telegraf/* / && \

+ rm -rf telegraf && \

+ rm telegraf-1.5.0_linux_amd64.tar.gz

+

+ADD telegraf.conf /etc/telegraf/telegraf.conf

diff --git a/compose/galaxy-htcondor-base/telegraf.conf b/compose/galaxy-htcondor-base/telegraf.conf

new file mode 100644

index 000000000..70db77900

--- /dev/null

+++ b/compose/galaxy-htcondor-base/telegraf.conf

@@ -0,0 +1,94 @@

+# Global tags can be specified here in key="value" format.

+[global_tags]

+ dc = "docker" # will tag all metrics with dc=docker

+

+# Configuration for telegraf agent

+[agent]

+ interval = "10s"

+ round_interval = true

+ metric_batch_size = 1000

+ metric_buffer_limit = 10000

+ collection_jitter = "0s"

+ flush_interval = "10s"

+ flush_jitter = "0s"

+ precision = ""

+ debug = false

+ quiet = false

+ logfile = ""

+ hostname = ""

+ omit_hostname = false

+

+

+# Configuration for influxdb server to send metrics to

+[[outputs.influxdb]]

+ urls = ["http://influxdb:8086"] # required

+ database = "default" # required

+ retention_policy = ""

+ write_consistency = "any"

+ timeout = "5s"

+

+

+# Read metrics about cpu usage

+[[inputs.cpu]]

+ percpu = true

+ totalcpu = true

+ collect_cpu_time = false

+ report_active = false

+

+# Read metrics about disk usage by mount point

+[[inputs.disk]]

+ ignore_fs = ["tmpfs", "devtmpfs", "devfs"]

+

+# Read metrics about disk IO by device

+[[inputs.diskio]]

+

+# Get kernel statistics from /proc/stat

+[[inputs.kernel]]

+ # no configuration

+

+

+# Read metrics about memory usage

+[[inputs.mem]]

+ # no configuration

+

+

+# Get the number of processes and group them by status

+[[inputs.processes]]

+ # no configuration

+

+

+# Read metrics about swap memory usage

+[[inputs.swap]]

+ # no configuration

+

+

+# Read metrics about system load & uptime

+[[inputs.system]]

+ # no configuration

+

+

+# Collect statistics about itself

+[[inputs.internal]]

+ collect_memstats = true

+

+# Get kernel statistics from /proc/vmstat

+[[inputs.kernel_vmstat]]

+ # no configuration

+

+

+# Provides Linux sysctl fs metrics

+[[inputs.linux_sysctl_fs]]

+ # no configuration

+

+# Read metrics about network interface usage

+[[inputs.net]]

+ ## By default, telegraf gathers stats from any up interface (excluding loopback)

+ ## Setting interfaces will tell it to gather these explicit interfaces,

+ ## regardless of status.

+ ##

+ # interfaces = ["eth0"]

+

+# Read TCP metrics such as established, time wait and sockets counts.

+[[inputs.netstat]]

+ # no configuration

+

diff --git a/compose/galaxy-htcondor-executor/Dockerfile b/compose/galaxy-htcondor-executor/Dockerfile

index 7b2288ae0..b2992e778 100644

--- a/compose/galaxy-htcondor-executor/Dockerfile

+++ b/compose/galaxy-htcondor-executor/Dockerfile

@@ -1,4 +1,4 @@

-FROM quay.io/bgruening/galaxy-htcondor-base

+FROM quay.io/bgruening/galaxy-htcondor-base:18.01

ENV GALAXY_USER=galaxy \

GALAXY_UID=1450 \

diff --git a/compose/galaxy-htcondor-executor/startup.sh b/compose/galaxy-htcondor-executor/startup.sh

index cf8bb9645..cf7f728ab 100644

--- a/compose/galaxy-htcondor-executor/startup.sh

+++ b/compose/galaxy-htcondor-executor/startup.sh

@@ -29,6 +29,7 @@ UID_DOMAIN = galaxy

SCHED_NAME = $CONDOR_HOST

" > /etc/condor/condor_config.local

+/usr/bin/telegraf --config /etc/telegraf/telegraf.conf &

sudo -u condor touch /var/log/condor/StartLog

sudo -u condor touch /var/log/condor/StarterLog

tail -f -n 1000 /var/log/condor/StartLog /var/log/condor/StarterLog &

diff --git a/compose/galaxy-htcondor/Dockerfile b/compose/galaxy-htcondor/Dockerfile

index 1e5fd7bec..401373a3f 100644

--- a/compose/galaxy-htcondor/Dockerfile

+++ b/compose/galaxy-htcondor/Dockerfile

@@ -1,4 +1,4 @@

-FROM quay.io/bgruening/galaxy-htcondor-base

+FROM quay.io/bgruening/galaxy-htcondor-base:18.01

ADD condor_config.local /etc/condor/condor_config.local

ADD supervisord.conf /etc/supervisord.conf

diff --git a/compose/galaxy-htcondor/supervisord.conf b/compose/galaxy-htcondor/supervisord.conf

index d63b615c7..b4c2d8265 100644

--- a/compose/galaxy-htcondor/supervisord.conf

+++ b/compose/galaxy-htcondor/supervisord.conf

@@ -42,6 +42,18 @@ autostart=true

autorestart=false

user=condor

+[program:telegraf]

+command=/usr/bin/telegraf --config /etc/telegraf/telegraf.conf

+stdout_logfile=/dev/stdout

+stdout_logfile_maxbytes=0

+stderr_logfile=/dev/stderr

+stderr_logfile_maxbytes=0

+stopwaitsecs=1

+startretries=5

+autostart=true

+autorestart=false

+user=root

+

[rpcinterface:supervisor]

supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface

diff --git a/compose/galaxy-init/Dockerfile b/compose/galaxy-init/Dockerfile

index 7873a7f4b..ae570e91e 100644

--- a/compose/galaxy-init/Dockerfile

+++ b/compose/galaxy-init/Dockerfile

@@ -2,11 +2,11 @@

#

# VERSION Galaxy-central

-FROM quay.io/bgruening/galaxy-base

+FROM quay.io/bgruening/galaxy-base:18.01

MAINTAINER Björn A. Grüning, bjoern.gruening@gmail.com

-ARG GALAXY_RELEASE=release_17.09

+ARG GALAXY_RELEASE=release_18.01

ARG GALAXY_REPO=galaxyproject/galaxy

# Create these folders and link to target directory for installation

@@ -22,11 +22,13 @@ RUN mkdir -p /export /galaxy-export && \

ln -s -f /galaxy-export/venv /export/venv && \

ln -s -f /galaxy-export/galaxy-central /export/galaxy-central && \

chown -R $GALAXY_USER:$GALAXY_USER /galaxy-export /export && \

- wget -q -O - https://api.github.com/repos/$GALAXY_REPO/tarball/$GALAXY_RELEASE | tar xz --strip-components=1 -C $GALAXY_ROOT && \

+ wget -q -O - https://api.github.com/repos/$GALAXY_REPO/tarball/release_18.01 | tar xz --strip-components=1 -C $GALAXY_ROOT && \

virtualenv $GALAXY_VIRTUAL_ENV && \

+ cp $GALAXY_ROOT/config/galaxy.yml.sample $GALAXY_CONFIG_FILE && \

. $GALAXY_VIRTUAL_ENV/bin/activate && pip install pip --upgrade && \

chown -R $GALAXY_USER:$GALAXY_USER $GALAXY_VIRTUAL_ENV/* && \

- chown -R $GALAXY_USER:$GALAXY_USER $GALAXY_ROOT/*

+ chown -R $GALAXY_USER:$GALAXY_USER $GALAXY_ROOT/* && \

+ chown -R $GALAXY_USER:$GALAXY_USER $GALAXY_CONFIG_FILE

ADD config $GALAXY_ROOT/config

ADD welcome /galaxy-export/welcome

@@ -39,13 +41,17 @@ RUN ansible-playbook /ansible/provision.yml \

--extra-vars galaxy_config_file=$GALAXY_CONFIG_FILE \

--extra-vars galaxy_extras_config_condor=True \

--extra-vars galaxy_extras_config_condor_docker=True \

- --extra-vars galaxy_extras_config_k8_jobs=True \

+ --extra-vars galaxy_extras_config_k8s_jobs=True \

--extra-vars galaxy_minimum_version=17.09 \

--extra-vars galaxy_extras_config_rabbitmq=False \

--extra-vars nginx_upload_store_path=/export/nginx_upload_store \

--extra-vars nginx_welcome_location=$NGINX_WELCOME_LOCATION \

--extra-vars nginx_welcome_path=$NGINX_WELCOME_PATH \

- --tags=ie,pbs,slurm,uwsgi,metrics,k8 -c local && \

+ #--extra-vars galaxy_extras_config_container_resolution=True \

+ #--extra-vars container_resolution_explicit=True \

+ #--extra-vars container_resolution_cached_mulled=False \

+ #--extra-vars container_resolution_build_mulled=False \

+ --tags=ie,pbs,slurm,uwsgi,metrics,k8s -c local && \

apt-get autoremove -y && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

#--extra-vars galaxy_extras_config_container_resolution=True \

@@ -66,13 +72,12 @@ WORKDIR $GALAXY_ROOT

# prefetch Python wheels

# Install all required Node dependencies. This is required to get proxy support to work for Interactive Environments

-RUN ./scripts/common_startup.sh && \

+RUN ./scripts/common_startup.sh --skip-client-build && \

+ . $GALAXY_VIRTUAL_ENV/bin/activate && \

+ python ./scripts/manage_tool_dependencies.py -c "$GALAXY_CONFIG_FILE" init_if_needed && \

+ # Install all required Node dependencies. This is required to get proxy support to work for Interactive Environments

cd $GALAXY_ROOT/lib/galaxy/web/proxy/js && \

- npm install && \

- wget -q https://repo.continuum.io/miniconda/Miniconda3-4.0.5-Linux-x86_64.sh && \

- bash Miniconda3-4.0.5-Linux-x86_64.sh -b -p $GALAXY_CONDA_PREFIX && \

- rm Miniconda3-4.0.5-Linux-x86_64.sh && \

- $GALAXY_CONDA_PREFIX/bin/conda install -y conda==3.19.3

+ npm install

# the following can be removed with 18.01

RUN /tool_deps/_conda/bin/conda create -y --override-channels --channel iuc --channel bioconda --channel conda-forge --channel defaults --channel r --name mulled-v1-eb2018fd3ce0fcbcee48a4bf89c57219add691c16729281b6c46b30f08339397 samtools=1.3.1 bcftools=1.5

@@ -86,8 +91,7 @@ RUN mv $GALAXY_ROOT/config /galaxy-export/config && ln -s -f /export/config $GAL

mv $GALAXY_ROOT/tool-data /galaxy-export/tool-data && ln -s -f /export/tool-data $GALAXY_ROOT/tool-data && \

mv $GALAXY_ROOT/display_applications /galaxy-export/display_applications && ln -s -f /export/display_applications $GALAXY_ROOT/display_applications && \

mv $GALAXY_ROOT/database /galaxy-export/database && ln -s -f /export/database $GALAXY_ROOT/database && \

- rm -rf $GALARY_ROOT/.venv && ln -s -f /export/venv $GALAXY_ROOT/.venv && ln -s -f /export/venv $GALAXY_ROOT/venv && \

- cp /galaxy-export/config/galaxy.ini.sample /galaxy-export/config/galaxy.ini

+ rm -rf $GALAXY_ROOT/.venv && ln -s -f /export/venv $GALAXY_ROOT/.venv && ln -s -f /export/venv $GALAXY_ROOT/venv

WORKDIR /galaxy-export

diff --git a/compose/galaxy-postgres/init-galaxy-db.sql.in b/compose/galaxy-postgres/init-galaxy-db.sql.in

index 2a3da8c0a..77c16f4ce 100644

--- a/compose/galaxy-postgres/init-galaxy-db.sql.in

+++ b/compose/galaxy-postgres/init-galaxy-db.sql.in

@@ -467,7 +467,8 @@ CREATE TABLE dataset_collection (

create_time timestamp without time zone,

update_time timestamp without time zone,

populated_state character varying(64) DEFAULT 'ok'::character varying NOT NULL,

- populated_state_message text

+ populated_state_message text,

+ element_count integer

);

@@ -1326,7 +1327,8 @@ CREATE TABLE history_dataset_association (

purged boolean,

tool_version text,

extended_metadata_id integer,

- hidden_beneath_collection_instance_id integer

+ hidden_beneath_collection_instance_id integer,

+ version integer

);

@@ -1394,6 +1396,41 @@ CREATE SEQUENCE history_dataset_association_display_at_authorization_id_seq

ALTER SEQUENCE history_dataset_association_display_at_authorization_id_seq OWNED BY history_dataset_association_display_at_authorization.id;

+--

+-- Name: history_dataset_association_history; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE history_dataset_association_history (

+ id integer NOT NULL,

+ history_dataset_association_id integer,

+ update_time timestamp without time zone,

+ version integer,

+ name character varying(255),

+ extension character varying(64),

+ metadata bytea,

+ extended_metadata_id integer

+);

+

+

+--

+-- Name: history_dataset_association_history_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE history_dataset_association_history_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: history_dataset_association_history_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE history_dataset_association_history_id_seq OWNED BY history_dataset_association_history.id;

+

+

--

-- Name: history_dataset_association_id_seq; Type: SEQUENCE; Schema: public; Owner: -

--

@@ -1553,7 +1590,9 @@ CREATE TABLE history_dataset_collection_association (

deleted boolean,

visible boolean,

copied_from_history_dataset_collection_association_id integer,

- implicit_output_name character varying(255)

+ implicit_output_name character varying(255),

+ implicit_collection_jobs_id integer,

+ job_id integer

);

@@ -1755,6 +1794,66 @@ CREATE SEQUENCE history_user_share_association_id_seq

ALTER SEQUENCE history_user_share_association_id_seq OWNED BY history_user_share_association.id;

+--

+-- Name: implicit_collection_jobs; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE implicit_collection_jobs (

+ id integer NOT NULL,

+ populated_state character varying(64) NOT NULL

+);

+

+

+--

+-- Name: implicit_collection_jobs_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE implicit_collection_jobs_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: implicit_collection_jobs_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE implicit_collection_jobs_id_seq OWNED BY implicit_collection_jobs.id;

+

+

+--

+-- Name: implicit_collection_jobs_job_association; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE implicit_collection_jobs_job_association (

+ implicit_collection_jobs_id integer,

+ id integer NOT NULL,

+ job_id integer,

+ order_index integer NOT NULL

+);

+

+

+--

+-- Name: implicit_collection_jobs_job_association_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE implicit_collection_jobs_job_association_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: implicit_collection_jobs_job_association_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE implicit_collection_jobs_job_association_id_seq OWNED BY implicit_collection_jobs_job_association.id;

+

+

--

-- Name: implicitly_converted_dataset_association; Type: TABLE; Schema: public; Owner: -

--

@@ -1854,7 +1953,8 @@ CREATE TABLE job (

exit_code integer,

destination_id character varying(255),

destination_params bytea,

- dependencies bytea

+ dependencies bytea,

+ copied_from_job_id integer

);

@@ -2148,7 +2248,8 @@ CREATE TABLE job_to_input_dataset (

id integer NOT NULL,

job_id integer,

dataset_id integer,

- name character varying(255)

+ name character varying(255),

+ dataset_version integer

);

@@ -4795,6 +4896,70 @@ CREATE SEQUENCE workflow_invocation_id_seq

ALTER SEQUENCE workflow_invocation_id_seq OWNED BY workflow_invocation.id;

+--

+-- Name: workflow_invocation_output_dataset_association; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE workflow_invocation_output_dataset_association (

+ id integer NOT NULL,

+ workflow_invocation_id integer,

+ workflow_step_id integer,

+ dataset_id integer,

+ workflow_output_id integer

+);

+

+

+--

+-- Name: workflow_invocation_output_dataset_association_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE workflow_invocation_output_dataset_association_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: workflow_invocation_output_dataset_association_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE workflow_invocation_output_dataset_association_id_seq OWNED BY workflow_invocation_output_dataset_association.id;

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE workflow_invocation_output_dataset_collection_association (

+ id integer NOT NULL,

+ workflow_invocation_id integer,

+ workflow_step_id integer,

+ dataset_collection_id integer,

+ workflow_output_id integer

+);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_associatio_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE workflow_invocation_output_dataset_collection_associatio_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_associatio_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE workflow_invocation_output_dataset_collection_associatio_id_seq OWNED BY workflow_invocation_output_dataset_collection_association.id;

+

+

--

-- Name: workflow_invocation_step; Type: TABLE; Schema: public; Owner: -

--

@@ -4806,7 +4971,9 @@ CREATE TABLE workflow_invocation_step (

workflow_invocation_id integer NOT NULL,

workflow_step_id integer NOT NULL,

job_id integer,

- action bytea

+ action bytea,

+ implicit_collection_jobs_id integer,

+ state character varying(64)

);

@@ -4829,6 +4996,69 @@ CREATE SEQUENCE workflow_invocation_step_id_seq

ALTER SEQUENCE workflow_invocation_step_id_seq OWNED BY workflow_invocation_step.id;

+--

+-- Name: workflow_invocation_step_output_dataset_association; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE workflow_invocation_step_output_dataset_association (

+ id integer NOT NULL,

+ workflow_invocation_step_id integer,

+ dataset_id integer,

+ output_name character varying(255)

+);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_association_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE workflow_invocation_step_output_dataset_association_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_association_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE workflow_invocation_step_output_dataset_association_id_seq OWNED BY workflow_invocation_step_output_dataset_association.id;

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association; Type: TABLE; Schema: public; Owner: -

+--

+

+CREATE TABLE workflow_invocation_step_output_dataset_collection_association (

+ id integer NOT NULL,

+ workflow_invocation_step_id integer,

+ workflow_step_id integer,

+ dataset_collection_id integer,

+ output_name character varying(255)

+);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_assoc_id_seq; Type: SEQUENCE; Schema: public; Owner: -

+--

+

+CREATE SEQUENCE workflow_invocation_step_output_dataset_collection_assoc_id_seq

+ START WITH 1

+ INCREMENT BY 1

+ NO MINVALUE

+ NO MAXVALUE

+ CACHE 1;

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_assoc_id_seq; Type: SEQUENCE OWNED BY; Schema: public; Owner: -

+--

+

+ALTER SEQUENCE workflow_invocation_step_output_dataset_collection_assoc_id_seq OWNED BY workflow_invocation_step_output_dataset_collection_association.id;

+

+

--

-- Name: workflow_invocation_to_subworkflow_invocation_association; Type: TABLE; Schema: public; Owner: -

--

@@ -5518,6 +5748,13 @@ ALTER TABLE ONLY history_dataset_association_annotation_association ALTER COLUMN

ALTER TABLE ONLY history_dataset_association_display_at_authorization ALTER COLUMN id SET DEFAULT nextval('history_dataset_association_display_at_authorization_id_seq'::regclass);

+--

+-- Name: history_dataset_association_history id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_association_history ALTER COLUMN id SET DEFAULT nextval('history_dataset_association_history_id_seq'::regclass);

+

+

--

-- Name: history_dataset_association_rating_association id; Type: DEFAULT; Schema: public; Owner: -

--

@@ -5588,6 +5825,20 @@ ALTER TABLE ONLY history_tag_association ALTER COLUMN id SET DEFAULT nextval('hi

ALTER TABLE ONLY history_user_share_association ALTER COLUMN id SET DEFAULT nextval('history_user_share_association_id_seq'::regclass);

+--

+-- Name: implicit_collection_jobs id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs ALTER COLUMN id SET DEFAULT nextval('implicit_collection_jobs_id_seq'::regclass);

+

+

+--

+-- Name: implicit_collection_jobs_job_association id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs_job_association ALTER COLUMN id SET DEFAULT nextval('implicit_collection_jobs_job_association_id_seq'::regclass);

+

+

--

-- Name: implicitly_converted_dataset_association id; Type: DEFAULT; Schema: public; Owner: -

--

@@ -6211,6 +6462,20 @@ ALTER TABLE ONLY workflow ALTER COLUMN id SET DEFAULT nextval('workflow_id_seq':

ALTER TABLE ONLY workflow_invocation ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_id_seq'::regclass);

+--

+-- Name: workflow_invocation_output_dataset_association id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_output_dataset_association_id_seq'::regclass);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_output_dataset_collection_associatio_id_seq'::regclass);

+

+

--

-- Name: workflow_invocation_step id; Type: DEFAULT; Schema: public; Owner: -

--

@@ -6218,6 +6483,20 @@ ALTER TABLE ONLY workflow_invocation ALTER COLUMN id SET DEFAULT nextval('workfl

ALTER TABLE ONLY workflow_invocation_step ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_step_id_seq'::regclass);

+--

+-- Name: workflow_invocation_step_output_dataset_association id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_association ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_step_output_dataset_association_id_seq'::regclass);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association id; Type: DEFAULT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_collection_association ALTER COLUMN id SET DEFAULT nextval('workflow_invocation_step_output_dataset_collection_assoc_id_seq'::regclass);

+

+

--

-- Name: workflow_invocation_to_subworkflow_invocation_association id; Type: DEFAULT; Schema: public; Owner: -

--

@@ -6509,7 +6788,7 @@ COPY dataset (id, create_time, update_time, state, deleted, purged, purgable, ex

-- Data for Name: dataset_collection; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY dataset_collection (id, collection_type, create_time, update_time, populated_state, populated_state_message) FROM stdin;

+COPY dataset_collection (id, collection_type, create_time, update_time, populated_state, populated_state_message, element_count) FROM stdin;

\.

@@ -6884,7 +7163,7 @@ SELECT pg_catalog.setval('history_annotation_association_id_seq', 1, false);

-- Data for Name: history_dataset_association; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY history_dataset_association (id, history_id, dataset_id, create_time, update_time, copied_from_history_dataset_association_id, hid, name, info, blurb, peek, extension, metadata, parent_id, designation, deleted, visible, copied_from_library_dataset_dataset_association_id, state, purged, tool_version, extended_metadata_id, hidden_beneath_collection_instance_id) FROM stdin;

+COPY history_dataset_association (id, history_id, dataset_id, create_time, update_time, copied_from_history_dataset_association_id, hid, name, info, blurb, peek, extension, metadata, parent_id, designation, deleted, visible, copied_from_library_dataset_dataset_association_id, state, purged, tool_version, extended_metadata_id, hidden_beneath_collection_instance_id, version) FROM stdin;

\.

@@ -6918,6 +7197,21 @@ COPY history_dataset_association_display_at_authorization (id, create_time, upda

SELECT pg_catalog.setval('history_dataset_association_display_at_authorization_id_seq', 1, false);

+--

+-- Data for Name: history_dataset_association_history; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY history_dataset_association_history (id, history_dataset_association_id, update_time, version, name, extension, metadata, extended_metadata_id) FROM stdin;

+\.

+

+

+--

+-- Name: history_dataset_association_history_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('history_dataset_association_history_id_seq', 1, false);

+

+

--

-- Name: history_dataset_association_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

--

@@ -6989,7 +7283,7 @@ SELECT pg_catalog.setval('history_dataset_collection_annotation_association_id_s

-- Data for Name: history_dataset_collection_association; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY history_dataset_collection_association (id, collection_id, history_id, hid, name, deleted, visible, copied_from_history_dataset_collection_association_id, implicit_output_name) FROM stdin;

+COPY history_dataset_collection_association (id, collection_id, history_id, hid, name, deleted, visible, copied_from_history_dataset_collection_association_id, implicit_output_name, implicit_collection_jobs_id, job_id) FROM stdin;

\.

@@ -7082,6 +7376,36 @@ COPY history_user_share_association (id, history_id, user_id) FROM stdin;

SELECT pg_catalog.setval('history_user_share_association_id_seq', 1, false);

+--

+-- Data for Name: implicit_collection_jobs; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY implicit_collection_jobs (id, populated_state) FROM stdin;

+\.

+

+

+--

+-- Name: implicit_collection_jobs_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('implicit_collection_jobs_id_seq', 1, false);

+

+

+--

+-- Data for Name: implicit_collection_jobs_job_association; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY implicit_collection_jobs_job_association (implicit_collection_jobs_id, id, job_id, order_index) FROM stdin;

+\.

+

+

+--

+-- Name: implicit_collection_jobs_job_association_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('implicit_collection_jobs_job_association_id_seq', 1, false);

+

+

--

-- Data for Name: implicitly_converted_dataset_association; Type: TABLE DATA; Schema: public; Owner: -

--

@@ -7116,7 +7440,7 @@ SELECT pg_catalog.setval('implicitly_created_dataset_collection_inputs_id_seq',

-- Data for Name: job; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY job (id, create_time, update_time, history_id, tool_id, tool_version, state, info, command_line, param_filename, runner_name, stdout, stderr, traceback, session_id, job_runner_name, job_runner_external_id, library_folder_id, user_id, imported, object_store_id, params, handler, exit_code, destination_id, destination_params, dependencies) FROM stdin;

+COPY job (id, create_time, update_time, history_id, tool_id, tool_version, state, info, command_line, param_filename, runner_name, stdout, stderr, traceback, session_id, job_runner_name, job_runner_external_id, library_folder_id, user_id, imported, object_store_id, params, handler, exit_code, destination_id, destination_params, dependencies, copied_from_job_id) FROM stdin;

\.

@@ -7251,7 +7575,7 @@ SELECT pg_catalog.setval('job_to_implicit_output_dataset_collection_id_seq', 1,

-- Data for Name: job_to_input_dataset; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY job_to_input_dataset (id, job_id, dataset_id, name) FROM stdin;

+COPY job_to_input_dataset (id, job_id, dataset_id, name, dataset_version) FROM stdin;

\.

@@ -7606,7 +7930,7 @@ GalaxyTools lib/tool_shed/galaxy_install/migrate 1

--

COPY migrate_version (repository_id, repository_path, version) FROM stdin;

-Galaxy lib/galaxy/model/migrate 135

+Galaxy lib/galaxy/model/migrate 140

\.

@@ -8443,11 +8767,41 @@ COPY workflow_invocation (id, create_time, update_time, workflow_id, history_id,

SELECT pg_catalog.setval('workflow_invocation_id_seq', 1, false);

+--

+-- Data for Name: workflow_invocation_output_dataset_association; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY workflow_invocation_output_dataset_association (id, workflow_invocation_id, workflow_step_id, dataset_id, workflow_output_id) FROM stdin;

+\.

+

+

+--

+-- Name: workflow_invocation_output_dataset_association_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('workflow_invocation_output_dataset_association_id_seq', 1, false);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_associatio_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('workflow_invocation_output_dataset_collection_associatio_id_seq', 1, false);

+

+

+--

+-- Data for Name: workflow_invocation_output_dataset_collection_association; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY workflow_invocation_output_dataset_collection_association (id, workflow_invocation_id, workflow_step_id, dataset_collection_id, workflow_output_id) FROM stdin;

+\.

+

+

--

-- Data for Name: workflow_invocation_step; Type: TABLE DATA; Schema: public; Owner: -

--

-COPY workflow_invocation_step (id, create_time, update_time, workflow_invocation_id, workflow_step_id, job_id, action) FROM stdin;

+COPY workflow_invocation_step (id, create_time, update_time, workflow_invocation_id, workflow_step_id, job_id, action, implicit_collection_jobs_id, state) FROM stdin;

\.

@@ -8458,6 +8812,36 @@ COPY workflow_invocation_step (id, create_time, update_time, workflow_invocation

SELECT pg_catalog.setval('workflow_invocation_step_id_seq', 1, false);

+--

+-- Data for Name: workflow_invocation_step_output_dataset_association; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY workflow_invocation_step_output_dataset_association (id, workflow_invocation_step_id, dataset_id, output_name) FROM stdin;

+\.

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_association_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('workflow_invocation_step_output_dataset_association_id_seq', 1, false);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_assoc_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

+--

+

+SELECT pg_catalog.setval('workflow_invocation_step_output_dataset_collection_assoc_id_seq', 1, false);

+

+

+--

+-- Data for Name: workflow_invocation_step_output_dataset_collection_association; Type: TABLE DATA; Schema: public; Owner: -

+--

+

+COPY workflow_invocation_step_output_dataset_collection_association (id, workflow_invocation_step_id, workflow_step_id, dataset_collection_id, output_name) FROM stdin;

+\.

+

+

--

-- Name: workflow_invocation_to_subworkflow_invocation_associatio_id_seq; Type: SEQUENCE SET; Schema: public; Owner: -

--

@@ -8950,6 +9334,14 @@ ALTER TABLE ONLY history_dataset_association_display_at_authorization

ADD CONSTRAINT history_dataset_association_display_at_authorization_pkey PRIMARY KEY (id);

+--

+-- Name: history_dataset_association_history history_dataset_association_history_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_association_history

+ ADD CONSTRAINT history_dataset_association_history_pkey PRIMARY KEY (id);

+

+

--

-- Name: history_dataset_association history_dataset_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

--

@@ -9046,6 +9438,22 @@ ALTER TABLE ONLY history_user_share_association

ADD CONSTRAINT history_user_share_association_pkey PRIMARY KEY (id);

+--

+-- Name: implicit_collection_jobs_job_association implicit_collection_jobs_job_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs_job_association

+ ADD CONSTRAINT implicit_collection_jobs_job_association_pkey PRIMARY KEY (id);

+

+

+--

+-- Name: implicit_collection_jobs implicit_collection_jobs_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs

+ ADD CONSTRAINT implicit_collection_jobs_pkey PRIMARY KEY (id);

+

+

--

-- Name: implicitly_converted_dataset_association implicitly_converted_dataset_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

--

@@ -9774,6 +10182,22 @@ ALTER TABLE ONLY visualization_user_share_association

ADD CONSTRAINT visualization_user_share_association_pkey PRIMARY KEY (id);

+--

+-- Name: workflow_invocation_output_dataset_association workflow_invocation_output_dataset_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_association_pkey PRIMARY KEY (id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association workflow_invocation_output_dataset_collection_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_collection_association_pkey PRIMARY KEY (id);

+

+

--

-- Name: workflow_invocation workflow_invocation_pkey; Type: CONSTRAINT; Schema: public; Owner: -

--

@@ -9782,6 +10206,22 @@ ALTER TABLE ONLY workflow_invocation

ADD CONSTRAINT workflow_invocation_pkey PRIMARY KEY (id);

+--

+-- Name: workflow_invocation_step_output_dataset_association workflow_invocation_step_output_dataset_association_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_step_output_dataset_association_pkey PRIMARY KEY (id);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association workflow_invocation_step_output_dataset_collection_associa_pkey; Type: CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_step_output_dataset_collection_associa_pkey PRIMARY KEY (id);

+

+

--

-- Name: workflow_invocation_step workflow_invocation_step_pkey; Type: CONSTRAINT; Schema: public; Owner: -

--

@@ -10601,6 +11041,20 @@ CREATE INDEX ix_history_dataset_association_display_at_authorization_a293 ON his

CREATE INDEX ix_history_dataset_association_display_at_authorization_user_id ON history_dataset_association_display_at_authorization USING btree (user_id);

+--

+-- Name: ix_history_dataset_association_history_extended_metadata_id; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_history_dataset_association_history_extended_metadata_id ON history_dataset_association_history USING btree (extended_metadata_id);

+

+

+--

+-- Name: ix_history_dataset_association_history_history_dataset__5f1c; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_history_dataset_association_history_history_dataset__5f1c ON history_dataset_association_history USING btree (history_dataset_association_id);

+

+

--

-- Name: ix_history_dataset_association_history_id; Type: INDEX; Schema: public; Owner: -

--

@@ -10608,6 +11062,13 @@ CREATE INDEX ix_history_dataset_association_display_at_authorization_user_id ON

CREATE INDEX ix_history_dataset_association_history_id ON history_dataset_association USING btree (history_id);

+--

+-- Name: ix_history_dataset_association_history_version; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_history_dataset_association_history_version ON history_dataset_association_history USING btree (version);

+

+

--

-- Name: ix_history_dataset_association_purged; Type: INDEX; Schema: public; Owner: -

--

@@ -10930,6 +11391,20 @@ CREATE INDEX ix_icda_ldda_id ON implicitly_converted_dataset_association USING b

CREATE INDEX ix_icda_ldda_parent_id ON implicitly_converted_dataset_association USING btree (ldda_parent_id);

+--

+-- Name: ix_implicit_collection_jobs_job_association_implicit_co_ea04; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_implicit_collection_jobs_job_association_implicit_co_ea04 ON implicit_collection_jobs_job_association USING btree (implicit_collection_jobs_id);

+

+

+--

+-- Name: ix_implicit_collection_jobs_job_association_job_id; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_implicit_collection_jobs_job_association_job_id ON implicit_collection_jobs_job_association USING btree (job_id);

+

+

--

-- Name: ix_implicitly_converted_dataset_assoc_ldda_parent_id; Type: INDEX; Schema: public; Owner: -

--

@@ -12848,6 +13323,34 @@ CREATE INDEX ix_wfinv_swfinv_wfi ON workflow_invocation_to_subworkflow_invocatio

CREATE INDEX ix_wfreq_inputstep_wfi ON workflow_request_input_step_parameter USING btree (workflow_invocation_id);

+--

+-- Name: ix_workflow_invocation_output_dataset_association_dataset_id; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_output_dataset_association_dataset_id ON workflow_invocation_output_dataset_association USING btree (dataset_id);

+

+

+--

+-- Name: ix_workflow_invocation_output_dataset_association_workf_5924; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_output_dataset_association_workf_5924 ON workflow_invocation_output_dataset_association USING btree (workflow_invocation_id);

+

+

+--

+-- Name: ix_workflow_invocation_output_dataset_collection_associ_ab6c; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_output_dataset_collection_associ_ab6c ON workflow_invocation_output_dataset_collection_association USING btree (workflow_invocation_id);

+

+

+--

+-- Name: ix_workflow_invocation_output_dataset_collection_associ_ec97; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_output_dataset_collection_associ_ec97 ON workflow_invocation_output_dataset_collection_association USING btree (dataset_collection_id);

+

+

--

-- Name: ix_workflow_invocation_step_job_id; Type: INDEX; Schema: public; Owner: -

--

@@ -12855,6 +13358,34 @@ CREATE INDEX ix_wfreq_inputstep_wfi ON workflow_request_input_step_parameter USI

CREATE INDEX ix_workflow_invocation_step_job_id ON workflow_invocation_step USING btree (job_id);

+--

+-- Name: ix_workflow_invocation_step_output_dataset_association__66f5; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_step_output_dataset_association__66f5 ON workflow_invocation_step_output_dataset_association USING btree (dataset_id);

+

+

+--

+-- Name: ix_workflow_invocation_step_output_dataset_association__bcc0; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_step_output_dataset_association__bcc0 ON workflow_invocation_step_output_dataset_association USING btree (workflow_invocation_step_id);

+

+

+--

+-- Name: ix_workflow_invocation_step_output_dataset_collection_a_b73b; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_step_output_dataset_collection_a_b73b ON workflow_invocation_step_output_dataset_collection_association USING btree (dataset_collection_id);

+

+

+--

+-- Name: ix_workflow_invocation_step_output_dataset_collection_a_db49; Type: INDEX; Schema: public; Owner: -

+--

+

+CREATE INDEX ix_workflow_invocation_step_output_dataset_collection_a_db49 ON workflow_invocation_step_output_dataset_collection_association USING btree (workflow_invocation_step_id);

+

+

--

-- Name: ix_workflow_invocation_step_workflow_invocation_id; Type: INDEX; Schema: public; Owner: -

--

@@ -13636,6 +14167,14 @@ ALTER TABLE ONLY history_dataset_association

ADD CONSTRAINT history_dataset_association_extended_metadata_id_fkey FOREIGN KEY (extended_metadata_id) REFERENCES extended_metadata(id);

+--

+-- Name: history_dataset_association_history history_dataset_association_h_history_dataset_association__fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_association_history

+ ADD CONSTRAINT history_dataset_association_h_history_dataset_association__fkey FOREIGN KEY (history_dataset_association_id) REFERENCES history_dataset_association(id);

+

+

--

-- Name: history_dataset_association history_dataset_association_hidden_beneath_collection_inst_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -13644,6 +14183,14 @@ ALTER TABLE ONLY history_dataset_association

ADD CONSTRAINT history_dataset_association_hidden_beneath_collection_inst_fkey FOREIGN KEY (hidden_beneath_collection_instance_id) REFERENCES history_dataset_collection_association(id);

+--

+-- Name: history_dataset_association_history history_dataset_association_history_extended_metadata_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_association_history

+ ADD CONSTRAINT history_dataset_association_history_extended_metadata_id_fkey FOREIGN KEY (extended_metadata_id) REFERENCES extended_metadata(id);

+

+

--

-- Name: history_dataset_association history_dataset_association_history_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -13732,6 +14279,14 @@ ALTER TABLE ONLY history_dataset_collection_association

ADD CONSTRAINT history_dataset_collection_as_copied_from_history_dataset__fkey FOREIGN KEY (copied_from_history_dataset_collection_association_id) REFERENCES history_dataset_collection_association(id);

+--

+-- Name: history_dataset_collection_association history_dataset_collection_ass_implicit_collection_jobs_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_collection_association

+ ADD CONSTRAINT history_dataset_collection_ass_implicit_collection_jobs_id_fkey FOREIGN KEY (implicit_collection_jobs_id) REFERENCES implicit_collection_jobs(id);

+

+

--

-- Name: history_dataset_collection_association history_dataset_collection_association_collection_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -13748,6 +14303,14 @@ ALTER TABLE ONLY history_dataset_collection_association

ADD CONSTRAINT history_dataset_collection_association_history_id_fkey FOREIGN KEY (history_id) REFERENCES history(id);

+--

+-- Name: history_dataset_collection_association history_dataset_collection_association_job_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY history_dataset_collection_association

+ ADD CONSTRAINT history_dataset_collection_association_job_id_fkey FOREIGN KEY (job_id) REFERENCES job(id);

+

+

--

-- Name: history_dataset_collection_rating_association history_dataset_collection_ra_history_dataset_collection_i_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -13852,6 +14415,22 @@ ALTER TABLE ONLY history_user_share_association

ADD CONSTRAINT history_user_share_association_user_id_fkey FOREIGN KEY (user_id) REFERENCES galaxy_user(id);

+--

+-- Name: implicit_collection_jobs_job_association implicit_collection_jobs_job_a_implicit_collection_jobs_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs_job_association

+ ADD CONSTRAINT implicit_collection_jobs_job_a_implicit_collection_jobs_id_fkey FOREIGN KEY (implicit_collection_jobs_id) REFERENCES implicit_collection_jobs(id);

+

+

+--

+-- Name: implicit_collection_jobs_job_association implicit_collection_jobs_job_association_job_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY implicit_collection_jobs_job_association

+ ADD CONSTRAINT implicit_collection_jobs_job_association_job_id_fkey FOREIGN KEY (job_id) REFERENCES job(id);

+

+

--

-- Name: implicitly_converted_dataset_association implicitly_converted_dataset_association_hda_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -15212,6 +15791,78 @@ ALTER TABLE ONLY workflow_invocation

ADD CONSTRAINT workflow_invocation_history_id_fkey FOREIGN KEY (history_id) REFERENCES history(id);

+--

+-- Name: workflow_invocation_output_dataset_association workflow_invocation_output_dataset__workflow_invocation_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_output_dataset__workflow_invocation_id_fkey FOREIGN KEY (workflow_invocation_id) REFERENCES workflow_invocation(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_association workflow_invocation_output_dataset_asso_workflow_output_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_asso_workflow_output_id_fkey FOREIGN KEY (workflow_output_id) REFERENCES workflow_output(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_association workflow_invocation_output_dataset_associ_workflow_step_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_associ_workflow_step_id_fkey FOREIGN KEY (workflow_step_id) REFERENCES workflow_step(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_association workflow_invocation_output_dataset_association_dataset_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_association_dataset_id_fkey FOREIGN KEY (dataset_id) REFERENCES history_dataset_association(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association workflow_invocation_output_dataset_c_dataset_collection_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_c_dataset_collection_id_fkey FOREIGN KEY (dataset_collection_id) REFERENCES history_dataset_collection_association(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association workflow_invocation_output_dataset_coll_workflow_output_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_coll_workflow_output_id_fkey FOREIGN KEY (workflow_output_id) REFERENCES workflow_output(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association workflow_invocation_output_dataset_collec_workflow_step_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_collec_workflow_step_id_fkey FOREIGN KEY (workflow_step_id) REFERENCES workflow_step(id);

+

+

+--

+-- Name: workflow_invocation_output_dataset_collection_association workflow_invocation_output_dataset_workflow_invocation_id_fkey1; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_output_dataset_workflow_invocation_id_fkey1 FOREIGN KEY (workflow_invocation_id) REFERENCES workflow_invocation(id);

+

+

+--

+-- Name: workflow_invocation_step workflow_invocation_step_implicit_collection_jobs_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step

+ ADD CONSTRAINT workflow_invocation_step_implicit_collection_jobs_id_fkey FOREIGN KEY (implicit_collection_jobs_id) REFERENCES implicit_collection_jobs(id);

+

+

--

-- Name: workflow_invocation_step workflow_invocation_step_job_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

@@ -15220,6 +15871,46 @@ ALTER TABLE ONLY workflow_invocation_step

ADD CONSTRAINT workflow_invocation_step_job_id_fkey FOREIGN KEY (job_id) REFERENCES job(id);

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association workflow_invocation_step_outp_workflow_invocation_step_id_fkey1; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_step_outp_workflow_invocation_step_id_fkey1 FOREIGN KEY (workflow_invocation_step_id) REFERENCES workflow_invocation_step(id);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_association workflow_invocation_step_outpu_workflow_invocation_step_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_step_outpu_workflow_invocation_step_id_fkey FOREIGN KEY (workflow_invocation_step_id) REFERENCES workflow_invocation_step(id);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association workflow_invocation_step_output_data_dataset_collection_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_step_output_data_dataset_collection_id_fkey FOREIGN KEY (dataset_collection_id) REFERENCES history_dataset_collection_association(id);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_association workflow_invocation_step_output_dataset_associa_dataset_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_association

+ ADD CONSTRAINT workflow_invocation_step_output_dataset_associa_dataset_id_fkey FOREIGN KEY (dataset_id) REFERENCES history_dataset_association(id);

+

+

+--

+-- Name: workflow_invocation_step_output_dataset_collection_association workflow_invocation_step_output_dataset_c_workflow_step_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

+--

+

+ALTER TABLE ONLY workflow_invocation_step_output_dataset_collection_association

+ ADD CONSTRAINT workflow_invocation_step_output_dataset_c_workflow_step_id_fkey FOREIGN KEY (workflow_step_id) REFERENCES workflow_step(id);

+

+

--

-- Name: workflow_invocation_step workflow_invocation_step_workflow_invocation_id_fkey; Type: FK CONSTRAINT; Schema: public; Owner: -

--

diff --git a/compose/galaxy-web/Dockerfile b/compose/galaxy-web/Dockerfile

index 23d81a36f..640dbbcfb 100644

--- a/compose/galaxy-web/Dockerfile

+++ b/compose/galaxy-web/Dockerfile

@@ -2,10 +2,12 @@

#

# VERSION Galaxy-central

-FROM quay.io/bgruening/galaxy-base

+FROM quay.io/bgruening/galaxy-base:18.01

MAINTAINER Björn A. Grüning, bjoern.gruening@gmail.com

+ARG GALAXY_ANSIBLE_TAGS=supervisor,startup,scripts,nginx,cvmfs

+

ENV GALAXY_DESTINATIONS_DEFAULT=slurm_cluster \

# The following 2 ENV vars can be used to set the number of uwsgi processes and threads

UWSGI_PROCESSES=2 \

@@ -34,7 +36,7 @@ RUN echo "force-unsafe-io" > /etc/dpkg/dpkg.cfg.d/02apt-speedup && \

gpasswd -a $GALAXY_USER docker && \

mkdir -p /etc/supervisor/conf.d/ /var/log/supervisor/ && \

pip install --upgrade pip && \

- pip install "ephemeris<0.8" supervisor --upgrade && \

+ pip install ephemeris supervisor --upgrade && \

ln -s /usr/local/bin/supervisord /usr/bin/supervisord

RUN rm -f /usr/bin/startup && \

@@ -66,7 +68,7 @@ RUN rm -f /usr/bin/startup && \

# Used for detecting privileged mode

--extra-vars host_docker_legacy=False \

--extra-vars galaxy_extras_docker_legacy=False \

- --tags=supervisor,startup,scripts,nginx,cvmfs -c local && \

+ --tags=$GALAXY_ANSIBLE_TAGS -c local && \

apt-get autoremove -y && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

RUN chmod +x /usr/bin/startup

diff --git a/galaxy/Dockerfile b/galaxy/Dockerfile

index 4cf3f5f5c..c55901dc2 100644

--- a/galaxy/Dockerfile

+++ b/galaxy/Dockerfile

@@ -9,17 +9,18 @@ MAINTAINER Björn A. Grüning, bjoern.gruening@gmail.com

ARG GALAXY_RELEASE

ARG GALAXY_REPO

-ENV GALAXY_RELEASE=${GALAXY_RELEASE:-release_17.09} \

+ENV GALAXY_RELEASE=${GALAXY_RELEASE:-release_18.01} \

GALAXY_REPO=${GALAXY_REPO:-https://github.com/galaxyproject/galaxy} \

GALAXY_ROOT=/galaxy-central \

GALAXY_CONFIG_DIR=/etc/galaxy \

EXPORT_DIR=/export \

DEBIAN_FRONTEND=noninteractive

-ENV GALAXY_CONFIG_FILE=$GALAXY_CONFIG_DIR/galaxy.ini \

+ENV GALAXY_CONFIG_FILE=$GALAXY_CONFIG_DIR/galaxy.yml \

GALAXY_CONFIG_JOB_CONFIG_FILE=$GALAXY_CONFIG_DIR/job_conf.xml \

GALAXY_CONFIG_JOB_METRICS_CONFIG_FILE=$GALAXY_CONFIG_DIR/job_metrics_conf.xml \

GALAXY_CONFIG_TOOL_DATA_TABLE_CONFIG_PATH=/etc/galaxy/tool_data_table_conf.xml \

+GALAXY_CONFIG_WATCH_TOOL_DATA_DIR=True \

GALAXY_CONFIG_TOOL_DEPENDENCY_DIR=$EXPORT_DIR/tool_deps \

GALAXY_CONFIG_TOOL_PATH=$EXPORT_DIR/galaxy-central/tools \

GALAXY_VIRTUAL_ENV=/galaxy_venv \

@@ -63,7 +64,6 @@ RUN groupadd -r postgres -g $GALAXY_POSTGRES_GID && \

RUN echo "force-unsafe-io" > /etc/dpkg/dpkg.cfg.d/02apt-speedup && \

echo "Acquire::http {No-Cache=True;};" > /etc/apt/apt.conf.d/no-cache && \

apt-get -qq update && apt-get install --no-install-recommends -y apt-transport-https software-properties-common wget && \

- apt-key adv --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys 58118E89F3A912897C070ADBF76221572C52609D && \

sh -c "wget -qO - https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -" && \

sh -c "echo deb http://research.cs.wisc.edu/htcondor/ubuntu/stable/ trusty contrib > /etc/apt/sources.list.d/htcondor.list" && \

sh -c "wget -qO - http://research.cs.wisc.edu/htcondor/ubuntu/HTCondor-Release.gpg.key | sudo apt-key add -" && \

@@ -73,7 +73,7 @@ RUN echo "force-unsafe-io" > /etc/dpkg/dpkg.cfg.d/02apt-speedup && \

apt-get update -qq && apt-get upgrade -y && \

apt-get install --no-install-recommends -y postgresql-9.3 sudo python-virtualenv \

nginx-extras=1.4.6-1ubuntu3.8ppa1 nginx-common=1.4.6-1ubuntu3.8ppa1 docker-ce=17.09.0~ce-0~ubuntu slurm-llnl slurm-llnl-torque \

- slurm-drmaa-dev proftpd proftpd-mod-pgsql libyaml-dev nodejs-legacy npm ansible munge libmunge-dev \

+ slurm-drmaa-dev proftpd proftpd-mod-pgsql libyaml-dev ansible munge libmunge-dev \

nano vim curl python-crypto python-pip python-psutil condor python-ldap autofs \

gridengine-common gridengine-drmaa1.0 rabbitmq-server libswitch-perl unattended-upgrades supervisor samtools && \

# install and remove supervisor, so get the service config file

@@ -82,7 +82,7 @@ RUN echo "force-unsafe-io" > /etc/dpkg/dpkg.cfg.d/02apt-speedup && \

apt-get autoremove -y && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/* && rm -rf ~/.cache/ && \

mkdir -p /etc/supervisor/conf.d/ /var/log/supervisor/ && \

pip install --upgrade pip && \

- pip install "ephemeris<0.8" supervisor --upgrade && \

+ pip install ephemeris supervisor --upgrade && \

ln -s /usr/local/bin/supervisord /usr/bin/supervisord

RUN groupadd -r $GALAXY_USER -g $GALAXY_GID && \

@@ -106,7 +106,7 @@ RUN mkdir $GALAXY_ROOT && \

chown -R $GALAXY_USER:$GALAXY_USER $GALAXY_VIRTUAL_ENV && \

rm -rf ~/.cache/

-RUN su $GALAXY_USER -c "cp $GALAXY_ROOT/config/galaxy.ini.sample $GALAXY_CONFIG_FILE"

+RUN su $GALAXY_USER -c "cp $GALAXY_ROOT/config/galaxy.yml.sample $GALAXY_CONFIG_FILE"

ADD ./reports_wsgi.ini.sample $GALAXY_CONFIG_DIR/reports_wsgi.ini

ADD sample_tool_list.yaml $GALAXY_HOME/ephemeris/sample_tool_list.yaml

@@ -132,7 +132,7 @@ RUN ansible-playbook /ansible/postgresql_provision.yml && \

--extra-vars proftpd_files_dir=$EXPORT_DIR/ftp \

--extra-vars proftpd_use_sftp=True \

--extra-vars galaxy_extras_docker_legacy=False \

- --extra-vars galaxy_minimum_version=17.09 \

+ --extra-vars galaxy_minimum_version=18.01 \

--extra-vars nginx_upload_store_path=$EXPORT_DIR/nginx_upload_store \

--extra-vars supervisor_postgres_config_path=$PG_CONF_DIR_DEFAULT/postgresql.conf \

--extra-vars supervisor_postgres_autostart=false \

diff --git a/galaxy/install_tools_wrapper.sh b/galaxy/install_tools_wrapper.sh

index 4ee5992fc..ff170f5f1 100644

--- a/galaxy/install_tools_wrapper.sh

+++ b/galaxy/install_tools_wrapper.sh

@@ -13,7 +13,7 @@ else

export PORT=8080

service postgresql start

install_log='galaxy_install.log'

-

+

# wait for database to finish starting up

STATUS=$(psql 2>&1)

while [[ ${STATUS} =~ "starting up" ]]

@@ -22,43 +22,30 @@ else

STATUS=$(psql 2>&1)

sleep 1

done

-

+

echo "starting Galaxy"

- sudo -E -u galaxy ./run.sh --daemon --log-file=$install_log --pid-file=galaxy_install.pid

-

+ sudo -E -u galaxy ./run.sh -d $install_log --pidfile galaxy_install.pid --http-timeout 3000

+

galaxy_install_pid=`cat galaxy_install.pid`

-

- while : ; do