
GitHub CI/CD

The Nala Automation Framework is set up to run in GitHub Actions workflows, either on its own or as part of a site's CI/CD pipeline. Other CI/CD tools are not currently supported out of the box. However, if your team uses a different CI/CD tool, Nala can be executed as a shell job within that pipeline.

This guide explains how Nala runs within GitHub Actions workflows as a CI/CD pipeline.

Every GitHub Actions workflow is defined in a YAML file that describes the jobs to execute under that workflow. For a deeper introduction to GitHub Actions, see the GitHub Actions documentation.

All of the workflows that Nala provides out of the box are found under the .github/workflows/ directory. There are three, described below:

- daily.yml - The daily workflow runs all Nala test suites on a daily schedule.

- debug.yml - The debug workflow runs Nala against a specific feature branch and the test filter tag(s) the user selects. The test results are then uploaded to GitHub as a zip file, which can be downloaded, unzipped, and opened in Playwright's Trace Viewer to see screenshots, each method call, and execution statuses, as well as any errors in the console. This is a great way to figure out why a test fails in the GitHub Actions pipeline but passes on a developer's workstation.

- self.yml - The "self" workflow runs Nala against a specific PR in a site's repository.

Pull Requests (PRs) in GitHub can have labels attached to signal something to team members or to GitHub workflows. Nala uses labels to decide whether its workflow should run on a PR. Labels can also carry test filter tags, which are themselves labels, to designate which tests to run against a PR.

The self.yml workflow requires labels on a PR to execute within a pipeline. When developers raise a PR for their site's code, they must add the "run-nala" label to it, which executes all of Nala's test suites against the PR. To run only a subset of tests or specific block tests, for example after updating the Marquee block, a developer adds both the "run-nala" label and the "@marquee" label to the PR. An example of adding labels to a PR, and of Nala running against it, is shown below:

Note: Part of the test creation process is adding the needed test filter tag label to the team's site code repository after a new test is merged into Nala's repository. Otherwise, developers cannot filter the tests on their PRs to run only the tests needed for the new or updated feature, and the CI/CD pipeline may take longer than desired, or execute unnecessary tests, before a PR can be released.

To have your team's site PRs run your tests within a pipeline before release, add a GitHub workflow to the site's code repository under a .github/workflows directory. A base workflow that runs Nala against PRs is provided below; update it as needed for any environment variables, etc., that you need to pass in.

```yaml
name: Nala Tests

on:
  pull_request:
    types: [ labeled, opened, synchronize, reopened ]

jobs:
  action:
    name: Running E2E & IT
    if: contains(github.event.pull_request.labels.*.name, 'run-nala')
    runs-on: ubuntu-latest
    steps:
      - name: Check out repository
        uses: actions/checkout@v3

      - name: Run Nala
        uses: adobecom/nala@main # Change if doing dev work
        env:
          labels: ${{ join(github.event.pull_request.labels.*.name, ' ') }}
          branch: ${{ github.event.pull_request.head.ref }}
```
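The base workflow hands the PR's label names to the Nala action as one space-separated string (the labels variable), and it only runs when a label named exactly run-nala is present, because contains() on an array checks for a whole matching element. The TypeScript below is a minimal sketch of how such a string could be turned into a run decision and a Playwright --grep filter. The names planFromLabels and NalaRunPlan, and the convention that test filter tags are the labels starting with "@", are illustrative assumptions, not Nala's actual code.

```typescript
// Hypothetical helper: a sketch of label handling, not Nala's real implementation.
const RUN_LABEL = "run-nala";

interface NalaRunPlan {
  shouldRun: boolean;
  grep?: RegExp; // undefined means "run every suite"
}

function planFromLabels(labels: string): NalaRunPlan {
  // The workflow joins label names with single spaces, so split on whitespace.
  // Caveat: a GitHub label whose name itself contains a space would be split apart.
  const names = labels.split(/\s+/).filter((name) => name.length > 0);

  // Mirrors contains(github.event.pull_request.labels.*.name, 'run-nala'):
  // an exact, whole-label match.
  const shouldRun = names.includes(RUN_LABEL);

  // Assumed convention: test filter tags are labels that start with "@".
  const tags = names.filter((name) => name.startsWith("@"));
  if (!shouldRun || tags.length === 0) {
    return { shouldRun };
  }

  // Escape regex metacharacters in each tag, then OR the tags together.
  const escaped = tags.map((tag) => tag.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"));
  return { shouldRun, grep: new RegExp(escaped.join("|")) };
}
```

For example, the labels "run-nala @marquee @columns" yield the pattern @marquee|@columns, which could be passed to npx playwright test --grep. Because --grep matches substrings, a tag such as @marquee would also match any longer tag that begins with it.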

To have a PR for new Nala tests executed for code review and approval before it is merged into Nala, first add your test filter tag label to the Nala repository. Then raise your PR and add the "run-nala" label and your newly added test filter tag label to it. This executes the new test(s) you are requesting to add to Nala.

To run the Nala debugging workflow, select the "Actions" tab on the Nala repository homepage, then select the "Run Nala Debugging" workflow in the left-side navigation menu. A list of all executions of the workflow appears. Select the "Run workflow" button, choose the branch you want the test(s) to run against, and enter the test filter tags you want to run as a space-separated list, e.g., @marquee @columns. Finally, click the "Run workflow" button to start the run.

- Select the "Actions" navigation tab

- Select the "Run Nala Debugging" workflow

- Select the "Run workflow" button

- Select the branch, add test tag filters, and click "Run workflow"

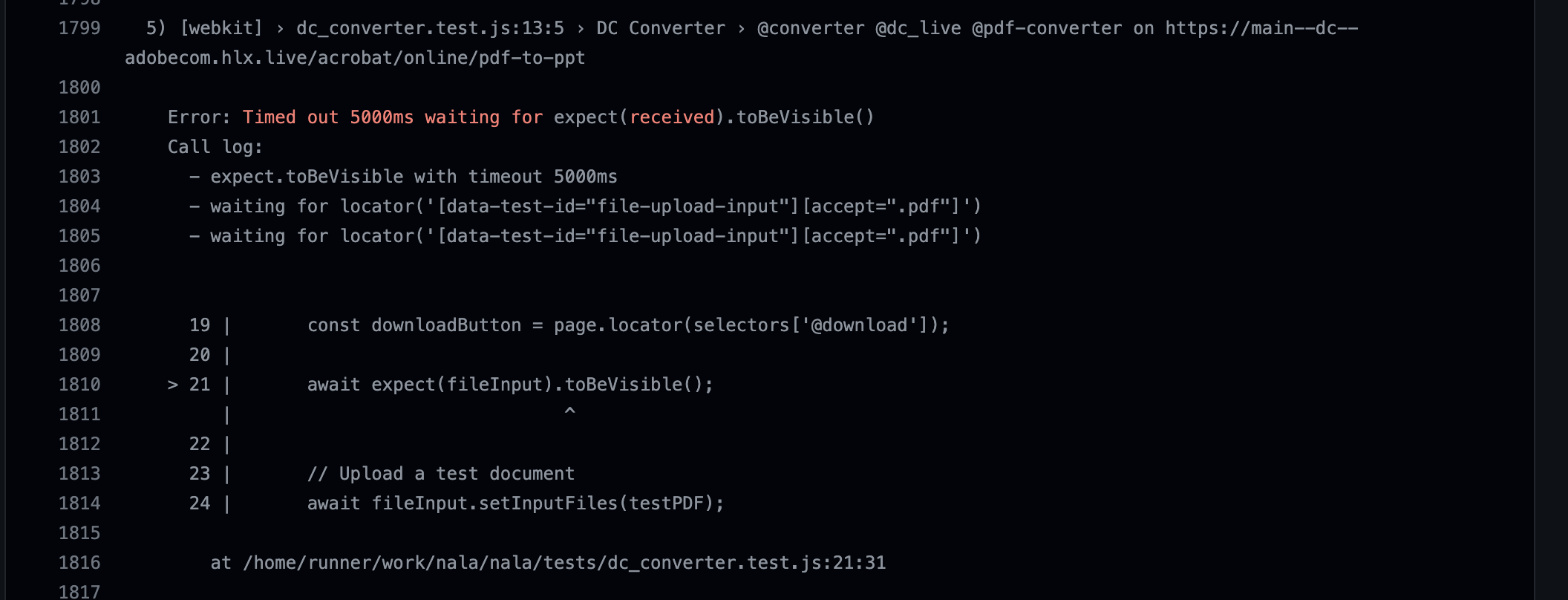

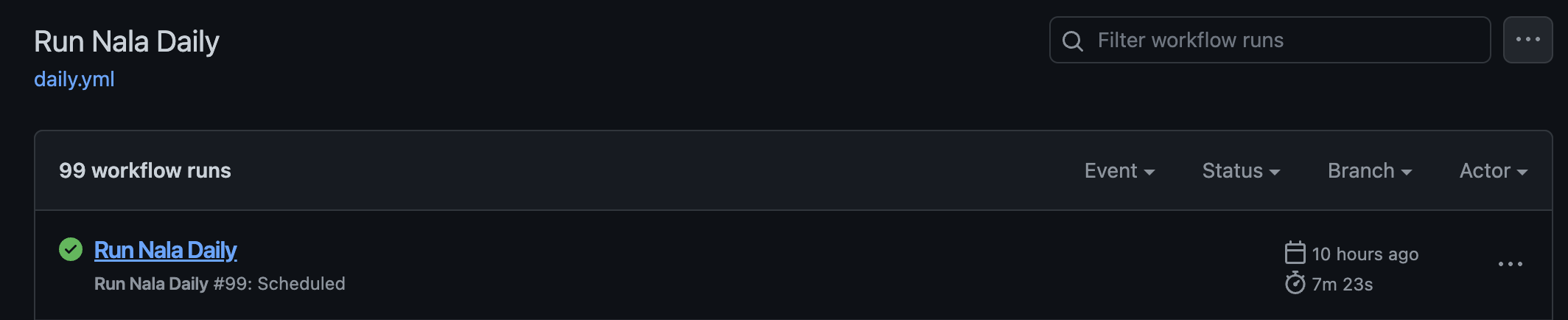

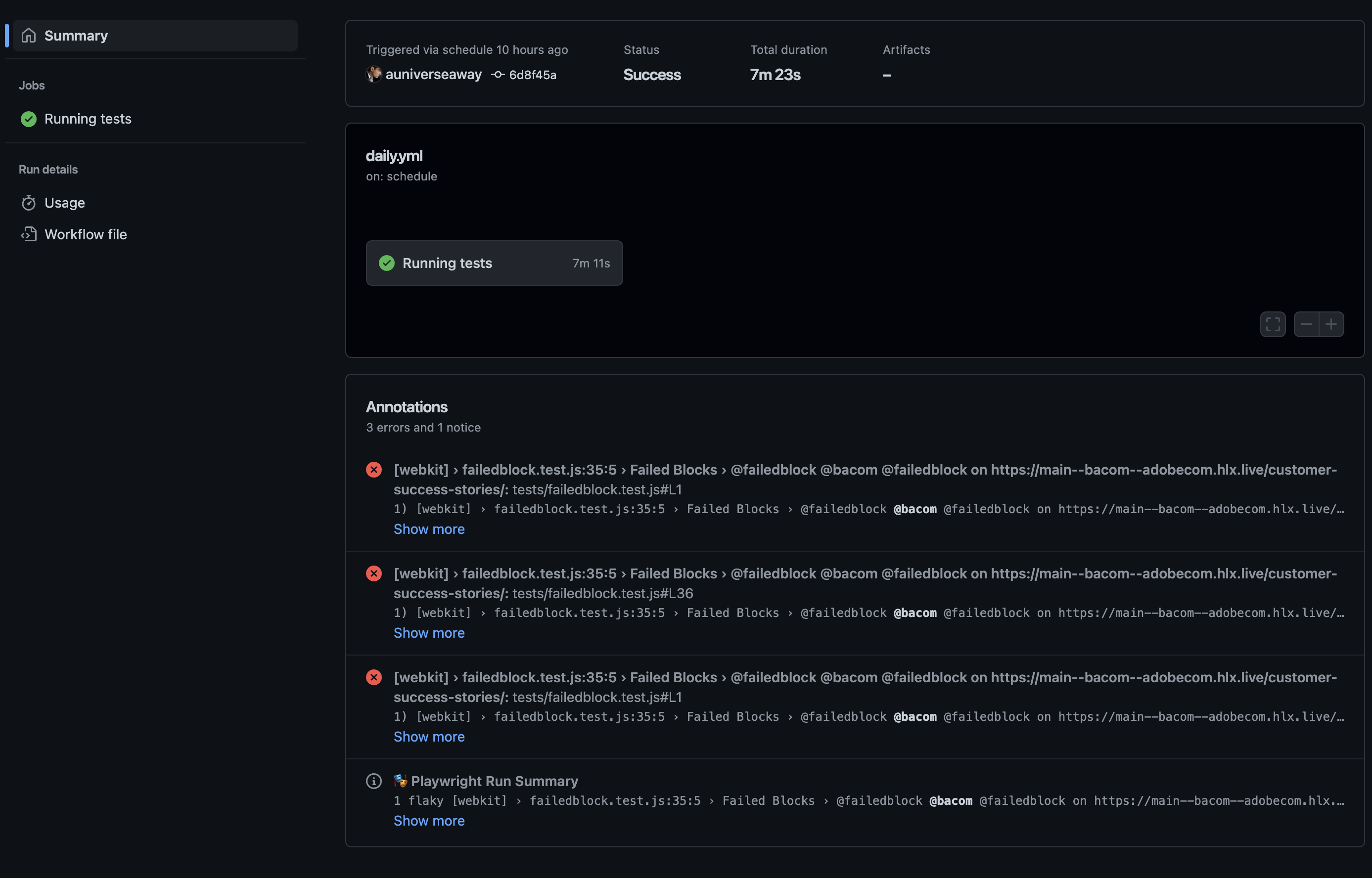

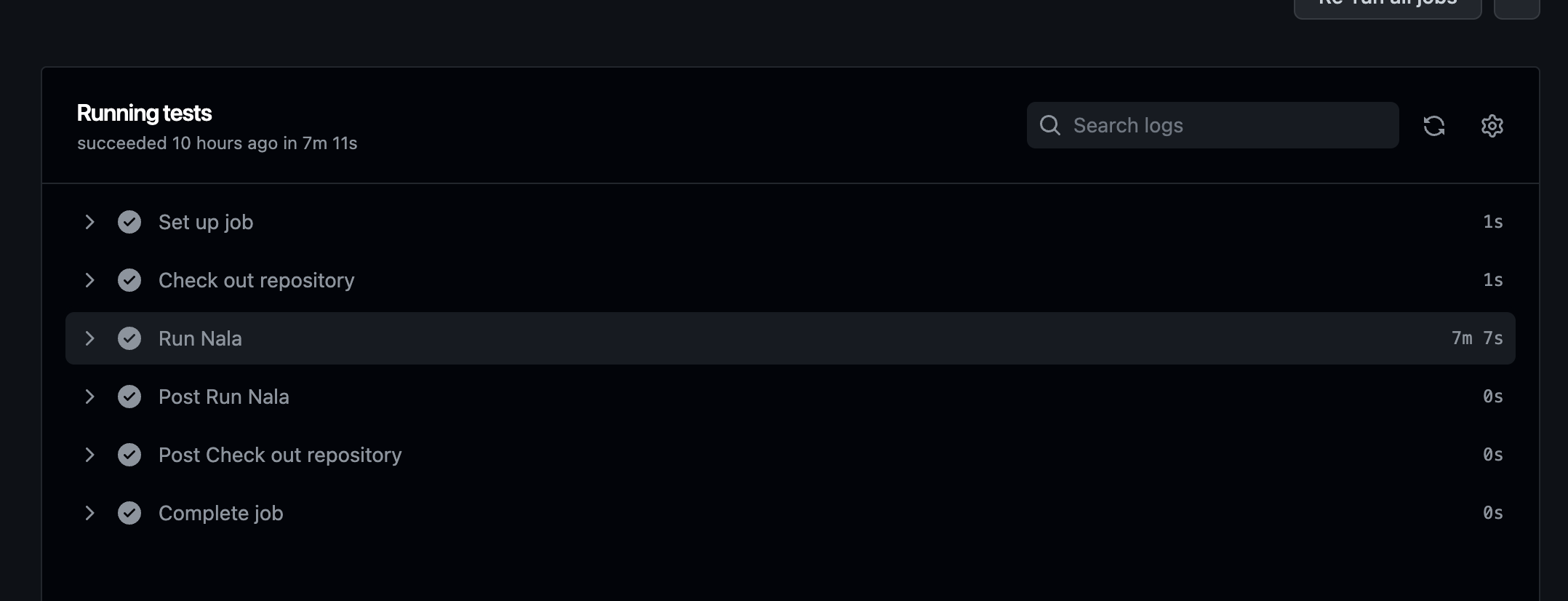

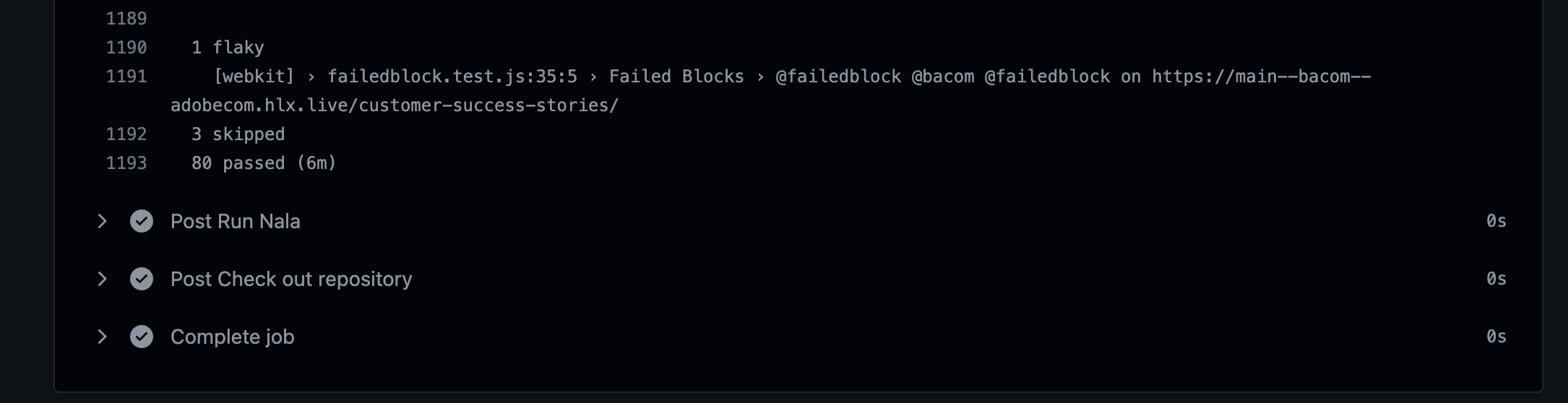

To see the results of a workflow, select the workflow execution from the list and check the Playwright Run Summary, which shows how many tests failed or were flaky during that run (a flaky test failed at least once and then passed on a subsequent retry). To drill down into the terminal output, which shows how many tests passed, failed, or were flaky and where each specific test failed, click the workflow job name in the left-side menu. Then click the "Run Nala" step to expand it and scroll all the way to the bottom to see the terminal output.

- Select Workflow execution from the list

- Look at Playwright Run Summary

- Select the workflow job name in the left-side menu

- Drill down into the "Run Nala" step

- Scroll to the bottom
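The passed, failed, and flaky counts in the run summary follow a simple rule based on retries. As a minimal sketch, assuming a simplified model in which each test is reduced to the ordered list of statuses from its attempts (this is not Playwright's reporter API, and classify and summarize are hypothetical names), the classification could look like this:

```typescript
// Simplified, hypothetical model of a single test attempt's result.
type AttemptStatus = "passed" | "failed" | "timedOut";

type Outcome = "passed" | "failed" | "flaky";

function classify(attempts: AttemptStatus[]): Outcome {
  if (attempts.length === 0) {
    throw new Error("a test needs at least one attempt");
  }
  const last = attempts[attempts.length - 1];
  // A test that still fails (or times out) on its final retry counts as failed.
  if (last !== "passed") {
    return "failed";
  }
  // It passed in the end: flaky if any earlier attempt did not pass.
  return attempts.slice(0, -1).some((s) => s !== "passed") ? "flaky" : "passed";
}

// Tally outcomes for a whole run, keyed by test title.
function summarize(results: Record<string, AttemptStatus[]>): Record<Outcome, number> {
  const counts: Record<Outcome, number> = { passed: 0, failed: 0, flaky: 0 };
  for (const attempts of Object.values(results)) {
    counts[classify(attempts)] += 1;
  }
  return counts;
}
```

In this model a timeout is treated like any other failure, so a test that times out on every retry is counted as failed, while one that times out once and then passes is counted as flaky.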

Note: Tests often fail with a timeout error, which is why it is imperative to add a specific message to your expect() calls that clearly states how the result differs from the expected behavior. As usual, validate the failure and then log an issue to be fixed if necessary.