- Browse ~smartcam~ module source code.
- Compile ~smartcam~ code.
- Modify ~smartcam~ code to use other kernels.
- Write image compression $→$ storage prototype pipeline.

Xilinx’s Smart Camera application allows for a MIPI capture → PL processing → RTSP/DP output pipeline.

https://xilinx.github.io/kria-apps-docs/kv260/2022.1/build/html/_images/sc_image_landing.jpg

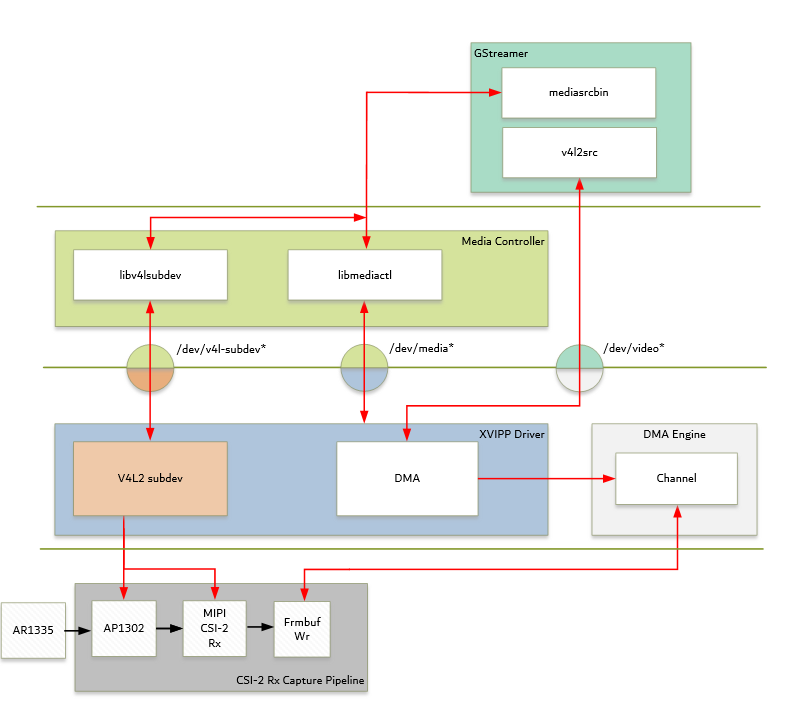

This is done via a GStreamer-Video4Linux2 driver.

- What is v4l2’s role in acquiring the image from DMA?

- How does GStreamer use v4l2?

- How does an acceleration kernel run using v4l2/GStreamer?

- What does the final software depend on?

- What does the developer need to have ready in order to build the application?

- Dependencies?

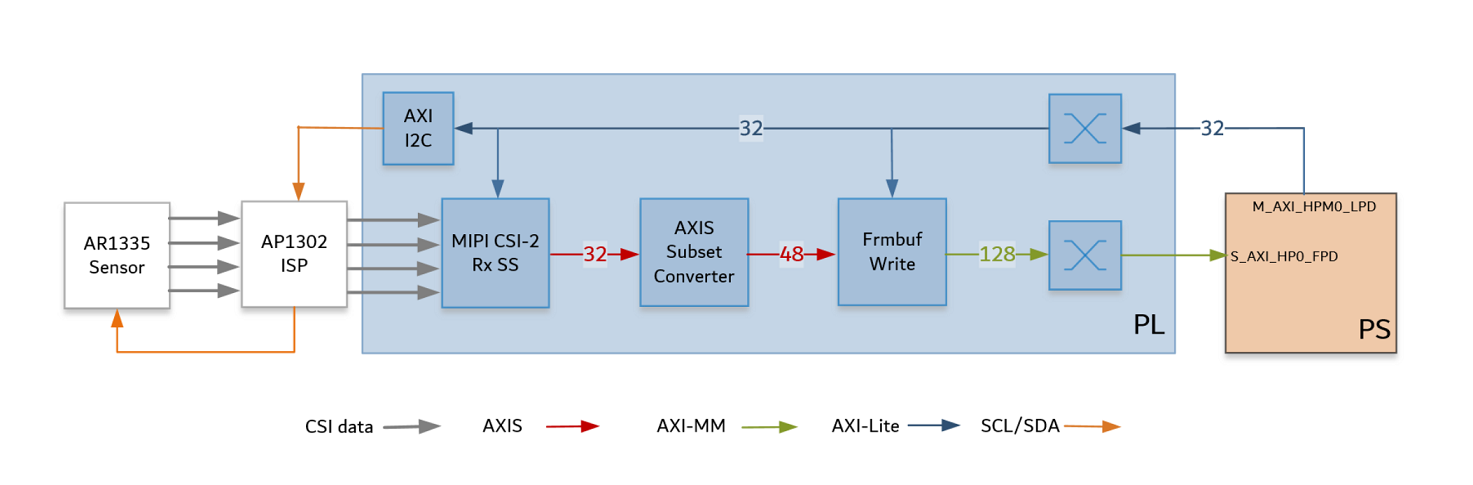

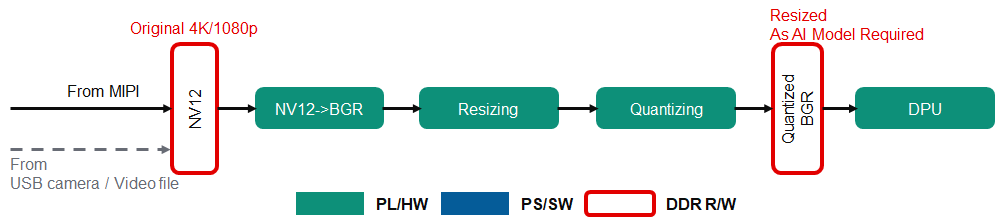

- MIPI source outputs to ~S_AXI_HP0_FPD~ in NV12 format.

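| Format | Layout |
| --- | --- |
| NV12 | Semi-planar 8-bit YUV 4:2:0 (Y plane, then one interleaved UV plane) |
| YUV420 | Planar 8-bit YUV 4:2:0 (separate Y, U and V planes) |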
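To make the NV12 layout concrete, here is a minimal sketch that computes where each plane sits in a frame buffer. It assumes the usual NV12 arrangement (a full-resolution Y plane followed by a half-height plane of interleaved U/V bytes) and, unless a stride is passed, a row stride equal to the width. The names `Nv12Layout` and `nv12_layout` are made up for this example, and the actual buffers produced by the capture pipeline may use a padded stride.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Nv12Layout:
    """Byte offsets and sizes of the two NV12 planes (illustrative helper)."""
    y_offset: int
    y_size: int
    uv_offset: int
    uv_size: int
    total_size: int


def nv12_layout(width: int, height: int, stride: Optional[int] = None) -> Nv12Layout:
    """Compute the plane layout of one 8-bit NV12 frame.

    Assumption: both planes share the same row stride, which defaults to
    the width (no padding).
    """
    if width <= 0 or height <= 0 or width % 2 or height % 2:
        raise ValueError("NV12 needs positive, even width and height")
    stride = width if stride is None else stride
    if stride < width:
        raise ValueError("stride cannot be smaller than width")

    y_size = stride * height            # one luma byte per pixel
    uv_size = stride * (height // 2)    # U and V interleaved, subsampled 2x2
    return Nv12Layout(0, y_size, y_size, uv_size, y_size + uv_size)


if __name__ == "__main__":
    print(nv12_layout(640, 480))
```

A 4:2:0 frame therefore takes 1.5 bytes per pixel when the stride equals the width.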

- Source accessible via ~v4l2~ → ~/dev/media*~ → ~/dev/video*~ … → ~v4l2src~ → ~mediasrc~
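As a quick way to see which of these device nodes exist on a board, the sketch below lists the `/dev/media*` and `/dev/video*` entries. It only globs the filesystem and does not query the drivers; the function names are hypothetical, and which node corresponds to the MIPI sensor depends on the platform.

```python
import glob
import re


def natural_key(path):
    """Sort key so that /dev/video2 comes before /dev/video10."""
    match = re.search(r"(\d+)$", path)
    return (int(match.group(1)) if match else -1, path)


def list_v4l2_nodes(dev_dir="/dev"):
    """Return the media and video device nodes found under dev_dir."""
    return {
        "media": sorted(glob.glob(f"{dev_dir}/media*"), key=natural_key),
        "video": sorted(glob.glob(f"{dev_dir}/video*"), key=natural_key),
    }


if __name__ == "__main__":
    for kind, nodes in list_v4l2_nodes().items():
        print(kind, nodes)
```

One of the listed `/dev/video*` paths is what gets passed as the `device` property of `v4l2src`.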

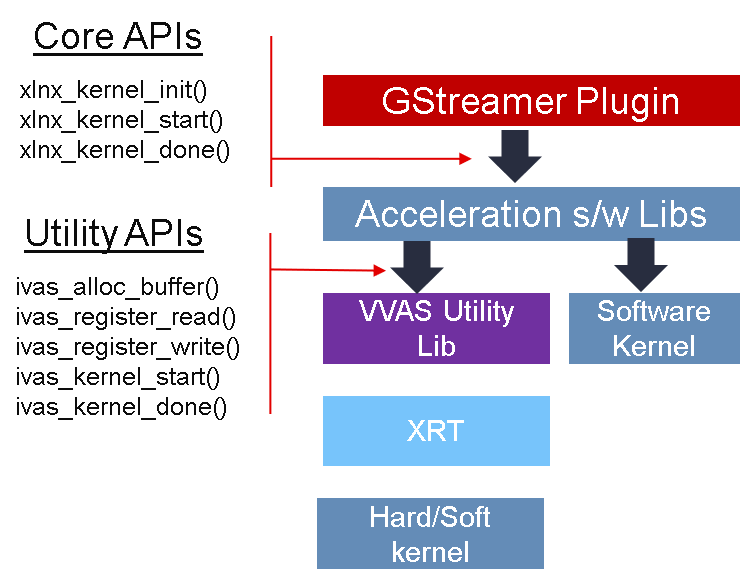

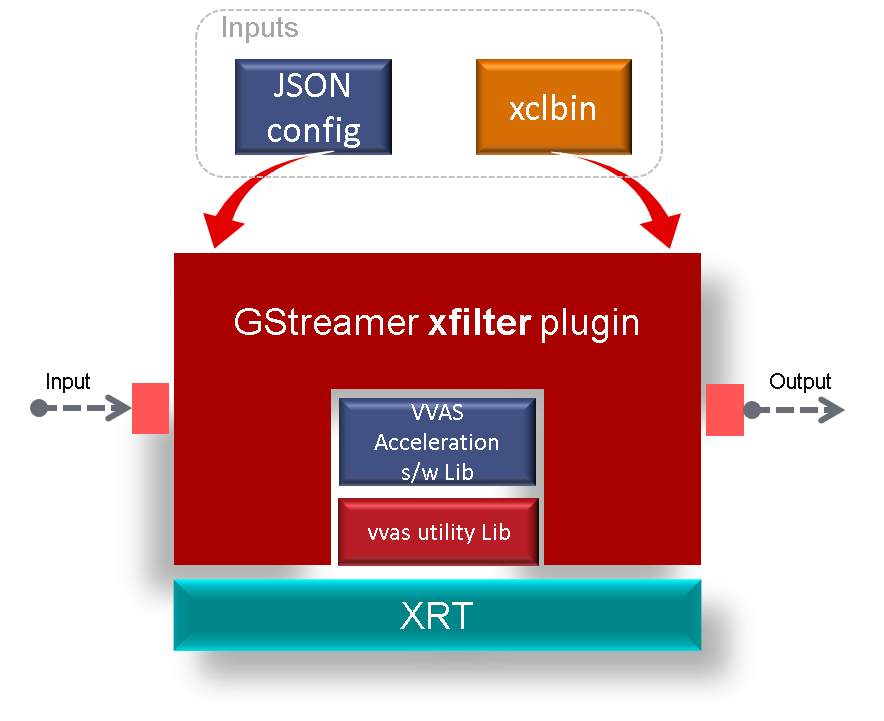

Acceleration on the new stack is based on HLS kernels. For vision, there is VVAS.

The Smart Camera application uses VVAS with GStreamer.

Vitis Video Analytics SDK is Xilinx’s software stack for building video processing applications.

Use GStreamer plugins for creating computer vision pipelines.

GStreamer is a pipeline-based multimedia framework linking various media processing systems to create workflows.

Objects derived from GstElement. Examples:

- Source element: provides data

- Filter element: acts on incoming data

Objects derived from GstPad. They are the “ports” through which data passes between elements.

- Source pad: data flows outward

- Sink pad: data flows inward
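The element/pad relationship can be illustrated with a toy model in plain Python. This is not the GStreamer API: the classes `Pad`, `Source`, `Filter` and `Sink` and their methods are hypothetical and only loosely mirror how a source pad hands buffers to the sink pad it is linked to.

```python
class Pad:
    """A port on an element: 'src' pads push data out, 'sink' pads take it in."""

    def __init__(self, owner, direction):
        if direction not in ("src", "sink"):
            raise ValueError("direction must be 'src' or 'sink'")
        self.owner = owner
        self.direction = direction
        self.peer = None

    def link(self, other):
        """Connect this source pad to another element's sink pad."""
        if self.direction != "src" or other.direction != "sink":
            raise ValueError("links go from a src pad to a sink pad")
        self.peer, other.peer = other, self

    def push(self, buffer):
        """Send a buffer downstream; the element owning the peer pad handles it."""
        if self.direction != "src":
            raise ValueError("only src pads push data")
        if self.peer is None:
            raise RuntimeError("pad is not linked")
        self.peer.owner.chain(buffer)


class Source:
    """Source element: provides data through its single src pad."""

    def __init__(self, items):
        self.items = list(items)
        self.src = Pad(self, "src")

    def run(self):
        for item in self.items:
            self.src.push(item)


class Filter:
    """Filter element: acts on incoming data and forwards the result."""

    def __init__(self, func):
        self.func = func
        self.sink = Pad(self, "sink")
        self.src = Pad(self, "src")

    def chain(self, buffer):
        self.src.push(self.func(buffer))


class Sink:
    """Sink element: consumes data (here it just stores it)."""

    def __init__(self):
        self.sink = Pad(self, "sink")
        self.received = []

    def chain(self, buffer):
        self.received.append(buffer)


def demo():
    source, upper, sink = Source(["frame0", "frame1"]), Filter(str.upper), Sink()
    source.src.link(upper.sink)   # source -> filter
    upper.src.link(sink.sink)     # filter -> sink
    source.run()
    return sink.received
```

Calling `demo()` pushes two strings through the filter and returns what the sink collected.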

GstMiniObject, GstBuffer, GstEvent…

- Enables plug-and-play functionality with GStreamer.

- Elements must be wrapped into a plugin before being used.

Essentially, it is a block of loadable code (a shared object/dynamically linked library).

https://gitlab.freedesktop.org/gstreamer/gst-template.git

- The above serves as good reference.

- The compiled code generates a ~libgstplugin.so~, which ~gst-launch-1.0~ can load simply by specifying the path in ~GST_PLUGIN_PATH~.
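A small sketch of how the plugin path could be set up from a script. It only prepares the environment and the command line; the directory `/tmp/gst-template/build` and the element name `myfilter` are placeholders, and `gst-inspect-1.0` is used here as a way to check that the element is visible before launching a pipeline.

```python
import os


def plugin_env(plugin_dir, base_env=None):
    """Return a copy of the environment with plugin_dir prepended to GST_PLUGIN_PATH."""
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("GST_PLUGIN_PATH")
    env["GST_PLUGIN_PATH"] = (
        plugin_dir if not existing else os.pathsep.join([plugin_dir, existing])
    )
    return env


def inspect_command(element_name):
    """Command line that asks GStreamer to describe one element."""
    return ["gst-inspect-1.0", element_name]


if __name__ == "__main__":
    # Placeholder directory and element name, for illustration only.
    env = plugin_env("/tmp/gst-template/build")
    print("GST_PLUGIN_PATH =", env["GST_PLUGIN_PATH"])
    print(" ".join(inspect_command("myfilter")))
    # To actually run it on a machine with GStreamer installed:
    #   import subprocess
    #   subprocess.run(inspect_command("myfilter"), env=env, check=True)
```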

- A collection of GStreamer plugins, as well as an API for creating acceleration kernels for use with GStreamer.

API usage:

- Plugin initialization (~xlnx_kernel_init~)

- Kernel start (~xlnx_kernel_start~)

- Waiting for the kernel to finish (~vvas_kernel_done~)

- Deinitializing the plugin (~xlnx_kernel_deinit~)
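The call order listed above can be mimicked with a small mock. The real API is written in C and its signatures are not reproduced here; the class `RecordingKernel`, the function `run_frames`, and the assumption that start and done run once per frame are all illustrative.

```python
class RecordingKernel:
    """Stand-in for an acceleration kernel that just records the calls it receives."""

    def __init__(self):
        self.calls = []

    def init(self):                 # corresponds to xlnx_kernel_init
        self.calls.append("init")

    def start(self, frame):         # corresponds to xlnx_kernel_start
        self.calls.append(f"start:{frame}")

    def done(self):                 # corresponds to waiting via vvas_kernel_done
        self.calls.append("done")

    def deinit(self):               # corresponds to xlnx_kernel_deinit
        self.calls.append("deinit")


def run_frames(kernel, frames):
    """Drive the kernel through its lifecycle for a sequence of frames.

    Assumption: start and done are invoked once per frame, while init and
    deinit are invoked once per plugin instance.
    """
    kernel.init()
    try:
        for frame in frames:
            kernel.start(frame)
            kernel.done()
    finally:
        # Release resources even if processing a frame fails.
        kernel.deinit()


if __name__ == "__main__":
    k = RecordingKernel()
    run_frames(k, ["f0", "f1"])
    print(k.calls)
```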

Contains information regarding:

- ~xclbin~ location

- ~vvas-library-repo~

- ~element-mode~ (passthrough, inplace, transform)
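As a rough illustration of such a configuration, the sketch below builds a JSON document with these three fields and checks the element mode. The key spelling `xclbin-location` and both paths are assumptions made for the example; a real configuration file would also carry kernel-specific entries that are left out here.

```python
import json

# The three modes named in the notes above.
ELEMENT_MODES = ("passthrough", "inplace", "transform")


def make_config(xclbin_location, library_repo, element_mode):
    """Build a minimal configuration dictionary (illustrative subset of fields)."""
    if element_mode not in ELEMENT_MODES:
        raise ValueError(f"element-mode must be one of {ELEMENT_MODES}")
    return {
        "xclbin-location": xclbin_location,   # assumed key spelling
        "vvas-library-repo": library_repo,
        "element-mode": element_mode,
    }


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    cfg = make_config("/tmp/example.xclbin", "/tmp/example-libs", "inplace")
    print(json.dumps(cfg, indent=2))
```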

Used to program the FPGA.

- ~smartcam~: Contains the base smartcam application. It is built and used with Docker.

- ~ivas_airender~

- ~ivas_xpp_pipeline~

- ~main~: Contains the GStreamer pipelines that run everything.

~vvas_xfilter~: Plugin to act directly on data with the specified kernel.

    gst-launch-1.0 v4l2src \
      device=/dev/video0 io-mode=mmap ! \
      "video/x-raw, width=640, height=480" ! \
      videoconvert ! \
      "video/x-raw, width=640, height=480, format=YUY2, framerate=30/1" ! \
      vvas_xfilter kernels-config=/tmp/kernel_xfilter2d_pl.json \
      dynamic-config='{ "filter_preset" : "edge" }' ! \
      perf ! kmssink plane-id=39 fullscreen-overlay=true -v

- Got smartcamera to work on Docker inside the KV260

- It also worked with a modified kernel

- Next: Write image compression $→$ storage prototype pipeline?

- I’m also working on making the build process for these packages simple.