diff --git a/PaperModel.md b/PaperModel.md

new file mode 100644

index 00000000..00f500fe

--- /dev/null

+++ b/PaperModel.md

@@ -0,0 +1,74 @@

+# Installation

+

+We now provide a *clean* version of GFPGAN, which does not require customized CUDA extensions. See [here](README.md#installation) for this easier installation.

+If you want want to use the original model in our paper, please follow the instructions below.

+

+1. Clone repo

+

+ ```bash

+ git clone https://github.com/xinntao/GFPGAN.git

+ cd GFPGAN

+ ```

+

+1. Install dependent packages

+

+ As StyleGAN2 uses customized PyTorch C++ extensions, you need to **compile them during installation** or **load them just-in-time(JIT)**.

+ You can refer to [BasicSR-INSTALL.md](https://github.com/xinntao/BasicSR/blob/master/INSTALL.md) for more details.

+

+ **Option 1: Load extensions just-in-time(JIT)** (For those just want to do simple inferences, may have less issues)

+

+ ```bash

+ # Install basicsr - https://github.com/xinntao/BasicSR

+ # We use BasicSR for both training and inference

+ pip install basicsr

+

+ # Install facexlib - https://github.com/xinntao/facexlib

+ # We use face detection and face restoration helper in the facexlib package

+ pip install facexlib

+

+ pip install -r requirements.txt

+

+ # remember to set BASICSR_JIT=True before your running commands

+ ```

+

+ **Option 2: Compile extensions during installation** (For those need to train/inference for many times)

+

+ ```bash

+ # Install basicsr - https://github.com/xinntao/BasicSR

+ # We use BasicSR for both training and inference

+ # Set BASICSR_EXT=True to compile the cuda extensions in the BasicSR - It may take several minutes to compile, please be patient

+ # Add -vvv for detailed log prints

+ BASICSR_EXT=True pip install basicsr -vvv

+

+ # Install facexlib - https://github.com/xinntao/facexlib

+ # We use face detection and face restoration helper in the facexlib package

+ pip install facexlib

+

+ pip install -r requirements.txt

+ ```

+

+## :zap: Quick Inference

+

+Download pre-trained models: [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth)

+

+```bash

+wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models

+```

+

+- Option 1: Load extensions just-in-time(JIT)

+

+ ```bash

+ BASICSR_JIT=True python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs --save_root results --arch original --channel 1

+

+ # for aligned images

+ BASICSR_JIT=True python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs --save_root results --arch original --channel 1 --aligned

+ ```

+

+- Option 2: Have successfully compiled extensions during installation

+

+ ```bash

+ python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs --save_root results --arch original --channel 1

+

+ # for aligned images

+ python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs --save_root results --arch original --channel 1 --aligned

+ ```

diff --git a/README.md b/README.md

index c7263bfe..8dede821 100644

--- a/README.md

+++ b/README.md

@@ -5,31 +5,23 @@

[](https://github.com/TencentARC/GFPGAN/blob/master/LICENSE)

[](https://github.com/TencentARC/GFPGAN/blob/master/.github/workflows/pylint.yml)

-[**Paper**](https://arxiv.org/abs/2101.04061) **|** [**Project Page**](https://xinntao.github.io/projects/gfpgan) [English](README.md) **|** [简体中文](README_CN.md)

+1. [Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo) for GFPGAN  +1. We provide a *clean* version of GFPGAN, which can run without CUDA extensions. So that it can run in **Windows** or on **CPU mode**.

-GFPGAN is a blind face restoration algorithm towards real-world face images.

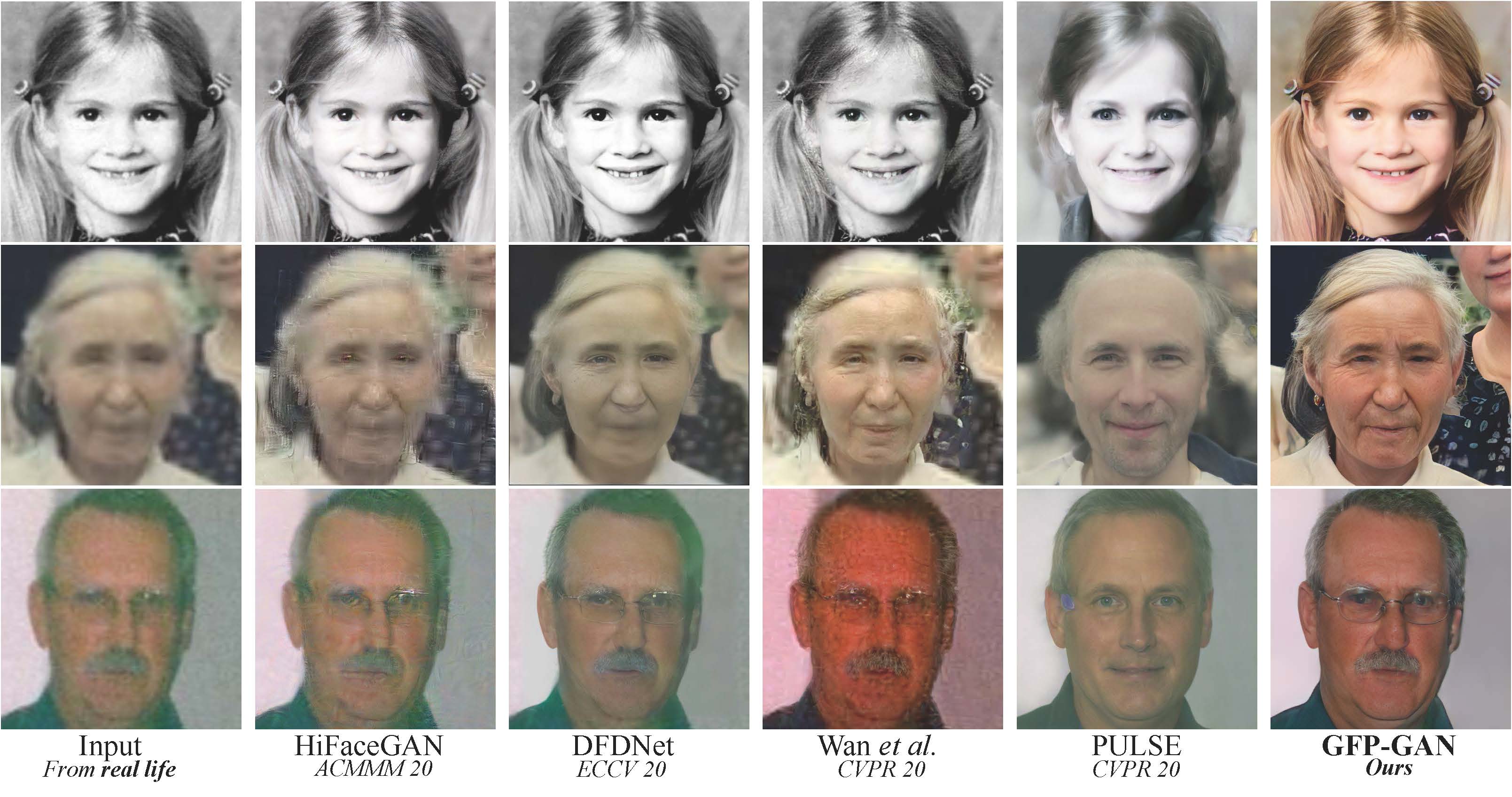

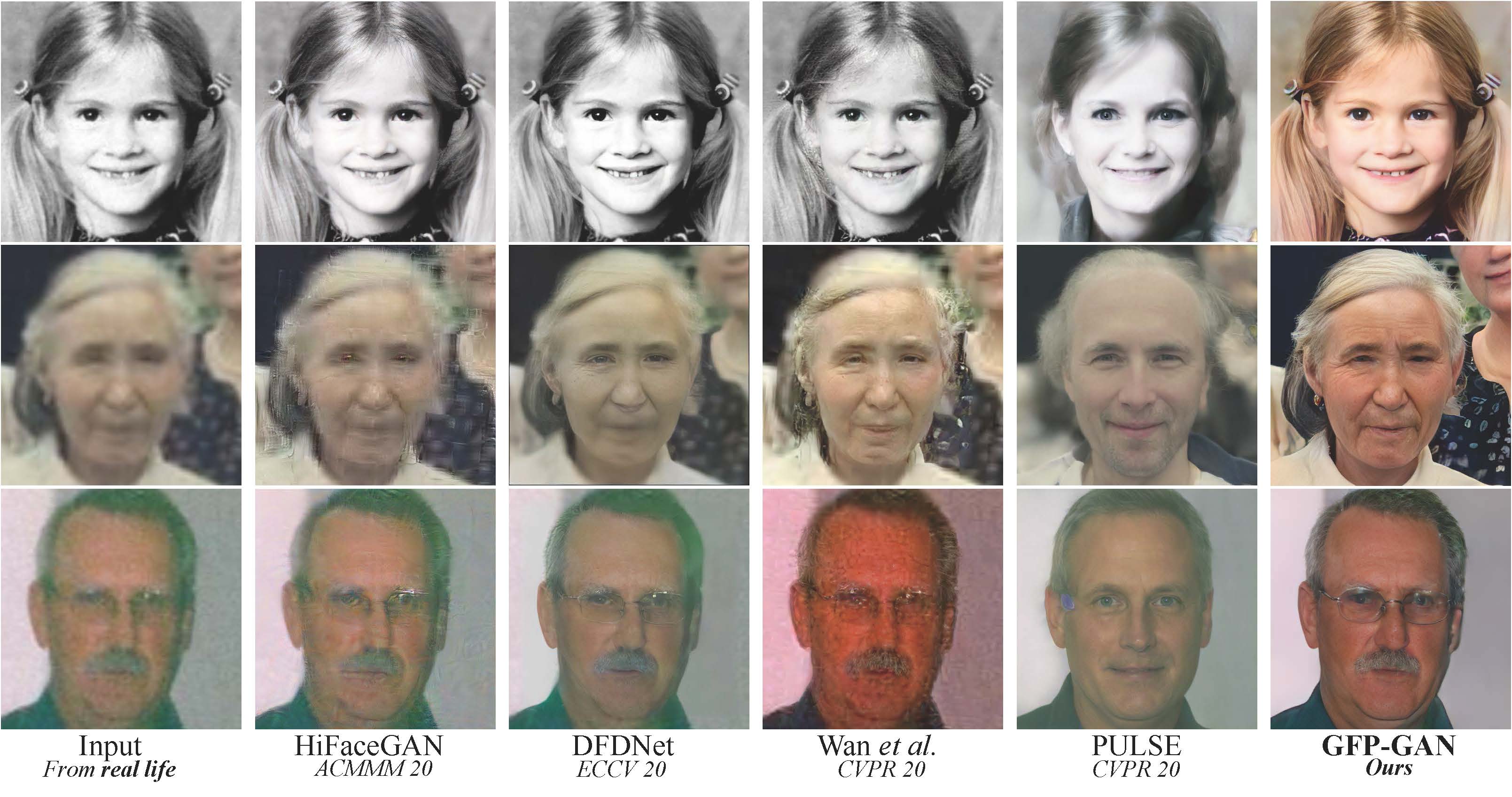

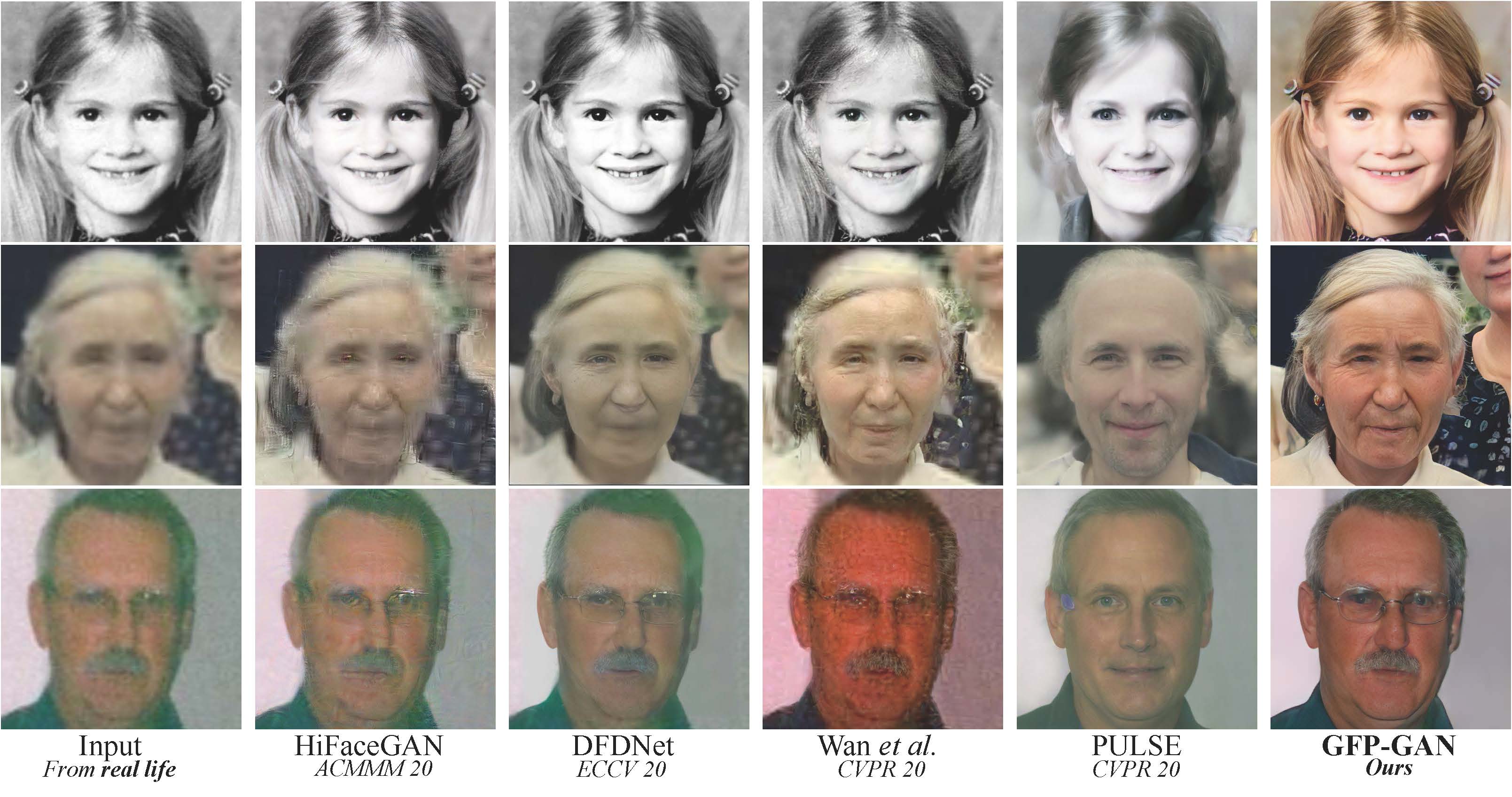

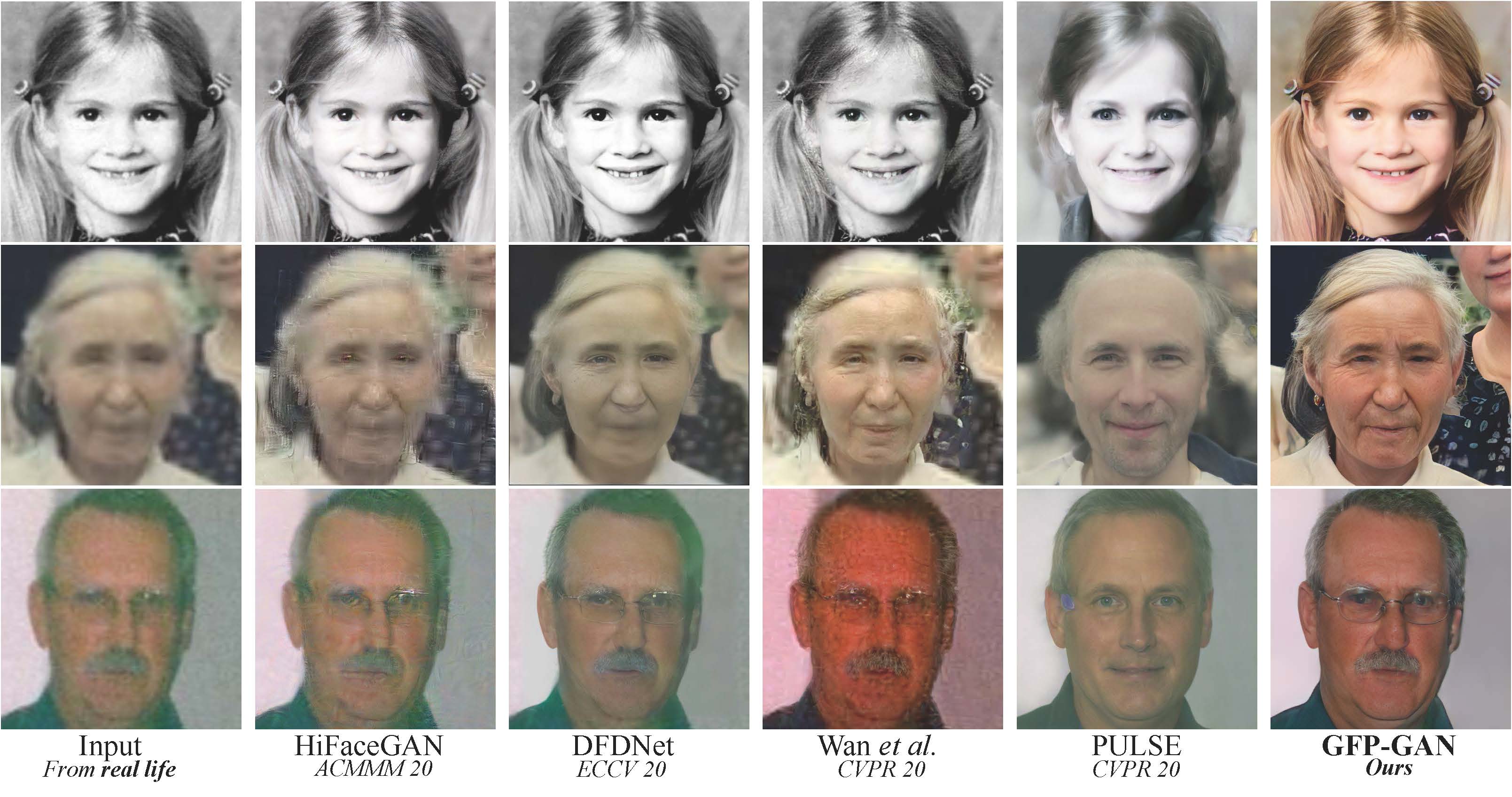

+GFPGAN aims at developing **Practical Algorithm for Real-world Face Restoration**.

+1. We provide a *clean* version of GFPGAN, which can run without CUDA extensions. So that it can run in **Windows** or on **CPU mode**.

-GFPGAN is a blind face restoration algorithm towards real-world face images.

+GFPGAN aims at developing **Practical Algorithm for Real-world Face Restoration**.

+It leverages rich and diverse priors encapsulated in a pretrained face GAN (*e.g.*, StyleGAN2) for blind face restoration.

- -[Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo)

+:triangular_flag_on_post: **Updates**

+

+- :white_check_mark: We provide a *clean* version of GFPGAN, which does not require CUDA extensionts.

+- :white_check_mark: We provide an updated model without colorizing faces.

### :book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

+

> [[Paper](https://arxiv.org/abs/2101.04061)] [[Project Page](https://xinntao.github.io/projects/gfpgan)] [Demo]

-[Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo)

+:triangular_flag_on_post: **Updates**

+

+- :white_check_mark: We provide a *clean* version of GFPGAN, which does not require CUDA extensionts.

+- :white_check_mark: We provide an updated model without colorizing faces.

### :book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

+

> [[Paper](https://arxiv.org/abs/2101.04061)] [[Project Page](https://xinntao.github.io/projects/gfpgan)] [Demo]

> [Xintao Wang](https://xinntao.github.io/), [Yu Li](https://yu-li.github.io/), [Honglun Zhang](https://scholar.google.com/citations?hl=en&user=KjQLROoAAAAJ), [Ying Shan](https://scholar.google.com/citations?user=4oXBp9UAAAAJ&hl=en)

> Applied Research Center (ARC), Tencent PCG

-#### Abstract

-

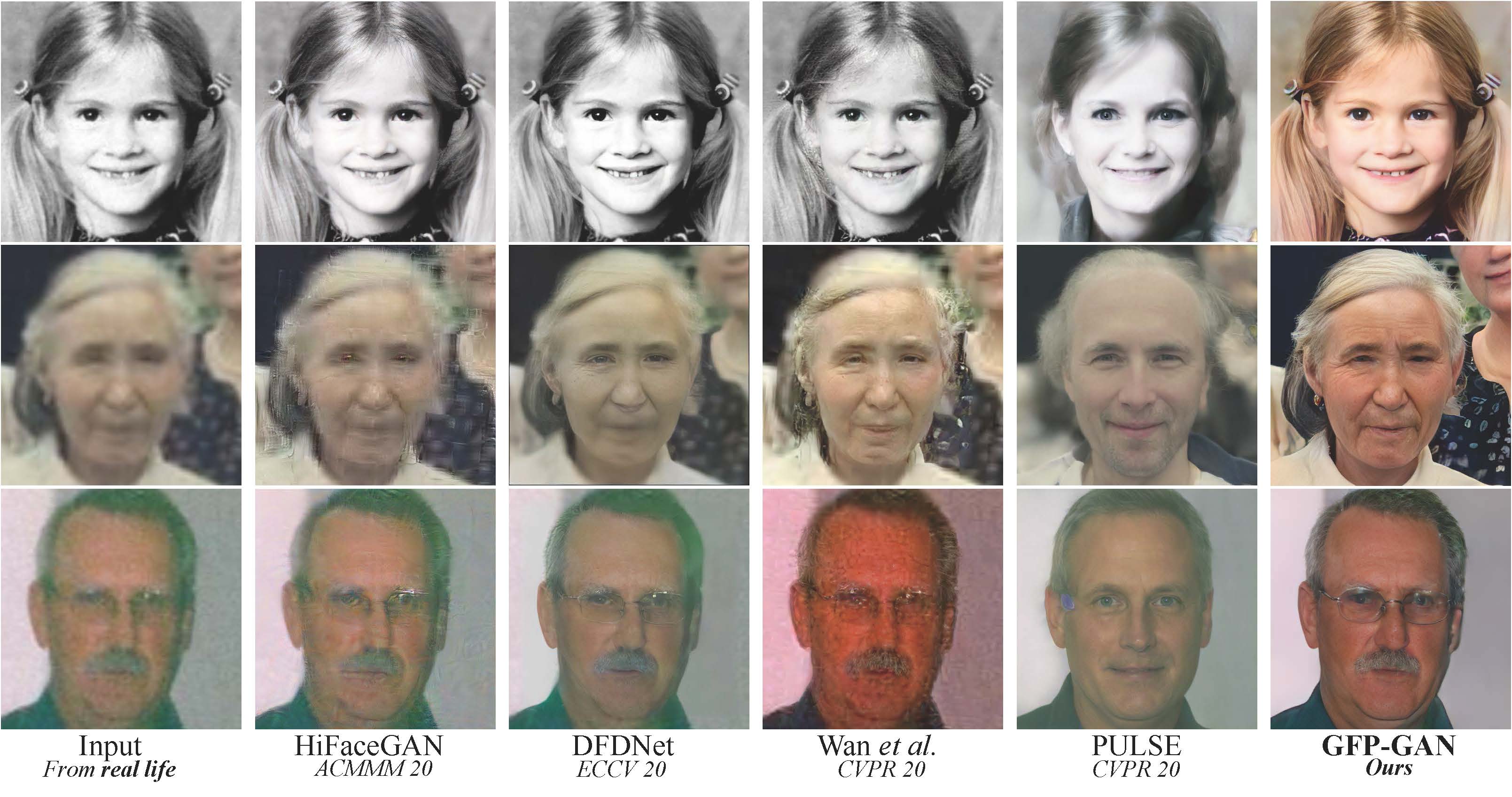

-Blind face restoration usually relies on facial priors, such as facial geometry prior or reference prior, to restore realistic and faithful details. However, very low-quality inputs cannot offer accurate geometric prior while high-quality references are inaccessible, limiting the applicability in real-world scenarios. In this work, we propose GFP-GAN that leverages **rich and diverse priors encapsulated in a pretrained face GAN** for blind face restoration. This Generative Facial Prior (GFP) is incorporated into the face restoration process via novel channel-split spatial feature transform layers, which allow our method to achieve a good balance of realness and fidelity. Thanks to the powerful generative facial prior and delicate designs, our GFP-GAN could jointly restore facial details and enhance colors with just a single forward pass, while GAN inversion methods require expensive image-specific optimization at inference. Extensive experiments show that our method achieves superior performance to prior art on both synthetic and real-world datasets.

-

-#### BibTeX

-

- @InProceedings{wang2021gfpgan,

- author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},

- title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},

- booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

- year = {2021}

- }

-

@@ -40,25 +32,23 @@ Blind face restoration usually relies on facial priors, such as facial geometry

- Python >= 3.7 (Recommend to use [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

- [PyTorch >= 1.7](https://pytorch.org/)

-- NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

-- Linux (We have not tested on Windows)

+- Option: NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

+- Option: Linux (We have not tested on Windows)

### Installation

+We now provide a *clean* version of GFPGAN, which does not require customized CUDA extensions.

+If you want want to use the original model in our paper, please see [PaperModel.md](PaperModel.md) for installation.

+

1. Clone repo

```bash

- git clone https://github.com/xinntao/GFPGAN.git

+ git clone https://github.com/TencentARC/GFPGAN.git

cd GFPGAN

```

1. Install dependent packages

- As StyleGAN2 uses customized PyTorch C++ extensions, you need to **compile them during installation** or **load then just-in-time(JIT)**.

- You can refer to [BasicSR-INSTALL.md](https://github.com/xinntao/BasicSR/blob/master/INSTALL.md) for more details.

-

- **Option 1: Load extensions just-in-time(JIT)** (For those just want to do simple inferences, may have less issues)

-

```bash

# Install basicsr - https://github.com/xinntao/BasicSR

# We use BasicSR for both training and inference

@@ -69,56 +59,41 @@ Blind face restoration usually relies on facial priors, such as facial geometry

pip install facexlib

pip install -r requirements.txt

-

- # remember to set BASICSR_JIT=True before your running commands

```

- **Option 2: Compile extensions during installation** (For those need to train/inference for many times)

-

- ```bash

- # Install basicsr - https://github.com/xinntao/BasicSR

- # We use BasicSR for both training and inference

- # Set BASICSR_EXT=True to compile the cuda extensions in the BasicSR - It may take several minutes to compile, please be patient

- # Add -vvv for detailed log prints

- BASICSR_EXT=True pip install basicsr -vvv

-

- # Install facexlib - https://github.com/xinntao/facexlib

- # We use face detection and face restoration helper in the facexlib package

- pip install facexlib

+## :zap: Quick Inference

- pip install -r requirements.txt

- ```

+Download pre-trained models: [GFPGANCleanv1-NoCE-C2.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth)

-## :zap: Quick Inference

+```bash

+wget https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth -P experiments/pretrained_models

+```

-Download pre-trained models: [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth)

+**Inference!**

```bash

-wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models

+python inference_gfpgan_full.py --upscale_factor 2 --test_path inputs/whole_imgs --save_root results

```

-- Option 1: Load extensions just-in-time(JIT)

+## :european_castle: Model Zoo

- ```bash

- BASICSR_JIT=True python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs

+- [GFPGANCleanv1-NoCE-C2.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth)

+- [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth)

- # for aligned images

- BASICSR_JIT=True python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/cropped_faces --aligned

- ```

+## :computer: Training

-- Option 2: Have successfully compiled extensions during installation

+We provide the training codes for GFPGAN (used in our paper).

+You could improve it according to your own needs.

- ```bash

- python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs

+**Tips**

- # for aligned images

- python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/cropped_faces --aligned

- ```

+1. More high quality faces can improve the restoration quality.

+2. You may need to perform some pre-processing, such as beauty makeup.

-## :computer: Training

-We provide complete training codes for GFPGAN.

-You could improve it according to your own needs.

+**Procedures**

+

+(You can try a simple version ( `train_gfpgan_v1_simple.yml`) that does not require face component landmarks.)

1. Dataset preparation: [FFHQ](https://github.com/NVlabs/ffhq-dataset)

@@ -133,13 +108,18 @@ You could improve it according to your own needs.

> python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 train.py -opt train_gfpgan_v1.yml --launcher pytorch

-or load extensions just-in-time(JIT)

+## :scroll: License and Acknowledgement

-> BASICSR_JIT=True python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 train.py -opt train_gfpgan_v1.yml --launcher pytorch

+GFPGAN is released under Apache License Version 2.0.

-## :scroll: License and Acknowledgement

+## BibTeX

-GFPGAN is realeased under Apache License Version 2.0.

+ @InProceedings{wang2021gfpgan,

+ author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},

+ title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},

+ booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ year = {2021}

+ }

## :e-mail: Contact

diff --git a/README_CN.md b/README_CN.md

deleted file mode 100644

index 462fed3f..00000000

--- a/README_CN.md

+++ /dev/null

@@ -1,103 +0,0 @@

-# GFPGAN (CVPR 2021)

-

-[**Paper**](https://arxiv.org/abs/2101.04061) **|** [**Project Page**](https://xinntao.github.io/projects/gfpgan) [English](README.md) **|** [简体中文](README_CN.md)

-

-GFPGAN is a blind face restoration algorithm towards real-world face images.

-

- -[Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo)

-

-### :book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

-> [[Paper](https://arxiv.org/abs/2101.04061)] [[Project Page](https://xinntao.github.io/projects/gfpgan)] [Demo]

-[Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo)

-

-### :book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

-> [[Paper](https://arxiv.org/abs/2101.04061)] [[Project Page](https://xinntao.github.io/projects/gfpgan)] [Demo]

-> [Xintao Wang](https://xinntao.github.io/), [Yu Li](https://yu-li.github.io/), [Honglun Zhang](https://scholar.google.com/citations?hl=en&user=KjQLROoAAAAJ), [Ying Shan](https://scholar.google.com/citations?user=4oXBp9UAAAAJ&hl=en)

-> Applied Research Center (ARC), Tencent PCG

-

-#### Abstract

-

-Blind face restoration usually relies on facial priors, such as facial geometry prior or reference prior, to restore realistic and faithful details. However, very low-quality inputs cannot offer accurate geometric prior while high-quality references are inaccessible, limiting the applicability in real-world scenarios. In this work, we propose GFP-GAN that leverages **rich and diverse priors encapsulated in a pretrained face GAN** for blind face restoration. This Generative Facial Prior (GFP) is incorporated into the face restoration process via novel channel-split spatial feature transform layers, which allow our method to achieve a good balance of realness and fidelity. Thanks to the powerful generative facial prior and delicate designs, our GFP-GAN could jointly restore facial details and enhance colors with just a single forward pass, while GAN inversion methods require expensive image-specific optimization at inference. Extensive experiments show that our method achieves superior performance to prior art on both synthetic and real-world datasets.

-

-#### BibTeX

-

- @InProceedings{wang2021gfpgan,

- author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},

- title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},

- booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

- year = {2021}

- }

-

-

-  -

-

-

----

-

-## :wrench: Dependencies and Installation

-

-- Python >= 3.7 (Recommend to use [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

-- [PyTorch >= 1.7](https://pytorch.org/)

-- NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

-

-### Installation

-

-1. Clone repo

-

- ```bash

- git clone https://github.com/xinntao/GFPGAN.git

- cd GFPGAN

- ```

-

-1. Install dependent packages

-

- ```bash

- # Install basicsr - https://github.com/xinntao/BasicSR

- # We use BasicSR for both training and inference

- # Set BASICSR_EXT=True to compile the cuda extensions in the BasicSR - It may take several minutes to compile, please be patient

- BASICSR_EXT=True pip install basicsr

-

- # Install facexlib - https://github.com/xinntao/facexlib

- # We use face detection and face restoration helper in the facexlib package

- pip install facexlib

-

- pip install -r requirements.txt

- ```

-

-## :zap: Quick Inference

-

-Download pre-trained models: [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth)

-

-```bash

-wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models

-```

-

-```bash

-python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs

-

-# for aligned images

-python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/cropped_faces --aligned

-```

-

-## :computer: Training

-

-We provide complete training codes for GFPGAN.

-You could improve it according to your own needs.

-

-1. Dataset preparation: [FFHQ](https://github.com/NVlabs/ffhq-dataset)

-

-1. Download pre-trained models and other data. Put them in the `experiments/pretrained_models` folder.

- 1. [Pretrained StyleGAN2 model: StyleGAN2_512_Cmul1_FFHQ_B12G4_scratch_800k.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/StyleGAN2_512_Cmul1_FFHQ_B12G4_scratch_800k.pth)

- 1. [Component locations of FFHQ: FFHQ_eye_mouth_landmarks_512.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/FFHQ_eye_mouth_landmarks_512.pth)

- 1. [A simple ArcFace model: arcface_resnet18.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/arcface_resnet18.pth)

-

-1. Modify the configuration file `train_gfpgan_v1.yml` accordingly.

-

-1. Training

-

-> python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 train.py -opt train_gfpgan_v1.yml --launcher pytorch

-

-## :scroll: License and Acknowledgement

-

-GFPGAN is realeased under Apache License Version 2.0.

-

-## :e-mail: Contact

-

-If you have any question, please email `xintao.wang@outlook.com` or `xintaowang@tencent.com`.

diff --git a/train_gfpgan_v1.yml b/train_gfpgan_v1.yml

index 4fe6e5c1..0997d7cd 100644

--- a/train_gfpgan_v1.yml

+++ b/train_gfpgan_v1.yml

@@ -34,6 +34,12 @@ datasets:

color_jitter_pt_prob: 0.3

gray_prob: 0.01

+ # If you do not want colorization, please set

+ # color_jitter_prob: ~

+ # color_jitter_pt_prob: ~

+ # gray_prob: 0.01

+ # gt_gray: True

+

crop_components: true

component_path: experiments/pretrained_models/FFHQ_eye_mouth_landmarks_512.pth

eye_enlarge_ratio: 1.4

@@ -42,7 +48,7 @@ datasets:

use_shuffle: true

num_worker_per_gpu: 6

batch_size_per_gpu: 3

- dataset_enlarge_ratio: 100

+ dataset_enlarge_ratio: 1

prefetch_mode: ~

val:

diff --git a/train_gfpgan_v1_simple.yml b/train_gfpgan_v1_simple.yml

new file mode 100644

index 00000000..8bf4f089

--- /dev/null

+++ b/train_gfpgan_v1_simple.yml

@@ -0,0 +1,216 @@

+# general settings

+name: train_GFPGANv1_512_simple

+model_type: GFPGANModel

+num_gpu: 4

+manual_seed: 0

+

+# dataset and data loader settings

+datasets:

+ train:

+ name: FFHQ

+ type: FFHQDegradationDataset

+ # dataroot_gt: datasets/ffhq/ffhq_512.lmdb

+ dataroot_gt: datasets/ffhq/ffhq_512

+ io_backend:

+ # type: lmdb

+ type: disk

+

+ use_hflip: true

+ mean: [0.5, 0.5, 0.5]

+ std: [0.5, 0.5, 0.5]

+ out_size: 512

+

+ blur_kernel_size: 41

+ kernel_list: ['iso', 'aniso']

+ kernel_prob: [0.5, 0.5]

+ blur_sigma: [0.1, 10]

+ downsample_range: [0.8, 8]

+ noise_range: [0, 20]

+ jpeg_range: [60, 100]

+

+ # color jitter and gray

+ color_jitter_prob: 0.3

+ color_jitter_shift: 20

+ color_jitter_pt_prob: 0.3

+ gray_prob: 0.01

+

+ # If you do not want colorization, please set

+ # color_jitter_prob: ~

+ # color_jitter_pt_prob: ~

+ # gray_prob: 0.01

+ # gt_gray: True

+

+ # crop_components: false

+ # component_path: experiments/pretrained_models/FFHQ_eye_mouth_landmarks_512.pth

+ # eye_enlarge_ratio: 1.4

+

+ # data loader

+ use_shuffle: true

+ num_worker_per_gpu: 6

+ batch_size_per_gpu: 3

+ dataset_enlarge_ratio: 1

+ prefetch_mode: ~

+

+ val:

+ # Please modify accordingly to use your own validation

+ # Or comment the val block if do not need validation during training

+ name: validation

+ type: PairedImageDataset

+ dataroot_lq: datasets/faces/validation/input

+ dataroot_gt: datasets/faces/validation/reference

+ io_backend:

+ type: disk

+ mean: [0.5, 0.5, 0.5]

+ std: [0.5, 0.5, 0.5]

+ scale: 1

+

+# network structures

+network_g:

+ type: GFPGANv1

+ out_size: 512

+ num_style_feat: 512

+ channel_multiplier: 1

+ resample_kernel: [1, 3, 3, 1]

+ decoder_load_path: experiments/pretrained_models/StyleGAN2_512_Cmul1_FFHQ_B12G4_scratch_800k.pth

+ fix_decoder: true

+ num_mlp: 8

+ lr_mlp: 0.01

+ input_is_latent: true

+ different_w: true

+ narrow: 1

+ sft_half: true

+

+network_d:

+ type: StyleGAN2Discriminator

+ out_size: 512

+ channel_multiplier: 1

+ resample_kernel: [1, 3, 3, 1]

+

+# network_d_left_eye:

+# type: FacialComponentDiscriminator

+

+# network_d_right_eye:

+# type: FacialComponentDiscriminator

+

+# network_d_mouth:

+# type: FacialComponentDiscriminator

+

+network_identity:

+ type: ResNetArcFace

+ block: IRBlock

+ layers: [2, 2, 2, 2]

+ use_se: False

+

+# path

+path:

+ pretrain_network_g: ~

+ param_key_g: params_ema

+ strict_load_g: ~

+ pretrain_network_d: ~

+ # pretrain_network_d_left_eye: ~

+ # pretrain_network_d_right_eye: ~

+ # pretrain_network_d_mouth: ~

+ pretrain_network_identity: experiments/pretrained_models/arcface_resnet18.pth

+ # resume

+ resume_state: ~

+ ignore_resume_networks: ['network_identity']

+

+# training settings

+train:

+ optim_g:

+ type: Adam

+ lr: !!float 2e-3

+ optim_d:

+ type: Adam

+ lr: !!float 2e-3

+ optim_component:

+ type: Adam

+ lr: !!float 2e-3

+

+ scheduler:

+ type: MultiStepLR

+ milestones: [600000, 700000]

+ gamma: 0.5

+

+ total_iter: 800000

+ warmup_iter: -1 # no warm up

+

+ # losses

+ # pixel loss

+ pixel_opt:

+ type: L1Loss

+ loss_weight: !!float 1e-1

+ reduction: mean

+ # L1 loss used in pyramid loss, component style loss and identity loss

+ L1_opt:

+ type: L1Loss

+ loss_weight: 1

+ reduction: mean

+

+ # image pyramid loss

+ pyramid_loss_weight: 1

+ remove_pyramid_loss: 50000

+ # perceptual loss (content and style losses)

+ perceptual_opt:

+ type: PerceptualLoss

+ layer_weights:

+ # before relu

+ 'conv1_2': 0.1

+ 'conv2_2': 0.1

+ 'conv3_4': 1

+ 'conv4_4': 1

+ 'conv5_4': 1

+ vgg_type: vgg19

+ use_input_norm: true

+ perceptual_weight: !!float 1

+ style_weight: 50

+ range_norm: true

+ criterion: l1

+ # gan loss

+ gan_opt:

+ type: GANLoss

+ gan_type: wgan_softplus

+ loss_weight: !!float 1e-1

+ # r1 regularization for discriminator

+ r1_reg_weight: 10

+ # facial component loss

+ # gan_component_opt:

+ # type: GANLoss

+ # gan_type: vanilla

+ # real_label_val: 1.0

+ # fake_label_val: 0.0

+ # loss_weight: !!float 1

+ # comp_style_weight: 200

+ # identity loss

+ identity_weight: 10

+

+ net_d_iters: 1

+ net_d_init_iters: 0

+ net_d_reg_every: 16

+

+# validation settings

+val:

+ val_freq: !!float 5e3

+ save_img: true

+

+ metrics:

+ psnr: # metric name, can be arbitrary

+ type: calculate_psnr

+ crop_border: 0

+ test_y_channel: false

+

+# logging settings

+logger:

+ print_freq: 100

+ save_checkpoint_freq: !!float 5e3

+ use_tb_logger: true

+ wandb:

+ project: ~

+ resume_id: ~

+

+# dist training settings

+dist_params:

+ backend: nccl

+ port: 29500

+

+find_unused_parameters: true

-

-