Advanced guide for larger areas

This guide is intended for advanced users who want to use the custom terrain feature of Terra++ and need to generate a dataset for a larger area, such as a whole country. The only condition is that your raster data already contains CRS metadata. You can check this with a tool like gdalinfo.

For example, if you run gdalinfo dem.tif (where dem.tif is a file from your raster data) and the output contains 'Coordinate System is:...', then this guide is suitable for you. This assumes that all .tif files in your raster data contain CRS metadata. If not, you can set the projection of your raster data with set_projection.py, or you can use the regular procedure with QGIS instead.
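The gdalinfo check above can also be scripted. The following is a minimal sketch, not part of the guide's scripts: the function names are hypothetical, and it assumes that `gdalinfo` is on your PATH and that a file with CRS metadata prints the 'Coordinate System is:' line mentioned above.

```python
import subprocess


def has_crs_metadata(gdalinfo_output: str) -> bool:
    """Return True if the gdalinfo text reports a coordinate system."""
    return "Coordinate System is:" in gdalinfo_output


def file_has_crs(path: str) -> bool:
    """Run gdalinfo on one raster file and inspect its output.

    Assumes the gdalinfo executable is available on PATH.
    """
    result = subprocess.run(
        ["gdalinfo", path], capture_output=True, text=True, check=True
    )
    return has_crs_metadata(result.stdout)
```

Calling `file_has_crs("dem.tif")` on one file from your raster data should tell you whether that file qualifies; the other files would need the same check.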

Another way is to check with QGIS, by dragging one .tif file from your raster data into the window. If the imported file is positioned correctly and you don't need to set the CRS manually (when you right-click on the layer on the left side and select Set CRS/Set Layer CRS..., the CRS is already set), then this guide is suitable for you. This also assumes that all .tif files in your raster data contain CRS metadata. Again, if not, you can set the projection of your raster data with set_projection.py, or you can use the regular procedure with QGIS instead.

The following script also supports uploading the dataset via FTP/SFTP. Also note that the script scans for .tif files recursively (all files in the source folder and its subfolders).
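To preview which files a recursive scan would pick up, something like the following sketch can help. It is not the code used by the script; `find_tif_files` is a hypothetical helper, and matching the extension case-insensitively is an assumption.

```python
from pathlib import Path


def find_tif_files(source_folder):
    """Return every .tif file under source_folder, including subfolders."""
    root = Path(source_folder)
    return sorted(
        path
        for path in root.rglob("*")
        # Assumption: the extension is matched case-insensitively.
        if path.is_file() and path.suffix.lower() == ".tif"
    )
```

Printing the result for your source folder before running the real script is a quick way to spot stray .tif files that you did not mean to include.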

- First, download and install the latest release of QGIS in OSGeo4W

- I highly recommend that you also install GDAL, as it can be useful for this guide

The create_dataset script itself outputs the dataset extent (in EPSG:4326 [WGS84]) in the Log Messages (The dataset bounds are,...). Once you finish this guide, you can use this info to follow Part two: Generating/using your dataset starting at step E. Dataset bounds. If you wish to find the dataset extent manually and you've installed GDAL, follow the next steps; otherwise continue to step C. Note that if you wish to do this, you must do the following steps before you run the create_dataset script for the first time.

- Set

cleanuponline:52to False and continue on with step C, but before that read the step bellow - After you've run the script (once you finish step D), navigate to your source folder, and with a tool like

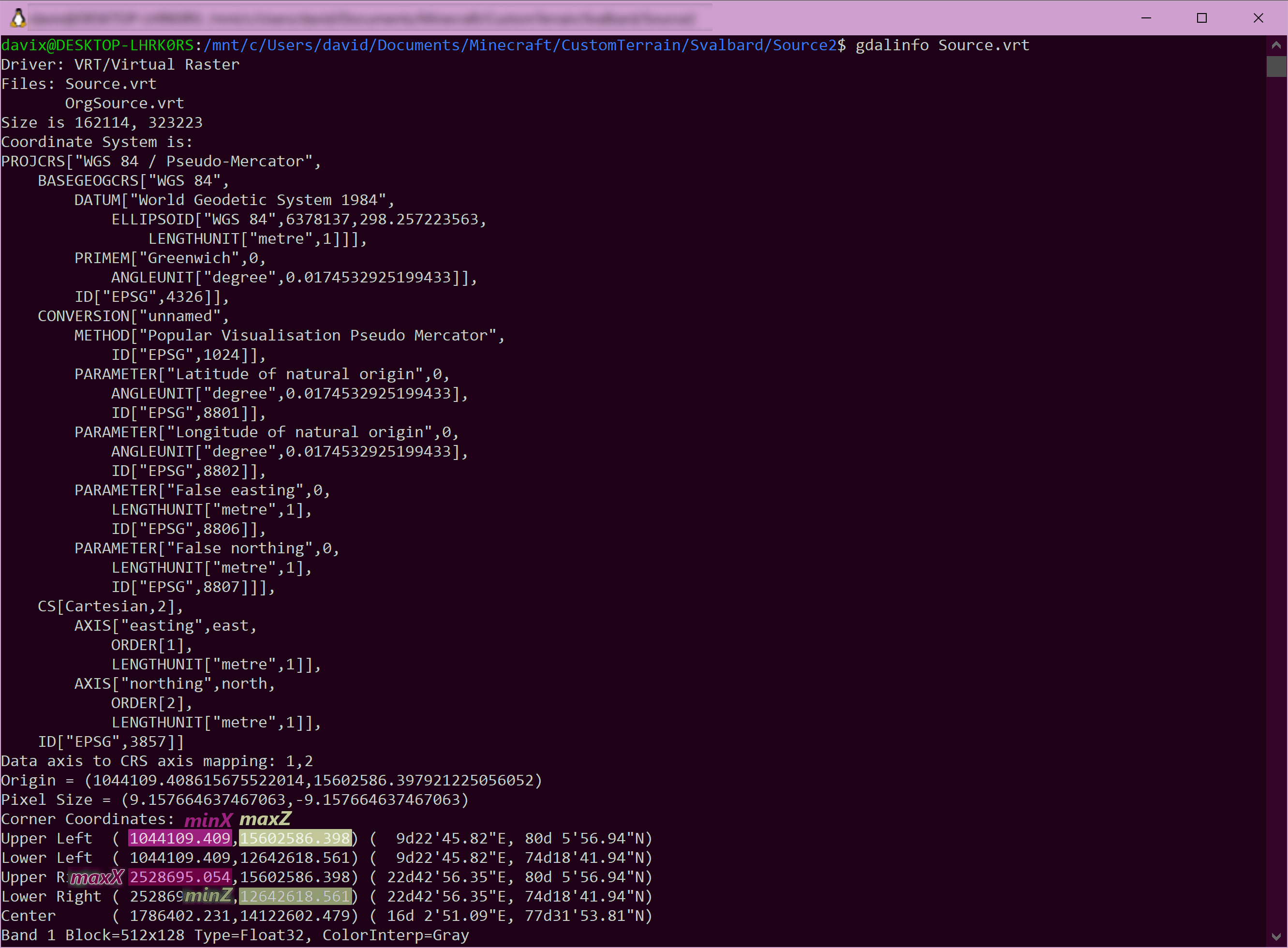

gdalinfo(ex.gdalinfo Source.vrt), find out the extent of the fileSource.vrt. The EPSG ofSource.vrtis 3857 (Web Mercator) - Example of

gdalinfooutput and how you would use it the step E. Dataset bounds in Part two: Generating/using your dataset can be seen in the image above.
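Because `Source.vrt` is in EPSG:3857, corner coordinates read in meters have to be expressed in degrees before they can be used as EPSG:4326 bounds. The sketch below applies the standard spherical Web Mercator inverse formulas; the function names are hypothetical and it is only an illustration, not what the create_dataset script does internally.

```python
import math

# Sphere radius used by Web Mercator (the WGS84 semi-major axis, in meters).
EARTH_RADIUS_M = 6378137.0


def web_mercator_to_wgs84(x, y):
    """Convert one EPSG:3857 point (meters) to (lon, lat) in degrees."""
    lon = math.degrees(x / EARTH_RADIUS_M)
    lat = math.degrees(2.0 * math.atan(math.exp(y / EARTH_RADIUS_M)) - math.pi / 2.0)
    return lon, lat


def extent_to_wgs84(min_x, min_y, max_x, max_y):
    """Convert an EPSG:3857 extent to (west, south, east, north) in degrees."""
    west, south = web_mercator_to_wgs84(min_x, min_y)
    east, north = web_mercator_to_wgs84(max_x, max_y)
    return west, south, east, north
```

You would pass the lower-left and upper-right corner values from the `gdalinfo` output to `extent_to_wgs84`.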

After testing the various resampling algorithms, I concluded that near and mode produce the most accurate colors. By default, the script uses the near resampling algorithm. You can choose another one if you wish, but be sure to take a careful look at the above image first. You can change the algorithm on line:41 in the script.
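One plausible reason why near and mode hold up better, offered here as an assumption rather than the author's stated explanation, is that the dataset is rendered in the terrarium format, where the elevation is packed into the red, green and blue channels. An algorithm that averages channel values can then produce a color that decodes to a very different height. The sketch below uses the publicly documented Terrarium formula; the helper names are hypothetical.

```python
def encode_terrarium(height_m):
    """Pack an elevation in meters into Terrarium (r, g, b) channels."""
    value = height_m + 32768.0
    r = int(value // 256)
    g = int(value % 256)
    b = int((value - int(value)) * 256)
    return r, g, b


def decode_terrarium(r, g, b):
    """Recover the elevation in meters from Terrarium channels."""
    return (r * 256 + g + b / 256) - 32768


low = encode_terrarium(255.0)   # (128, 255, 0)
high = encode_terrarium(256.0)  # (129, 0, 0)

# Averaging the channels, as an interpolating resampler effectively does:
blended = tuple((a + b) // 2 for a, b in zip(low, high))
print(decode_terrarium(*blended))  # 127.0, far from the true mean of 255.5
```

Picking one of the existing pixels, as near and mode do, always yields a color that decodes to a height that was actually present in the source.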

- First download the `create_dataset.py` script

- Open QGIS as an administrator (Right-click, `Run as administrator` on Windows)

- Next, navigate to `Plugins/Python Console` in the toolbar

- In the opened bottom panel click on the `Show Editor` button (Script icon)

- Using the `Open Script...` button open the `create_dataset.py` file

- Once the script opens navigate to `line:37`

- Here set `source_folder` to the folder where your raster data is saved. You can use either `\\` or `/` for the path separators

- Next, on `line:39` change `output_directory` to the folder where you want to save the dataset (in this folder the `zoom` folder will be created). Note, if you wish to upload the dataset via FTP/SFTP, you don't need to change this

- On `line:40` change `zoom` to the zoom you wish to render the dataset to. To find out the proper zoom level follow the sub-step Finding out the zoom level in Part two: Generating/using your dataset and return here
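As a rough illustration of the three parameters above, the edited lines might end up looking like the sketch below. The variable names come from the steps above, but every value is a made-up example, and the `PureWindowsPath` comparison is only there to show that both separator styles describe the same folder.

```python
from pathlib import PureWindowsPath

# Hypothetical example values; replace them with your own folders and zoom.
source_folder = "C:/Data/Elevation/Source"
output_directory = "C:/Data/Elevation/Dataset"
zoom = 17

# The same source folder written with escaped backslashes instead.
backslash_form = "C:\\Data\\Elevation\\Source"
same_folder = PureWindowsPath(backslash_form) == PureWindowsPath(source_folder)
print(same_folder)
```

A single unescaped backslash inside a normal Python string can be read as an escape sequence, which is why the doubled form `\\` is used.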

If you wish to upload the dataset via FTP/SFTP, and not store the dataset locally, follow this sub-section, else skip it. The script can either upload each png individually or create a zip archive named RenderedDataset.zip and upload it via FTP/SFTP.

- First set `ftp_upload` on `line:43` to True

- If you wish to upload one zip archive, instead of individual files, set `ftp_one_file` to True

- If you wish to upload via SFTP, change `ftp_s` to True. Else keep it at False

- Next, set `ftp_upload_url` to the URL of your server, ex. an IP address (192.168.0.26) or domain (ftp.us.debian.org). **It's essential that you don't include 'ftp://' or 'sftp://'. Only specify the hostname and no additional path** (ex. ftp://192.168.0.26:2121/Dataset/Tiled is wrong)

- Set `ftp_upload_port` on `line:46` to your FTP port (by default 21)

- This step is very important. On `line:48` set `ftp_upload_folder` to the path or folder where you wish to upload the dataset to. In the above example, this would be set to `'Dataset/Tiled'`

- If you wish to log in anonymously, skip this step, else set `ftp_user` and `ftp_password` to your FTP login credentials
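The wrong example URL above can be split into the three separate settings the script expects. The sketch below does this with Python's standard `urllib.parse`; the helper names `split_ftp_url` and `is_bare_hostname` are hypothetical and not part of the script, and the check is deliberately simple (it would also reject IPv6 literals).

```python
from urllib.parse import urlsplit


def split_ftp_url(full_url):
    """Split a full FTP URL into (hostname, port, folder)."""
    parts = urlsplit(full_url)
    return parts.hostname, parts.port, parts.path.lstrip("/")


def is_bare_hostname(value):
    """True when value has no scheme, port or path attached."""
    return bool(value.strip()) and "://" not in value and "/" not in value and ":" not in value


host, port, folder = split_ftp_url("ftp://192.168.0.26:2121/Dataset/Tiled")

# Settings named as in the steps above; the values follow the example URL.
ftp_upload = True
ftp_one_file = False
ftp_s = False
ftp_upload_url = host        # "192.168.0.26"
ftp_upload_port = port       # 2121
ftp_upload_folder = folder   # "Dataset/Tiled"
```

Running `is_bare_hostname(ftp_upload_url)` before starting a long render is a cheap way to catch a pasted `ftp://` prefix.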

- Once you are done with setting up the input parameters, go through them once again to make sure they are set correctly, and then save the changes using the `Save` button (Floppy disk icon)

- Navigate to View/Panels/Log Messages in the toolbar

- Now, run the script by clicking on the `Run Script` button (green play button)

- It may lag a bit occasionally, but that is normal. Again, this can take a while

- The script outputs the progress into the Log Messages panel, and the overall progress is also shown in the progress bar. From 0% to 20% it creates the VRT file in the terrarium format, and from 20% to 80% it does the actual tiling

- The script skips empty tiles, so the number of rendered tiles reported at the end will in most cases be less than the number of tiles to render reported at the start

- Once it's done, QGIS will send you a notification, and the Log Messages panel will tell you how long the script ran
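To get a feel for how many tiles a given zoom level implies before you start a long run, you can estimate the count from the dataset bounds. The sketch below uses the standard slippy-map tile formulas; the function names are hypothetical, and the script's own count may differ, especially since it skips empty tiles.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) tile index that contains a WGS84 point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so points on the far edge stay inside the valid tile range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def estimate_tile_count(west, south, east, north, zoom):
    """Upper bound on the tiles covering the bounds at one zoom level."""
    x_min, y_min = lonlat_to_tile(west, north, zoom)
    x_max, y_max = lonlat_to_tile(east, south, zoom)
    return (x_max - x_min + 1) * (y_max - y_min + 1)
```

Each extra zoom level roughly quadruples the tile count, which helps explain why render times grow so quickly for large areas.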

For comparison, using the regular procedure with the loadraster_folder script and the QMetaTiles plugin, it took 23 minutes to render an area roughly the size of Canberra, Australia at zoom level 17, while it took 13 minutes with the create_dataset script.

From here on, follow Part two: Generating/using your dataset starting at step E. Dataset bounds, using the info from Log Messages (The dataset bounds are,...).

You can contact me on Discord, under davixdevelop#3914, or you can join us on our BTE Development Hub on Discord, and ask away under the #terraplusplus-support channel.