New (suspected) NAI model leak - AnythingV3.0 and VAE for it #4516

Replies: 11 comments 37 replies

-

|

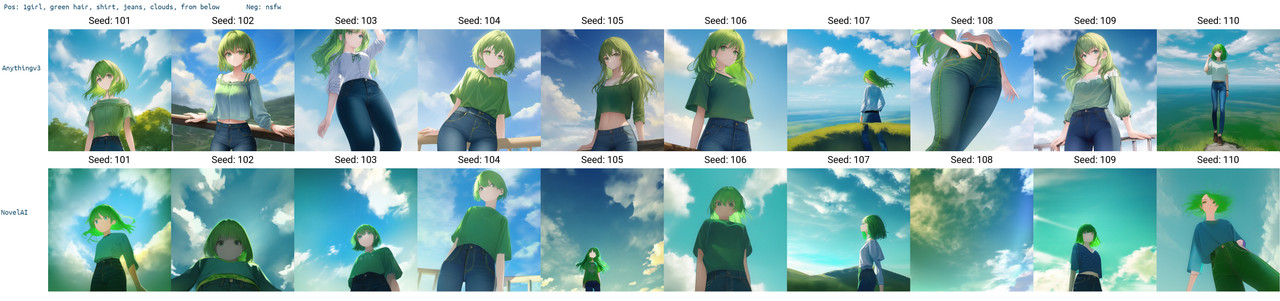

The test result of a user generated image shows that the effect of the generated image is much better than the previously leaked model. |

Beta Was this translation helpful? Give feedback.

-

From what I understand, it's not another leak, just resumed training and finetuning from the original leak?

-

Did a few comparisons: the "Anything" model looks better than NAI, at least on higher-resolution images. But many of the images look like they came from a completely different model.

-

Guys, I'm very confused about Anything-v3. When I train Textual Inversion with Anything-v3 as the base model, the generated images come out strangely distorted, as if I had trained without unloading the VAE.

I have confirmed that there is no VAE in the model directory and that the webui logs show no VAE being loaded, yet training Textual Inversion with Anything-v3 still gives results similar to training with a VAE loaded. I am not sure what the reason is. I also tried lowering the learning rate. That makes the image breakdown typical of training with a VAE loaded disappear, but then training seems to make no change at all.

I don't believe the training set is the problem either, because the same training set works very well when I use NovelAI as the base model for Textual Inversion: character similarity shows up at 3000 steps, a close approximation at 5000 steps, and perfect convergence above 8000 steps. So I am surprised, because I am sure that when training with Anything-v3 there is no VAE in the model directory and the settings show no VAE loaded.

Hypernetwork training is no use either. With NovelAI I found that a hypernetwork can forcibly suppress the VAE's effect on training, so I think hypernetwork training with Anything-v3 as the base model is not affected by a VAE. Even so, after a few thousand steps the output is the same as with no training at all.

-

I wanted to make a post about this model because it's so bizarre. For some reason, it produces the intended pictures well at an extremely low step count, without the usual glitches. Has anyone else seen these good results?

-

From what I've read at the suspected original source of the leak, Anything V3.0 is the result of mixing multiple hypernetworks and models, including the leaked NovelAI model. Someone made it and then posted it for others to share and use. This information comes from bilibili (the first place on the internet where I could find the Anything V3.0 model).

-

Where can I find the lore behind the NAI leaks and Anything?

-

With the introduction of

-

Can someone explain how I get the model file? On Hugging Face there is no model file, only a "safetensors" file.

-

The link https://huggingface.co/Linaqruf/anything-v3.0 now returns a 404.

-

https://huggingface.co/Linaqruf/anything-v3.0/tree/main

Some comparisons: questianon/sdupdates#1 (comment)

For those who understand Chinese, here are more comparisons: https://www.bilibili.com/read/cv19603218

I have also been trying it out and it indeed seems to be better than the animefull one, especially for hands holding stuff and backdrop. Note that it works with the animefull VAE and original SD VAE, just like the NAI model. In fact, the Anything VAE is exactly the same as NAI VAE.
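For anyone who wants to check the "exactly the same VAE" claim on their own copies, one simple approach is to compare file hashes. This is only a minimal sketch: the two file names below are hypothetical placeholders (use whatever your downloads are actually called), and matching hashes only show that the two files are byte-for-byte identical. Two files with the same weights but different metadata or container formats would still hash differently.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read fixed-size chunks so large checkpoint files are not
        # loaded into memory all at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_file_contents(path_a, path_b):
    """True if both files have identical bytes (compared via SHA-256)."""
    return sha256_of_file(path_a) == sha256_of_file(path_b)


if __name__ == "__main__":
    # Hypothetical file names, assumed for illustration only.
    anything_vae = Path("anything-v3.0.vae.pt")
    nai_vae = Path("animevae.pt")
    if anything_vae.exists() and nai_vae.exists():
        print("identical:", same_file_contents(anything_vae, nai_vae))
    else:
        print("Put both VAE files next to this script, or edit the paths.")
```

If the hashes differ, that does not by itself prove the weights differ; comparing the loaded tensors would be the next step.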

This model should be a finetuned version of the original NAI animefull model. All embeddings and hypernetworks designed for the original NAI model should work on this.
