
6) Nerf rendering, compositing and web viewing

- Nerf Rendering Basics

- Web rendering


Faster inference and real-time rendering will be crucial for NeRF to be adopted widely.

At the moment, an NVIDIA RTX 30- or 40-series graphics card is required, which severely limits the number of machines on which NeRFs can be generated and viewed unless cloud computing is used.

In NeRF we take advantage of a hierarchical sampling scheme that first uses a uniform sampler and is followed by a PDF sampler.

The uniform sampler distributes samples evenly across a predefined distance range from the camera. These are then used to compute an initial render of the scene. The renderer optionally produces a weight for each sample that correlates with how important that sample was to the final render.

The PDF sampler uses these weights to generate a new set of samples that are biased to regions of higher weight. In practice, these regions are near the surface of the object.

For all sampling, we use stratified samples during optimization and unmodified samples during inference.
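The two-stage scheme above can be sketched in plain Python. This is a minimal illustration, not the implementation of any particular library: the function names `uniform_samples` and `pdf_samples` are made up for this example, and it assumes that "unmodified" means unjittered bin midpoints and that the weights define a piecewise-constant PDF over the coarse bins.

```python
import bisect
import random


def uniform_samples(near, far, n, stratified, rng):
    """Return n depths in [near, far], one per equal-width bin."""
    width = (far - near) / n
    # Stratified: random offset inside each bin (optimization).
    # Otherwise: the bin midpoint (assumed meaning of "unmodified").
    return [near + (i + (rng.random() if stratified else 0.5)) * width
            for i in range(n)]


def pdf_samples(bin_edges, weights, n, stratified, rng):
    """Draw n depths from the piecewise-constant PDF given by weights.

    bin_edges has len(weights) + 1 entries; weights[k] belongs to the
    interval [bin_edges[k], bin_edges[k + 1]].
    """
    eps = 1e-5  # keeps the PDF valid even if every weight is zero
    total = sum(w + eps for w in weights)
    cdf = [0.0]
    for w in weights:
        cdf.append(cdf[-1] + (w + eps) / total)
    cdf[-1] = 1.0  # guard against floating-point drift

    samples = []
    for i in range(n):
        u = (i + (rng.random() if stratified else 0.5)) / n
        # Inverse-transform sampling: find k with cdf[k] <= u < cdf[k + 1].
        k = min(bisect.bisect_right(cdf, u) - 1, len(weights) - 1)
        frac = (u - cdf[k]) / (cdf[k + 1] - cdf[k])
        samples.append(bin_edges[k] + frac * (bin_edges[k + 1] - bin_edges[k]))
    return samples


rng = random.Random(0)
coarse = uniform_samples(2.0, 6.0, 4, stratified=True, rng=rng)
# Hypothetical weights from a coarse render: the surface lies in the third bin.
fine = pdf_samples([2.0, 3.0, 4.0, 5.0, 6.0], [0.0, 0.1, 0.8, 0.1], 8,
                   stratified=False, rng=rng)
```

With these example weights, most of the fine samples land between depths 4 and 5, which is the bin the coarse pass marked as most important.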

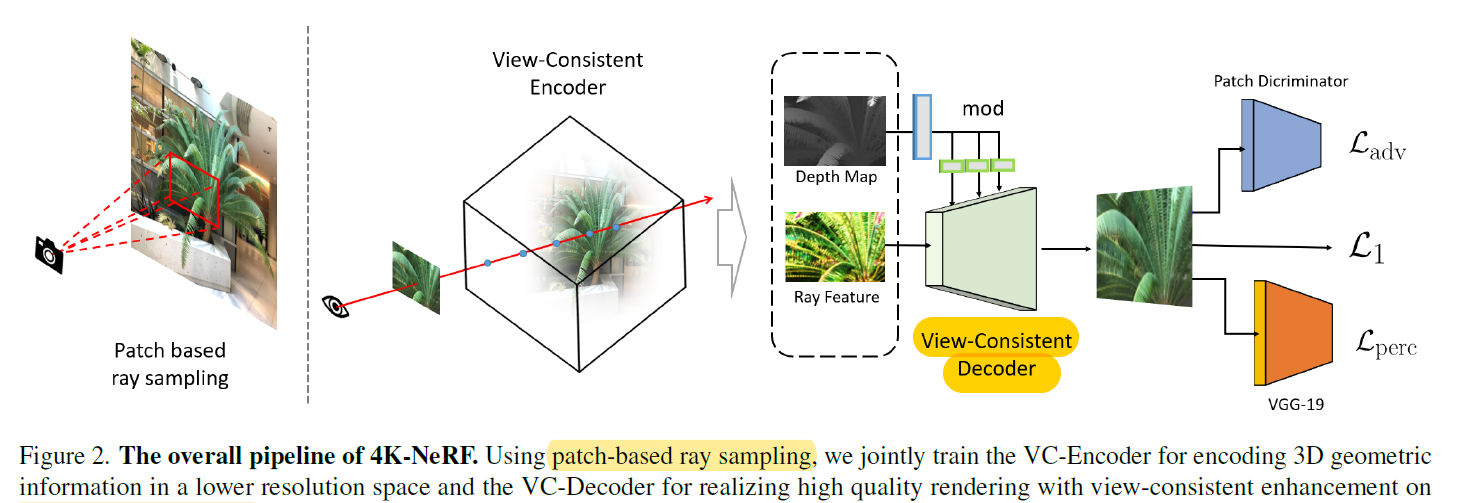

https://arxiv.org/pdf/2112.01759.pdf

https://github.com/frozoul/4K-NeRF

- https://arxiv.org/abs/2208.00945 Neural Radiance Field (NeRF) and its variants have exhibited great success on representing 3D scenes and synthesizing photo-realistic novel views. However, they are generally based on the pinhole

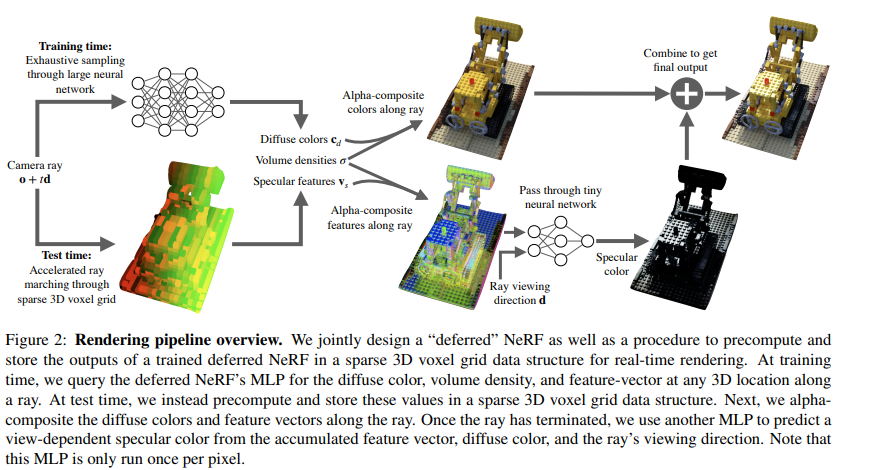

Neural radiance fields (NeRFs) use techniques from volume rendering to accumulate samples of the scene representation along rays, which lets the scene be rendered from any viewpoint.
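The accumulation along a ray can be sketched with the standard volume rendering quadrature. This is a minimal illustration under stated assumptions: `composite` is a name chosen for this example, and the densities, colors, and interval lengths are assumed to come from querying a trained model at the sample positions.

```python
import math


def composite(densities, colors, deltas):
    """Accumulate one ray. Returns (rgb, weights).

    densities: volume density at each sample
    colors:    (r, g, b) at each sample
    deltas:    length of the ray segment each sample represents
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha               # contribution of this sample
        weights.append(w)
        rgb = [acc + w * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha
    return rgb, weights


# Empty space, then a dense green surface that hides the blue sample behind it.
rgb, weights = composite(
    densities=[0.0, 1e9, 5.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

The per-sample weights returned here are the kind of importance values a hierarchical sampler can reuse to place more samples near surfaces.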

The neural network can also be converted to a mesh in certain circumstances (see https://github.com/bmild/nerf/blob/master/extract_mesh.ipynb). To do this, we first need to infer which locations are occupied by the object. This is done by creating a grid volume in the form of a cuboid covering the whole object, then using the NeRF model to predict whether each cell is occupied. This is the main reason why mesh construction is only available for 360° inward-facing scenes and not for forward-facing scenes.
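The occupancy step can be sketched as follows. This is a minimal illustration, not the linked notebook's code: `occupancy_grid` and `toy_density` are hypothetical names, and `toy_density` stands in for the density output of a trained NeRF. A surface-extraction step such as marching cubes would typically follow and is omitted here.

```python
def occupancy_grid(density_fn, lo, hi, n, threshold):
    """Query density at the centers of an n x n x n grid over the cuboid [lo, hi].

    Returns a nested list grid[i][j][k] of booleans (True = occupied).
    """
    step = [(h - l) / n for l, h in zip(lo, hi)]
    grid = []
    for i in range(n):
        plane = []
        for j in range(n):
            row = []
            for k in range(n):
                x = lo[0] + (i + 0.5) * step[0]
                y = lo[1] + (j + 0.5) * step[1]
                z = lo[2] + (k + 0.5) * step[2]
                row.append(density_fn(x, y, z) > threshold)
            plane.append(row)
        grid.append(plane)
    return grid


def toy_density(x, y, z):
    # Hypothetical stand-in for a trained NeRF: a solid sphere of radius 1.
    return 50.0 if x * x + y * y + z * z < 1.0 else 0.0


grid = occupancy_grid(toy_density, lo=(-1.5, -1.5, -1.5), hi=(1.5, 1.5, 1.5),
                      n=8, threshold=10.0)
occupied = sum(cell for plane in grid for row in plane for cell in row)
```

The cuboid has to enclose the whole object, which is straightforward when the cameras surround it; the grid resolution `n` and the density threshold are tuning choices.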

Mesh-based rendering has been around for a long time, and GPUs are optimized for it.

ADOP: Approximate Differentiable One-Pixel Point Rendering

https://t.co/npOqsAstAx https://t.co/LE4ZdckQPO

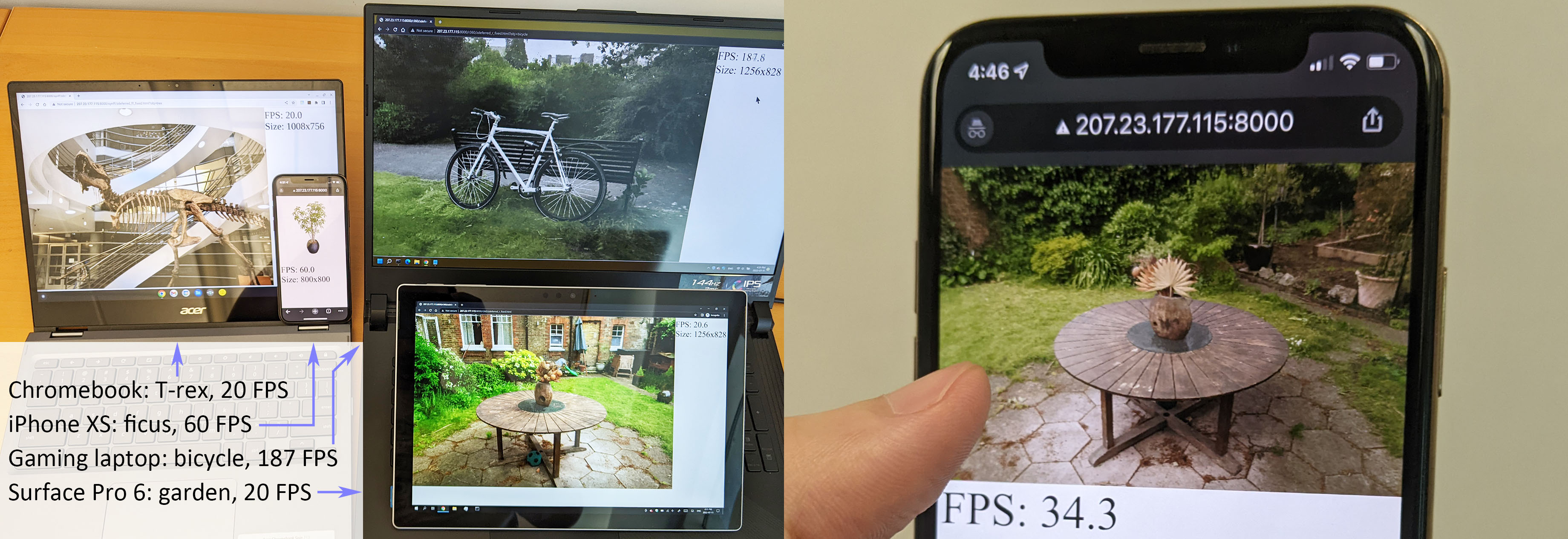

This work renders Neural Radiance Fields (NeRFs) in real time using PlenOctrees, an octree-based 3D representation which supports view-dependent effects. Our method can render 800x800 images at more than 150 FPS, which is over 3000 times faster than conventional NeRFs. We do so without sacrificing quality while preserving the ability of NeRFs to perform free-viewpoint rendering of scenes with arbitrary geometry and view-dependent effects. Real-time performance is achieved by pre-tabulating the NeRF into a PlenOctree.

In order to preserve view-dependent effects such as specularities, we factorize the appearance via closed-form spherical basis functions. Specifically, we show that it is possible to train NeRFs to predict a spherical harmonic representation of radiance, removing the viewing direction as an input to the neural network. Furthermore, we show that PlenOctrees can be directly optimized to further minimize the reconstruction loss, which leads to equal or better quality compared to competing methods. Moreover, this octree optimization step can be used to reduce the training time, as we no longer need to wait for the NeRF training to converge fully. Our real-time neural rendering approach may potentially enable new applications such as 6-DOF industrial and product visualizations, as well as next generation AR/VR systems. PlenOctrees are amenable to in-browser rendering as well.
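To make the spherical harmonic idea concrete, here is a minimal sketch of turning stored coefficients into a view-dependent color. It is an illustration under stated assumptions, not the PlenOctrees code: it uses only degrees 0 and 1 (four coefficients per color channel), one common sign convention for the real spherical harmonic basis, and a sigmoid to map the sum into [0, 1]. The names `sh_basis_deg1` and `sh_color` are made up for this example.

```python
import math

C0 = 0.28209479177387814  # Y_0^0 = 1 / (2 * sqrt(pi))
C1 = 0.4886025119029199   # sqrt(3 / (4 * pi)), magnitude of the degree-1 terms


def sh_basis_deg1(direction):
    """Real SH basis up to degree 1 at a unit direction (x, y, z)."""
    x, y, z = direction
    # Sign convention is an assumption; implementations differ.
    return [C0, -C1 * y, C1 * z, -C1 * x]


def sh_color(coeffs, direction):
    """coeffs: three lists (R, G, B) of 4 SH coefficients each."""
    basis = sh_basis_deg1(direction)
    color = []
    for channel in coeffs:
        raw = sum(k * b for k, b in zip(channel, basis))
        color.append(1.0 / (1.0 + math.exp(-raw)))  # sigmoid
    return color


# Hypothetical coefficients: red gets brighter when viewed along +z.
coeffs = [[0.0, 0.0, 2.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
from_above = sh_color(coeffs, (0.0, 0.0, 1.0))
from_below = sh_color(coeffs, (0.0, 0.0, -1.0))
```

Because the view direction enters only through the closed-form basis, the coefficients can be stored per octree leaf and evaluated at render time without running a network.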

Source https://alexyu.net/plenoctrees/


Realtime online demo: https://alexyu.net/plenoctrees/demo/?load=https://angjookanazawa.com/plenoctree_data/ficus_cams.draw.npz;https://angjookanazawa.com/plenoctree_data/ficus.npz&hide_layers=1

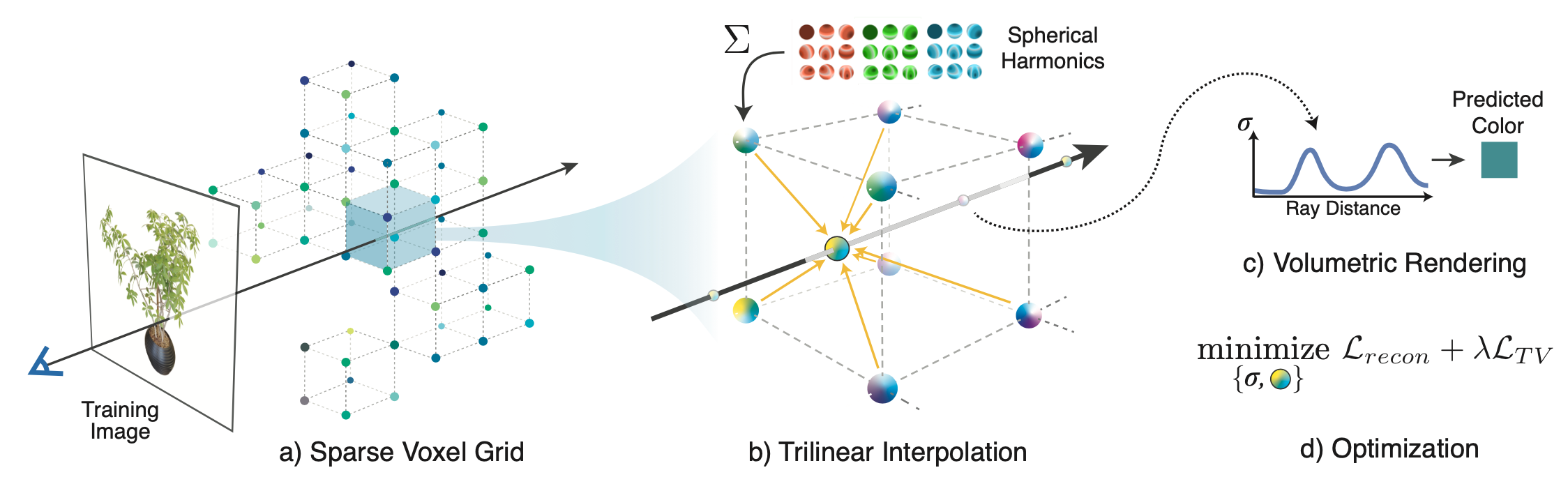

This work proposes a view-dependent sparse voxel model, Plenoxel (plenoptic volume element), that can optimize to the same fidelity as Neural Radiance Fields (NeRFs) without any neural networks. Our typical optimization time is 11 minutes on a single GPU, a speedup of two orders of magnitude compared to NeRF.
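A sparse voxel model without a neural network can be pictured as a table of values at grid vertices that is interpolated at query points. The sketch below is an illustration only and is not taken from the svox2 code: it assumes a Python dict as the sparse storage, a single scalar (for example density) per vertex, and trilinear interpolation between the eight surrounding vertices. The name `trilinear` is chosen for this example.

```python
import math


def trilinear(grid, point, default=0.0):
    """Interpolate a scalar stored at integer grid vertices.

    grid:  dict mapping (i, j, k) vertex indices to values; vertices that
           are missing from the dict are treated as empty space (default).
    point: (x, y, z) in grid coordinates.
    """
    base = [math.floor(c) for c in point]
    frac = [c - b for c, b in zip(point, base)]
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Weight of each corner is the product of per-axis weights.
                w = ((frac[0] if dx else 1.0 - frac[0])
                     * (frac[1] if dy else 1.0 - frac[1])
                     * (frac[2] if dz else 1.0 - frac[2]))
                vertex = (base[0] + dx, base[1] + dy, base[2] + dz)
                value += w * grid.get(vertex, default)
    return value


# A single stored vertex; everything else is empty.
sparse = {(0, 0, 0): 8.0}
at_vertex = trilinear(sparse, (0.0, 0.0, 0.0))
in_between = trilinear(sparse, (0.5, 0.5, 0.5))
```

Since the interpolated value is a simple weighted sum of the stored entries, those entries can be optimized directly against the training images, which is what removes the need for a network in this kind of model.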

Source https://github.com/sxyu/svox2

Also https://github.com/naruya/VaxNeRF https://avishek.net/2022/12/05/pytorch-guide-plenoxels-nerf-part-2.html

https://www.youtube.com/watch?v=nRCOsBHt97E

for Efficient Neural Field Rendering on Mobile Architectures

(https://arxiv.org/abs/2212.08057v1) Recent efforts in Neural Rendering Fields (NeRF) have shown impressive results on novel view synthesis by utilizing implicit neural representation to represent 3D scenes.